library(tidyverse)27 Logistic regression

27.1 Finding wolf spiders on the beach

A team of Japanese researchers were investigating what would cause the burrowing wolf spider Lycosa ishikariana to be found on a beach. They hypothesized that it had to do with the size of the grains of sand on the beach. They went to 28 beaches in Japan, measured the average sand grain size (in millimetres), and observed the presence or absence of this particular spider on each beach. The data are in link.

Why would logistic regression be better than regular regression in this case?

Read in the data and check that you have something sensible. (Look at the data file first: the columns are aligned.)

Make a boxplot of sand grain size according to whether the spider is present or absent. Does this suggest that sand grain size has something to do with presence or absence of the spider?

Fit a logistic regression predicting the presence or absence of spiders from the grain size, and obtain its

summary. Note that you will need to do something with the response variable first.Is there a significant relationship between grain size and presence or absence of spiders at the \(\alpha=0.10\) level? Explain briefly.

Obtain predicted probabilities of spider presence for a representative collection of grain sizes. I only want predicted probabilities, not any kind of intervals.

Given your previous work in this question, does the trend you see in your predicted probabilities surprise you? Explain briefly.

27.2 Killing aphids

An experiment was designed to examine how well the insecticide rotenone kills aphids that feed on the chrysanthemum plant called Macrosiphoniella sanborni. The explanatory variable is the log concentration (in milligrams per litre) of the insecticide. At each of the five different concentrations, approximately 50 insects were exposed. The number of insects exposed at each concentration, and the number killed, are shown below.

Log-Concentration Insects exposed Number killed

0.96 50 6

1.33 48 16

1.63 46 24

2.04 49 42

2.32 50 44

Get these data into R. You can do this by copying the data into a file and reading that into R (creating a data frame), or you can enter the data manually into R using

c, since there are not many values. In the latter case, you can create a data frame or not, as you wish. Demonstrate that you have the right data in R.* Looking at the data, would you expect there to be a significant effect of log-concentration? Explain briefly.

Run a suitable logistic regression, and obtain a summary of the results. (There was a part (c) here before, but I took it out.)

Does your analysis support your answer to (here)? Explain briefly.

Obtain predicted probabilities of an insect’s being killed at each of the log-concentrations in the data set.

Obtain a plot of the predicted probabilities of an insect’s being killed, as it depends on the log-concentration.

People in this kind of work are often interested in the “median lethal dose”. In this case, that would be the log-concentration of the insecticide that kills half the insects. Based on your predictions, roughly what do you think the median lethal dose is?

27.3 The effects of Substance A

In a dose-response experiment, animals (or cell cultures or human subjects) are exposed to some toxic substance, and we observe how many of them show some sort of response. In this experiment, a mysterious Substance A is exposed at various doses to 100 cells at each dose, and the number of cells at each dose that suffer damage is recorded. The doses were 10, 20, … 70 (mg), and the number of damaged cells out of 100 were respectively 10, 28, 53, 77, 91, 98, 99.

Find a way to get these data into R, and show that you have managed to do so successfully.

Would you expect to see a significant effect of dose for these data? Explain briefly.

Fit a logistic regression modelling the probability of a cell being damaged as it depends on dose. Display the results. (Comment on them is coming later.)

Does your output indicate that the probability of damage really does increase with dose? (There are two things here: is there really an effect, and which way does it go?)

Obtain predicted damage probabilities for some representative doses.

Draw a graph of the predicted probabilities, and to that add the observed proportions of damage at each dose. Hints: you will have to calculate the observed proportions first. See here, near the bottom, to find out how to add data to one of these graphs. The

geom_pointline is the one you need.

Looking at the predicted probabilities, would you say that the model fits well? Explain briefly.

27.4 What makes an animal get infected?

Some animals got infected with a parasite. We are interested in whether the likelihood of infection depends on any of the age, weight and sex of the animals. The data are at link. The values are separated by tabs.

Read in the data and take a look at the first few lines. Is this one animal per line, or are several animals with the same age, weight and sex (and infection status) combined onto one line? How can you tell?

* Make suitable plots or summaries of

infectedagainst each of the other variables. (You’ll have to think aboutsex, um, you’ll have to think about thesexvariable, because it too is categorical.) Anything sensible is OK here. You might like to think back to what we did in Question here for inspiration. (You can also investigatetable, which does cross-tabulations.)Which, if any, of your explanatory variables appear to be related to

infected? Explain briefly.Fit a logistic regression predicting

infectedfrom the other three variables. Display thesummary.* Which variables, if any, would you consider removing from the model? Explain briefly.

Are the conclusions you drew in (here) and (here) consistent, or not? Explain briefly.

* The first and third quartiles of

ageare 26 and 130; the first and third quartiles ofweightare 9 and 16. Obtain predicted probabilities for all combinations of these andsex. (You’ll need to start by making a new data frame, usingdatagridto get all the combinations.)

27.5 The brain of a cat

A large number (315) of psychology students were asked to imagine that they were serving on a university ethics committee hearing a complaint against animal research being done by a member of the faculty. The students were told that the surgery consisted of implanting a device called a cannula in each cat’s brain, through which chemicals were introduced into the brain and the cats were then given psychological tests. At the end of the study, the cats’ brains were subjected to histological analysis. The complaint asked that the researcher’s authorization to carry out the study should be withdrawn, and the cats should be handed over to the animal rights group that filed the complaint. It was suggested that the research could just as well be done with computer simulations.

All of the psychology students in the survey were told all of this. In addition, they read a statement by the researcher that no animal felt much pain at any time, and that computer simulation was not an adequate substitute for animal research. Each student was also given one of the following scenarios that explained the benefit of the research:

“cosmetic”: testing the toxicity of chemicals to be used in new lines of hair care products.

“theory”: evaluating two competing theories about the function of a particular nucleus in the brain.

“meat”: testing a synthetic growth hormone said to potentially increase meat production.

“veterinary”: attempting to find a cure for a brain disease that is killing domesticated cats and endangered species of wild cats.

“medical”: evaluating a potential cure for a debilitating disease that afflicts many young adult humans.

Finally, each student completed two questionnaires: one that would assess their “relativism”: whether or not they believe in universal moral principles (low score) or whether they believed that the appropriate action depends on the person and situation (high score). The second questionnaire assessed “idealism”: a high score reflects a belief that ethical behaviour will always lead to good consequences (and thus that if a behaviour leads to any bad consequences at all, it is unethical).1

After being exposed to all of that, each student stated their decision about whether the research should continue or stop.

I should perhaps stress at this point that no actual cats were harmed in the collection of these data (which can be found as a .csv file at link). The variables in the data set are these:

decision: whether the research should continue or stop (response)idealism: score on idealism questionnairerelativism: score on relativism questionnairegenderof studentscenarioof research benefits that the student read.

A more detailed discussion^(“[If you can believe it.] of this study is at link.

Read in the data and check by looking at the structure of your data frame that you have something sensible. Do not call your data frame

decision, since that’s the name of one of the variables in it.Fit a logistic regression predicting

decisionfromgender. Is there an effect of gender?To investigate the effect (or non-effect) of

gender, create a contingency table by feedingdecisionandgenderintotable. What does this tell you?* Is your slope for

genderin the previous logistic regression positive or negative? Is it applying to males or to females? Looking at the conclusions from your contingency table, what probability does that mean your logistic regression is actually modelling?Add the two variables

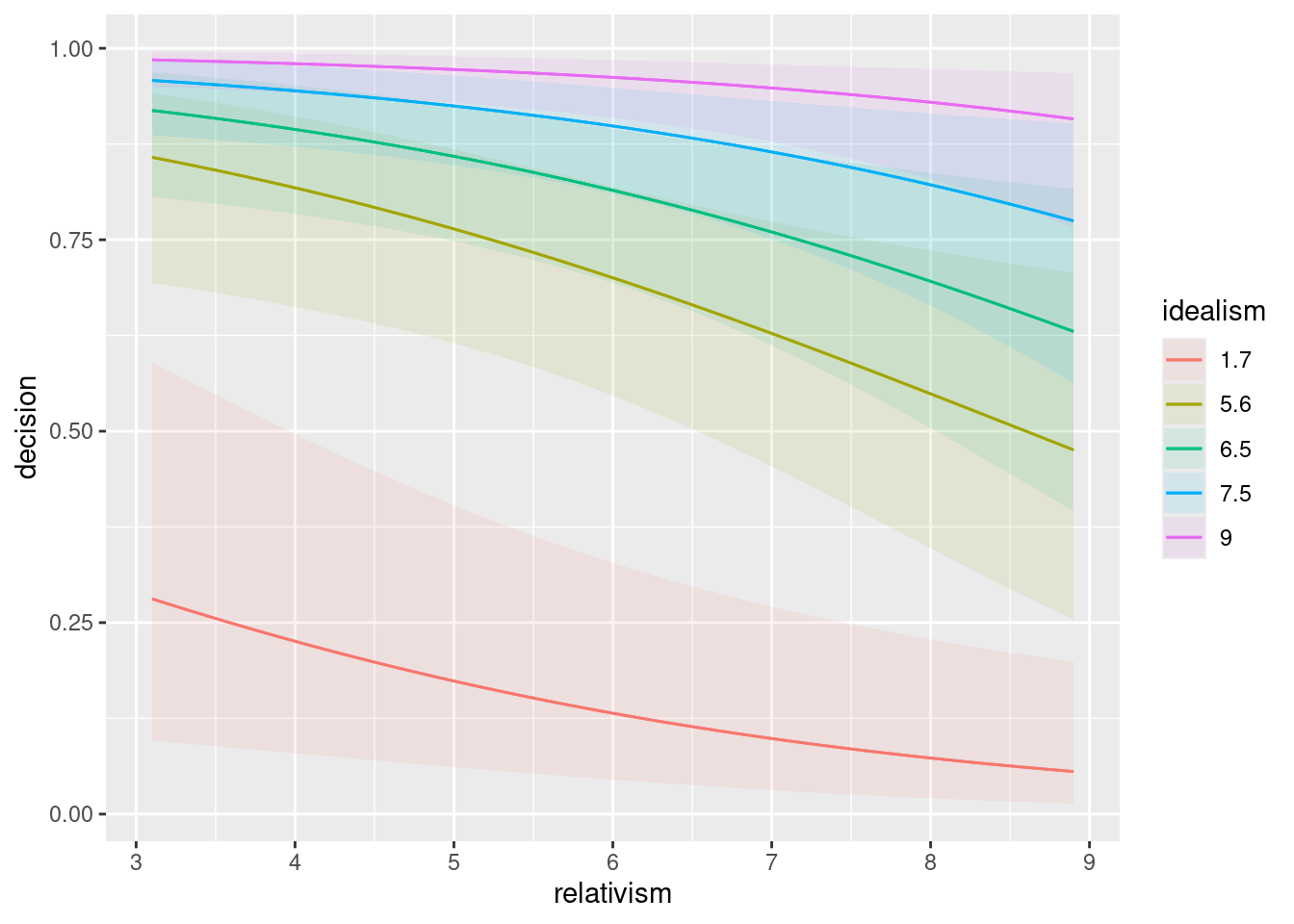

idealismandrelativismto your logistic regression. Do either or both of them add significantly to your model? Explain briefly.Add the variable

scenarioto your model. That is, fit a new model with that variable plus all the others.Use

anovato compare the models with and withoutscenario. You’ll have to add atest="Chisq"to youranova, to make sure that the test gets done. Doesscenariomake a difference or not, at \(\alpha=0.10\)? Explain briefly. (The reason we have to do it this way is thatscenariois a factor with five levels, so it has four slope coefficients. To test them all at once, which is what we need to make an overall test forscenario, this is the way it has to be done.)Look at the

summaryof your model that containedscenario. Bearing in mind that the slope coefficient forscenariocosmeticis zero (since this is the first scenario alphabetically), which scenarios have the most positive and most negative slope coefficients? What does that tell you about those scenarios’ effects?Describe the effects that having (i) a higher idealism score and (ii) a higher relativity score have on a person’s probability of saying that the research should stop. Do each of these increase or decrease that probability? Explain briefly.

27.6 How not to get heart disease

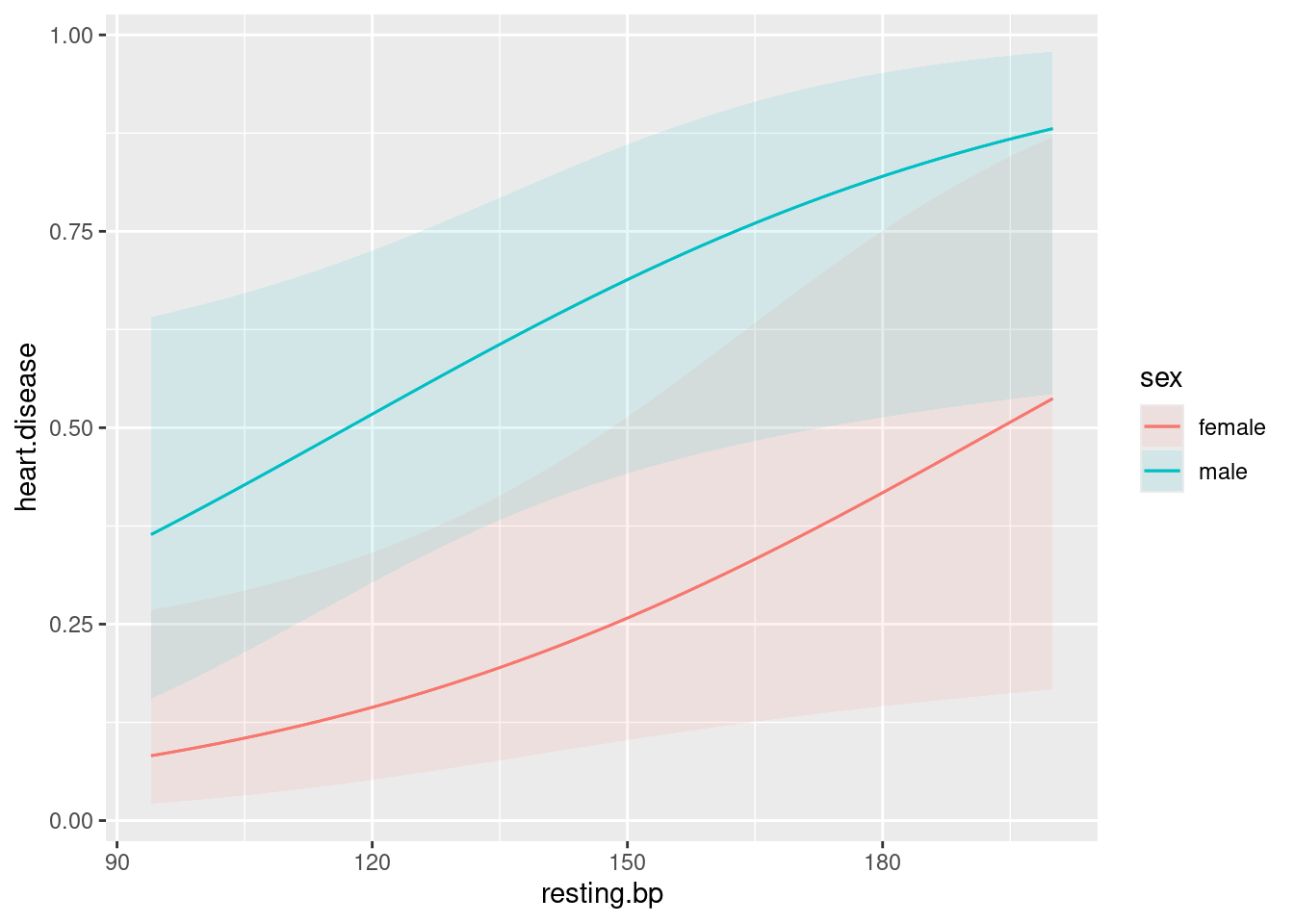

What is associated with heart disease? In a study, a large number of variables were measured, as follows:

age(years)sexmale or femalepain.typeChest pain type (4 values: typical angina, atypical angina, non-anginal pain, asymptomatic)resting.bpResting blood pressure, on admission to hospitalserum.cholSerum cholesterolhigh.blood.sugar: greater than 120, yes or noelectroresting electrocardiographic results (normal, having ST-T, hypertrophy)max.hrMaximum heart rateanginaExercise induced angina (yes or no)oldpeakST depression induced by exercise relative to rest. See link.slopeSlope of peak exercise ST segment. Sloping up, flat or sloping downcolorednumber of major vessels (0–3) coloured by fluoroscopythalnormal, fixed defect, reversible defectheart.diseaseyes, no (response)

I don’t know what most of those are, but we will not let that stand in our way. Our aim is to find out what variables are associated with heart disease, and what values of those variables give high probabilities of heart disease being present. The data are in link.

Read in the data. Display the first few lines and convince yourself that those values are reasonable.

In a logistic regression, what probability will be predicted here? Explain briefly but convincingly. (Is each line of the data file one observation or a summary of several?)

* Fit a logistic regression predicting heart disease from everything else (if you have a column called

XorX1, ignore that), and display the results.Quite a lot of our explanatory variables are factors. To assess whether the factor as a whole should stay or can be removed, looking at the slopes won’t help us very much (since they tell us whether the other levels of the factor differ from the baseline, which may not be a sensible comparison to make). To assess which variables are candidates to be removed, factors included (properly), we can use

drop1. Feeddrop1a fitted model and the wordstest="Chisq"(take care of the capitalization!) and you’ll get a list of P-values. Which variable is the one that you would remove first? Explain briefly.I’m not going to make you do the whole backward elimination (I’m going to have you use

stepfor that later), but do one step: that is, fit a model removing the variable you think should be removed, usingupdate, and then rundrop1again to see which variable will be removed next.Use

stepto do a backward elimination to find which variables have an effect on heart disease. Display your final model (which you can do by saving the output fromstepin a variable, and asking for the summary of that. Instep, you’ll need to specify a starting model (the one from part (here)), the direction of elimination, and the test to display the P-value for (the same one as you used indrop1). (Note: the actual decision to keep or drop explanatory variables is based on AIC rather than the P-value, with the result thatstepwill sometimes keep variables you would have dropped, with P-values around 0.10.)Display the summary of the model that came out of

step.We are going to make a large number of predictions. Create and save a data frame that contains predictions for all combinations of representative values for all the variables in the model that came out of

step. By “representative” I mean all the values for a categorical variable, and the five-number summary for a numeric variable. (Note that you will get a lot of predictions.)Find the largest predicted probability (which is the predicted probability of heart disease) and display all the variables that it was a prediction for.

Compare the

summaryof the final model fromstepwith your highest predicted heart disease probability and the values of the other variables that make it up. Are they consistent?

27.7 Successful breastfeeding

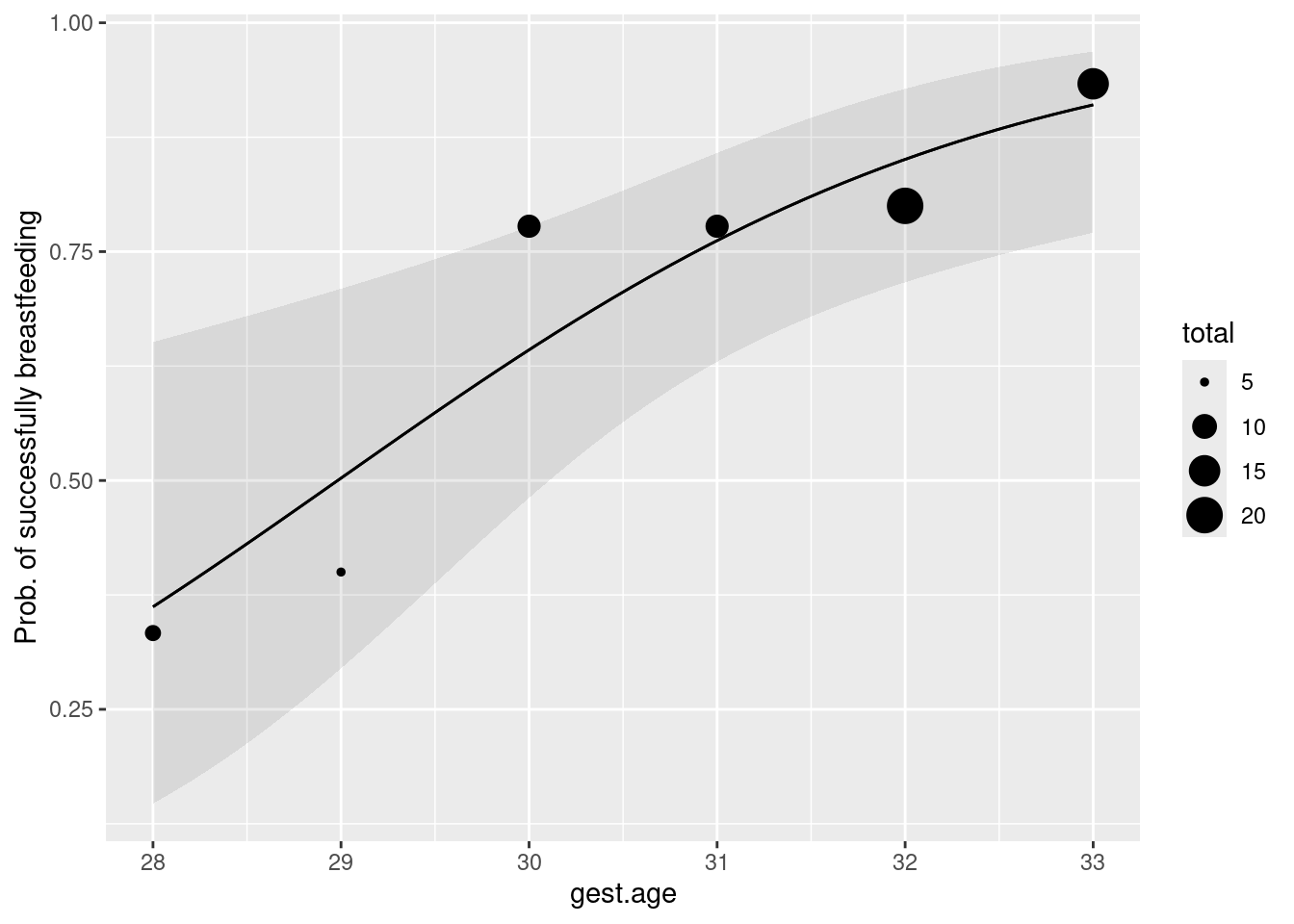

A regular pregnancy lasts 40 weeks, and a baby that is born at or before 33 weeks is called “premature”. The number of weeks at which a baby is born is called its “gestational age”. Premature babies are usually smaller than normal and may require special care. It is a good sign if, when the mother and baby leave the hospital to go home, the baby is successfully breastfeeding.

The data in link are from a study of 64 premature infants. There are three columns: the gestational age (a whole number of weeks), the number of babies of that gestational age that were successfully breastfeeding when they left the hospital, and the number that were not. (There were multiple babies of the same gestational age, so the 64 babies are summarized in 6 rows of data.)

Read the data into R and display the data frame.

Verify that there were indeed 64 infants, by having R do a suitable calculation on your data frame that gives the right answer for the right reason.

Do you think, looking at the data, that there is a relationship between gestational age and whether or not the baby was successfully breastfeeding when it left the hospital? Explain briefly.

Why is logistic regression a sensible technique to use here? Explain briefly.

Fit a logistic regression to predict the probability that an infant will be breastfeeding from its gestational age. Show the output from your logistic regression.

Does the significance or non-significance of the slope of

gest.agesurprise you? Explain briefly.Is your slope (in the

Estimatecolumn) positive or negative? What does that mean, in terms of gestational ages and breastfeeding? Explain briefly.Obtain the predicted probabilities that an infant will successfully breastfeed for a representative collection of gestational ages.

Make a graph of the predicted probability that an infant will successfully breastfeed as it depends on gestational age.

27.8 Making it over the mountains

In 1846, the Donner party (Donner and Reed families) left Springfield, Illinois for California in covered wagons. After reaching Fort Bridger, Wyoming, the leaders decided to find a new route to Sacramento. They became stranded in the eastern Sierra Nevada mountains at a place now called Donner Pass, when the region was hit by heavy snows in late October. By the time the survivors were rescued on April 21, 1847, 40 out of 87 had died.

After the rescue, the age and gender of each person in the party was recorded, along with whether they survived or not. The data are in link.

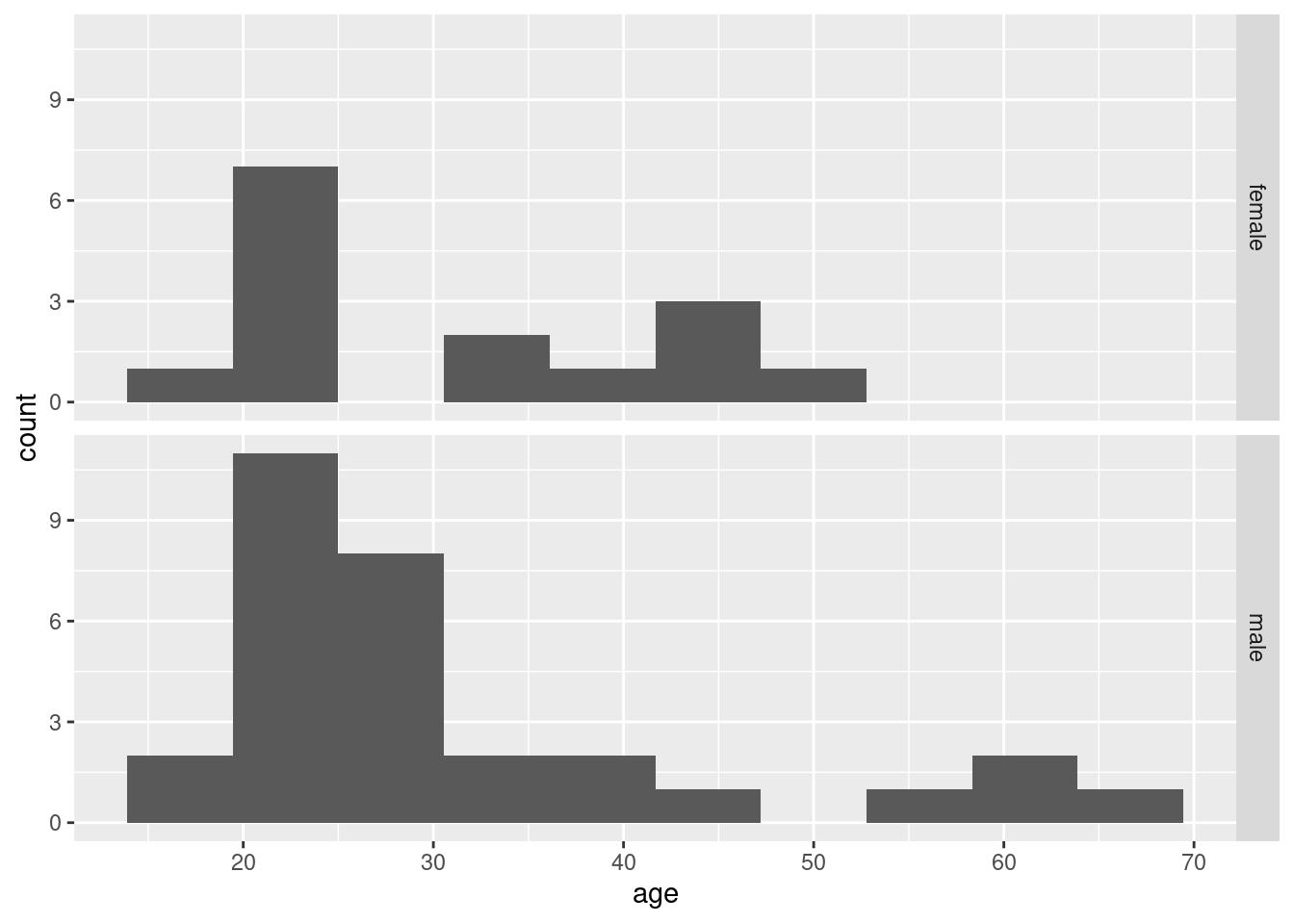

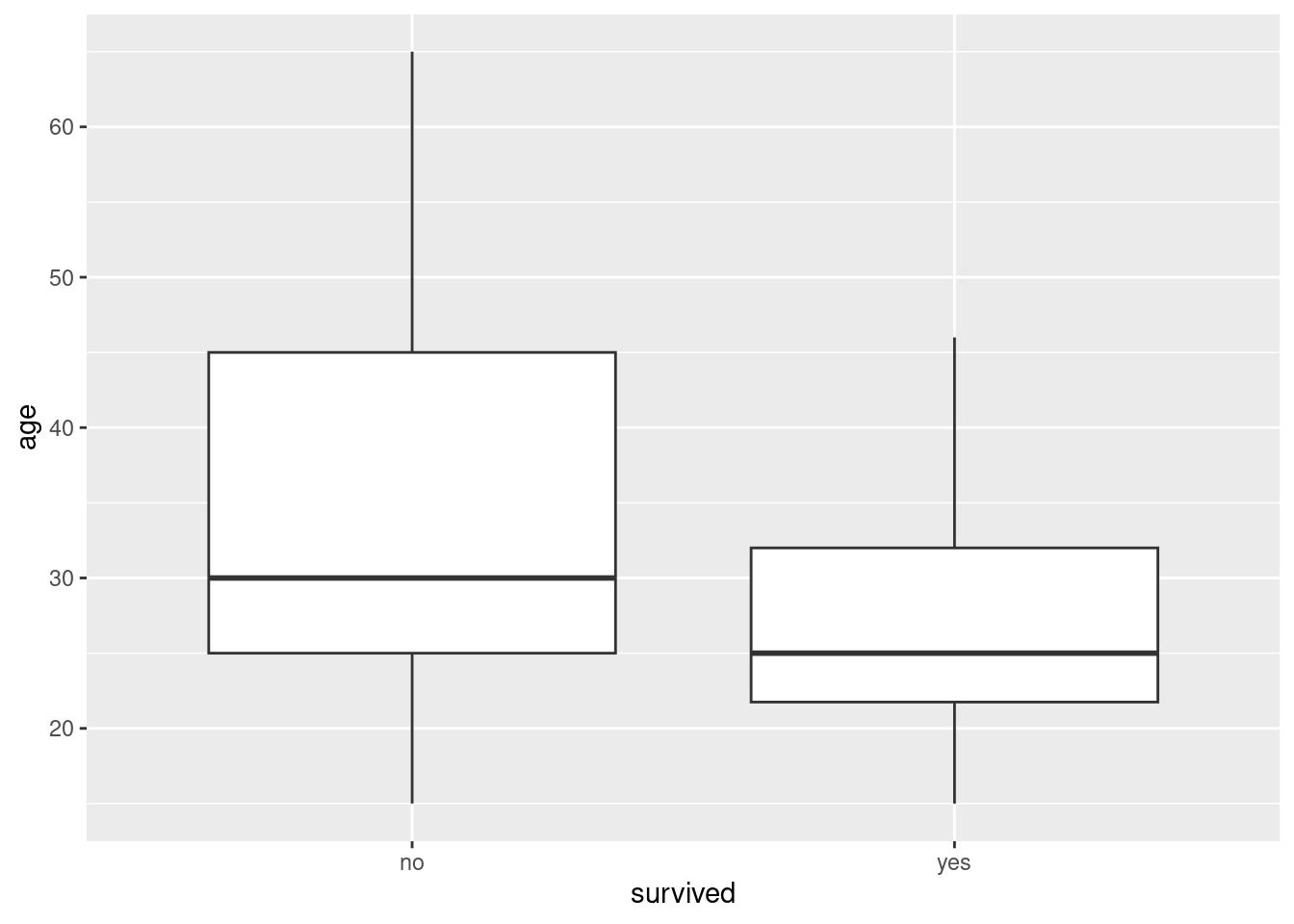

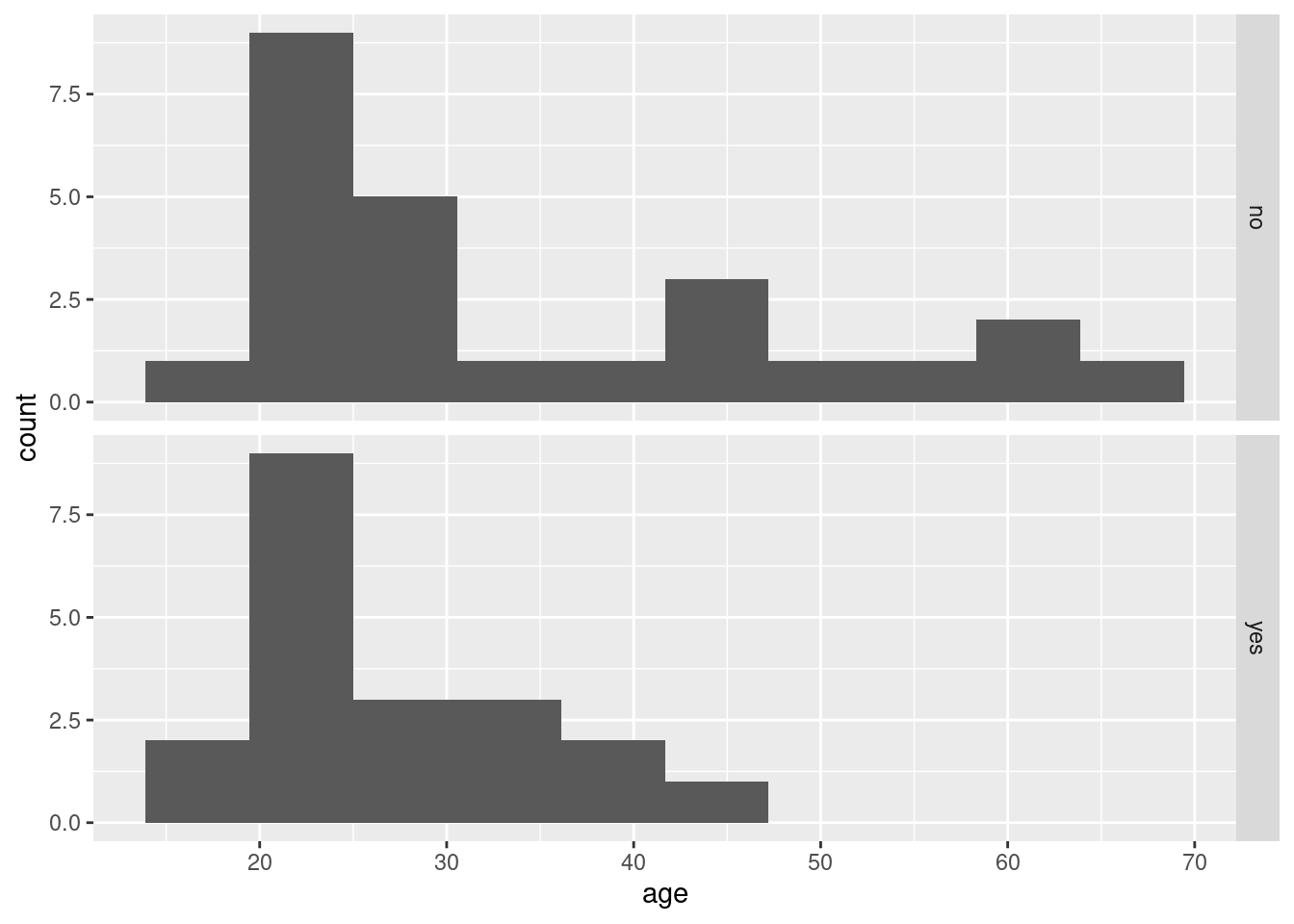

Read in the data and display its structure. Does that agree with the description in the previous paragraph?

Make graphical or numerical summaries for each pair of variables. That is, you should make a graph or numerical summary for each of

agevs.gender,agevs.

survivedandgendervs.survived. In choosing the kind of graph or summary that you will use, bear in mind thatsurvivedandgenderare factors with two levels.For each of the three graphs or summaries in the previous question, what do they tell you about the relationship between the pair of variables concerned? Explain briefly.

Fit a logistic regression predicting survival from age and gender. Display the summary.

Do both explanatory variables have an impact on survival? Does that seem to be consistent with your numerical or graphical summaries? Explain briefly.

Are the men typically older, younger or about the same age as the women? Considering this, explain carefully what the negative

gendermaleslope in your logistic regression means.Obtain predicted probabilities of survival for each combination of some representative ages and of the two genders in this dataset.

Do your predictions support your conclusions from earlier about the effects of age and gender? Explain briefly.

27.9 Who needs the most intensive care?

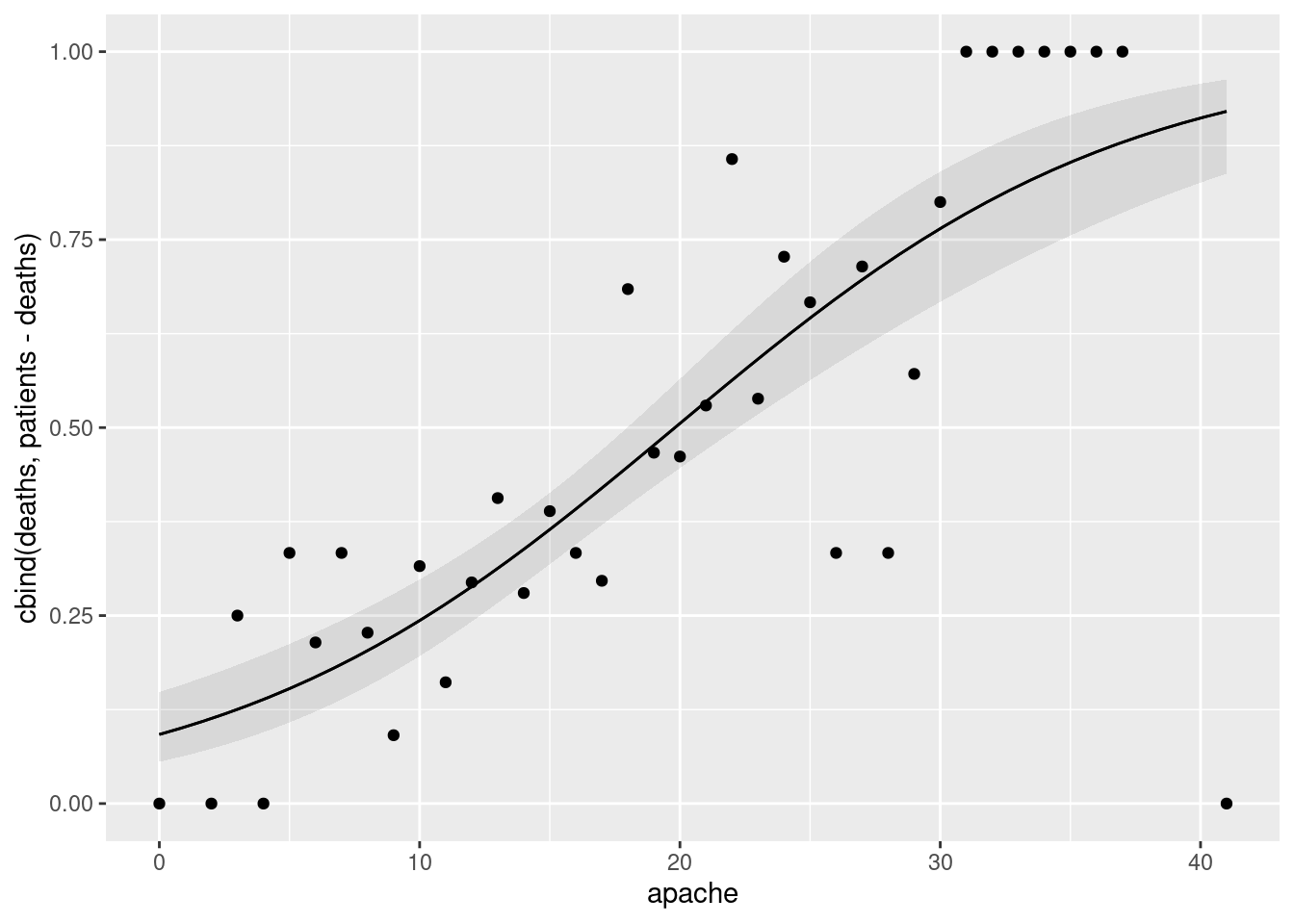

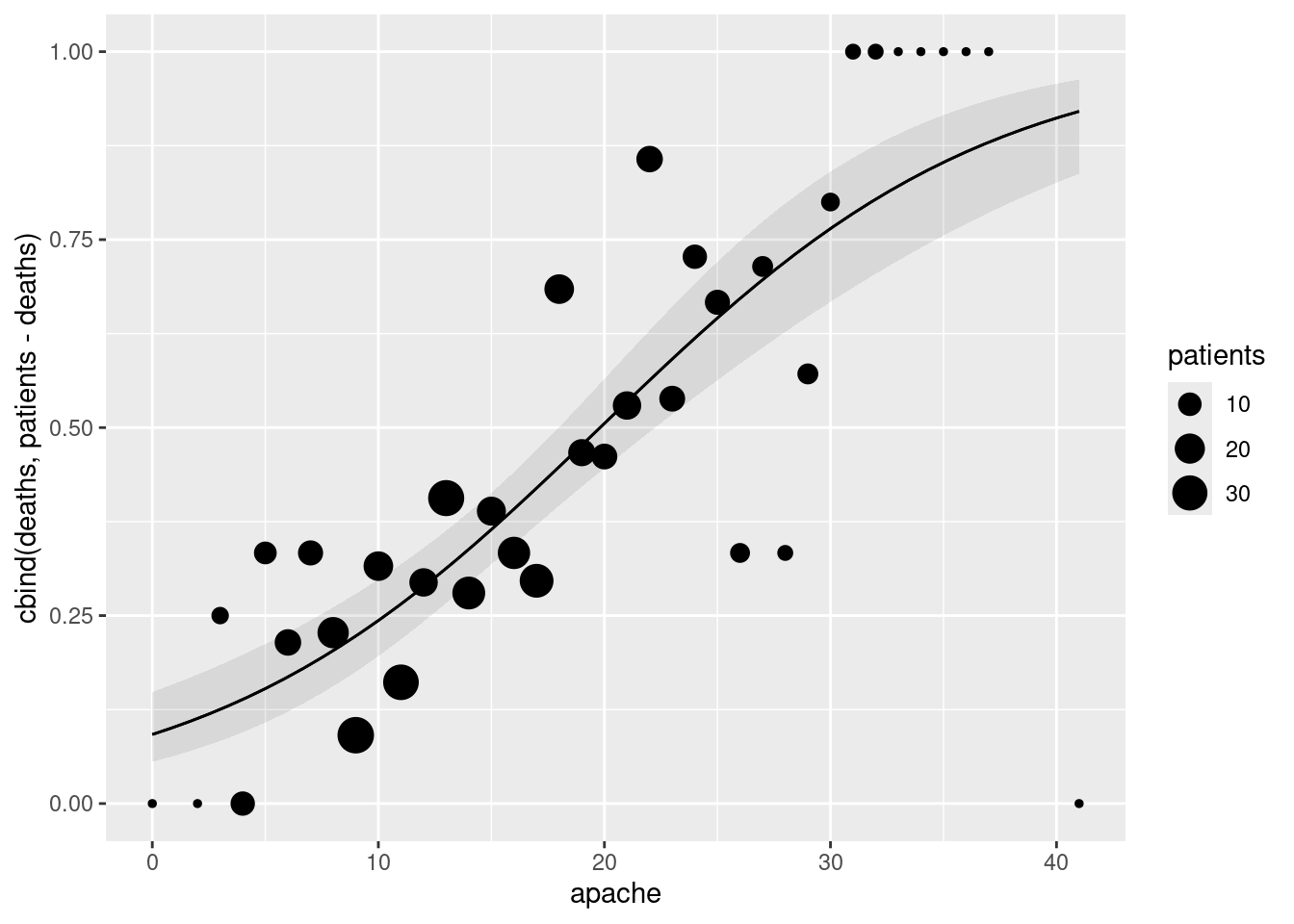

The “APACHE II” is a scale for assessing patients who arrive in the intensive care unit (ICU) of a hospital. These are seriously ill patients who may die despite the ICU’s best attempts. APACHE stands for “Acute Physiology And Chronic Health Evaluation”.2 The scale score is calculated from several physiological measurements such as body temperature, heart rate and the Glasgow coma scale, as well as the patient’s age. The final result is a score between 0 and 71, with a higher score indicating more severe health issues. Is it true that a patient with a higher APACHE II score has a higher probability of dying?

Data from one hospital are in link. The columns are: the APACHE II score, the total number of patients who had that score, and the number of patients with that score who died.

Read in and display the data (however much of it displays). Why are you convinced that have the right thing?

Does each row of the data frame relate to one patient or sometimes to more than one? Explain briefly.

Explain why this is the kind of situation where you need a two-column response.

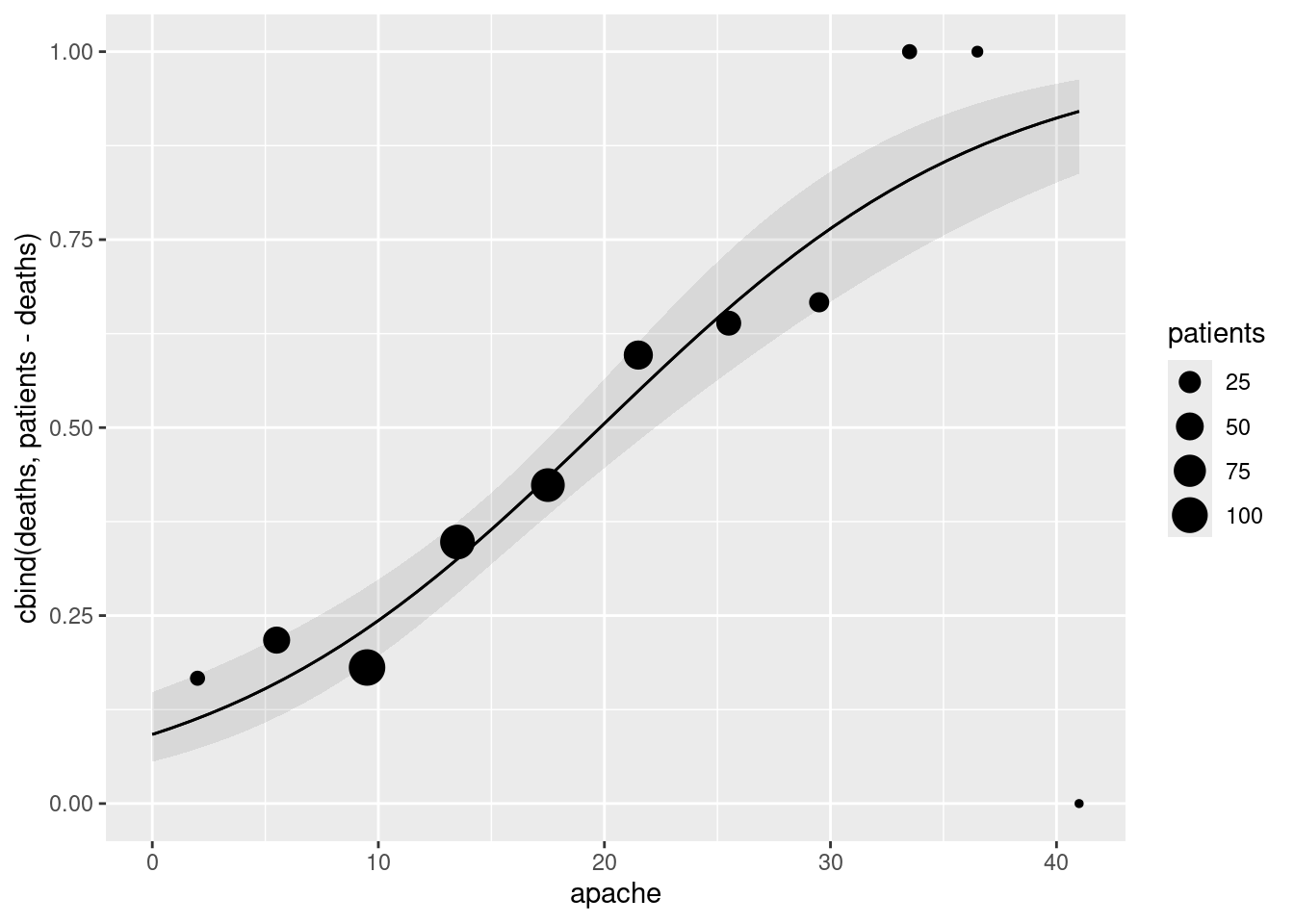

Fit a logistic regression to estimate the probability of death from the

apachescore, and display the results.Is there a significant effect of

apachescore on the probability of survival? Explain briefly.Is the effect of a larger

apachescore to increase or to decrease the probability of death? Explain briefly.Obtain the predicted probability of death for a representative collection of the

apachescores that were in the data set. Do your predictions behave as you would expect (from your earlier work)?Make a plot of predicted death probability against

apachescore (joined by lines) with, also on the plot, the observed proportion of deaths within eachapachescore, plotted againstapachescore. Does there seem to be good agreement between observation and prediction? Hint: calculate what you need to from the original dataframe first, save it, then make a plot of the predictions, and then to the plot add the appropriate thing from the dataframe you just saved.

27.10 Go away and don’t come back!

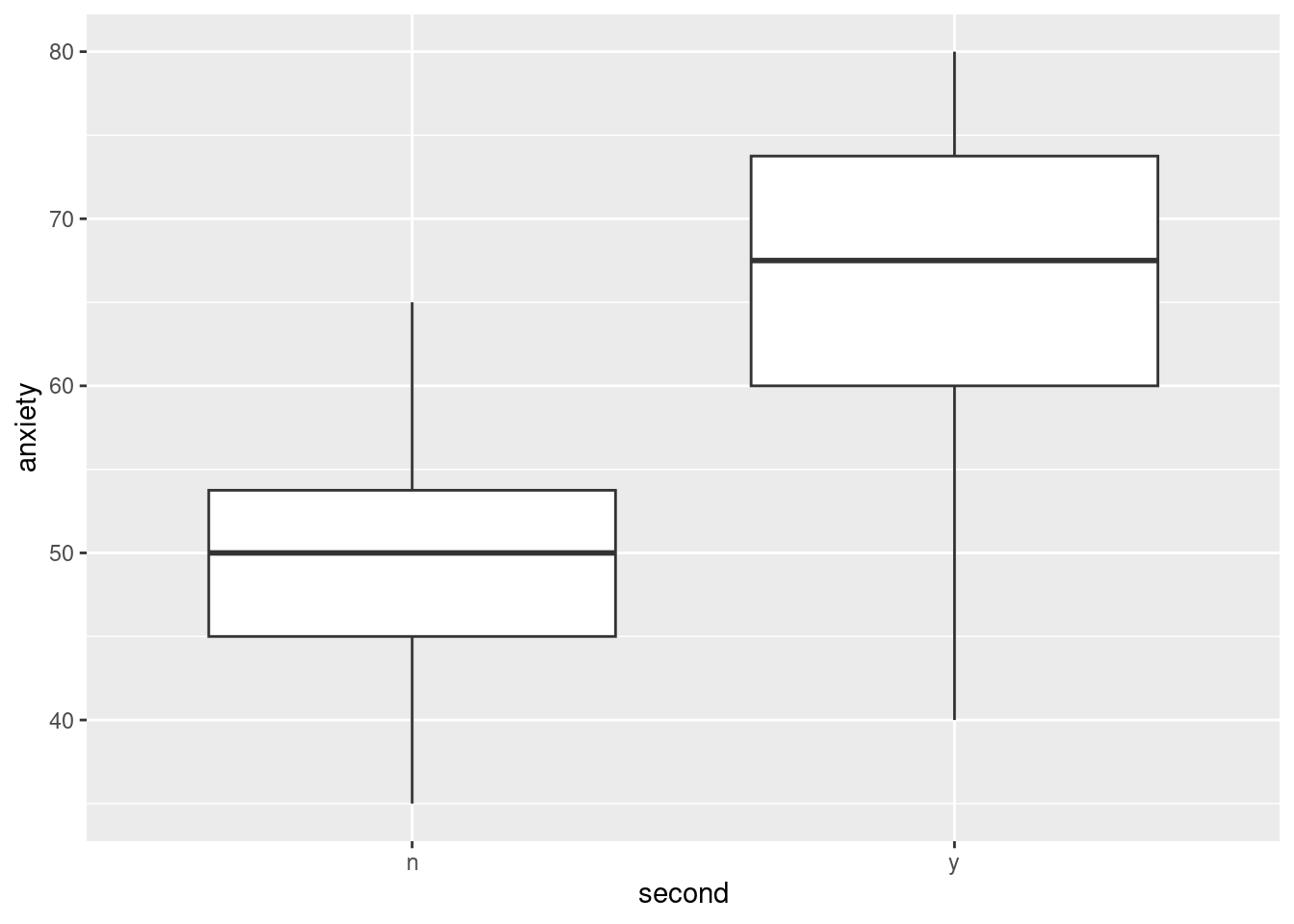

When a person has a heart attack and survives it, the major concern of health professionals is to prevent the person having a second heart attack. Two factors that are believed to be important are anger and anxiety; if a heart attack survivor tends to be angry or anxious, they are believed to put themselves at increased risk of a second heart attack.

Twenty heart attack survivors took part in a study. Each one was given a test designed to assess their anxiety (a higher score on the test indicates higher anxiety), and some of the survivors took an anger management course. The data are in link; y and n denote “yes” and “no” respectively. The columns denote (i) whether or not the person had a second heart attack, (ii) whether or not the person took the anger management class, (iii) the anxiety score.

Read in and display the data.

* Fit a logistic regression predicting whether or not a heart attack survivor has a second heart attack, as it depends on anxiety score and whether or not the person took the anger management class. Display the results.

In the previous part, how can you tell that you were predicting the probability of having a second heart attack (as opposed to the probability of not having one)?

* For the two possible values

yandnofangerand the anxiety scores 40, 50 and 60, make a data frame containing all six combinations, and use it to obtain predicted probabilities of a second heart attack. Display your predicted probabilities side by side with what they are predictions for.Use your predictions from the previous part to describe the effect of changes in

anxietyandangeron the probability of a second heart attack.Are the effects you described in the previous part consistent with the

summaryoutput fromglmthat you obtained in (here)? Explain briefly how they are, or are not. (You need an explanation for each ofanxietyandanger, and you will probably get confused if you look at the P-values, so don’t.)

My solutions follow:

27.11 Finding wolf spiders on the beach

A team of Japanese researchers were investigating what would cause the burrowing wolf spider Lycosa ishikariana to be found on a beach. They hypothesized that it had to do with the size of the grains of sand on the beach. They went to 28 beaches in Japan, measured the average sand grain size (in millimetres), and observed the presence or absence of this particular spider on each beach. The data are in link.

- Why would logistic regression be better than regular regression in this case?

Solution

Because the response variable, whether or not the spider is present, is a categorical yes/no success/failure kind of variable rather than a quantitative numerical one, and when you have this kind of response variable, this is when you want to use logistic regression.

\(\blacksquare\)

- Read in the data and check that you have something sensible. (Look at the data file first: the columns are aligned.)

Solution

The nature of the file means that you need read_table:

my_url <- "http://ritsokiguess.site/datafiles/spiders.txt"

spider <- read_table(my_url)

── Column specification ────────────────────────────────────────────────────────

cols(

Grain.size = col_double(),

Spiders = col_character()

)spiderWe have a numerical sand grain size and a categorical variable Spiders that indicates whether the spider was present or absent. As we were expecting. (The categorical variable is actually text or chr, which will matter in a minute.)

\(\blacksquare\)

- Make a boxplot of sand grain size according to whether the spider is present or absent. Does this suggest that sand grain size has something to do with presence or absence of the spider?

Solution

ggplot(spider, aes(x = Spiders, y = Grain.size)) + geom_boxplot()

The story seems to be that when spiders are present, the sand grain size tends to be larger. So we would expect to find some kind of useful relationship in the logistic regression.

Note that we have reversed the cause and effect here: in the boxplot we are asking “given that the spider is present or absent, how big are the grains of sand?”, whereas the logistic regression is asking “given the size of the grains of sand, how likely is the spider to be present?”. But if one variable has to do with the other, we would hope to see the link either way around.

\(\blacksquare\)

- Fit a logistic regression predicting the presence or absence of spiders from the grain size, and obtain its

summary. Note that you will need to do something with the response variable first.

Solution

The presence or absence of spiders is our response. This is actually text at the moment:

spiderso we need to make a factor version of it first. I’m going to live on the edge and overwrite everything:

spider <- spider %>% mutate(Spiders = factor(Spiders))

spiderSpiders is now a factor, correctly. (Sometimes a text variable gets treated as a factor, sometimes it needs to be an explicit factor. This is one of those times.) Now we go ahead and fit the model. I’m naming this as response-with-a-number, so I still have the Capital Letter. Any choice of name is good, though.

Spiders.1 <- glm(Spiders ~ Grain.size, family = "binomial", data = spider)

summary(Spiders.1)

Call:

glm(formula = Spiders ~ Grain.size, family = "binomial", data = spider)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -1.648 1.354 -1.217 0.2237

Grain.size 5.122 3.006 1.704 0.0884 .

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 35.165 on 27 degrees of freedom

Residual deviance: 30.632 on 26 degrees of freedom

AIC: 34.632

Number of Fisher Scoring iterations: 5\(\blacksquare\)

- Is there a significant relationship between grain size and presence or absence of spiders at the \(\alpha=0.10\) level? Explain briefly.

Solution

The P-value on the Grain.size line is just less than 0.10 (it is 0.088), so there is just a significant relationship. It isn’t a very strong significance, though. This might be because we don’t have that much data: even though we have 28 observations, which, on the face of it, is not a very small sample size, each observation doesn’t tell us much: only whether the spider was found on that beach or not. Typical sample sizes in logistic regression are in the hundreds — the same as for opinion polls, because you’re dealing with the same kind of data. The weak significance here lends some kind of weak support to the researchers’ hypothesis, but I’m sure they were hoping for something better.

\(\blacksquare\)

- Obtain predicted probabilities of spider presence for a representative collection of grain sizes. I only want predicted probabilities, not any kind of intervals.

Solution

Make a data frame of values to predict from directly, using tribble or for that matter datagrid. For some reason, I chose these four values:

new <- tribble(

~Grain.size,

0.2,

0.5,

0.8,

1.1

)

newand then

cbind(predictions(Spiders.1, newdata = new))One of the above is all I need. I prefer the first one, since that way we don’t even have to decide what th e representative values are.

Extra:

Note that the probabilities don’t go up linearly. (If they did, they would soon get bigger than 1!). It’s actually the log-odds that go up linearly.

To verify this, you can add a type to the predictions:

cbind(predictions(Spiders.1, newdata = new, type = "link"))This one, as you see shortly, makes more sense with equally-spaced grain sizes.

The meaning of that slope coefficient in the summary, which is about 5, is that if you increase grain size by 1, you increase the log-odds by 5. In the table above, we increased the grain size by 0.3 each time, so we would expect to increase the log-odds by \((0.3)(5)=1.5\), which is (to this accuracy) what happened.

Log-odds are hard to interpret. Odds are a bit easier, and to get them, we have to exp the log-odds:

cbind(predictions(Spiders.1, newdata = new, type = "link")) %>%

mutate(exp_pred = exp(estimate))Thus, with each step of 0.3 in grain size, we multiply the odds of finding a spider by about

exp(1.5)[1] 4.481689or about 4.5 times. If you’re a gambler, this might give you a feel for how large the effect of grain size is. Or, of course, you can just look at the probabilities.

\(\blacksquare\)

- Given your previous work in this question, does the trend you see in your predicted probabilities surprise you? Explain briefly.

Solution

My predicted probabilities go up as grain size goes up. This fails to surprise me for a couple of reasons: first, in my boxplots, I saw that grain size tended to be larger when spiders were present, and second, in my logistic regression summary, the slope was positive, so likewise spiders should be more likely as grain size goes up. Observing just one of these things is enough, though of course it’s nice if you can spot both.

\(\blacksquare\)

27.12 Killing aphids

An experiment was designed to examine how well the insecticide rotenone kills aphids that feed on the chrysanthemum plant called Macrosiphoniella sanborni. The explanatory variable is the log concentration (in milligrams per litre) of the insecticide. At each of the five different concentrations, approximately 50 insects were exposed. The number of insects exposed at each concentration, and the number killed, are shown below.

Log-Concentration Insects exposed Number killed

0.96 50 6

1.33 48 16

1.63 46 24

2.04 49 42

2.32 50 44

- Get these data into R. You can do this by copying the data into a file and reading that into R (creating a data frame), or you can enter the data manually into R using

c, since there are not many values. In the latter case, you can create a data frame or not, as you wish. Demonstrate that you have the right data in R.

Solution

There are a couple of ways. My current favourite is the tidyverse-approved tribble method. A tribble is a “transposed tibble”, in which you copy and paste the data, inserting column headings and commas in the right places. The columns don’t have to line up, since it’s the commas that determine where one value ends and the next one begins:

dead_bugs <- tribble(

~log_conc, ~exposed, ~killed,

0.96, 50, 6,

1.33, 48, 16,

1.63, 46, 24,

2.04, 49, 42,

2.32, 50, 44

)

dead_bugsNote that the last data value has no comma after it, but instead has the closing bracket of tribble.

You can have extra spaces if you wish. They will just be ignored. If you are clever in R Studio, you can insert a column of commas all at once (using “multiple cursors”). I used to do it like this. I make vectors of each column using c and then glue the columns together into a data frame:

log_conc <- c(0.96, 1.33, 1.63, 2.04, 2.32)

exposed <- c(50, 48, 46, 49, 50)

killed <- c(6, 16, 24, 42, 44)

dead_bugs2 <- tibble(log_conc, exposed, killed)

dead_bugs2The values are correct — I checked them.

Now you see why tribble stands for “transposed tibble”: if you want to construct a data frame by hand, you have to work with columns and then glue them together, but tribble allows you to work “row-wise” with the data as you would lay it out on the page.

The other obvious way to read the data values without typing them is to copy them into a file and read that. The values as laid out are aligned in columns. They might be separated by tabs, but they are not. (It’s hard to tell without investigating, though a tab is by default eight spaces and these values look farther apart than that.) I copied them into a file exposed.txt in my current folder (or use file.choose):

bugs2 <- read_table("exposed.txt")Warning: Missing column names filled in: 'X6' [6]

── Column specification ────────────────────────────────────────────────────────

cols(

`Log-Concentration` = col_double(),

Insects = col_double(),

exposed = col_double(),

Number = col_logical(),

killed = col_character(),

X6 = col_character()

)Warning: 5 parsing failures.

row col expected actual file

1 -- 6 columns 4 columns 'exposed.txt'

2 -- 6 columns 4 columns 'exposed.txt'

3 -- 6 columns 4 columns 'exposed.txt'

4 -- 6 columns 4 columns 'exposed.txt'

5 -- 6 columns 4 columns 'exposed.txt'bugs2This didn’t quite work: the last column Number killed got split into two, with the actual number killed landing up in Number and the column killed being empty. If you look at the data file, the data values for Number killed are actually aligned with the word Number, which is why it came out this way. Also, you’ll note, the column names have those “backticks” around them, because they contain illegal characters like a minus sign and spaces. Perhaps a good way to pre-empt3 all these problems is to make a copy of the data file with the illegal characters replaced by underscores, which is my file exposed2.txt:

bugs2 <- read_table("exposed2.txt")

── Column specification ────────────────────────────────────────────────────────

cols(

Log_Concentration = col_double(),

Insects_exposed = col_double(),

Number_killed = col_double()

)bugs2You might get a fictitious fourth column, but if you do, you can safely ignore it the rest of the way. We have all our actual data. We’d have to be careful with Capital Letters this way, but it’s definitely good.

You may have thought that this was a lot of fuss to make about reading in data, but the point is that data can come your way in lots of different forms, and you need to be able to handle whatever you receive so that you can do some analysis with it.

\(\blacksquare\)

- * Looking at the data, would you expect there to be a significant effect of log-concentration? Explain briefly.

Solution

The numbers of insects killed goes up sharply as the concentration increases, while the numbers of insects exposed don’t change much. So I would expect to see a strong, positive effect of concentration, and I would expect it to be strongly significant, especially since we have almost 250 insects altogether.

\(\blacksquare\)

- Run a suitable logistic regression, and obtain a summary of the results.

Solution

This is one of those where each row of data is more than one individual (there were about 50 insects at each concentration), so the response variable needs to have two columns: one of how many insects were killed at that dose, and the other of how many insects survived. This is done using cbind. We don’t actually have a number of insects that survived, but we know how many were exposed, so we can work out how many survived by doing a subtraction inside the cbind:

bugs.1 <- glm(cbind(killed, exposed - killed) ~ log_conc, family = "binomial", data = dead_bugs)

summary(bugs.1)

Call:

glm(formula = cbind(killed, exposed - killed) ~ log_conc, family = "binomial",

data = dead_bugs)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -4.8923 0.6426 -7.613 2.67e-14 ***

log_conc 3.1088 0.3879 8.015 1.11e-15 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 96.6881 on 4 degrees of freedom

Residual deviance: 1.4542 on 3 degrees of freedom

AIC: 24.675

Number of Fisher Scoring iterations: 4Extra: In the original part (c), I had you make the response matrix first, which is how I used to teach it, but I came to realize that this way plays better with marginaleffects because all the things in the glm line are in the dataframe.

\(\blacksquare\)

- Does your analysis support your answer to (here)? Explain briefly.

Solution

That’s a very small P-value, \(1.1\times 10^{-15}\), on log_conc, so there is no doubt that concentration has an effect on an insect’s chances of being killed. This is exactly what I guessed in (here), which I did before looking at the results, honest!

You might also note that the Estimate for log_conc is positive and, by the standards of these things, pretty large in size, so the probability of being killed goes up pretty sharply as log-concentration increases.

\(\blacksquare\)

- Obtain predicted probabilities of an insect’s being killed at each of the log-concentrations in the data set.

Solution

Use a newdata that is the original dataframe, and display only the columns of interest:

cbind(predictions(bugs.1, newdata = dead_bugs)) %>%

select(log_conc, exposed, killed, estimate)The advantage of this is that you can compare the observed with the predicted. For example, 44 out of 50 insects were killed at log-dose 2.32, which is a proportion of 0.88, pretty close to the prediction of 0.91.

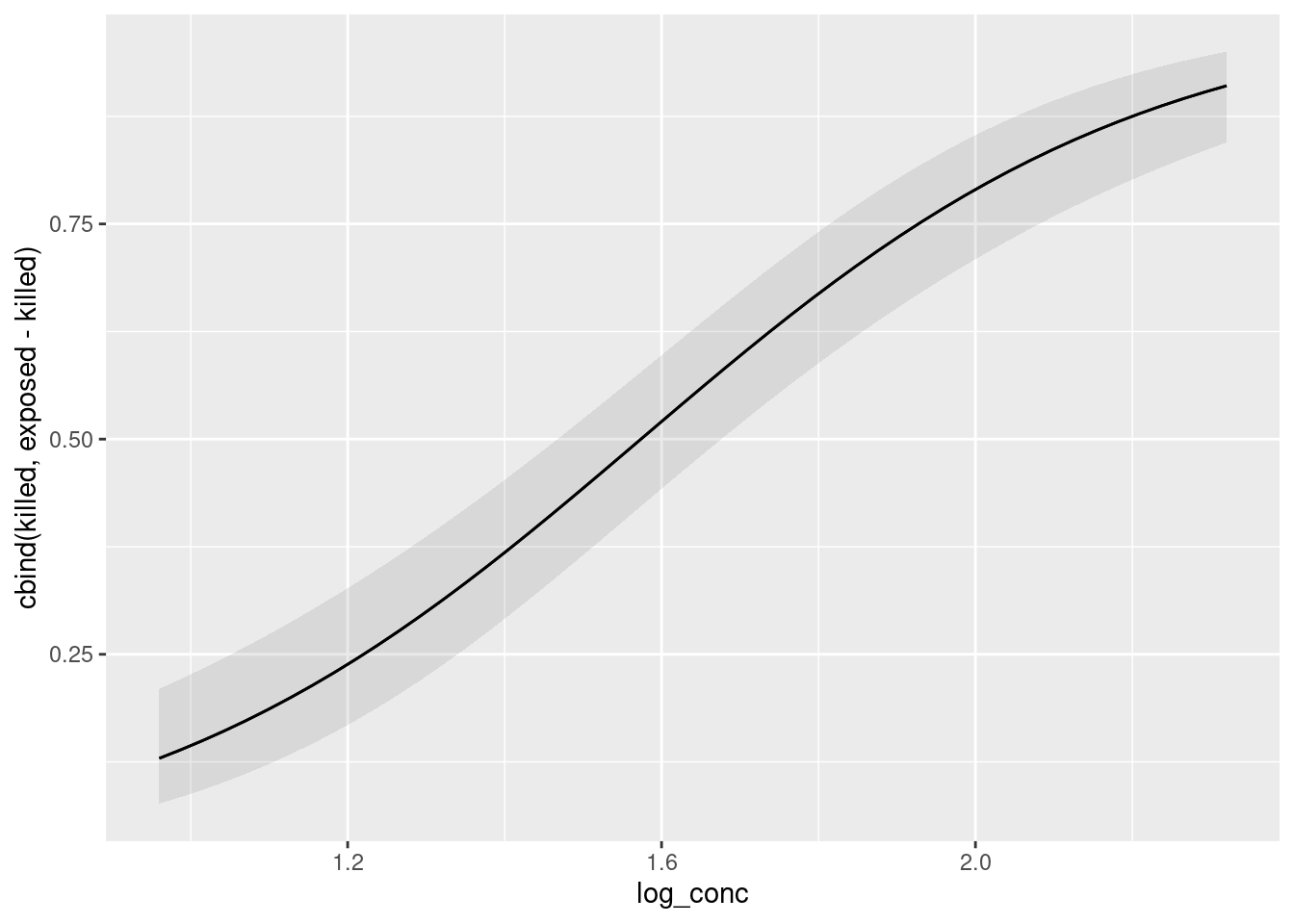

- Obtain a plot of the predicted probabilities of an insect’s being killed, as it depends on the log-concentration.

This is plot_predictions, pretty much the obvious way:

plot_predictions(model = bugs.1, condition = "log_conc")

The thing I always forget is that the name of the explanatory variable has to be in quotes, which is not what we are used to from the tidyverse. The odd thing on the \(y\)-axis is our response variable; the predictions are the probability of being killed, which we could make our \(y\)-axis actually say, like this:

plot_predictions(model = bugs.1, condition = "log_conc") +

labs(y = "Prob(killed)")

As the log-concentration goes up, you can see how clearly the probability of the aphid being killed goes up. The confidence band is narrow because there is lots of data (almost 250 aphids altogether), and the confidence interval is much higher at the end than at the beginning, which is why the P-value came out so tiny.

\(\blacksquare\)

- People in this kind of work are often interested in the “median lethal dose”. In this case, that would be the log-concentration of the insecticide that kills half the insects. Based on your predictions, roughly what do you think the median lethal dose is?

Solution

This is probably easier to see from the graph, though the \(x\)-scale doesn’t make it very easy to be accurate: look across to where the prediction crosses the probability of 0.5, which is somewhere between a log-concentration of 1.5 and 1.6.

If you go back and look at the predictions, the log-concentration of 1.63 is predicted to kill just over half the insects, so the median lethal dose should be a bit less than 1.63. It should not be as small as 1.33, though, since that log-concentration only kills less than a third of the insects. So I would guess somewhere a bit bigger than 1.5. Any guess somewhere in this ballpark is fine: you really cannot be very accurate.

Extra: this is kind of a strange prediction problem, because we know what the response variable should be, and we want to know what the explanatory variable’s value is. Normally we do predictions the other way around.4 So the only way to get a more accurate figure is to try some different log-concentrations, and see which one gets closest to a probability 0.5 of killing the insect.

Something like this would work:

new <- datagrid(model = bugs.1, log_conc = seq(1.5, 1.63, 0.01))

cbind(predictions(bugs.1, newdata = new))The closest one of these to a probability of 0.5 is 0.4971, which goes with a log-concentration of 1.57: indeed, a bit bigger than 1.5 and a bit less than 1.63, and close to what I read off from my graph. The seq in the construction of the new data frame is “fill sequence”: go from 1.5 to 1.63 in steps of 0.01. We are predicting for values of log_conc that we chose, so the way to go is to make a new dataframe with datagrid and then feed that into predictions with newdata.

Now, of course this is only our “best guess”, like a single-number prediction in regression. There is uncertainty attached to it (because the actual logistic regression might be different from the one we estimated), so we ought to provide a confidence interval for it. But I’m riding the bus as I type this, so I can’t look it up right now.

Later: there’s a function called dose.p in MASS that appears to do this:

lethal <- dose.p(bugs.1)

lethal Dose SE

p = 0.5: 1.573717 0.05159576We have a sensible point estimate (the same 1.57 that we got by hand), and we have a standard error, so we can make a confidence interval by going up and down twice it (or 1.96 times it) from the estimate. The structure of the result is a bit arcane, though:

str(lethal) 'glm.dose' Named num 1.57

- attr(*, "names")= chr "p = 0.5:"

- attr(*, "SE")= num [1, 1] 0.0516

..- attr(*, "dimnames")=List of 2

.. ..$ : chr "p = 0.5:"

.. ..$ : NULL

- attr(*, "p")= num 0.5It is what R calls a “vector with attributes”. To get at the pieces and calculate the interval, we have to do something like this:

(lethal_est <- as.numeric(lethal))[1] 1.573717(lethal_SE <- as.vector(attr(lethal, "SE")))[1] 0.05159576and then make the interval:

lethal_est + c(-2, 2) * lethal_SE[1] 1.470526 1.6769091.47 to 1.68.

I got this idea from page 4.14 of link. I think I got a little further than he did. An idea that works more generally is to get several intervals all at once, say for the “quartile lethal doses” as well:

lethal <- dose.p(bugs.1, p = c(0.25, 0.5, 0.75))

lethal Dose SE

p = 0.25: 1.220327 0.07032465

p = 0.50: 1.573717 0.05159576

p = 0.75: 1.927108 0.06532356This looks like a data frame or matrix, but is actually a “named vector”, so enframe will get at least some of this and turn it into a genuine data frame:

enframe(lethal)That doesn’t get the SEs, so we’ll make a new column by grabbing the “attribute” as above:

enframe(lethal) %>% mutate(SE = attr(lethal, "SE"))and now we make the intervals by making new columns containing the lower and upper limits:

enframe(lethal) %>%

mutate(SE = attr(lethal, "SE")) %>%

mutate(LCL = value - 2 * SE, UCL = value + 2 * SE)Now we have intervals for the median lethal dose, as well as for the doses that kill a quarter and three quarters of the aphids.

\(\blacksquare\)

27.13 The effects of Substance A

In a dose-response experiment, animals (or cell cultures or human subjects) are exposed to some toxic substance, and we observe how many of them show some sort of response. In this experiment, a mysterious Substance A is exposed at various doses to 100 cells at each dose, and the number of cells at each dose that suffer damage is recorded. The doses were 10, 20, … 70 (mg), and the number of damaged cells out of 100 were respectively 10, 28, 53, 77, 91, 98, 99.

- Find a way to get these data into R, and show that you have managed to do so successfully.

Solution

There’s not much data here, so we don’t need to create a file, although you can do so if you like (in the obvious way: type the doses and damaged cell numbers into a .txt file or spreadsheet and read in with the appropriate read_ function). Or, use a tribble:

dr <- tribble(

~dose, ~damaged,

10, 10,

20, 28,

30, 53,

40, 77,

50, 91,

60, 98,

70, 99

)

drOr, make a data frame with the values typed in:

dr2 <- tibble(

dose = seq(10, 70, 10),

damaged = c(10, 28, 53, 77, 91, 98, 99)

)

dr2seq fills a sequence “10 to 70 in steps of 10”, or you can just list the doses.

For these, find a way you like, and stick with it.

\(\blacksquare\)

- Would you expect to see a significant effect of dose for these data? Explain briefly.

Solution

The number of damaged cells goes up sharply as the dose goes up (from a very small number to almost all of them). So I’d expect to see a strongly significant effect of dose. This is far from something that would happen by chance.

\(\blacksquare\)

- Fit a logistic regression modelling the probability of a cell being damaged as it depends on dose. Display the results. (Comment on them is coming later.)

Solution

This has a bunch of observations at each dose (100 cells, in fact), so we need to do the two-column response thing: the successes in the first column and the failures in the second. This means that we need to calculate the number of cells at each dose that were not damaged, by subtracting the number that were damaged from 100. This is best done within the glm, so that everything is accessible to plot_predictions later.

Now we fit our model:

cells.1 <- glm(cbind(damaged, 100 - damaged) ~ dose, family = "binomial", data = dr)

summary(cells.1)

Call:

glm(formula = cbind(damaged, 100 - damaged) ~ dose, family = "binomial",

data = dr)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -3.275364 0.278479 -11.76 <2e-16 ***

dose 0.113323 0.008315 13.63 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 384.13349 on 6 degrees of freedom

Residual deviance: 0.50428 on 5 degrees of freedom

AIC: 31.725

Number of Fisher Scoring iterations: 4The programmer in you is probably complaining “but, 100 is a number and damaged is a vector of 7 numbers. How does R know to subtract each one from 100?” Well, R has what’s known as “recycling rules”: if you try to add or subtract (or elementwise multiply or divide) two vectors of different lengths, it recycles the smaller one by repeating it until it’s as long as the longer one. So rather than 100-damaged giving an error, it does what you want.5

\(\blacksquare\)

- Does your output indicate that the probability of damage really does increase with dose? (There are two things here: is there really an effect, and which way does it go?)

Solution

The slope of dose is significantly nonzero (P-value less than \(2.2 \times 10^{-16}\), which is as small as it can be). The slope itself is positive, which means that as dose goes up, the probability of damage goes up.

That’s all I needed, but if you want to press on: the slope is 0.113, so an increase of 1 in dose goes with an increase of 0.113 in the log-odds of damage. Or it multiplies the odds of damage by \(\exp(0.113)\). Since 0.113 is small, this is about \(1.113\) (mathematically, \(e^x\simeq 1+x\) if \(x\) is small), so that the increase is about 11%. If you like, you can get a rough CI for the slope by going up and down twice its standard error (this is the usual approximately-normal thing). Here that would be

0.113323 + c(-2, 2) * 0.008315[1] 0.096693 0.129953I thought about doing that in my head, but thought better of it, since I have R just sitting here. The interval is short (we have lots of data) and definitely does not contain zero.

\(\blacksquare\)

- Obtain predicted damage probabilities for some representative doses.

Solution

Pick some representative doses first, and then use them as newdata. The ones in the original data are fine (there are only seven of them), but you can use some others if you prefer:

cbind(predictions(cells.1, newdata = dr)) %>%

select(dose, damaged, estimate, conf.low, conf.high) -> p

pI saved mine to use again later, but you don’t have to unless you want to use your predictions again later.

\(\blacksquare\)

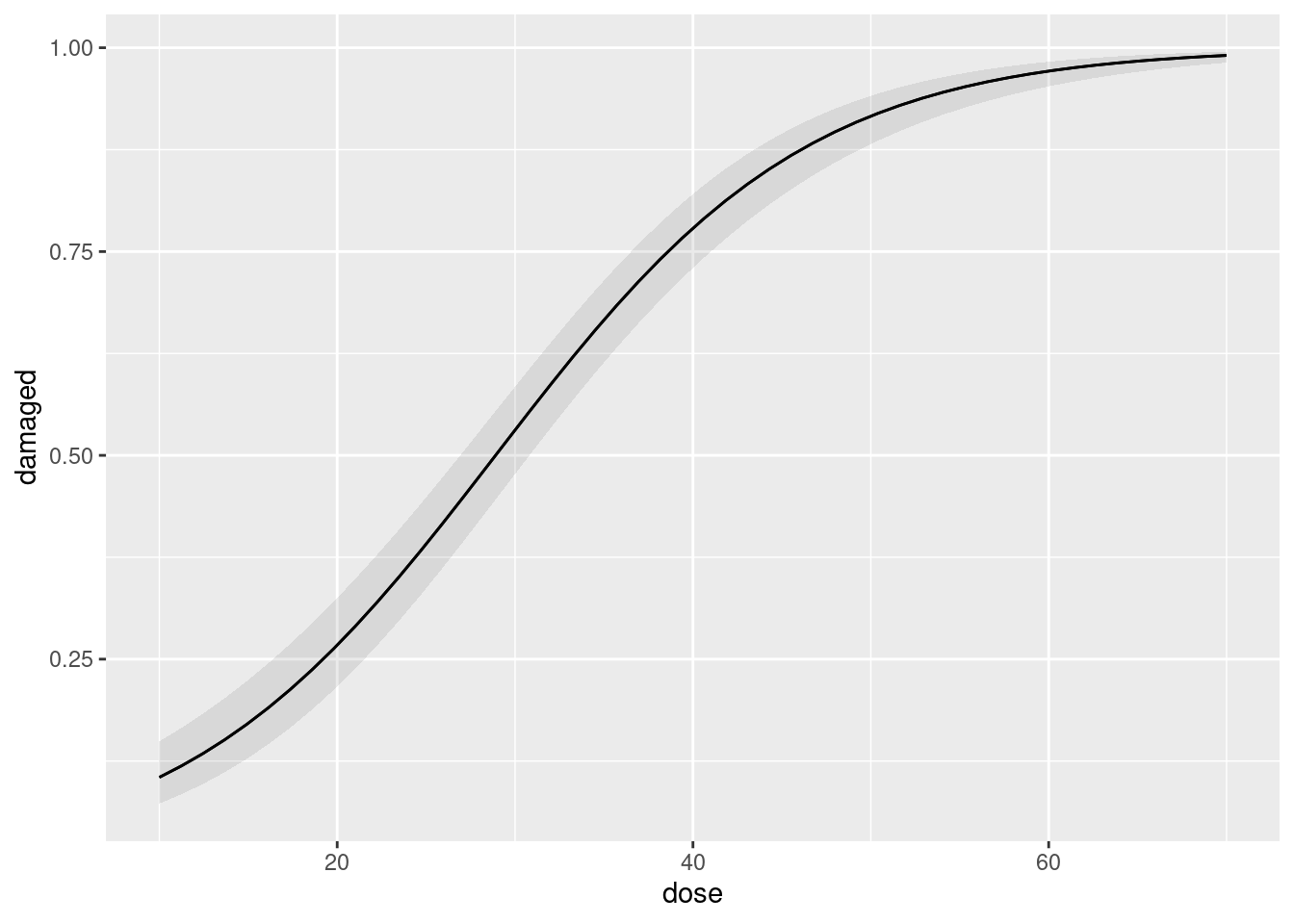

- Draw a graph of the predicted probabilities, and to that add the observed proportions of damage at each dose. Hints: you will have to calculate the observed proportions first. Looking at the predicted probabilities, would you say that the model fits well? Explain briefly.

Solution

Because we used the cbind thing in our glm, we can start with plot_predictions as expected:

plot_predictions(cells.1, condition = "dose")

The predicted probability of a cell being damaged is very precisely estimated all the way up, and the probability is almost certainly very close to 1 for large doses.

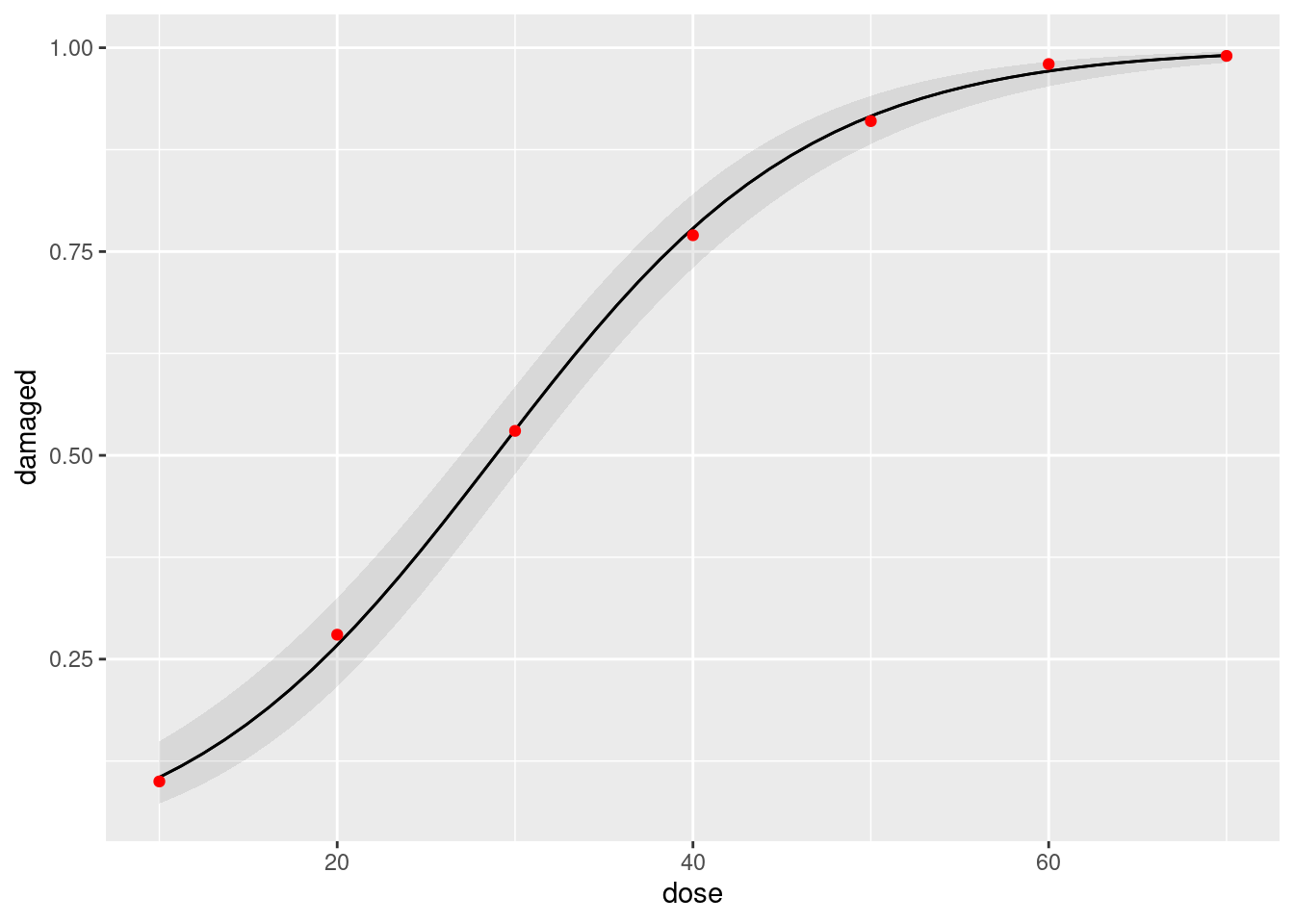

Now, we need to figure out how to add the data to this graph. Let’s take a look at our original dataframe:

drTo that we need to add a column of proportion damaged, which is damaged divided by 100 (we know there were exactly 100 cells at each dose):

dr %>% mutate(prop = damaged / 100) -> dr2

dr2Check. I saved this to add to the graph.

Now you can add this to the graph we made before with a geom_point with a data = and an aes, making the points red:

plot_predictions(cells.1, condition = "dose") +

geom_point(data = dr2, aes(x = dose, y = prop), colour = "red")

I also made the data points red (you don’t need to, but if you want to, put the colour outside the aes, to make it clear that the colour is constant, not determined by any of the variables in your dataframe).

The predicted probabilities ought to be close to the observed proportions. They are in fact very close to them, so the model fits very well indeed.

Your actual words are a judgement call, so precisely what you say doesn’t matter so much, but I think this model fit is actually closer than you could even hope to expect, it’s that good. But, your call. I think your answer ought to contain “close” or “fits well” at the very least.

\(\blacksquare\)

27.14 What makes an animal get infected?

Some animals got infected with a parasite. We are interested in whether the likelihood of infection depends on any of the age, weight and sex of the animals. The data are at link. The values are separated by tabs.

- Read in the data and take a look at the first few lines. Is this one animal per line, or are several animals with the same age, weight and sex (and infection status) combined onto one line? How can you tell?

Solution

The usual beginnings, bearing in mind the data layout:

my_url <- "http://ritsokiguess.site/datafiles/infection.txt"

infect <- read_tsv(my_url)Rows: 81 Columns: 4

── Column specification ────────────────────────────────────────────────────────

Delimiter: "\t"

chr (2): infected, sex

dbl (2): age, weight

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.infectSuccess. This appears to be one animal per line, since there is no indication of frequency (of “how many”). If you were working as a consultant with somebody’s data, this would be a good thing to confirm with them before you went any further.

You can check a few more lines to convince yourself and the story is much the same. The other hint is that you have actual categories of response, which usually indicates one individual per row, but not always. If it doesn’t, you have some extra work to do to bash it into the right format.

Extra: let’s see whether we can come up with an example of that. I’ll make a smaller example, and perhaps the place to start is “all possible combinations” of a few things. crossing is the same idea as datagrid, except that it doesn’t need a model, and so is better for building things from scratch:

d <- crossing(age = c(10, 12), gender = c("f", "m"), infected = c("y", "n"))

dThese might be one individual per row, or they might be more than one, as they would be if we have a column of frequencies:

d <- d %>% mutate(freq = c(12, 19, 17, 11, 18, 26, 16, 8))

dNow, these are multiple observations per row (the presence of frequencies means there’s no doubt about that), but the format is wrong: infected is my response variable, and we want the frequencies of infected being y or n in separate columns — that is, we have to untidy the data a bit to make it suitable for modelling. This is pivot_wider, the opposite of pivot_longer:

d %>% pivot_wider(names_from=infected, values_from=freq) -> d1

d1Now you can pull out the columns y and n and make them into your response, and predict that from age and gender:

response <- with(d1, cbind(y, n))

response y n

[1,] 19 12

[2,] 11 17

[3,] 26 18

[4,] 8 16The moral of this story is that if you are going to have multiple observations per row, you probably want the combinations of explanatory variables one per row, but you want the categories of the response variable in separate columns.

Back to where we were the rest of the way.

\(\blacksquare\)

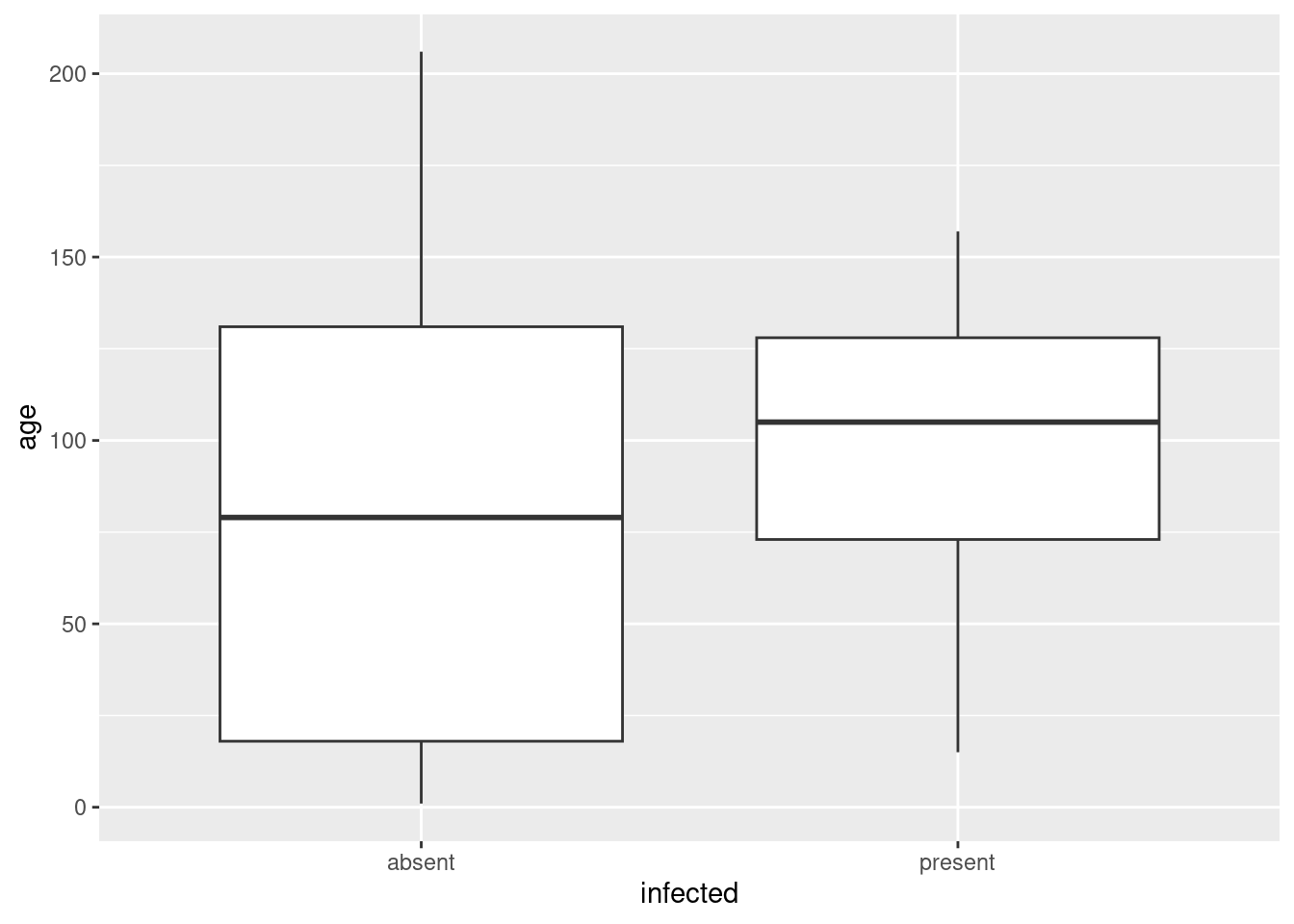

- * Make suitable plots or summaries of

infectedagainst each of the other variables. (You’ll have to think aboutsex, um, you’ll have to think about thesexvariable, because it too is categorical.) Anything sensible is OK here. You might like to think back to what we did in Question here for inspiration. (You can also investigatetable, which does cross-tabulations.)

Solution

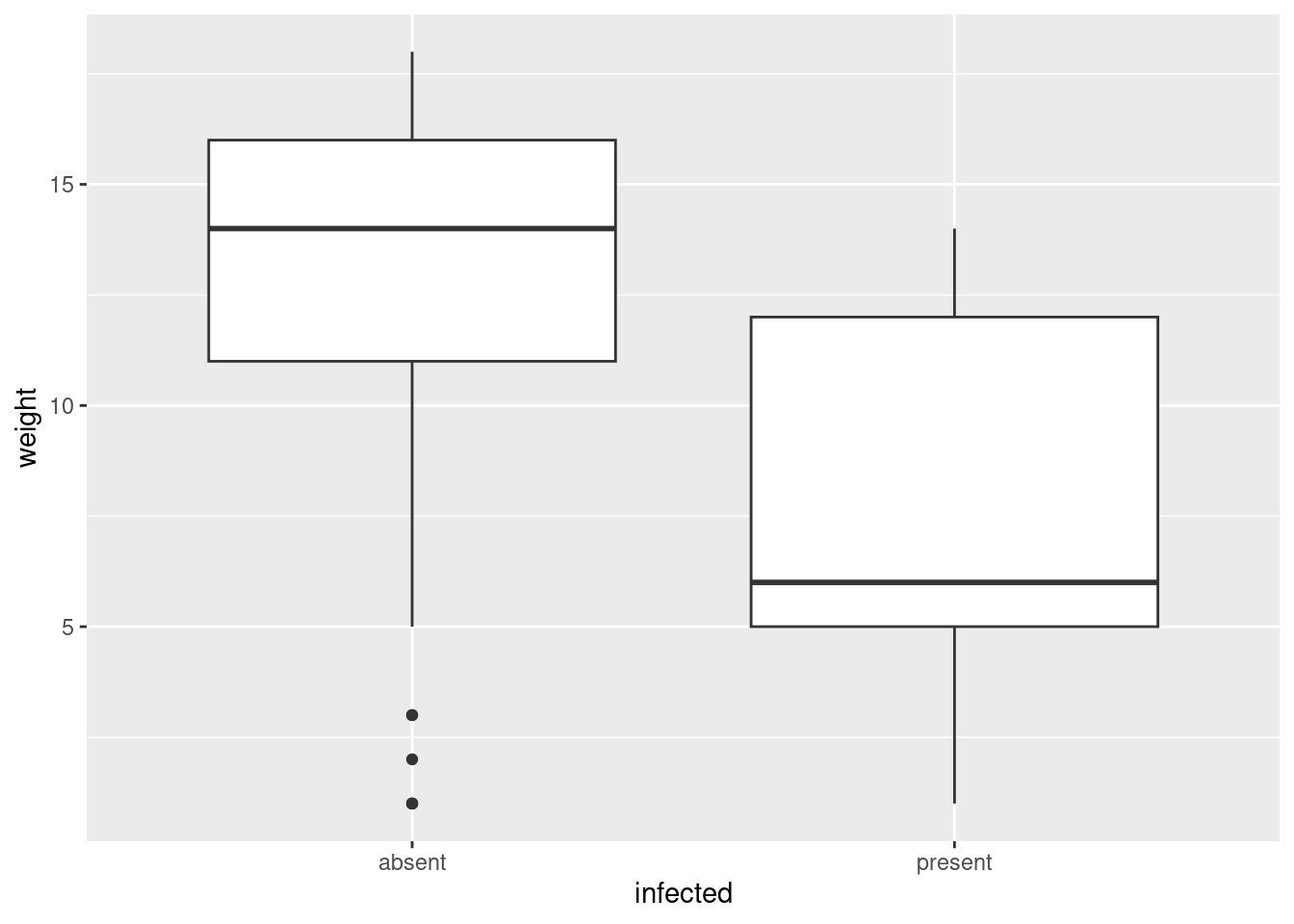

What comes to my mind for the numerical variables age and weight is boxplots:

ggplot(infect, aes(x = infected, y = age)) + geom_boxplot()

ggplot(infect, aes(x = infected, y = weight)) + geom_boxplot()

The variables sex and infected are both categorical. I guess a good plot for those would be some kind of grouped bar plot, which I have to think about. So let’s first try a numerical summary, a cross-tabulation, which is gotten via table:

with(infect, table(sex, infected)) infected

sex absent present

female 17 11

male 47 6Or, if you like the tidyverse:

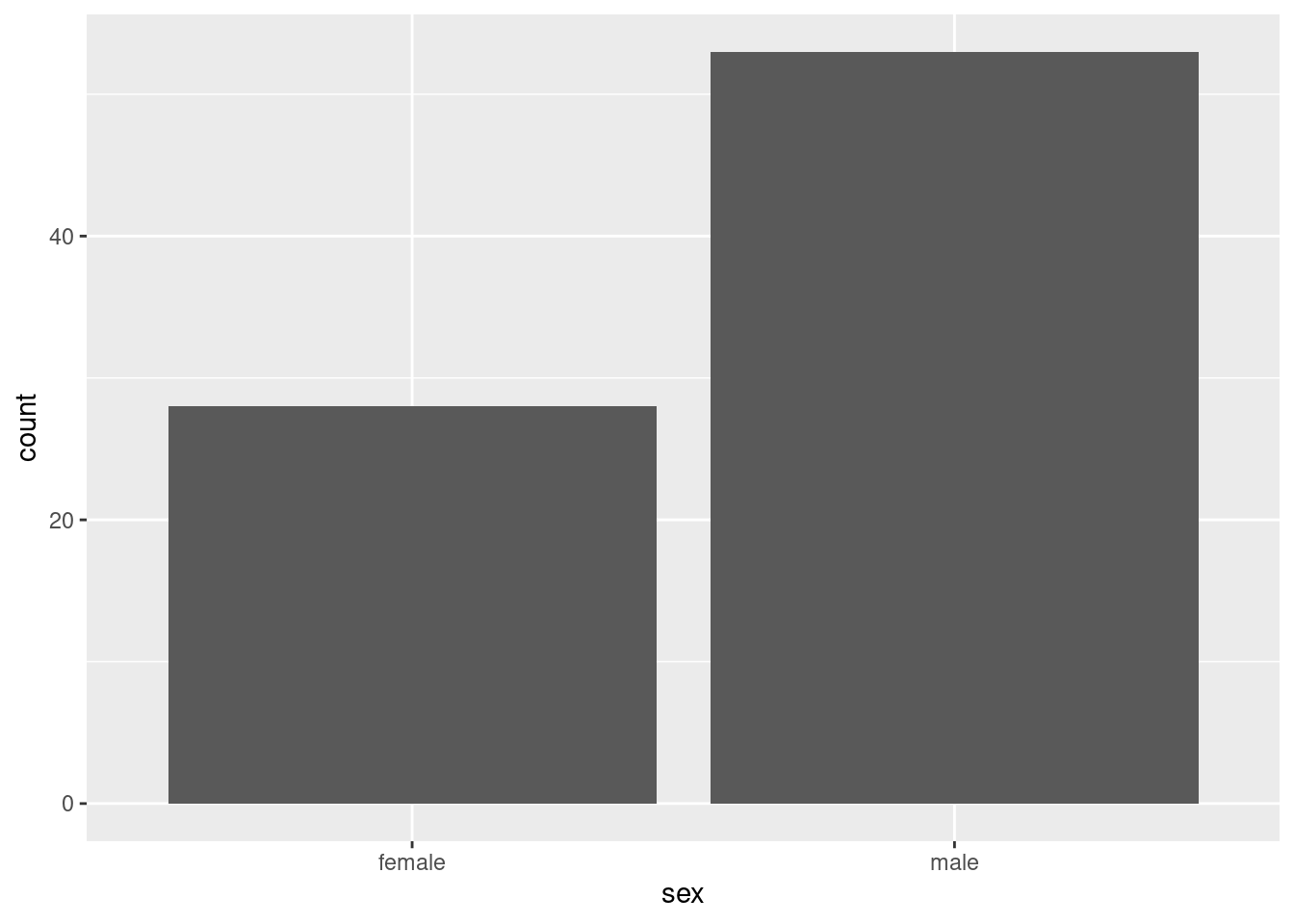

infect %>% count(sex, infected)Now, bar plots. Let’s start with one variable. The basic bar plot has categories of a categorical variable along the \(x\)-axis and each bar is a count of how many observations were in that category. What is nice about geom_bar is that it will do the counting for you, so that the plot is just this:

ggplot(infect, aes(x = sex)) + geom_bar()

There are about twice as many males as females.

You may think that this looks like a histogram, which it almost does, but there is an important difference: the kind of variable on the \(x\)-axis. Here, it is a categorical variable, and you count how many observations fall in each category (at least, ggplot does). On a histogram, the \(x\)-axis variable is a continuous numerical one, like height or weight, and you have to chop it up into intervals (and then you count how many observations are in each chopped-up interval).

Technically, on a bar plot, the bars have a little gap between them (as here), whereas the histogram bars are right next to each other, because the right side of one histogram bar is the left side of the next.

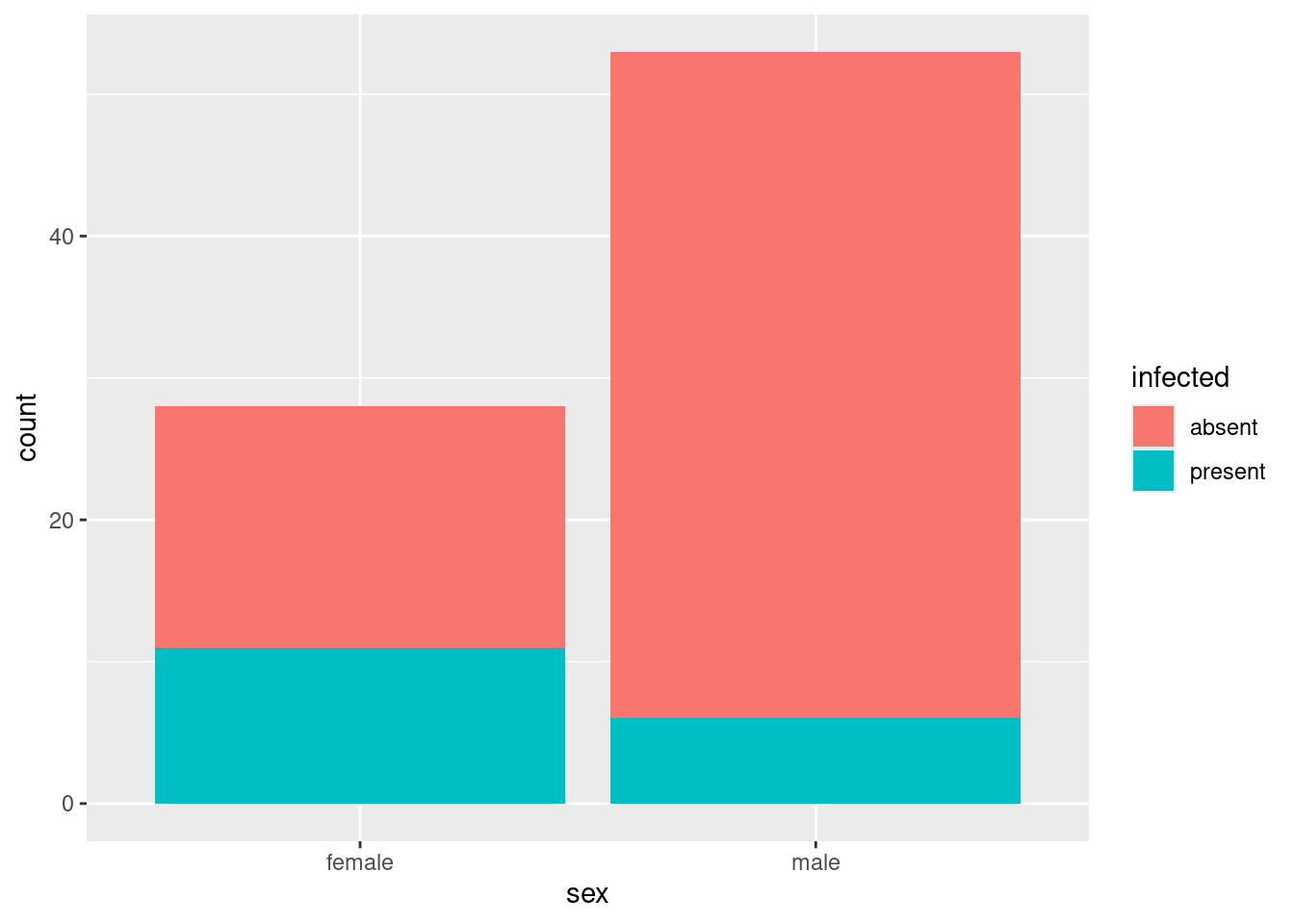

All right, two categorical variables. The idea is that you have each bar divided into sub-bars based on the frequencies of a second variable, which is specified by fill. Here’s the basic idea:

ggplot(infect, aes(x = sex, fill = infected)) + geom_bar()

This is known in the business as a “stacked bar chart”. The issue is how much of each bar is blue, which is unnecessarily hard to judge because the male bar is taller. (Here, it is not so bad, because the amount of blue in the male bar is smaller and the bar is also taller. But we got lucky here.)

There are two ways to improve this. One is known as a “grouped bar chart”, which goes like this:

ggplot(infect, aes(x = sex, fill = infected)) +

geom_bar(position = "dodge")

The absent and present frequencies for females are next to each other, and the same for males, and you can read off how big they are from the \(y\)-scale. This is my preferred graph for two (or more than two) categorical variables.

You could switch the roles of sex and infected and get a different chart, but one that conveys the same information. Try it. (The reason for doing it the way around I did is that being infected or not is the response and sex is explanatory, so that on my plot you can ask “out of the males, how many were infected?”, which is the way around that makes sense.)

The second way is to go back to stacked bars, but make them the same height, so you can compare the fractions of the bars that are each colour. This is position="fill":

ggplot(infect, aes(x = sex, fill = infected)) +

geom_bar(position = "fill")

This also shows that more of the females were infected than the males, but without getting sidetracked into the issue that there were more males to begin with.

I wrote this question in early 2017. At that time, I wrote:

I learned about this one approximately two hours ago. I just ordered Hadley Wickham’s new book “R for Data Science” from Amazon, and it arrived today. It’s in there. (A good read, by the way. I’m thinking of using it as a recommended text next year.) As is so often the way with

ggplot, the final answer looks very simple, but there is a lot of thinking required to get there, and you end up having even more respect for Hadley Wickham for the clarity of thinking that enabled this to be specified in a simple way.

(end quote)

\(\blacksquare\)

- Which, if any, of your explanatory variables appear to be related to

infected? Explain briefly.

Solution

Let’s go through our output from (here). In terms of age, when infection is present, animals are (slightly) older. So there might be a small age effect. Next, when infection is present, weight is typically a lot less. So there ought to be a big weight effect. Finally, from the table, females are somewhere around 50-50 infected or not, but very few males are infected. So there ought to be a big sex effect as well. This also appears in the grouped bar plot, where the red (“absent”) bar for males is much taller than the blue (“present”) bar, but for females the two bars are almost the same height. So the story is that we would expect a significant effect of sex and weight, and maybe of age as well.

\(\blacksquare\)

- Fit a logistic regression predicting

infectedfrom the other three variables. Display thesummary.

Solution

Thus:

infect.1 <- glm(infected ~ age + weight + sex, family = "binomial", data = infect)Error in eval(family$initialize): y values must be 0 <= y <= 1Oh, I forgot to turn infected into a factor. This is the shortcut way to do that:

# infect

infect.1 <- glm(factor(infected) ~ age + weight + sex, family = "binomial", data = infect)

summary(infect.1)

Call:

glm(formula = factor(infected) ~ age + weight + sex, family = "binomial",

data = infect)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 0.609369 0.803288 0.759 0.448096

age 0.012653 0.006772 1.868 0.061701 .

weight -0.227912 0.068599 -3.322 0.000893 ***

sexmale -1.543444 0.685681 -2.251 0.024388 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 83.234 on 80 degrees of freedom

Residual deviance: 59.859 on 77 degrees of freedom

AIC: 67.859

Number of Fisher Scoring iterations: 5drop1(infect.1, test = "Chisq")Or you could create a new or redefined column in the data frame containing the factor version of infected, for example in this way:

infect %>%

mutate(infected = factor(infected)) %>%

glm(infected ~ age + weight + sex, family = "binomial", data = .) -> infect.1a

summary(infect.1a)

Call:

glm(formula = infected ~ age + weight + sex, family = "binomial",

data = .)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 0.609369 0.803288 0.759 0.448096

age 0.012653 0.006772 1.868 0.061701 .

weight -0.227912 0.068599 -3.322 0.000893 ***

sexmale -1.543444 0.685681 -2.251 0.024388 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 83.234 on 80 degrees of freedom

Residual deviance: 59.859 on 77 degrees of freedom

AIC: 67.859

Number of Fisher Scoring iterations: 5Either way is good, and gives the same answer. The second way uses the data=. trick to ensure that the input data frame to glm is the output from the previous step, the one with the factor version of infected in it. The data=. is needed because glm requires a model formula first rather than a data frame (if the data were first, you could just omit it).

\(\blacksquare\)

- * Which variables, if any, would you consider removing from the model? Explain briefly.

Solution

This is the same idea as in multiple regression: look at the end of the line for each variable to get its individual P-value, and if that’s not small, you can take that variable out. age has a P-value of 0.062, which is (just) larger than 0.05, so we can consider removing this variable. The other two P-values, 0.00089 and 0.024, are definitely less than 0.05, so those variables should stay.

Alternatively, you can say that the P-value for age is small enough to be interesting, and therefore that age should stay. That’s fine, but then you need to be consistent in the next part.

You probably noted that sex is categorical. However, it has only the obvious two levels, and such a categorical variable can be assessed for significance this way. If you were worried about this, the right way to go is drop1:

drop1(infect.1, test = "Chisq")The P-values are similar, but not identical.6

I have to stop and think about this. There is a lot of theory that says there are several ways to do stuff in regression, but they are all identical. The theory doesn’t quite apply the same in generalized linear models (of which logistic regression is one): if you had an infinite sample size, the ways would all be identical, but in practice you’ll have a very finite amount of data, so they won’t agree.

I’m thinking about my aims here: I want to decide whether each \(x\)-variable should stay in the model, and for that I want a test that expresses whether the model fits significantly worse if I take it out. The result I get ought to be the same as physically removing it and comparing the models with anova, eg. for age:

infect.1b <- update(infect.1, . ~ . - age)

anova(infect.1b, infect.1, test = "Chisq")This is the same thing as drop1 gives.

So, I think: use drop1 to assess whether anything should come out of a model like this, and use summary to obtain the slopes to interpret (in this kind of model, whether they’re positive or negative, and thus what kind of effect each explanatory variable has on the probability of whatever-it-is.)

\(\blacksquare\)

Solution

I think they are extremely consistent. When we looked at the plots, we said that weight and sex had large effects, and they came out definitely significant. There was a small difference in age between the infected and non-infected groups, and age came out borderline significant (with a P-value definitely larger than for the other variables, so that the evidence of its usefulness was weaker).

\(\blacksquare\)

- * The first and third quartiles of

ageare 26 and 130; the first and third quartiles ofweightare 9 and 16. Obtain predicted probabilities for all combinations of these andsex. (You’ll need to start by making a new data frame, usingdatagridto get all the combinations.)

Solution

Here’s how datagrid goes. Note my use of plural names to denote the things I want all combinations of:

ages <- c(26, 130)

weights <- c(9, 16)

sexes <- c("female", "male")

new <- datagrid(model = infect.1, age = ages, weight = weights, sex = sexes)

newThis is about on the upper end of what you would want to do just using the one line with datagrid in it and putting the actual values in instead of ages, weights etc. To my mind, once it gets longer than about this long, doing on one line starts to get unwieldy. But your taste might be different than mine.

Aside:

I could have asked you to include some more values of age and weight, for example the median as well, to get a clearer picture. But that would have made infect.new bigger, so I stopped here. (If we had been happy with five representative values of age and weight, we could have done predictions (below) with variables and not had to make new at all.)

datagrid makes a data frame from input vectors, so it doesn’t matter if those are different lengths. In fact, it’s also possible to make this data frame from things like quartiles stored in a data frame. To do that, you wrap the whole datagrid in a with:

d <- tibble(age = ages, weight = weights, sex = sexes)

dnew <- with(d, datagrid(model = infect.1, age=age, weight=weight, sex=sex))

newThis one is a little confusing because in eg. age = age, the first age refers to the column that will be in new, and the second one refers to the values that are going in there, namely the column called age in the dataframe d.7

End of aside.

Next, the predictions:

# predictions(infect.1, new)

cbind(predictions(infect.1, new)) %>%

select(estimate, age:sex)Extra: I didn’t ask you to comment on these, since the question is long enough already. But that’s not going to stop me!

These, in predicted, are predicted probabilities of infection.8

The way I remember the one-column-response thing is that the first level is the baseline (as it is in a regression with a categorical explanatory variable), and the second level is the one whose probability is modelled (in the same way that the second, third etc. levels of a categorical explanatory variable are the ones that appear in the summary table).

Let’s start with sex. The probabilities of a female being infected are all much higher than of a corresponding male (with the same age and weight) being infected. Compare, for example, lines 1 and 2. Or 3 and 4. Etc. So sex has a big effect.

What about weight? As weight goes from 9 to 16, with everything else the same, the predicted probability of infection goes sharply down. This is what we saw before: precisely, the boxplot showed us that infected animals were likely to be less heavy.

Last, age. As age goes up, the probabilities go (somewhat) up as well. Compare, for example, lines 1 and 5 or lines 4 and 8. I think this is a less dramatic change than for the other variables, but that’s a judgement call.

I got this example from (horrible URL warning) here: link It starts on page 275 in my edition. He goes at the analysis a different way, but he finishes with another issue that I want to show you.

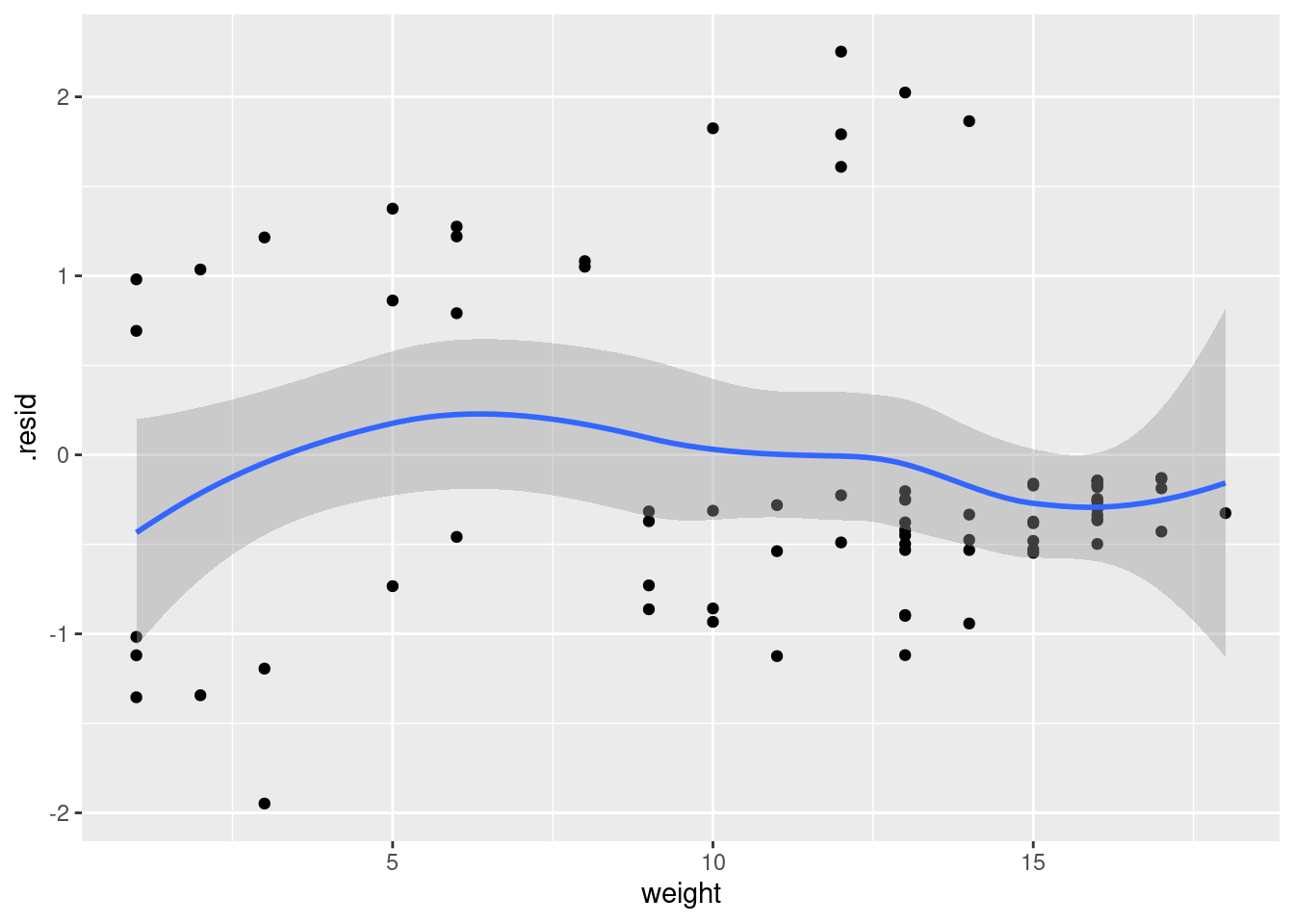

Let’s work out the residuals and plot them against our quantitative explanatory variables. I think the best way to do this is augment from broom, to create a data frame containing the residuals alongside the original data:

library(broom)

infect.1a <- infect.1 %>% augment(infect)

infect.1a %>% as_tibble()ggplot(infect.1a, aes(x = weight, y = .resid)) + geom_point() +

geom_smooth()`geom_smooth()` using method = 'loess' and formula = 'y ~ x'

infect.1a is, I think, a genuine data.frame rather than a tibble.

I don’t quite know what to make of that plot. It doesn’t look quite random, and yet there are just some groups of points rather than any real kind of trend.

The corresponding plot with age goes the same way:

ggplot(infect.1a, aes(x = age, y = .resid)) + geom_point() +

geom_smooth()`geom_smooth()` using method = 'loess' and formula = 'y ~ x'

Crawley found the slightest suggestion of an up-and-down curve in there. I’m not sure I agree, but that’s what he saw. As with a regular regression, the residuals against anything should look random, with no trends. (Though the residuals from a logistic regression can be kind of odd, because the response variable can only be 1 or 0.) Crawley tries adding squared terms to the logistic regression, which goes like this. The glm statement is long, as they usually are, so it’s much easier to use update:

infect.2 <- update(infect.1, . ~ . + I(age^2) + I(weight^2))As we saw before, when thinking about what to keep, we want to look at drop1:

drop1(infect.2, test = "Chisq")The squared terms are both significant. The linear terms, age and weight, have to stay, regardless of their significance.9 What do the squared terms do to the predictions? Before, there was a clear one-directional trend in the relationships with age and weight. Has that changed? Let’s see. We’ll need a few more ages and weights to investigate with. I think the set of five representative values that comes out of predictions with variables would be ideal (and saves us having to make another new).

Let’s start by assessing the effect of age:

summary(infect) infected age weight sex

Length:81 Min. : 1.00 Min. : 1.00 Length:81

Class :character 1st Qu.: 26.00 1st Qu.: 9.00 Class :character

Mode :character Median : 87.00 Median :13.00 Mode :character

Mean : 83.65 Mean :11.49

3rd Qu.:130.00 3rd Qu.:16.00

Max. :206.00 Max. :18.00 new <- datagrid(model = infect.2, age = c(1, 26, 84, 130, 206))

newcbind(predictions(infect.2, newdata = new)) %>%

select(estimate, age, weight, sex)The actual values of age we chose are as shown. The other columns are constant; the values are the mean weight and the more common sex. We really can see the effect of age with all else constant.

Here, the predicted infection probabilities go up with age and then come down again, as a squared term in age will allow them to do, compared to what we had before:

cbind(predictions(infect.1, newdata = new)) %>%

select(estimate, age, weight, sex)where the probabilities keep on going up.

All right, what about the effect of weight? Here’s the first model:

new <- datagrid(model = infect.2, weight = c(1, 9, 13, 16, 18))

cbind(predictions(infect.1, newdata = new)) %>%

select(estimate, age, weight, sex)and here’s the second one with squared terms:

cbind(predictions(infect.2, newdata = new)) %>%

select(estimate, age, weight, sex)This one is not so dissimilar: in the linear model, the predicted probabilities of infection start high and go down, but in the model with squared terms they go slightly up before going down.

We couldn’t have a squared term in sex, since there are only two sexes (in this data set), so the predictions might be pretty similar for the two models:

new <- datagrid(model = infect.2, sex = c("female", "male"))

cbind(predictions(infect.1, newdata = new)) %>%

select(estimate, age, weight, sex)and

cbind(predictions(infect.2, newdata = new)) %>%

select(estimate, age, weight, sex)They are actually quite different. For the model with squared terms in age and weight, the predicted probabilities of infection are a lot higher for both males and females, at least at these (mean) ages and weights.

\(\blacksquare\)

27.15 The brain of a cat

A large number (315) of psychology students were asked to imagine that they were serving on a university ethics committee hearing a complaint against animal research being done by a member of the faculty. The students were told that the surgery consisted of implanting a device called a cannula in each cat’s brain, through which chemicals were introduced into the brain and the cats were then given psychological tests. At the end of the study, the cats’ brains were subjected to histological analysis. The complaint asked that the researcher’s authorization to carry out the study should be withdrawn, and the cats should be handed over to the animal rights group that filed the complaint. It was suggested that the research could just as well be done with computer simulations.

All of the psychology students in the survey were told all of this. In addition, they read a statement by the researcher that no animal felt much pain at any time, and that computer simulation was not an adequate substitute for animal research. Each student was also given one of the following scenarios that explained the benefit of the research:

“cosmetic”: testing the toxicity of chemicals to be used in new lines of hair care products.

“theory”: evaluating two competing theories about the function of a particular nucleus in the brain.

“meat”: testing a synthetic growth hormone said to potentially increase meat production.

“veterinary”: attempting to find a cure for a brain disease that is killing domesticated cats and endangered species of wild cats.

“medical”: evaluating a potential cure for a debilitating disease that afflicts many young adult humans.

Finally, each student completed two questionnaires: one that would assess their “relativism”: whether or not they believe in universal moral principles (low score) or whether they believed that the appropriate action depends on the person and situation (high score). The second questionnaire assessed “idealism”: a high score reflects a belief that ethical behaviour will always lead to good consequences (and thus that if a behaviour leads to any bad consequences at all, it is unethical).10

After being exposed to all of that, each student stated their decision about whether the research should continue or stop.

I should perhaps stress at this point that no actual cats were harmed in the collection of these data (which can be found as a .csv file at link). The variables in the data set are these:

decision: whether the research should continue or stop (response)idealism: score on idealism questionnairerelativism: score on relativism questionnairegenderof studentscenarioof research benefits that the student read.

A more detailed discussion11 of this study is at link.

- Read in the data and check by looking at the structure of your data frame that you have something sensible. Do not call your data frame

decision, since that’s the name of one of the variables in it.

Solution

So, like this, using the name decide in my case:

my_url <- "https://ritsokiguess.site/datafiles/decision.csv"

decide <- read_csv(my_url)Rows: 315 Columns: 5

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

chr (3): decision, gender, scenario

dbl (2): idealism, relativism

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.decideThe variables are all the right things and of the right types: the decision, gender and the scenario are all text (representing categorical variables), and idealism and relativism, which were scores on a test, are quantitative (numerical). There are, as promised, 315 observations.

\(\blacksquare\)

- Fit a logistic regression predicting

decisionfromgender. Is there an effect of gender?

Solution

Turn the response into a factor somehow, either by creating a new variable in the data frame or like this:

decide.1 <- glm(factor(decision) ~ gender, data = decide, family = "binomial")

summary(decide.1)

Call:

glm(formula = factor(decision) ~ gender, family = "binomial",

data = decide)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 0.8473 0.1543 5.491 3.99e-08 ***

genderMale -1.2167 0.2445 -4.976 6.50e-07 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 425.57 on 314 degrees of freedom

Residual deviance: 399.91 on 313 degrees of freedom

AIC: 403.91

Number of Fisher Scoring iterations: 4The P-value for gender is \(6.5 \times 10^{-7}\), which is very small, so there is definitely an effect of gender. It’s not immediately clear what kind of effect it is: that’s the reason for the next part, and we’ll revisit this slope coefficient in a moment. Categorical explanatory variables are perfectly all right as text. Should I have used drop1 to assess the significance? Maybe:

drop1(decide.1, test = "Chisq")The thing is, this gives us a P-value but not a slope, which we might have wanted to try to interpret. Also, the P-value in summary is so small that it is likely to be still significant in drop1 as well.

\(\blacksquare\)

- To investigate the effect (or non-effect) of

gender, create a contingency table by feedingdecisionandgenderintotable. What does this tell you?

Solution

with(decide, table(decision, gender)) gender

decision Female Male

continue 60 68

stop 140 47Females are more likely to say that the study should stop (a clear majority), while males are more evenly split, with a small majority in favour of the study continuing.

If you want the column percents as well, you can use prop.table. Two steps: save the table from above into a variable, then feed that into prop.table, calling for column percentages rather than row percentages:

tab <- with(decide, table(decision, gender))

prop.table(tab, 2) gender

decision Female Male

continue 0.3000000 0.5913043

stop 0.7000000 0.4086957Why column percentages? Well, thinking back to STAB22 or some such place, when one of your variables is acting like a response or outcome (decision here), make the percentages out of the other one. Given that a student is a female, how likely are they to call for the research to stop? The other way around makes less sense: given that a person wanted the research to stop, how likely are they to be female?

About 70% of females and 40% of males want the research to stop. That’s a giant-sized difference. No wonder it was significant.

The other way of making the table is to use xtabs, with the same result:

xtabs(~ decision + gender, data = decide) gender

decision Female Male

continue 60 68