library(tidyverse)17 Tidying data

17.1 Baseball and softball spaghetti

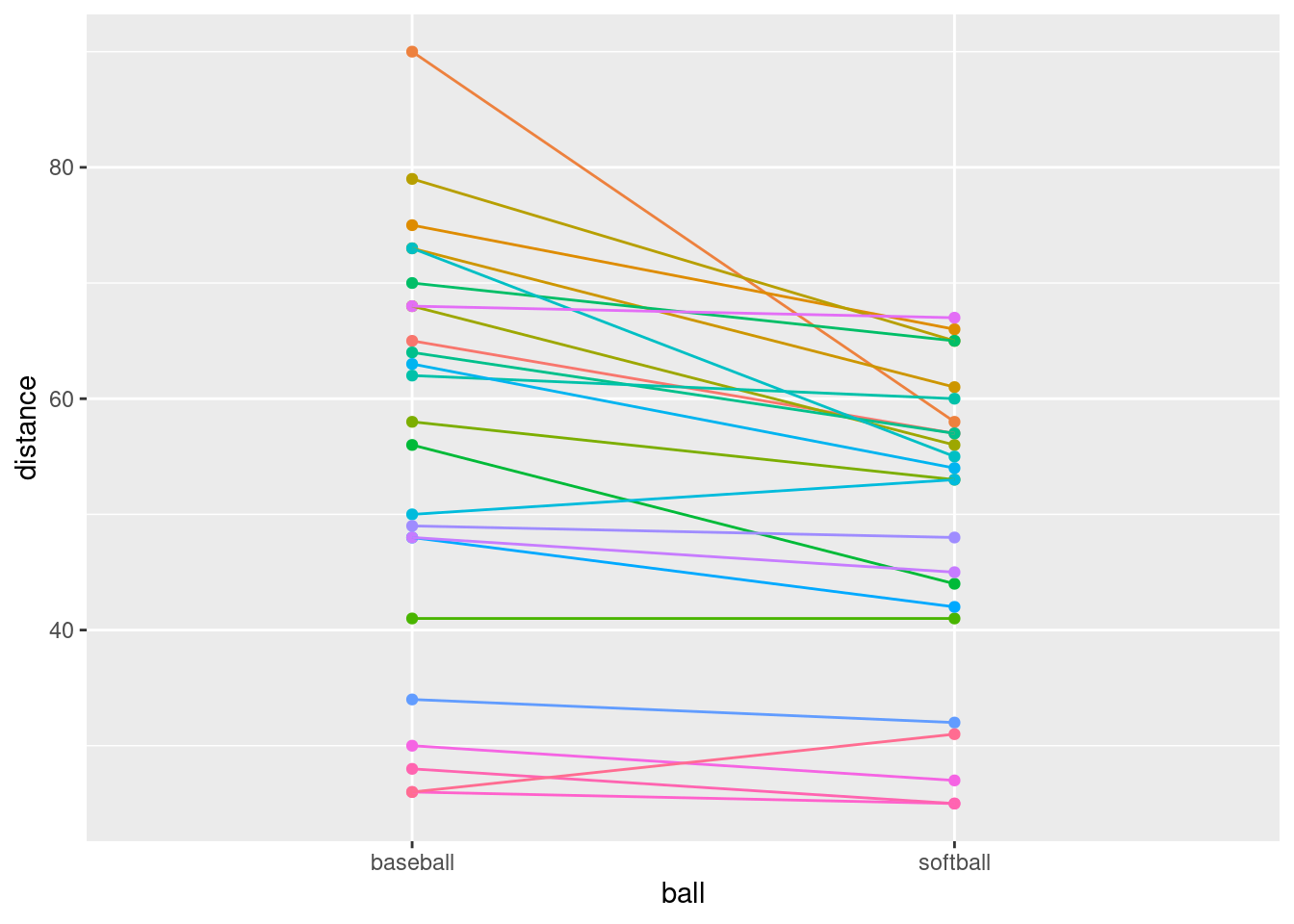

On a previous assignment, we found that students could throw a baseball further than they could throw a softball. In this question, we will make a graph called a “spaghetti plot” to illustrate this graphically. (The issue previously was that the data were matched pairs: the same students threw both balls.)

This seems to work most naturally by building a pipe, a line or two at a time. See if you can do it that way. (If you can’t make it work, use lots of temporary data frames, one to hold the result of each part.)

Read in the data again from link. The variables had no names, so supply some, as you did before.

Create a new column that is the students turned into a

factor, adding it to your data frame. The reason for this will become clear later.Collect together all the throwing distances into one column, making a second column that says which ball was thrown.

Using your new data frame, make a “scatterplot” of throwing distance against type of ball.

Add two things to your plot: something that will distinguish the students by colour (this works best if the thing distinguished by colour is a factor),1 and something that will join the two points for the same student by a line.

The legend is not very informative. Remove it from the plot, using

guides.What do you see on the final spaghetti plot? What does that tell you about the relative distances a student can throw a baseball vs. a softball? Explain briefly, blah blah blah.

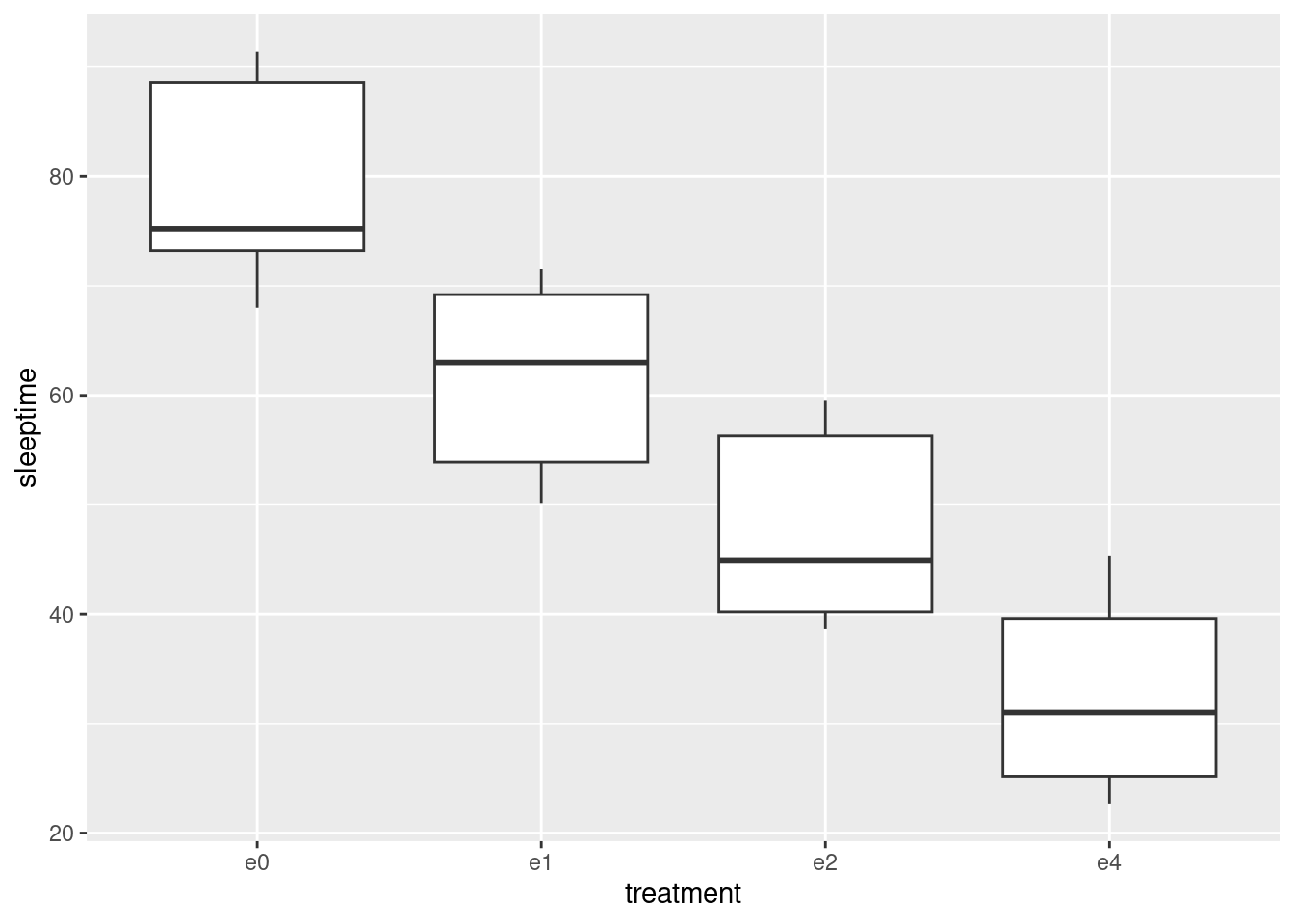

17.2 Ethanol and sleep time in rats

A biologist wished to study the effects of ethanol on sleep time in rats. A sample of 20 rats (all the same age) was selected, and each rat was given an injection having a particular concentration (0, 1, 2 or 4 grams per kilogram of body weight) of ethanol. These are labelled e0, e1, e2, e4. The “0” treatment was a control group. The rapid eye movement (REM) sleep time was then recorded for each rat. The data are in link.

Read the data in from the file. Check that you have four rows of observations and five columns of sleep times.

Unfortunately, the data are in the wrong format. All the sleep times for each treatment group are on one row, and we should have one column containing all the sleep times, and the corresponding row should show which treatment group that sleep time came from. Transform this data frame into one that you could use for modelling or making graphs.

Using your new data frame, make side-by-side boxplots of sleep time by treatment group.

In your boxplots, how does the median sleep time appear to depend on treatment group?

There is an assumption about spread that the analysis of variance needs in order to be reliable. Do your boxplots indicate that this assumption is satisfied for these data, bearing in mind that you have only five observations per group?

Run an analysis of variance to see whether sleep time differs significantly among treatment groups. What do you conclude?

Would it be a good idea to run Tukey’s method here? Explain briefly why or why not, and if you think it would be a good idea, run it.

What do you conclude from Tukey’s method? (This is liable to be a bit complicated.) Is there a treatment that is clearly best, in terms of the sleep time being largest?

17.3 Growth of tomatoes

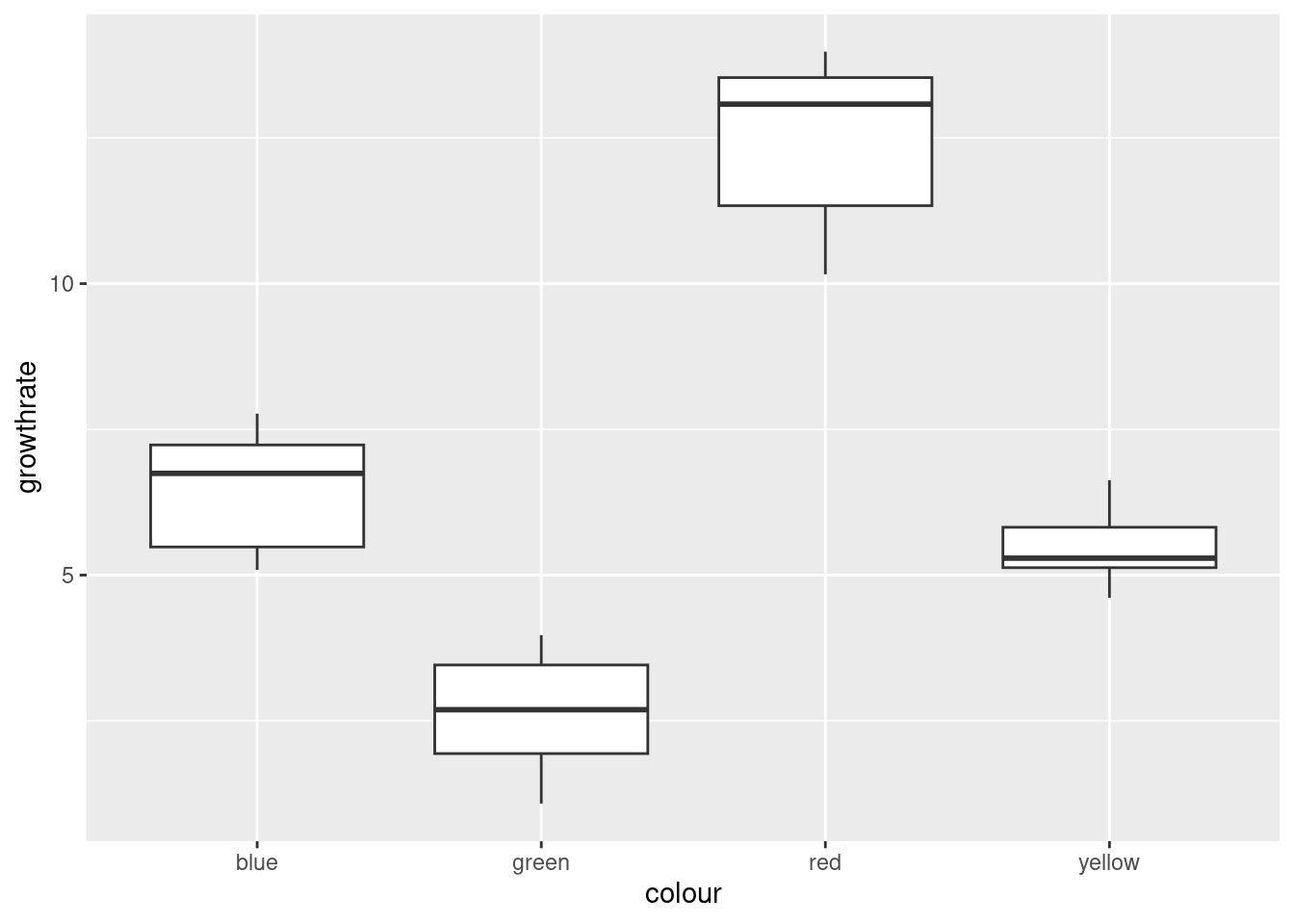

A biology graduate student exposed each of 32 tomato plants to one of four different colours of light (8 plants to each colour). The growth rate of each plant, in millimetres per week, was recorded. The data are in link.

Read the data into R and confirm that you have 8 rows and 5 columns of data.

Re-arrange the data so that you have one column containing all the growth rates, and another column saying which colour light each plant was exposed to. (The aim here is to produce something suitable for feeding into

aovlater.)Save the data in the new format to a text file. This is most easily done using

write_csv, which is the opposite ofread_csv. It requires two things: a data frame, and the name of a file to save in, which should have a.csvextension.Make a suitable boxplot, and use it to assess the assumptions for ANOVA. What do you conclude? Explain briefly.

Run (regular) ANOVA on these data. What do you conclude? (Optional extra: if you think that some other variant of ANOVA would be better, run that as well and compare the results.)

If warranted, run a suitable follow-up. (If not warranted, explain briefly why not.)

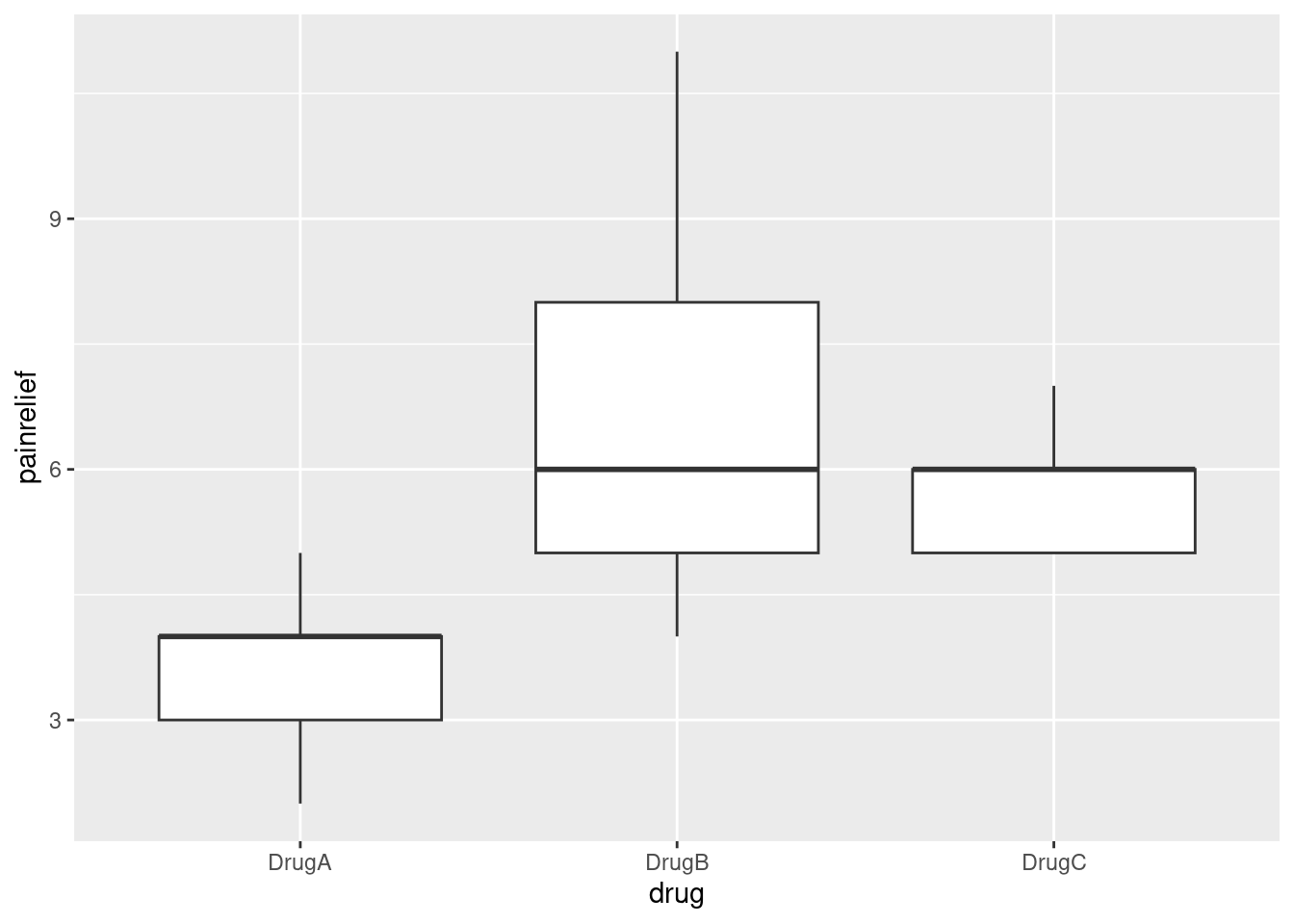

17.4 Pain relief in migraine headaches (again)

The data in link are from a study of pain relief in migraine headaches. Specifically, 27 subjects were randomly assigned to receive one of three pain relieving drugs, labelled A, B and C. Each subject reported the number of hours of pain relief they obtained (that is, the number of hours between taking the drug and the migraine symptoms returning). A higher value is therefore better. Can we make some recommendation about which drug is best for the population of migraine sufferers?

Read in and display the data. Take a look at the data file first, and see if you can say why

read_tablewill work andread_delimwill not.What is it about the experimental design that makes a one-way analysis of variance plausible for data like this?

What is wrong with the current format of the data as far as doing a one-way ANOVA analysis is concerned? (This is related to the idea of whether or not the data are “tidy”.)

“Tidy” the data to produce a data frame suitable for your analysis.

Go ahead and run your one-way ANOVA (and Tukey if necessary). Assume for this that the pain relief hours in each group are sufficiently close to normally distributed with sufficiently equal spreads.

What recommendation would you make about the best drug or drugs? Explain briefly.

17.5 Location, species and disease in plants

The table below is a “contingency table”, showing frequencies of diseased and undiseased plants of two different species in two different locations:

Species Disease present Disease absent

Location X Location Y Location X Location Y

A 44 12 38 10

B 28 22 20 18

The data were saved as link. In that file, the columns are coded by two letters: a p or an a to denote presence or absence of disease, and an x or a y to denote location X or Y. The data are separated by multiple spaces and aligned with the variable names.

Read in and display the data.

Explain briefly how these data are not “tidy”.

Use a suitable

tidyrtool to get all the things that are the same into a single column. (You’ll need to make up a temporary name for the other new column that you create.) Show your result.Explain briefly how the data frame you just created is still not “tidy” yet.

Use one more

tidyrtool to make these data tidy, and show your result.Let’s see if we can re-construct the original contingency table (or something equivalent to it). Use the function

xtabs. This requires first a model formula with the frequency variable on the left of the squiggle, and the other variables separated by plus signs on the right. Second it requires a data frame, withdata=. Feed your data frame from the previous part intoxtabs. Save the result in a variable and display the result.Take the output from the last part and feed it into the function

ftable. How has the output been changed? Which do you like better? Explain briefly.

17.6 Mating songs in crickets

Male tree crickets produce “mating songs” by rubbing their wings together to produce a chirping sound. It is hypothesized that female tree crickets identify males of the correct species by how fast (in chirps per second) the male’s mating song is. This is called the “pulse rate”. Some data for two species of crickets are in link. The columns, which are unlabelled, are temperature and pulse rate (respectively) for Oecanthus exclamationis (first two columns) and Oecanthus niveus (third and fourth columns). The columns are separated by tabs. There are some missing values in the first two columns because fewer exclamationis crickets than niveus crickets were measured. The research question is whether males of the different species have different average pulse rates. It is also of interest to see whether temperature has an effect, and if so, what. Before we get to that, however, we have some data organization to do.

Read in the data, allowing for the fact that you have no column names. You’ll see that the columns have names

X1throughX4. This is OK.Tidy these untidy data, going as directly as you can to something tidy. (Some later parts show you how it used to be done.) Begin by: (i) adding a column of row numbers, (ii)

rename-ing the columns to species name, an underscore, and the variable contents (keepingpulserateas one word), and then usepivot_longer. Note that the column names encode two things.If you found (b) a bit much to take in, the rest of the way we take a rather more leisurely approach towards the tidying.

These data are rather far from being tidy. There need to be three variables, temperature, pulse rate and species, and there are \(14+17=31\) observations altogether. This one is tricky in that there are temperature and pulse rate for each of two levels of a factor, so I’ll suggest combining the temperature and chirp rate together into one thing for each species, then pivoting them longer (“combining”), then pivoting them wider again (“splitting”). Create new columns, named for each species, that contain the temperature and pulse rate for that species in that order, united together. For the rest of this question, start from the data frame you read in, and build a pipe, one or two steps at a time, to save creating a lot of temporary data frames.

The two columns

exclamationisandniveusthat you just created are both temperature-pulse rate combos, but for different species. Collect them together into one column, labelled by species. (This is a straighttidyrpivot_longer, even though the columns contain something odd-looking.)Now split up the temperature-pulse combos at the underscore, into two separate columns. This is

separate. When specifying what to separate by, you can use a number (“split after this many characters”) or a piece of text, in quotes (“when you see this text, split at it”).Almost there. Temperature and pulse rate are still text (because

uniteturned them into text), but they should be numbers. Create new variables that are numerical versions of temperature and pulse rate (usingas.numeric). Check that you have no extraneous variables (and, if necessary, get rid of the ones you don’t want). (Species is also text and really ought to be a factor, but having it as text doesn’t seem to cause any problems.) You can, if you like, useparse_numberinstead ofas.numeric. They should both work. The distinction I prefer to make is thatparse_numberis good for text with a number in it (that we want to pull the number out of), whileas.numericis for turning something that looks like a number but isn’t one into a genuine number.2

17.7 Number 1 songs

The data file link contains a lot of information about songs popular in 2000. This dataset is untidy. Our ultimate aim is to answer “which song occupied the #1 position for the largest number of weeks?”. To do that, we will build a pipe that starts from the data frame read in from the URL above, and finishes with an answer to the question. I will take you through this step by step. Each part will involve adding something to the pipe you built previously (possibly after removing a line or two that you used to display the previous result).

Read the data and display what you have.

The columns

x1st.weekthroughx76th.weekcontain the rank of each song in the Billboard chart in that week, with week 1 being the first week that the song appeared in the chart. Convert all these columns into two: an indication of week, calledweek, and of rank, calledrank. Most songs appeared in the Billboard chart for a lot less than 76 weeks, so there are missing values, which you want to remove. (I say “indication of week” since this will probably be text at the moment). Display your new data frame. Do you have fewer columns? Why do you have a lot more rows? Explain briefly.Both your

weekandrankcolumns are (probably) text. Create new columns that contain just the numeric values, and display just your new columns, again adding onto the end of your pipe. If it so happens thatrankis already a number, leave it as it is.The meaning of your week-number column is that it refers to the number of weeks after the song first appeared in the Billboard chart. That is, if a song’s first appearance (in

date.entered)is July 24, then week 1 is July 24, week 2 is July 31, week 3 is August 7, and so on. Create a columncurrentby adding the appropriate number of days, based on your week number, todate.entered. Displaydate.entered, your week number, andcurrentto show that you have calculated the right thing. Note that you can add a number of days onto a date and you will get another date.Reaching the #1 rank on the Billboard chart is one of the highest accolades in the popular music world. List all the songs that reached

rank1. For these songs, list the artist (as given in the data set), the song title, and the date(s) for which the song was ranked number 1. Arrange the songs in date order of being ranked #1. Display all the songs (I found 55 of them).Use R to find out which song held the #1 rank for the largest number of weeks. For this, you can assume that the song titles are all unique (if it’s the same song title, it’s the same song), but the artists might not be (for example, Madonna might have had two different songs reach the #1 rank). The information you need is in the output you obtained for the previous part, so it’s a matter of adding some code to the end of that. The last mark was for displaying only the song that was ranked #1 for the largest number of weeks, or for otherwise making it easy to see which song it was.

17.8 Bikes on College

The City of Toronto collects all kinds of data on aspects of life in the city. See link. One collection of data is records of the number of cyclists on certain downtown streets. The data in link are a record of the cyclists on College Street on the block west from Huron to Spadina on September 24, 2010. In the spreadsheet, each row relates to one cyclist. The first column is the time the cyclist was observed (to the nearest 15 minutes). After that, there are four pairs of columns. The observer filled in (exactly) one X in each pair of columns, according to whether (i) the cyclist was male or female, (ii) was or was not wearing a helmet, (iii) was or was not carrying a passenger on the bike, (iv) was or was not riding on the sidewalk. We want to create a tidy data frame that has the time in each row, and has columns containing appropriate values, often TRUE or FALSE, for each of the four variables measured.

I will lead you through the process, which will involve developing a (long) pipe, one step at a time.

Take a look at the spreadsheet (using Excel or similar: this may open when you click the link). Are there any obvious header rows? Is there any extra material before the data start? Explain briefly.

Read the data into an R data frame. Read without headers, and instruct R how many lines to skip over using

skip=and a suitable number. When this is working, display the first few lines of your data frame. Note that your columns have namesX1throughX9.What do you notice about the times in your first column? What do you think those “missing” times should be?

Find something from the

tidyversethat will fill3 in those missing values with the right thing. Start a pipe from the data frame you read in, that updates the appropriate column with the filled-in times.R’s

ifelsefunction works like=IFin Excel. You use it to create values for a new variable, for example in amutate. The first input to it is a logical condition (something that is either true or false); the second is the value your new variable should take if the condition is true, and the third is the value of your new variable if the condition is false. Create a new columngenderin your data frame that is “male” or “female” depending on the value of yourX2column, usingmutate. (You can assume that exactly one of the second and third columns has anXin it.) Add your code to the end of your pipe and display (the first 10 rows of) the result.Create variables

helmet,passengerandsidewalkin your data frame that areTRUEif the “Yes” column containsXandFALSEotherwise. This will usemutateagain, but you don’t needifelse: just set the variable equal to the appropriate logical condition. As before, the best way to create these variables is to test the appropriate things for missingness. Note that you can create as many new variables as you like in onemutate. Show the first few lines of your new data frame. (Add your code onto the end of the pipe you made above.)Finally (for the data manipulation), get rid of all the original columns, keeping only the new ones that you created. Save the results in a data frame and display its first few rows.

The next few parts are a quick-fire analysis of the data set. They can all be solved using

count. How many male and how many female cyclists were observed in total?How many male and female cyclists were not wearing helmets?

How many cyclists were riding on the sidewalk and carrying a passenger?

What was the busiest 15-minute period of the day, and how many cyclists were there then?

17.9 Feeling the heat

In summer, the city of Toronto issues Heat Alerts for “high heat or humidity that is expected to last two or more days”. The precise definitions are shown at link. During a heat alert, the city opens Cooling Centres and may extend the hours of operation of city swimming pools, among other things. All the heat alert days from 2001 to 2016 are listed at link.

The word “warning” is sometimes used in place of “alert” in these data. They mean the same thing.4

- Read the data into R, and display the data frame. Note that there are four columns:

a numerical

id(numbered upwards from the first Heat Alert in 2001; some of the numbers are missing)the

dateof the heat alert, in year-month-day format with 4-digit years.a text

codefor the type of heat alerttextdescribing the kind of heat alert. This can be quite long.

In your data frame, are the dates stored as genuine dates or as text? How can you tell?

Which different heat alert codes do you have, and how many of each?

Use the

textin your dataset (or look back at the original data file) to describe briefly in your own words what the various codes represent.How many (regular and extended) heat alert events are there altogether? A heat alert event is a stretch of consecutive days, on all of which there is a heat alert or extended heat alert. Hints: (i) you can answer this from output you already have; (ii) how can you tell when a heat alert event starts?

We are going to investigate how many heat alert days there were in each year. To do that, we have to extract the year from each of our dates.

Count the number of heat alert days for each year, by tabulating the year variable. Looking at this table, would you say that there have been more heat alert days in recent years? Explain (very) briefly.

17.10 Isoflavones

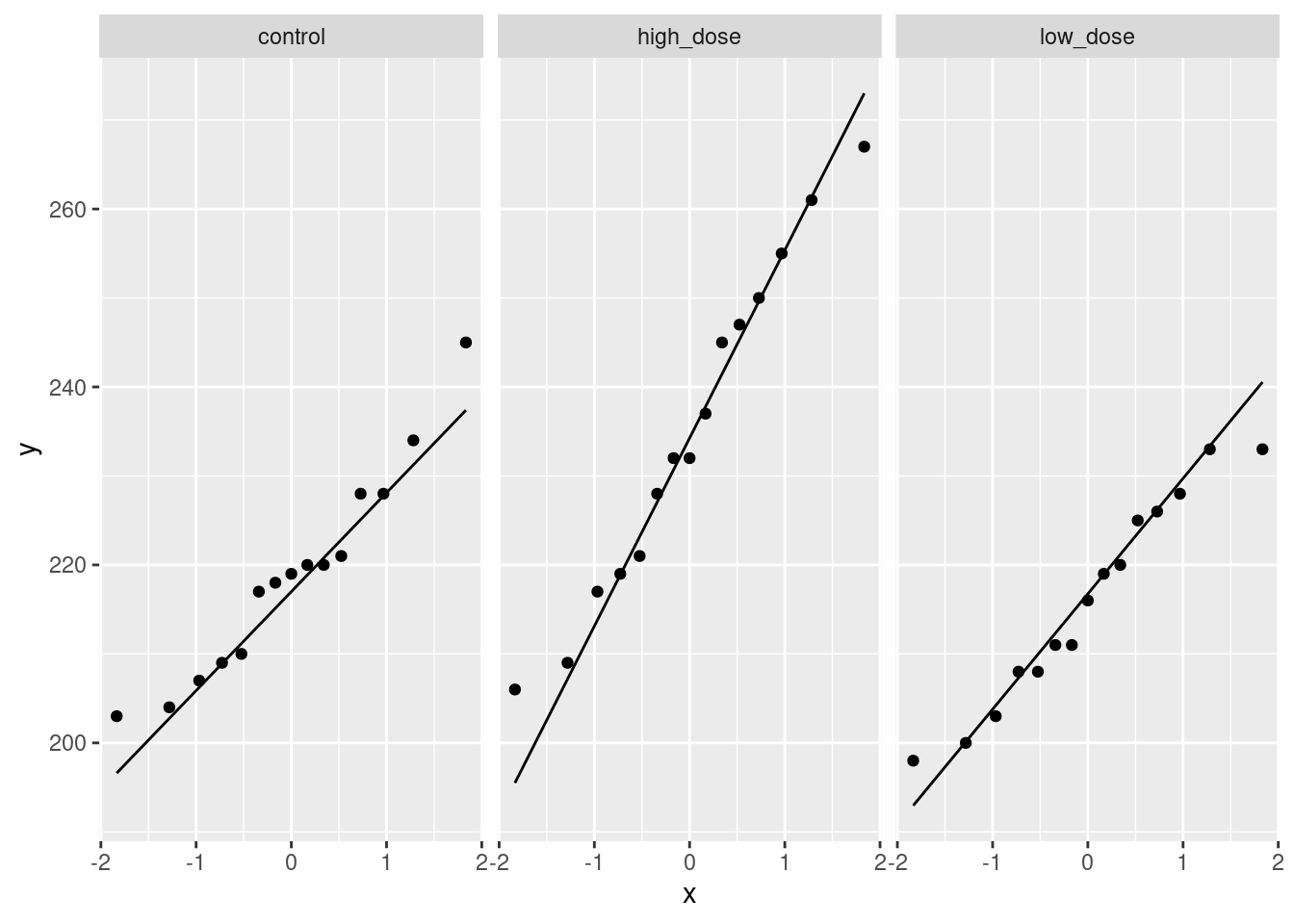

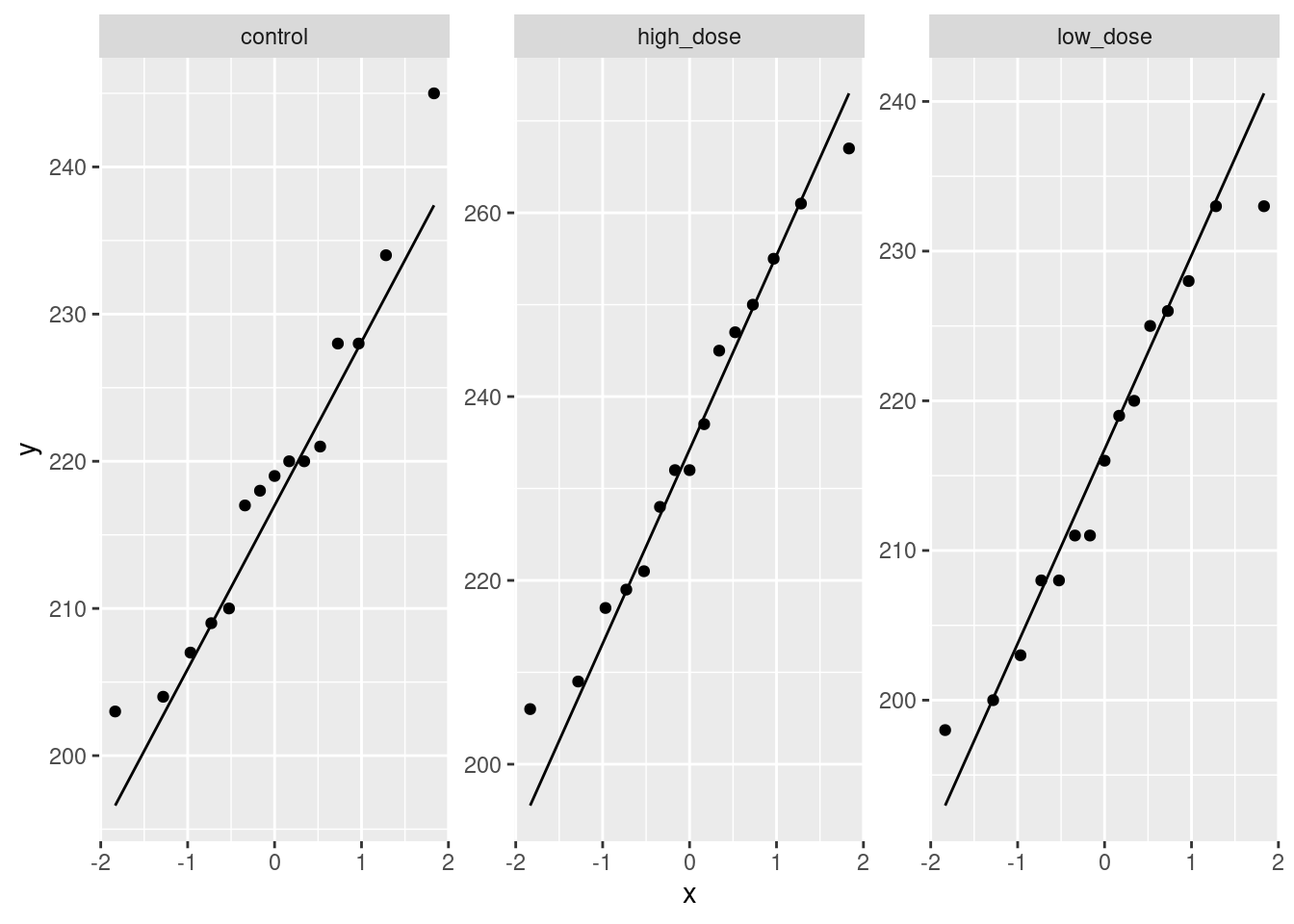

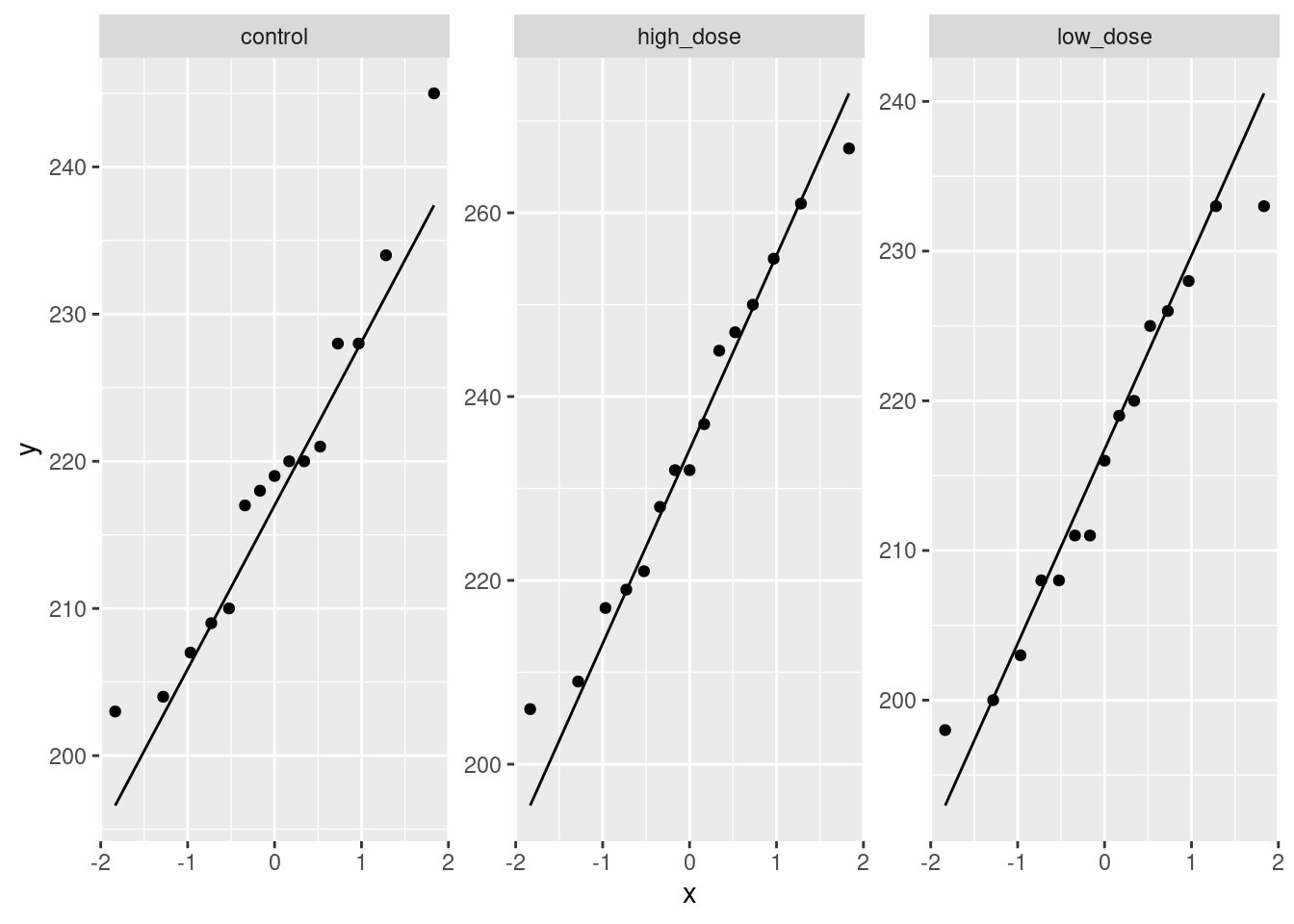

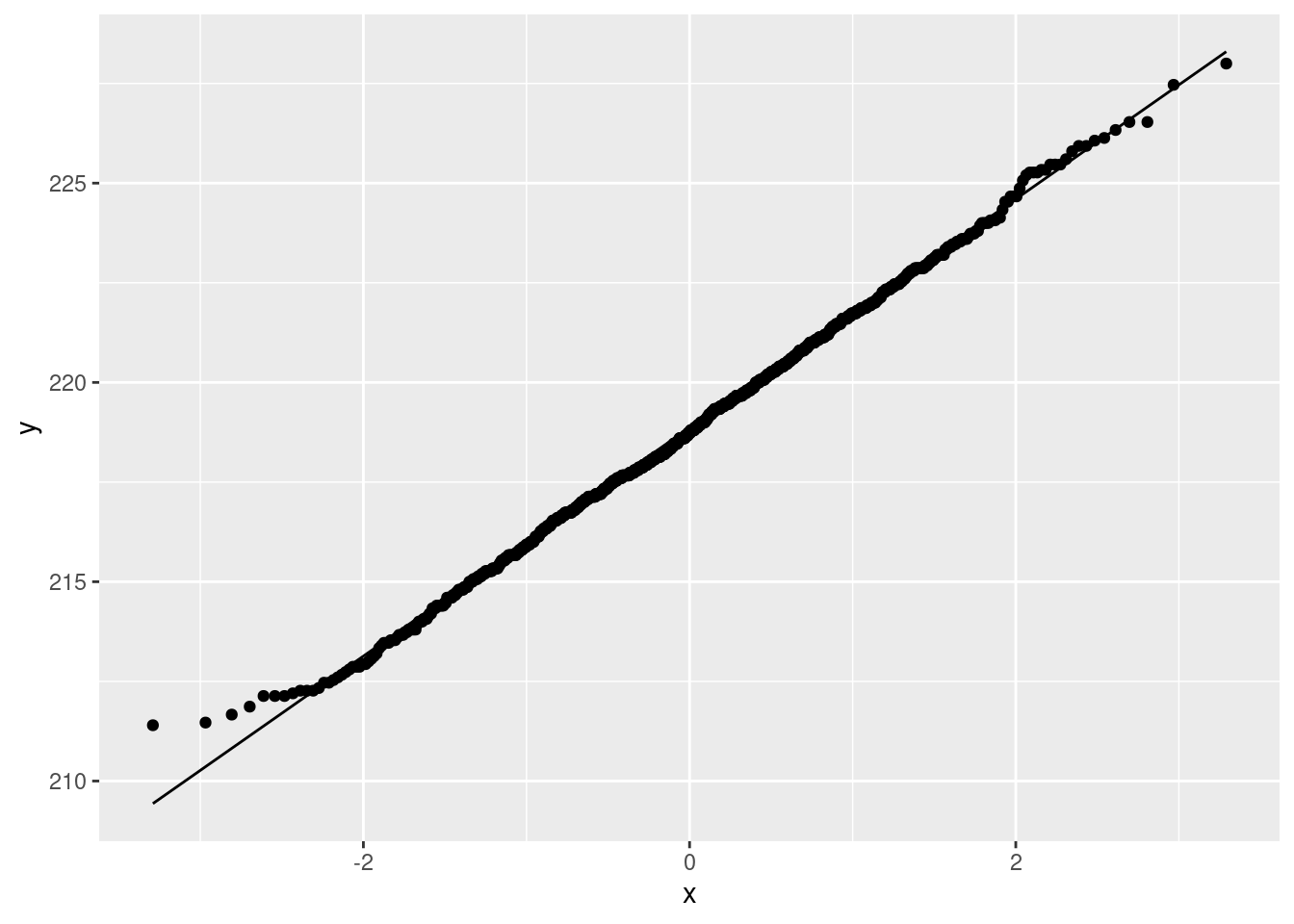

The plant called kudzu was imported to the US South from Japan. It is rich in isoflavones, which are believed to be beneficial for bones. In a study, rats were randomly assigned to one of three diets: one with a low dose of isoflavones from kudzu, one with a high dose, and a control diet with no extra isoflavones. At the end of the study, each rat’s bone density was measured, in milligrams per square centimetre. The data as recorded are shown in http://ritsokiguess.site/isoflavones.txt.5 There are 15 observations for each treatment, and hence 45 altogether.

Here are some code ideas you might need to use later, all part of the tidyverse. You may need to find out how they work.

col_names(in theread_functions)convert(in varioustidyversefunctions)fillna_ifrenameseparate_rowsskip(in theread_functions)values_drop_na(in thepivot_functions)

If you use any of these, cite the webpage(s) or other source(s) where you learned about them.

Take a look at the data file. Describe briefly what you see.

Read in the data, using

read_table, and get it into a tidy form, suitable for making a graph. This means finishing with (at least) a column of treatments with a suitable name (the treatments will be text) and a column of bone density values (numbers), one for each rat. You can have other columns as well; there is no obligation to get rid of them. Describe your process clearly enough that someone new to this data set would be able to understand what you have done and reproduce it on another similar dataset. Before you begin, think about whether or not you want to keep the column headers that are in the data file or not. (It can be done either way, but one way is easier than the other.)The statistician on this study is thinking about running an ordinary analysis of variance to compare the bone mineral density for the different treatments. Obtain a plot from your tidy dataframe that will help her decide whether that is a good idea.

Based on your graph, and any additional graphs you wish to draw, what analysis would you recommend for this dataset? Explain briefly. (Don’t do the analysis.)

17.11 Jocko’s Garage

Insurance adjusters are concerned that Jocko’s Garage is giving estimates for repairing car damage that are too high. To see whether this is indeed the case, ten cars that had been in collisions were taken to both Jocko’s Garage and another garage, and the two estimates for repair were recorded. The data as recorded are here.

Take a look at the data file (eg. by using your web browser). How are the data laid out? Do there appear to be column headers?

Read in and display the data file, bearing in mind what you just concluded about it. What names did the columns acquire?

Make this data set tidy. That is, you need to end up with columns containing the repair cost estimates at each of the two garages and also identifying the cars, with each observation on one row. Describe your thought process. (It needs to be possible for the reader to follow your description and understand why it works.) Save your tidy dataframe.

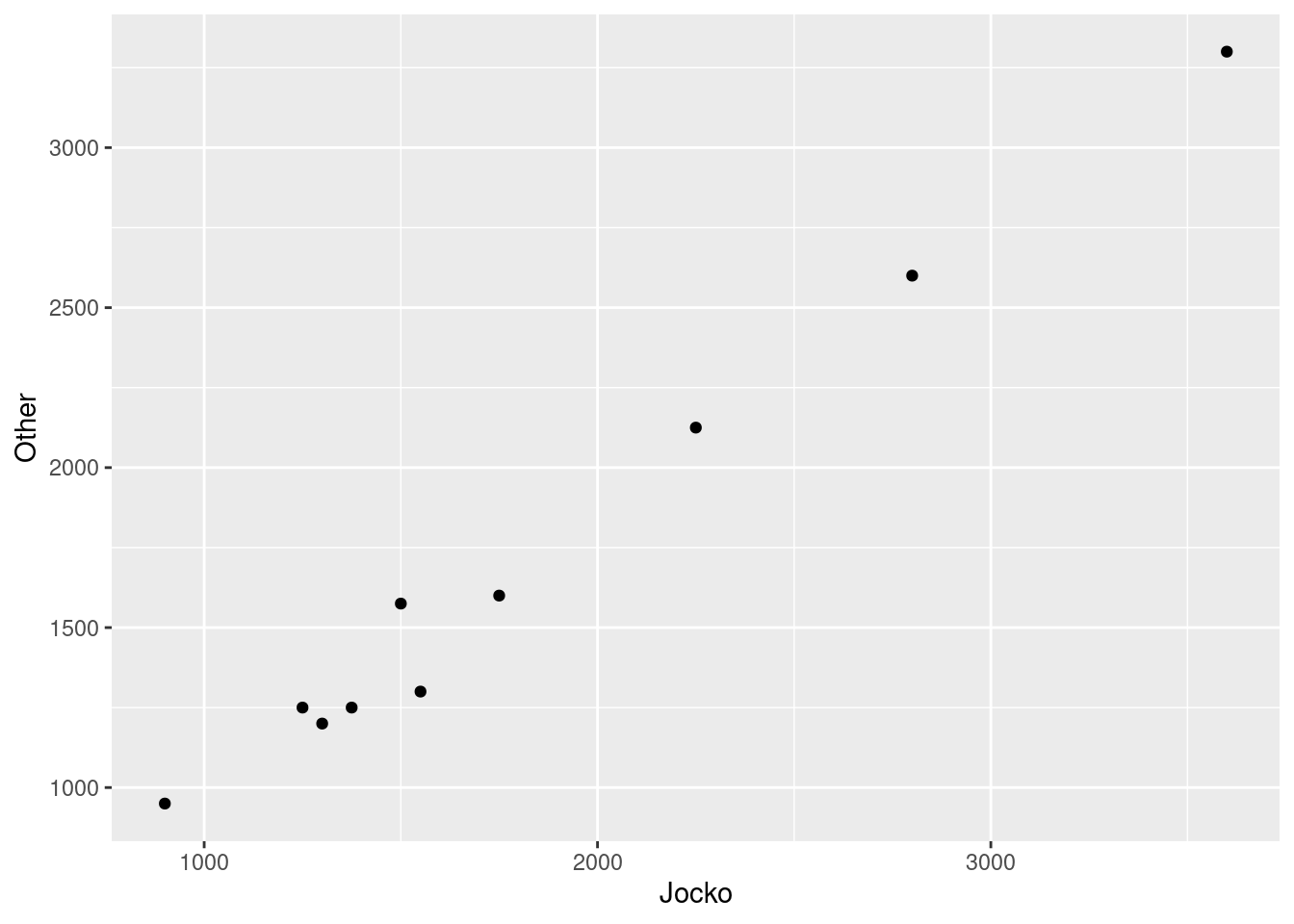

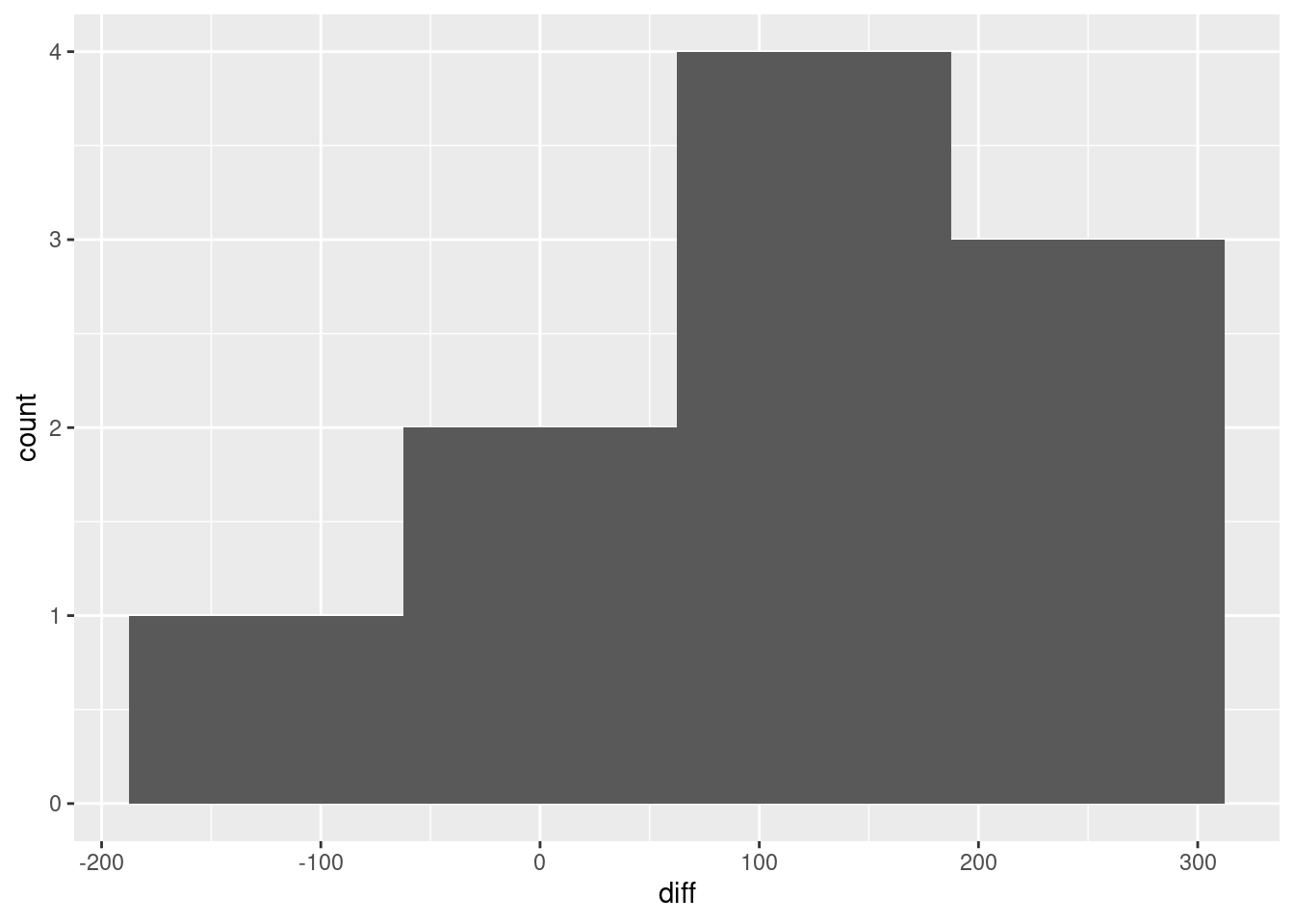

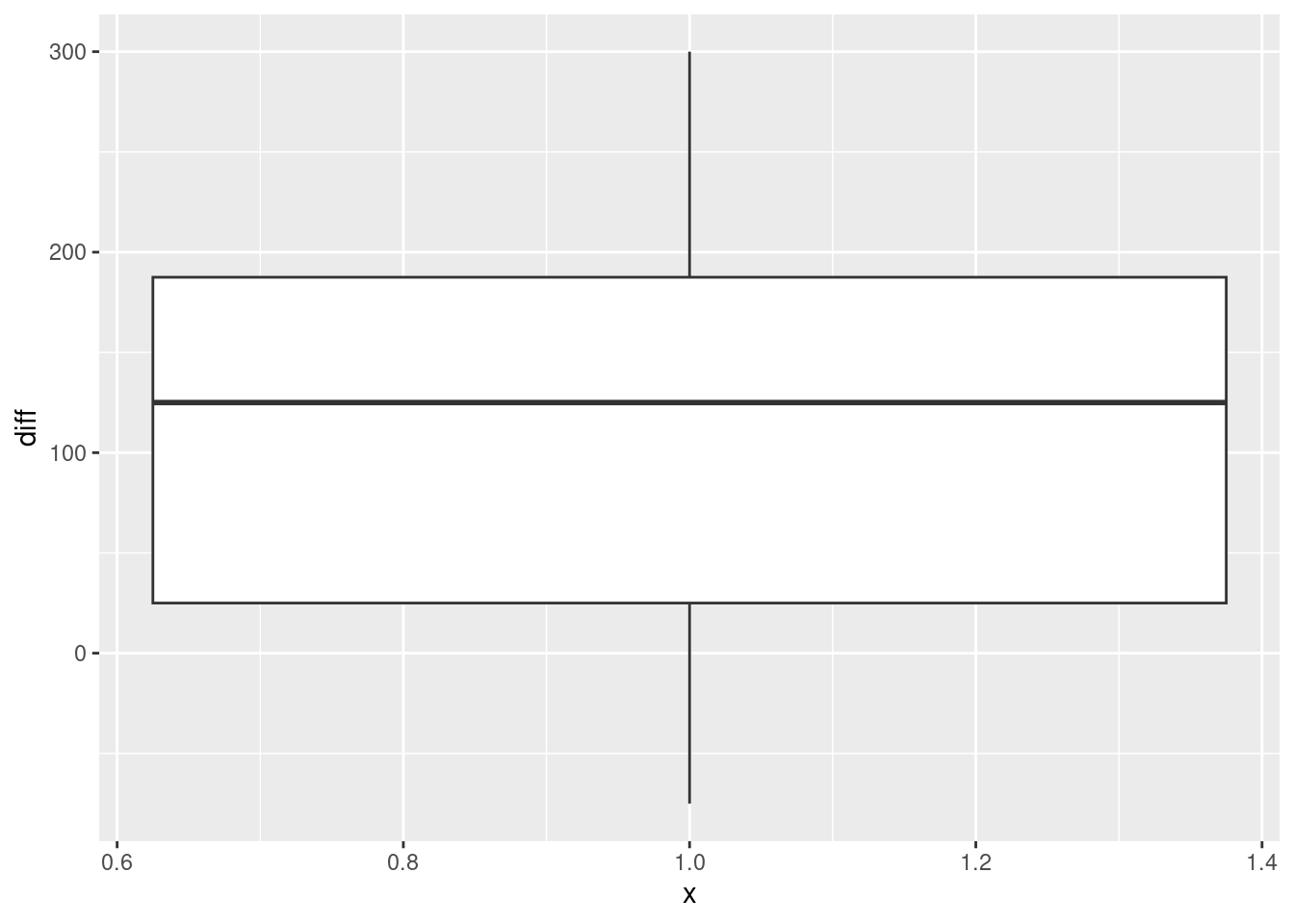

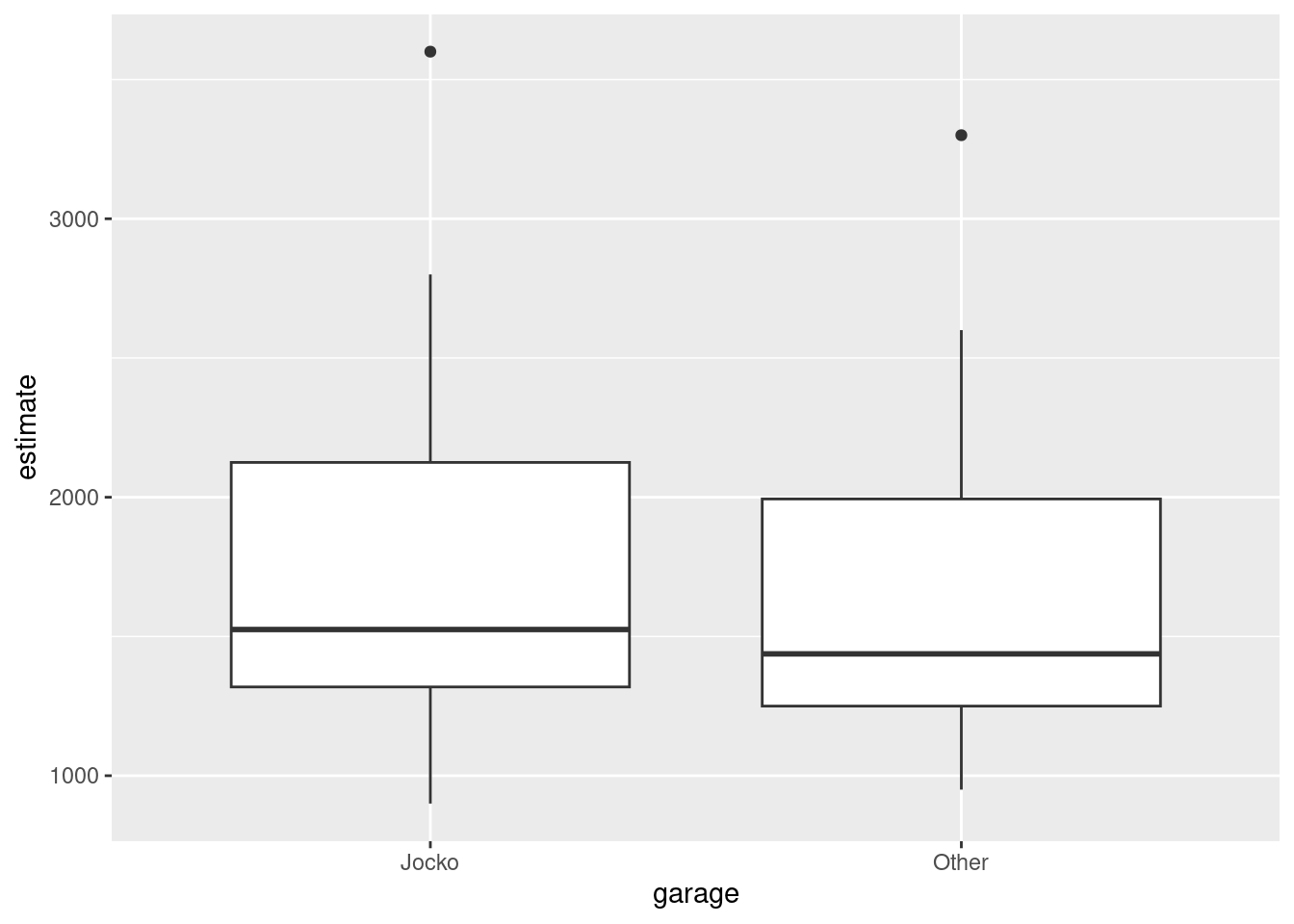

Make a suitable graph to assess the comparison of interest, and say briefly what your graph tells you.

Carry out a test to make an appropriate comparison of the mean estimates. What do you conclude, in the context of the data?

17.12 Tidying electricity consumption

How does the consumption of electricity depend on temperature? To find out, a short-term study was carried out by a utility company based in a certain area. For a period of two years, the average monthly temperature was recorded (in degrees Fahrenheit), the mean daily demand for electricity per household (in kilowatt hours), and the cost per kilowatt hour of electricity for that year (8 cents for the first year and 10 cents for the second, which it will be easiest to treat as categorical).

The data were laid out in an odd way, as shown in http://ritsokiguess.site/datafiles/utils.txt, in aligned columns: the twelve months of temperature were laid out on two lines for the first year, then the twelve months of consumption for the first year on the next two lines, and then four more lines for the second year laid out the same way. Thus the temperature of 31 in the first line goes with the consumption of 55 in the third line, and the last measurements for that year are the 78 at the end of the second line (temperature) and 73 at the end of the fourth line (consumption). Lines 5 through 8 of the data file are the same thing for the second year (when electricity was more expensive).

The data seem to have been laid out in order of temperature, rather than in order of months, which I would have thought would make more sense. But this is what we have.

Read in and display the data file, bearing in mind that it has no column names.

Arrange these data tidily, so that there is a column of price (per kilowatt hour), a column of temperatures, and a column of consumptions. Describe your process, including why you got list-columns (if you did) and what you did about them (if necessary).

Make a suitable graph of temperature, consumption and price in your tidy dataframe. Add smooth trends if appropriate. If you were unable to get the data tidy, use my tidy version here. (If you need the other file, right-click on “here” and Copy Link Address.)

What patterns or trends do you see on your graph? Do they make practical sense? There are two things I would like you to comment on.

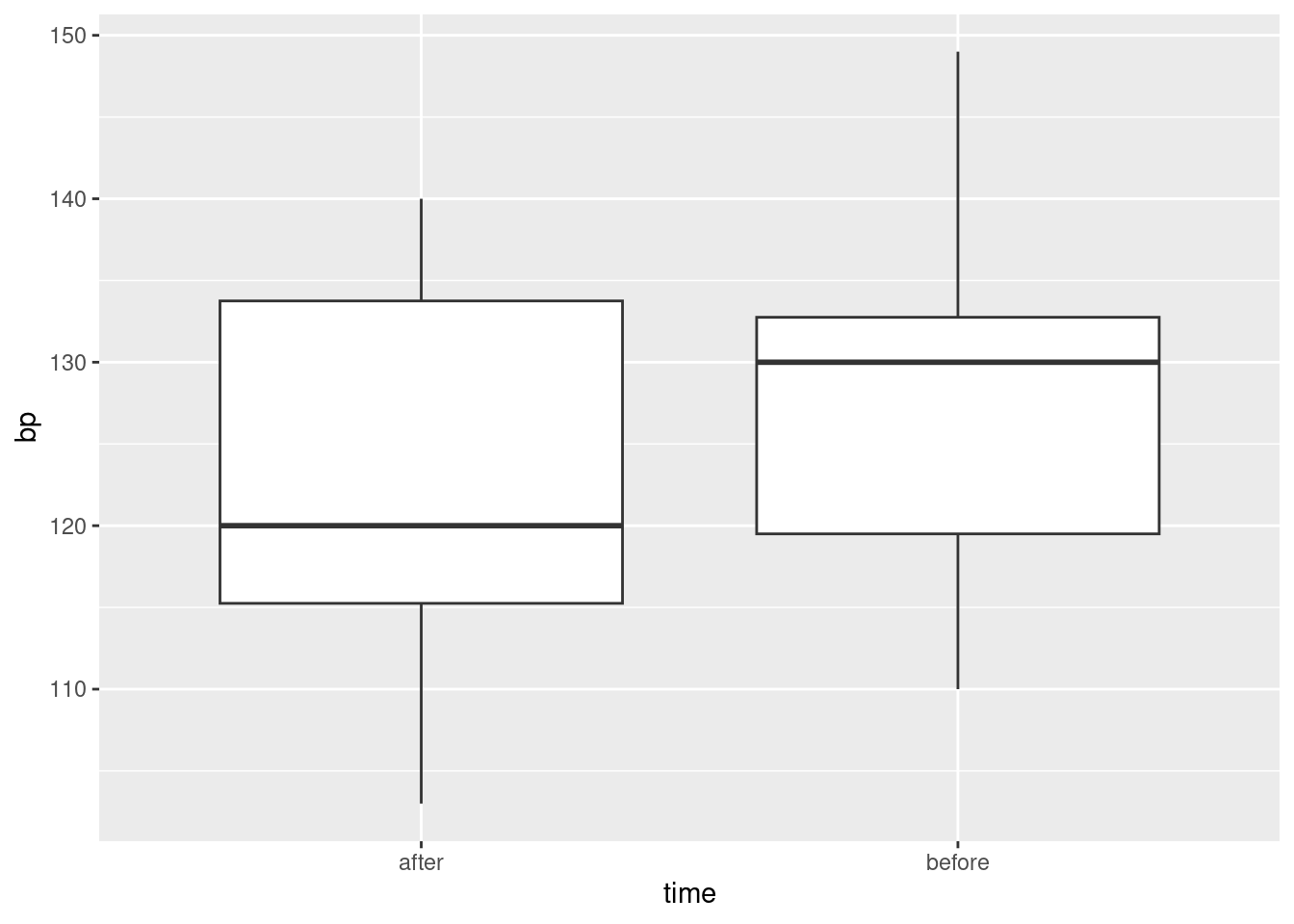

17.13 Tidy blood pressure

Going to the dentist is scary for a lot of people. One way in which this might show up is that people might have higher blood pressure on average before their dentist’s appointment than an hour after the appointment is done. Ten randomly-chosen individuals have their (systolic6) blood pressure measured while they are in a dentist’s waiting room, and then again one hour after their appointment is finished.

You might have seen a tidy version of this data set before.

The data as I originally received it is in http://ritsokiguess.site/datafiles/blood_pressure2.csv.

Read in and display the data as originally received.

Describe briefly how the data you read in is not tidy, bearing in mind how the data were collected and how they would be analysed.

Produce a tidy dataframe from the one you read in from the file. (How many rows should you have?)

What kind of test might you run on these data? Explain briefly.

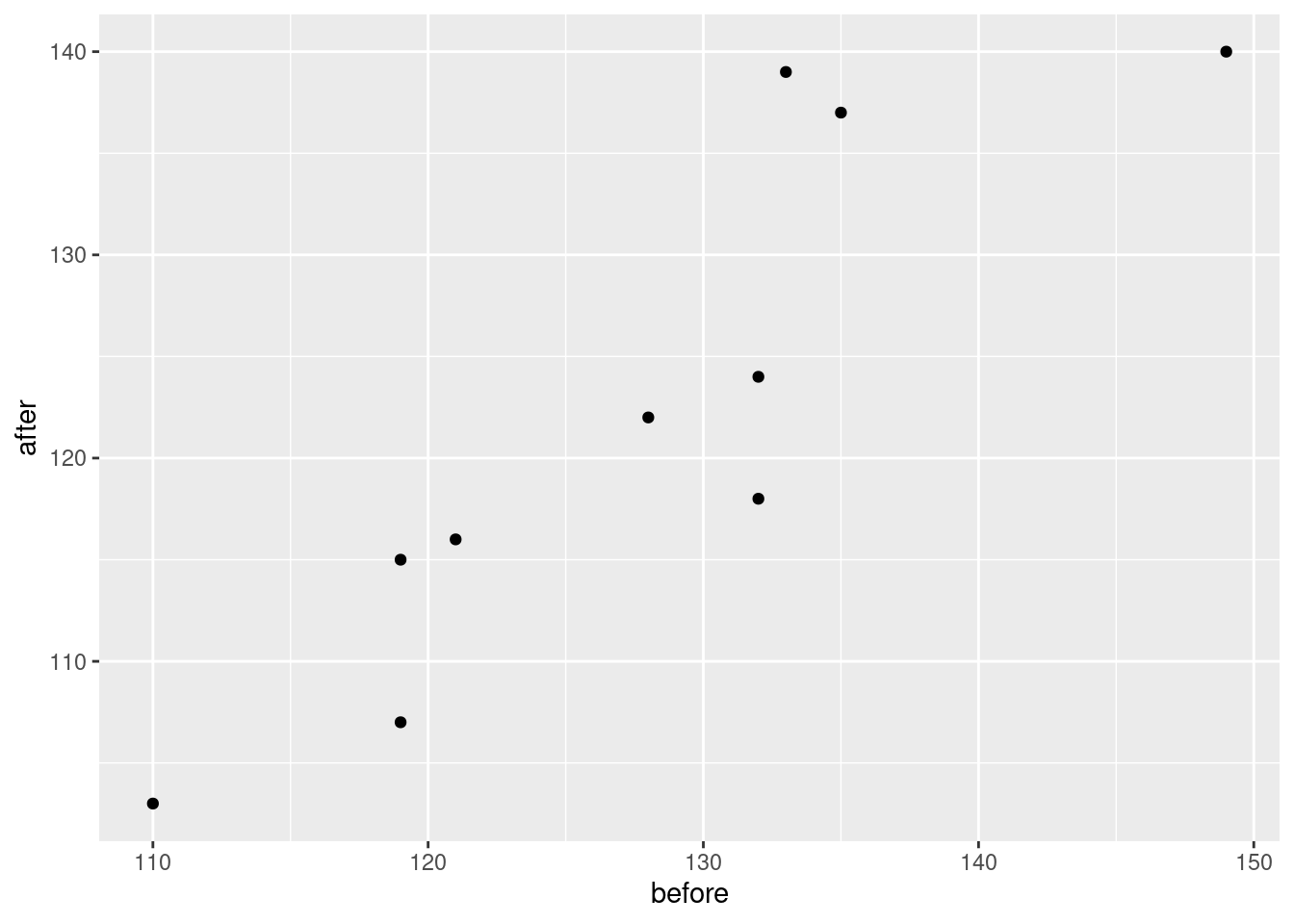

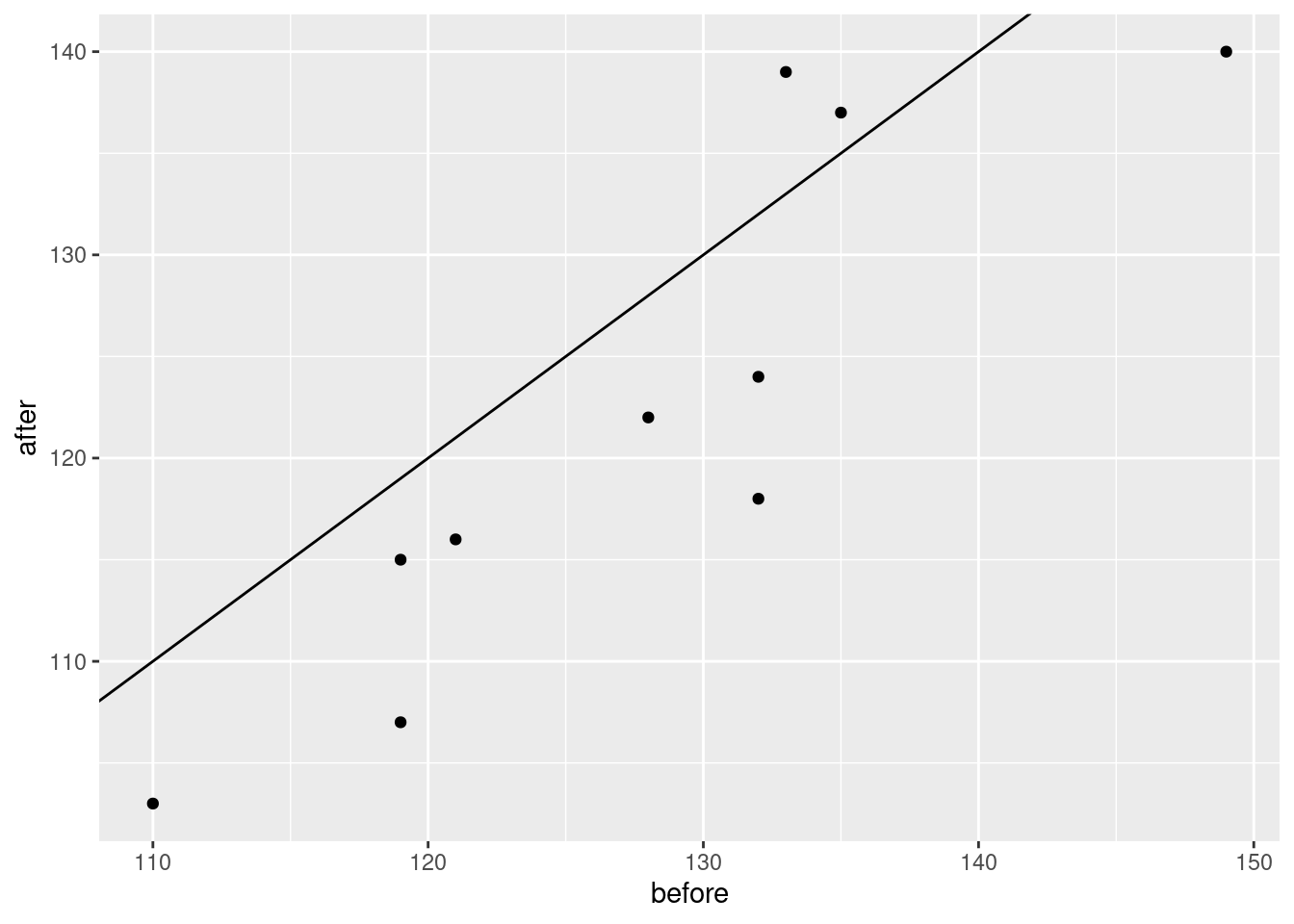

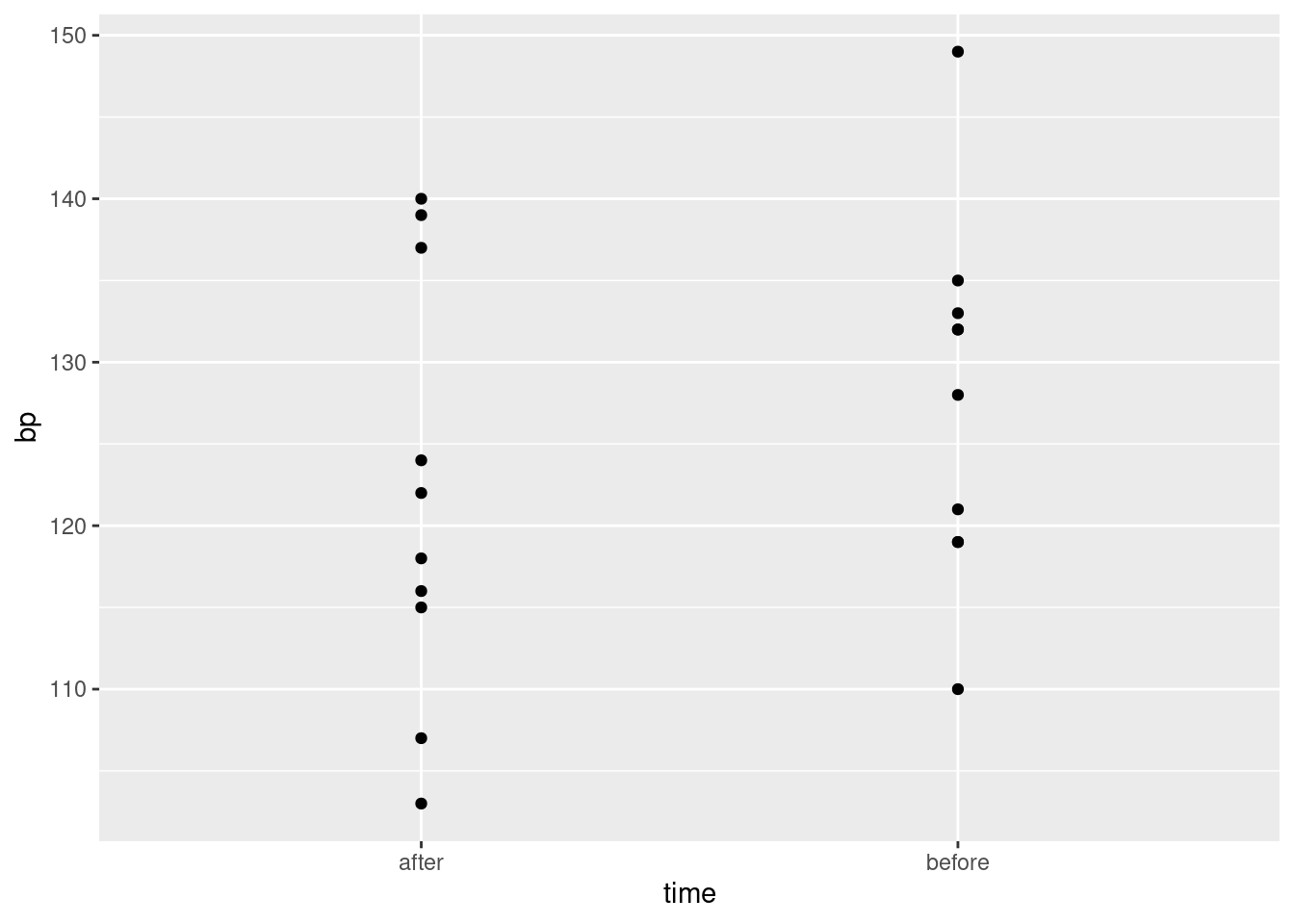

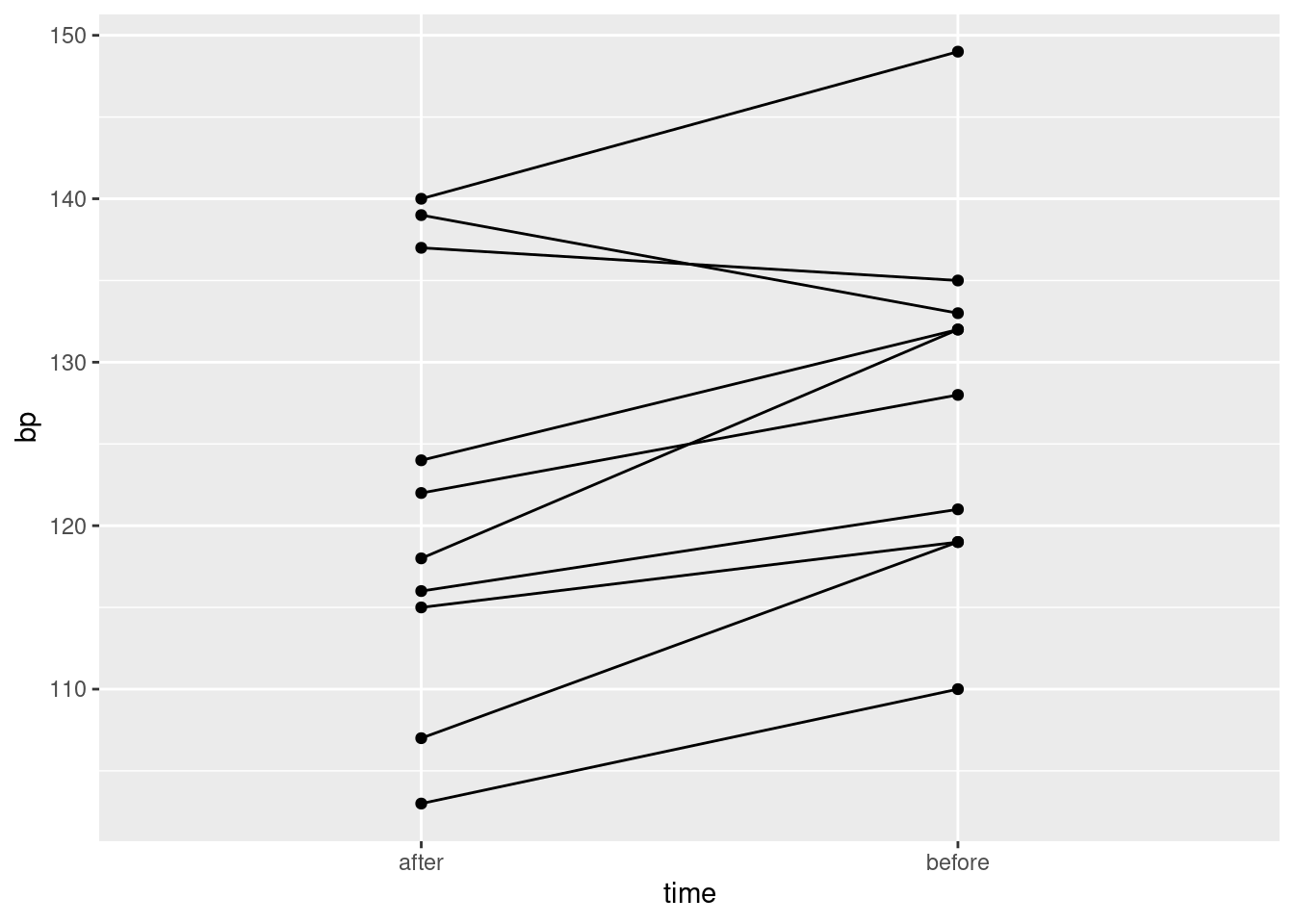

Draw a suitable graph of these data.

My solutions follow:

17.14 Baseball and softball spaghetti

On a previous assignment, we found that students could throw a baseball further than they could throw a softball. In this question, we will make a graph called a “spaghetti plot” to illustrate this graphically. (The issue previously was that the data were matched pairs: the same students threw both balls.)

This seems to work most naturally by building a pipe, a line or two at a time. See if you can do it that way. (If you can’t make it work, use lots of temporary data frames, one to hold the result of each part.)

- Read in the data again from link. The variables had no names, so supply some, as you did before.

Solution

Literal copy and paste:

myurl <- "http://ritsokiguess.site/datafiles/throw.txt"

throws <- read_delim(myurl, " ", col_names = c("student", "baseball", "softball"))Rows: 24 Columns: 3

── Column specification ────────────────────────────────────────────────────────

Delimiter: " "

dbl (3): student, baseball, softball

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.throws\(\blacksquare\)

- Create a new column that is the students turned into a

factor, adding it to your data frame. The reason for this will become clear later.

Solution

Feed student into factor, creating a new column with mutate:

throws %>% mutate(fs = factor(student))This doesn’t look any different from the original student numbers, but note the variable type at the top of the column.

\(\blacksquare\)

- Collect together all the throwing distances into one column, making a second column that says which ball was thrown.

Solution

Use pivot_longer. It goes like this:

throws %>%

mutate(fs = factor(student)) %>%

pivot_longer(baseball:softball, names_to="ball", values_to="distance")The names_to is the name of a new categorical column whose values will be what is currently column names, and the values_to names a new quantitative (usually) column that will hold the values in those columns that you are making longer.

If you want to show off a little, you can use a select-helper, noting that the columns you want to make longer all end in “ball”:

throws %>%

mutate(fs = factor(student)) %>%

pivot_longer(ends_with("ball"), names_to="ball", values_to="distance")The same result. Use whichever you like.

\(\blacksquare\)

- Using your new data frame, make a “scatterplot” of throwing distance against type of ball.

Solution

The obvious thing. No data frame in the ggplot because it’s the data frame that came out of the previous part of the pipeline (that doesn’t have a name):

throws %>%

mutate(fs = factor(student)) %>%

pivot_longer(baseball:softball, names_to="ball", values_to="distance") %>%

ggplot(aes(x = ball, y = distance)) + geom_point()

This is an odd type of scatterplot because the \(x\)-axis is actually a categorical variable. It’s really what would be called something like a dotplot. We’ll be using this as raw material for the plot we actually want.

What this plot is missing is an indication of which student threw which ball. As it stands now, it could be an inferior version of a boxplot of distances thrown for each ball (which would imply that they are two independent sets of students, something that is not true).

\(\blacksquare\)

- Add two things to your plot: something that will distinguish the students by colour (this works best if the thing distinguished by colour is a factor),7 and something that will join the two points for the same student by a line.

Solution

A colour and a group in the aes, and a geom_line:

throws %>%

mutate(fs = factor(student)) %>%

pivot_longer(baseball:softball, names_to="ball", values_to="distance") %>%

ggplot(aes(x = ball, y = distance, group = fs, colour = fs)) +

geom_point() + geom_line()

You can see what happens if you use the student as a number:

throws %>%

mutate(fs = factor(student)) %>%

pivot_longer(baseball:softball, names_to="ball", values_to="distance") %>%

ggplot(aes(x = ball, y = distance, group = student, colour = student)) +

geom_point() + geom_line()

Now the student numbers are distinguished as a shade of blue (on an implied continuous scale: even a nonsensical fractional student number like 17.5 would be a shade of blue). This is not actually so bad here, because all we are trying to do is to distinguish the students sufficiently from each other so that we can see where the spaghetti strands go. But I like the multi-coloured one better.

\(\blacksquare\)

- The legend is not very informative. Remove it from the plot, using

guides.

Solution

You may not have seen this before. Here’s what to do: Find what’s at the top of the legend that you want to remove. Here that is fs. Find where fs appears in your aes. It actually appears in two places: in group and colour. I think the legend we want to get rid of is actually the colour one, so we do this:

throws %>%

mutate(fs = factor(student)) %>%

pivot_longer(baseball:softball, names_to="ball", values_to="distance") %>%

ggplot(aes(x = ball, y = distance, group = fs, colour = fs)) +

geom_point() + geom_line() +

guides(colour = "none")

That seems to have done it.

\(\blacksquare\)

- What do you see on the final spaghetti plot? What does that tell you about the relative distances a student can throw a baseball vs. a softball? Explain briefly, blah blah blah.

Solution

Most of the spaghetti strands go downhill from baseball to softball, or at least very few of them go uphill. That tells us that most students can throw a baseball further than a softball. That was the same impression that the matched-pairs \(t\)-test gave us. But the spaghetti plot tells us something else. If you look carefully, you see that most of the big drops are for students who could throw a baseball a long way. These students also threw a softball further than the other students, but not by as much. Most of the spaghetti strands in the bottom half of the plot go more or less straight across. This indicates that students who cannot throw a baseball very far will throw a softball about the same distance as they threw the baseball. There is an argument you could make here that the difference between distances thrown is a proportional one, something like “a student typically throws a baseball 20% further than a softball”. That could be assessed by comparing not the distances themselves, but the logs of the distances: in other words, making a log transformation of all the distances. (Distances have a lower limit of zero, so you might expect observed distances to be skewed to the right, which is another argument for making some kind of transformation.)

\(\blacksquare\)

17.15 Ethanol and sleep time in rats

A biologist wished to study the effects of ethanol on sleep time in rats. A sample of 20 rats (all the same age) was selected, and each rat was given an injection having a particular concentration (0, 1, 2 or 4 grams per kilogram of body weight) of ethanol. These are labelled e0, e1, e2, e4. The “0” treatment was a control group. The rapid eye movement (REM) sleep time was then recorded for each rat. The data are in link.

- Read the data in from the file. Check that you have four rows of observations and five columns of sleep times.

Solution

Separated by single spaces:

my_url <- "http://ritsokiguess.site/datafiles/ratsleep.txt"

sleep1 <- read_delim(my_url, " ")Rows: 4 Columns: 6

── Column specification ────────────────────────────────────────────────────────

Delimiter: " "

chr (1): treatment

dbl (5): obs1, obs2, obs3, obs4, obs5

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.sleep1There are six columns, but one of them labels the groups, and there are correctly five columns of sleep times.

I used a “temporary” name for my data frame, because I’m going to be doing some processing on it in a minute, and I want to reserve the name sleep for my processed data frame.

\(\blacksquare\)

- Unfortunately, the data are in the wrong format. All the sleep times for each treatment group are on one row, and we should have one column containing all the sleep times, and the corresponding row should show which treatment group that sleep time came from. Transform this data frame into one that you could use for modelling or making graphs.

Solution

We will want one column of sleep times, with an additional categorical column saying what observation each sleep time was within its group (or, you might say, we don’t really care about that much, but that’s what we are going to get).

The columns obs1 through obs5 are different in that they are different observation numbers (“replicates”, in the jargon). I’ll call that rep. What makes them the same is that they are all sleep times. Columns obs1 through obs5 are the ones we want to combine, thus. Here is where I use the name sleep: I save the result of the pivot_longer into a data frame sleep. Note that I also used the brackets-around-the-outside to display what I had, so that I didn’t have to do a separate display. This is a handy way of saving and displaying in one shot:

(sleep1 %>%

pivot_longer(-treatment, names_to="rep", values_to="sleeptime") -> sleep)Typically in this kind of work, you have a lot of columns that need to be made longer, and a much smaller number of columns that need to be repeated as necessary. You can either specify all the columns to make longer, or you can specify “not” the other columns. Above, my first input to pivot_longer was “everything but treatment”, but you could also do it like this:

sleep1 %>%

pivot_longer(obs1:obs5, names_to="rep", values_to="sleeptime") or like this:

sleep1 %>%

pivot_longer(starts_with("obs"), names_to="rep", values_to="sleeptime") This one was a little unusual in that usually with these you have the treatments in the columns and the replicates in the rows. It doesn’t matter, though: pivot_longer handles both cases.

We have 20 rows of 3 columns. I got all the rows, but you will probably get an output with ten rows as usual, and will need to click Next to see the last ten rows. The initial display will say how many rows (20) and columns (3) you have.

The column rep is not very interesting: it just says which observation each one was within its group.8 The interesting things are treatment and sleeptime, which are the two variables we’ll need for our analysis of variance.

\(\blacksquare\)

- Using your new data frame, make side-by-side boxplots of sleep time by treatment group.

Solution

ggplot(sleep, aes(x = treatment, y = sleeptime)) + geom_boxplot()

\(\blacksquare\)

- In your boxplots, how does the median sleep time appear to depend on treatment group?

Solution

It appears to decrease as the dose of ethanol increases, and pretty substantially so (in that the differences ought to be significant, but that’s coming up).

\(\blacksquare\)

- There is an assumption about spread that the analysis of variance needs in order to be reliable. Do your boxplots indicate that this assumption is satisfied for these data, bearing in mind that you have only five observations per group?

Solution

The assumption is that the population SDs of each group are all equal. Now, the boxplots show IQRs, which are kind of a surrogate for SD, and because we only have five observations per group to base the IQRs on, the sample IQRs might vary a bit. So we should look at the heights of the boxes on the boxplot, and see whether they are grossly unequal. They appear to be to be of very similar heights, all things considered, so I am happy.

If you want the SDs themselves:

sleep %>%

group_by(treatment) %>%

summarize(stddev = sd(sleeptime))Those are very similar, given only 5 observations per group. No problems here.

\(\blacksquare\)

- Run an analysis of variance to see whether sleep time differs significantly among treatment groups. What do you conclude?

Solution

I use aov here, because I might be following up with Tukey in a minute:

sleep.1 <- aov(sleeptime ~ treatment, data = sleep)

summary(sleep.1) Df Sum Sq Mean Sq F value Pr(>F)

treatment 3 5882 1961 21.09 8.32e-06 ***

Residuals 16 1487 93

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This is a very small P-value, so my conclusion is that the mean sleep times are not all the same for the treatment groups. Further than that I am not entitled to say (yet).

The technique here is to save the output from aov in something, look at that (via summary), and then that same something gets fed into TukeyHSD later.

\(\blacksquare\)

- Would it be a good idea to run Tukey’s method here? Explain briefly why or why not, and if you think it would be a good idea, run it.

Solution

Tukey’s method is useful when (i) we have run an analysis of variance and got a significant result and (ii) when we want to know which groups differ significantly from which. Both (i) and (ii) are true here. So:

TukeyHSD(sleep.1) Tukey multiple comparisons of means

95% family-wise confidence level

Fit: aov(formula = sleeptime ~ treatment, data = sleep)

$treatment

diff lwr upr p adj

e1-e0 -17.74 -35.18636 -0.2936428 0.0455781

e2-e0 -31.36 -48.80636 -13.9136428 0.0005142

e4-e0 -46.52 -63.96636 -29.0736428 0.0000056

e2-e1 -13.62 -31.06636 3.8263572 0.1563545

e4-e1 -28.78 -46.22636 -11.3336428 0.0011925

e4-e2 -15.16 -32.60636 2.2863572 0.1005398\(\blacksquare\)

- What do you conclude from Tukey’s method? (This is liable to be a bit complicated.) Is there a treatment that is clearly best, in terms of the sleep time being largest?

Solution

All the differences are significant except treatment e2 vs. e1 and e4. All the differences involving the control group e0 are significant, and if you look back at the boxplots in (c), you’ll see that the control group e0 had the highest mean sleep time. So the control group is best (from this point of view), or another way of saying it is that any dose of ethanol is significantly reducing mean sleep time. The other comparisons are a bit confusing, because the 1-4 difference is significant, but neither of the differences involving 2 are. That is, 1 is better than 4, but 2 is not significantly worse than 1 nor better than 4. This seems like it should be a logical impossibility, but the story is that we don’t have enough data to decide where 2 fits relative to 1 or 4. If we had 10 or 20 observations per group, we might be able to conclude that 2 is in between 1 and 4 as the boxplots suggest.

Extra: I didn’t ask about normality here, but like the equal-spreads assumption I’d say there’s nothing controversial about it with these data. With normality good and equal spreads good, aov plus Tukey is the analysis of choice.

\(\blacksquare\)

17.16 Growth of tomatoes

A biology graduate student exposed each of 32 tomato plants to one of four different colours of light (8 plants to each colour). The growth rate of each plant, in millimetres per week, was recorded. The data are in link.

- Read the data into R and confirm that you have 8 rows and 5 columns of data.

Solution

This kind of thing:

my_url="http://ritsokiguess.site/datafiles/tomatoes.txt"

toms1=read_delim(my_url," ")Rows: 8 Columns: 5

── Column specification ────────────────────────────────────────────────────────

Delimiter: " "

dbl (5): plant, blue, red, yellow, green

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.toms1I do indeed have 8 rows and 5 columns.

With only 8 rows, listing the data like this is good.

\(\blacksquare\)

- Re-arrange the data so that you have one column containing all the growth rates, and another column saying which colour light each plant was exposed to. (The aim here is to produce something suitable for feeding into

aovlater.)

Solution

This is a job for pivot_longer:

toms1 %>%

pivot_longer(-plant, names_to="colour", values_to="growthrate") -> toms2

toms2I chose to specify “everything but plant number”, since there are several colour columns with different names.

Since the column plant was never mentioned, this gets repeated as necessary, so now it denotes “plant within colour group”, which in this case is not very useful. (Where you have matched pairs, or repeated measures in general, you do want to keep track of which individual is which. But this is not repeated measures because plant number 1 in the blue group and plant number 1 in the red group are different plants.)

There were 8 rows originally and 4 different colours, so there should be, and are, \(8 \times 4=32\) rows in the made-longer data set.

\(\blacksquare\)

- Save the data in the new format to a text file. This is most easily done using

write_csv, which is the opposite ofread_csv. It requires two things: a data frame, and the name of a file to save in, which should have a.csvextension.

Solution

The code is easy enough:

write_csv(toms2,"tomatoes2.csv")If no error, it worked. That’s all you need.

To verify (for my satisfaction) that it was saved correctly:

cat tomatoes2.csv plant,colour,growthrate

1,blue,5.34

1,red,13.67

1,yellow,4.61

1,green,2.72

2,blue,7.45

2,red,13.04

2,yellow,6.63

2,green,1.08

3,blue,7.15

3,red,10.16

3,yellow,5.29

3,green,3.97

4,blue,5.53

4,red,13.12

4,yellow,5.29

4,green,2.66

5,blue,6.34

5,red,11.06

5,yellow,4.76

5,green,3.69

6,blue,7.16

6,red,11.43

6,yellow,5.57

6,green,1.96

7,blue,7.77

7,red,13.98

7,yellow,6.57

7,green,3.38

8,blue,5.09

8,red,13.49

8,yellow,5.25

8,green,1.87On my system, that will list the contents of the file. Or you can just open it in R Studio (if you saved it the way I did, it’ll be in the same folder, and you can find it in the Files pane.)

\(\blacksquare\)

- Make a suitable boxplot, and use it to assess the assumptions for ANOVA. What do you conclude? Explain briefly.

Solution

Nothing terribly surprising here. My data frame is called toms2, for some reason:

ggplot(toms2,aes(x=colour, y=growthrate))+geom_boxplot()

There are no outliers, but there is a little skewness (compare the whiskers, not the placement of the median within the box, because what matters with skewness is the tails, not the middle of the distribution; it’s problems in the tails that make the mean unsuitable as a measure of centre). The Red group looks the most skewed. Also, the Yellow group has smaller spread than the others (we assume that the population variances within each group are equal). The thing to bear in mind here, though, is that there are only eight observations per group, so the distributions could appear to have unequal variances or some non-normality by chance.

My take is that these data, all things considered, are just about OK for ANOVA. Another option would be to do Welch’s ANOVA as well and compare with the regular ANOVA: if they give more or less the same P-value, that’s a sign that I didn’t need to worry.

Extra: some people like to run a formal test on the variances to test them for equality. My favourite (for reasons explained elsewhere) is the Levene test, if you insist on going this way. It lives in package car, and does not take a data=, so you need to do the with thing:

library(car)

with(toms2,leveneTest(growthrate,colour))Warning in leveneTest.default(growthrate, colour): colour coerced to factor.The warning is because colour was actually text, but the test did the right thing by turning it into a factor, so that’s OK.

There is no way we can reject equal variances in the four groups. The \(F\)-statistic is less than 1, in fact, which says that if the four groups have the same population variances, the sample variances will be more different than the ones we observed on average, and so there is no way that these sample variances indicate different population variances. (This is because of 8 observations only per group; if there had been 80 observations per group, it would have been a different story.) Decide for yourself whether you’re surprised by this.

With that in mind, I think the regular ANOVA will be perfectly good, and we would expect that and the Welch ANOVA to give very similar results.

\(\blacksquare\)

- Run (regular) ANOVA on these data. What do you conclude? (Optional extra: if you think that some other variant of ANOVA would be better, run that as well and compare the results.)

Solution

aov, bearing in mind that Tukey is likely to follow:

toms.1=aov(growthrate~colour,data=toms2)

summary(toms.1) Df Sum Sq Mean Sq F value Pr(>F)

colour 3 410.5 136.82 118.2 5.28e-16 ***

Residuals 28 32.4 1.16

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This is a tiny P-value, so the mean growth rate for the different colours is definitely not the same for all colours. Or, if you like, one or more of the colours has a different mean growth rate than the others.

This, remember, is as far as we go right now.

Extra: if you thought that normality was OK but not equal spreads, then Welch ANOVA is the way to go:

toms.2=oneway.test(growthrate~colour,data=toms2)

toms.2

One-way analysis of means (not assuming equal variances)

data: growthrate and colour

F = 81.079, num df = 3.000, denom df = 15.227, p-value = 1.377e-09The P-value is not quite as small as for the regular ANOVA, but it is still very small, and the conclusion is the same.

If you had doubts about the normality (that were sufficiently great, even given the small sample sizes), then go with Mood’s median test for multiple groups:

library(smmr)

median_test(toms2,growthrate,colour)$grand_median

[1] 5.55

$table

above

group above below

blue 5 3

green 0 8

red 8 0

yellow 3 5

$test

what value

1 statistic 1.700000e+01

2 df 3.000000e+00

3 P-value 7.067424e-04The P-value is again extremely small (though not quite as small as for the other two tests, for the usual reason that Mood’s median test doesn’t use the data very efficiently: it doesn’t use how far above or below the overall median the data values are.)

The story here, as ever, is consistency: whatever you thought was wrong, looking at the boxplots, needs to guide the test you do:

if you are not happy with normality, go with

median_testfromsmmr(Mood’s median test).if you are happy with normality and equal variances, go with

aov.if you are happy with normality but not equal variances, go with

oneway.test(Welch ANOVA).

So the first thing to think about is normality, and if you are OK with normality, then think about equal spreads. Bear in mind that you need to be willing to tolerate a certain amount of non-normality and inequality in the spreads, given that your data are only samples from their populations. (Don’t expect perfection, in short.)

\(\blacksquare\)

- If warranted, run a suitable follow-up. (If not warranted, explain briefly why not.)

Solution

Whichever flavour of ANOVA you ran (regular ANOVA, Welch ANOVA, Mood’s median test), you got the same conclusion for these data: that the average growth rates were not all the same for the four colours. That, as you’ll remember, is as far as you go. To find out which colours differ from which in terms of growth rate, you need to run some kind of multiple-comparisons follow-up, the right one for the analysis you did. Looking at the boxplots suggests that red is clearly best and green clearly worst, and it is possible that all the colours are significantly different from each other.) If you did regular ANOVA, Tukey is what you need:

TukeyHSD(toms.1) Tukey multiple comparisons of means

95% family-wise confidence level

Fit: aov(formula = growthrate ~ colour, data = toms2)

$colour

diff lwr upr p adj

green-blue -3.8125 -5.281129 -2.3438706 0.0000006

red-blue 6.0150 4.546371 7.4836294 0.0000000

yellow-blue -0.9825 -2.451129 0.4861294 0.2825002

red-green 9.8275 8.358871 11.2961294 0.0000000

yellow-green 2.8300 1.361371 4.2986294 0.0000766

yellow-red -6.9975 -8.466129 -5.5288706 0.0000000All of the differences are (strongly) significant, except for yellow and blue, the two with middling growth rates on the boxplot. Thus we would have no hesitation in saying that growth rate is biggest in red light and smallest in green light.

If you did Welch ANOVA, you need Games-Howell, which you have to get from one of the packages that offers it:

library(PMCMRplus)

gamesHowellTest(growthrate~factor(colour),data=toms2)

Pairwise comparisons using Games-Howell testdata: growthrate by factor(colour) blue green red

green 1.6e-05 - -

red 1.5e-06 4.8e-09 -

yellow 0.18707 0.00011 5.8e-07

P value adjustment method: nonealternative hypothesis: two.sidedThe conclusions are the same as for the Tukey: all the means are significantly different except for yellow and blue. Finally, if you did Mood’s median test, you need this one:

pairwise_median_test(toms2, growthrate, colour)Same conclusions again. This is what I would have guessed; the conclusions from Tukey were so clear-cut that it really didn’t matter which way you went; you’d come to the same conclusion.

That said, what I am looking for from you is a sensible choice of analysis of variance (ANOVA, Welch’s ANOVA or Mood’s median test) for a good reason, followed by the right follow-up for the test you did. Even though the conclusions are all the same no matter what you do here, I want you to get used to following the right method, so that you will be able to do the right thing when it does matter.

\(\blacksquare\)

17.17 Pain relief in migraine headaches (again)

The data in link are from a study of pain relief in migraine headaches. Specifically, 27 subjects were randomly assigned to receive one of three pain relieving drugs, labelled A, B and C. Each subject reported the number of hours of pain relief they obtained (that is, the number of hours between taking the drug and the migraine symptoms returning). A higher value is therefore better. Can we make some recommendation about which drug is best for the population of migraine sufferers?

- Read in and display the data. Take a look at the data file first, and see if you can say why

read_tablewill work andread_delimwill not.

Solution

The key is two things: the data values are lined up in columns, and there is more than one space between values. The second thing is why read_delim will not work. If you look carefully at the data file, you’ll see that the column names are above and aligned with the columns. read_table doesn’t actually need things to be lined up in columns; all it actually needs is for there to be one or more spaces between columns.

my_url <- "http://ritsokiguess.site/datafiles/migraine.txt"

migraine <- read_table(my_url)

── Column specification ────────────────────────────────────────────────────────

cols(

DrugA = col_double(),

DrugB = col_double(),

DrugC = col_double()

)migraineSuccess.

\(\blacksquare\)

- What is it about the experimental design that makes a one-way analysis of variance plausible for data like this?

Solution

Each experimental subject only tested one drug, so that we have 27 independent observations, nine from each drug. This is exactly the setup that a one-way ANOVA requires. Compare that to, for example, a situation where you had only 9 subjects, but they each tested all the drugs (so that each subject produced three measurements). That is like a three-measurement version of matched pairs, a so-called repeated-measures design, which requires its own kind of analysis.9

\(\blacksquare\)

- What is wrong with the current format of the data as far as doing a one-way ANOVA analysis is concerned? (This is related to the idea of whether or not the data are “tidy”.)

Solution

For our analysis, we need one column of pain relief time and one column labelling the drug that the subject in question took. Or, if you prefer to think about what would make these data “tidy”: there are 27 subjects, so there ought to be 27 rows, and all three columns are measurements of pain relief, so they ought to be in one column.

\(\blacksquare\)

- “Tidy” the data to produce a data frame suitable for your analysis.

Solution

This is pivot_longer. The column names are going to be stored in a column drug, and the corresponding values in a column called painrelief (use whatever names you like):

migraine %>%

pivot_longer(everything(), names_to="drug", values_to="painrelief") -> migraine2Since I was making all the columns longer, I used the select-helper everything() to do that. Using instead DrugA:DrugC or starts_with("Drug") would also be good. Try them. starts_with is not case-sensitive, as far as I remember, so starts_with("drug") will also work here.

We do indeed have a new data frame with 27 rows, one per observation, and 2 columns, one for each variable: the pain relief hours, plus a column identifying which drug that pain relief time came from. Exactly what aov needs.

You can probably devise a better name for your new data frame.

\(\blacksquare\)

- Go ahead and run your one-way ANOVA (and Tukey if necessary). Assume for this that the pain relief hours in each group are sufficiently close to normally distributed with sufficiently equal spreads.

Solution

My last sentence absolves us from doing the boxplots that we would normally insist on doing.

painrelief.1 <- aov(painrelief ~ drug, data = migraine2)

summary(painrelief.1) Df Sum Sq Mean Sq F value Pr(>F)

drug 2 41.19 20.59 7.831 0.00241 **

Residuals 24 63.11 2.63

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1There are (strongly) significant differences among the drugs, so it is definitely worth firing up Tukey to figure out where the differences are:

TukeyHSD(painrelief.1) Tukey multiple comparisons of means

95% family-wise confidence level

Fit: aov(formula = painrelief ~ drug, data = migraine2)

$drug

diff lwr upr p adj

DrugB-DrugA 2.8888889 0.9798731 4.797905 0.0025509

DrugC-DrugA 2.2222222 0.3132065 4.131238 0.0203671

DrugC-DrugB -0.6666667 -2.5756824 1.242349 0.6626647Both the differences involving drug A are significant, and because a high value of painrelief is better, in both cases drug A is worse than the other drugs. Drugs B and C are not significantly different from each other.

Extra: we can also use the “pipe” to do this all in one go:

migraine %>%

pivot_longer(everything(), names_to="drug", values_to="painrelief") %>%

aov(painrelief ~ drug, data = .) %>%

summary() Df Sum Sq Mean Sq F value Pr(>F)

drug 2 41.19 20.59 7.831 0.00241 **

Residuals 24 63.11 2.63

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1with the same results as before. Notice that I never actually created a second data frame by name; it was created by pivot_longer and then immediately used as input to aov.10 I also used the data=. trick to use “the data frame that came out of the previous step” as my input to aov.

Read the above like this: “take migraine, and then make everything longer, creating new columns drug and painrelief, and then do an ANOVA of painrelief by drug, and then summarize the results.”

What is even more alarming is that I can feed the output from aov straight into TukeyHSD:

migraine %>%

pivot_longer(everything(), names_to="drug", values_to="painrelief") %>%

aov(painrelief ~ drug, data = .) %>%

TukeyHSD() Tukey multiple comparisons of means

95% family-wise confidence level

Fit: aov(formula = painrelief ~ drug, data = .)

$drug

diff lwr upr p adj

DrugB-DrugA 2.8888889 0.9798731 4.797905 0.0025509

DrugC-DrugA 2.2222222 0.3132065 4.131238 0.0203671

DrugC-DrugB -0.6666667 -2.5756824 1.242349 0.6626647I wasn’t sure whether this would work, since the output from aov is an R list rather than a data frame, but the output from aov is sent into TukeyHSD whatever kind of thing it is.

What I am missing here is to display the result of aov and use it as input to TukeyHSD. Of course, I had to discover that this could be solved, and indeed it can:

migraine %>%

pivot_longer(everything(), names_to="drug", values_to="painrelief") %>%

aov(painrelief ~ drug, data = .) %>%

{

print(summary(.))

.

} %>%

TukeyHSD() Df Sum Sq Mean Sq F value Pr(>F)

drug 2 41.19 20.59 7.831 0.00241 **

Residuals 24 63.11 2.63

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 Tukey multiple comparisons of means

95% family-wise confidence level

Fit: aov(formula = painrelief ~ drug, data = .)

$drug

diff lwr upr p adj

DrugB-DrugA 2.8888889 0.9798731 4.797905 0.0025509

DrugC-DrugA 2.2222222 0.3132065 4.131238 0.0203671

DrugC-DrugB -0.6666667 -2.5756824 1.242349 0.6626647The odd-looking second-last line of that again uses the . trick for “whatever came out of the previous step”. The thing inside the curly brackets is two commands one after the other; the first is to display the summary of that aov11 and the second is to just pass whatever came out of the previous line, the output from aov, on, unchanged, into TukeyHSD.

In the Unix/Linux world this is called tee, where you print something and pass it on to the next step. The name tee comes from a (real physical) pipe that plumbers would use to split water flow into two, which looks like a letter T.

\(\blacksquare\)

- What recommendation would you make about the best drug or drugs? Explain briefly.

Solution

Drug A is significantly the worst, so we eliminate that. But there is no significant difference between drugs B and C, so we have no reproducible reason for preferring one rather than the other. Thus, we recommend “either B or C”. If you weren’t sure which way around the drugs actually came out, then you should work out the mean pain relief score by drug:

migraine2 %>%

group_by(drug) %>%

summarize(m = mean(painrelief))These confirm that A is worst, and there is nothing much to choose between B and C. You should not recommend drug B over drug C on this evidence, just because its (sample) mean is higher. The point about significant differences is that they are supposed to stand up to replication: in another experiment, or in real-life experiences with these drugs, the mean pain relief score for drug A is expected to be worst, but between drugs B and C, sometimes the mean of B will come out higher and sometimes C’s mean will be higher, because there is no significant difference between them.12 Another way is to draw a boxplot of pain-relief scores:

ggplot(migraine2, aes(x = drug, y = painrelief)) + geom_boxplot()

The medians of drugs B and C are actually exactly the same. Because the pain relief values are all whole numbers (and there are only 9 in each group), you get that thing where enough of them are equal that the median and third quartiles are equal, actually for two of the three groups.

Despite the weird distributions, I’m willing to call these groups sufficiently symmetric for the ANOVA to be OK, but I didn’t ask you to draw the boxplot, because I didn’t want to confuse the issue with this. The point of this question was to get the data tidy enough to do an analysis.

As I said, I didn’t want you to have to get into this, but if you are worried, you know what the remedy is — Mood’s median test. Don’t forget to use the right data frame:

library(smmr)

median_test(migraine2, painrelief, drug)$grand_median

[1] 5

$table

above

group above below

DrugA 0 8

DrugB 5 2

DrugC 6 0

$test

what value

1 statistic 1.527273e+01

2 df 2.000000e+00

3 P-value 4.825801e-04Because the pain relief scores are integers, there are probably a lot of them equal to the overall median. There were 27 observations altogether, but Mood’s median test will discard any that are equal to this value. There must have been 9 observations in each group to start with, but if you look at each row of the table, there are only 8 observations listed for drug A, 7 for drug B and 6 for drug C, so there must have been 1, 2 and 3 (totalling 6) observations equal to the median that were discarded.

The P-value is a little bigger than came out of the \(F\)-test, but the conclusion is still that there are definitely differences among the drugs in terms of pain relief. The table at the top of the output again suggests that drug A is worse than the others, but to confirm that you’d have to do Mood’s median test on all three pairs of drugs, and then use Bonferroni to allow for your having done three tests:

pairwise_median_test(migraine2, painrelief, drug)Drug A gives worse pain relief (fewer hours) than both drugs B and C, which are not significantly different from each hour. This is exactly what you would have guessed from the boxplot.

I adjusted the P-values as per Bonferroni by multiplying them by 3 (so that I could still compare with 0.05), but it makes no sense to have a P-value, which is a probability, greater than 1, so an “adjusted P-value” that comes out greater than 1 is rounded back down to 1. You interpret this as being “no evidence at all of a difference in medians” between drugs B and C.

\(\blacksquare\)

17.18 Location, species and disease in plants

The table below is a “contingency table”, showing frequencies of diseased and undiseased plants of two different species in two different locations:

Species Disease present Disease absent

Location X Location Y Location X Location Y

A 44 12 38 10

B 28 22 20 18

The data were saved as link. In that file, the columns are coded by two letters: a p or an a to denote presence or absence of disease, and an x or a y to denote location X or Y. The data are separated by multiple spaces and aligned with the variable names.

- Read in and display the data.

Solution

read_table again. You know this because, when you looked at the data file, which of course you did (didn’t you?), you saw that the data values were aligned by columns with multiple spaces between them:

my_url <- "http://ritsokiguess.site/datafiles/disease.txt"

tbl <- read_table(my_url)

── Column specification ────────────────────────────────────────────────────────

cols(

Species = col_character(),

px = col_double(),

py = col_double(),

ax = col_double(),

ay = col_double()

)tblI was thinking ahead, since I’ll be wanting to have one of my columns called disease, so I’m not calling the data frame disease.

You’ll also have noticed that I simplified the data frame that I had you read in, because the original contingency table I showed you has two header rows, and we have to have one header row. So I mixed up the information in the two header rows into one.

\(\blacksquare\)

- Explain briefly how these data are not “tidy”.

Solution

The simple answer is that there are 8 frequencies, that each ought to be in a row by themselves. Or, if you like, there are three variables, Species, Disease status and Location, and each of those should be in a column of its own. Either one of these ideas, or something like it, is good. I need you to demonstrate that you know something about “tidy data” in this context.

\(\blacksquare\)

- Use a suitable

tidyrtool to get all the things that are the same into a single column. (You’ll need to make up a temporary name for the other new column that you create.) Show your result.

Solution

pivot_longer is the tool. All the columns apart from Species contain frequencies. They are frequencies in disease-location combinations, so I’ll call the column of “names” disloc. Feel free to call it temp for now if you prefer:

tbl %>% pivot_longer(-Species, names_to="disloc", values_to = "frequency") -> tbl.2

tbl.2\(\blacksquare\)

- Explain briefly how the data frame you just created is still not “tidy” yet.

Solution

The column I called disloc actually contains two variables, disease and location, which need to be split up. A check on this is that we have two columns (not including the frequencies), but back in (b) we found three variables, so there ought to be three non-frequency columns.

\(\blacksquare\)

- Use one more

tidyrtool to make these data tidy, and show your result.

Solution

This means splitting up disloc into two separate columns, splitting after the first character, thus:

tbl.2 %>% separate_wider_position(disloc, widths = c("disease" = 1, "location" = 1))The format of widths here is: name of new column equals width of new column (the disease is one character and the location is also one).

This is now tidy: eight frequencies in rows, and three non-frequency columns. (Go back and look at your answer to part (b) and note that the issues you found there have all been resolved now.)

Extra: my reading of one of the vignettes (the one called pivot) for tidyr suggests that pivot_longer can do both the making longer and the separating-wider in one shot:

tbl %>% pivot_longer(-Species, names_to=c("disease", "location"),

names_sep=1, values_to="frequency") -> tbl.3

tbl.3And I (amazingly) got that right first time!

The idea is that you recognize that the column names are actually two things: a disease status and a location. To get pivot_longer to recognize that, you put two things in the names_to. Then you have to say how the two things in the columns are separated: this might be by an underscore or a dot, or, as here, “after character number 1” (just as in separate_wider_position). Using two names and some indication of what separates them then does a combined pivot-longer-and-separate-wider, all in one shot.

The more I use pivot_longer, the more I marvel at the excellence of its design: it seems to be easy to guess how to make things work.

\(\blacksquare\)

- Let’s see if we can re-construct the original contingency table (or something equivalent to it). Use the function

xtabs. This requires first a model formula with the frequency variable on the left of the squiggle, and the other variables separated by plus signs on the right. Second it requires a data frame, withdata=. Feed your data frame from the previous part intoxtabs. Save the result in a variable and display the result.

Solution

tbl.4 <- xtabs(frequency ~ Species + disease + location, data = tbl.3)

tbl.4, , location = x

disease

Species a p

A 38 44

B 20 28

, , location = y

disease

Species a p

A 10 12

B 18 22This shows a pair of contingency tables, one each for each of the two locations (in general, the variable you put last on the right side of the model formula). You can check that everything corresponds with the original data layout at the beginning of the question, possibly with some things rearranged (but with the same frequencies in the same places).

\(\blacksquare\)

- Take the output from the last part and feed it into the function

ftable. How has the output been changed? Which do you like better? Explain briefly.

Solution

This:

ftable(tbl.4) location x y

Species disease

A a 38 10

p 44 12

B a 20 18

p 28 22This is the same output, but shown more compactly. (Rather like a vertical version of the original data, in fact.) I like ftable better because it displays the data in the smallest amount of space, though I’m fine if you prefer the xtabs output because it spreads things out more. This is a matter of taste. Pick one and tell me why you prefer it, and I’m good.

That’s the end of what you had to do, but I thought I would do some modelling and try to find out what’s associated with disease. The appropriate modelling with frequencies is called “log-linear modelling”, and it assumes that the log of the frequencies has a linear relationship with the effects of the other variables. This is not quite as simple as the log transformations we had before, because bigger frequencies are going to be more variable, so we fit a generalized linear model with a Poisson-distributed response and log link. (It’s better if you know what that means, but you ought to be able to follow the logic if you don’t. Chapter 29 has more on this.)

First, fit a model predicting frequency from everything, including all the interactions. (The reason for doing it this way will become clear later):

model.1 <- glm(frequency ~ Species * location * disease, data = tbl.3, family = "poisson")

drop1(model.1, test = "Chisq")The residuals are all zero because this model fits perfectly. The problem is that it is very complicated, so it offers no insight. So what we do is to look at the highest-order interaction Species:location:disease and see whether it is significant. It is not, so we can remove it. This is reminiscent of variable selection in regression, where we pull the least significant thing out of the model in turn until we can go no further. But here, we have additional things to think about: we have to get rid of all the three-way interactions before we can tackle the two-way ones, and all the two-way ones before we can tackle the main effects. There is a so-called “nested” structure happening here that says you don’t look at, say, Species, until you have removed all the higher-order interactions involving Species. Not clear yet? Don’t fret. drop1 allows you to assess what is currently up for grabs (here, only the three-way interaction, which is not significant, so out it comes).

Let’s get rid of that three-way interaction. This is another use for update that you might have seen in connection with multiple regression (to make small changes to a big model):

model.2 <- update(model.1, . ~ . - Species:location:disease)

drop1(model.2, test = "Chisq")Notice how update saved us having to write the whole model out again.

Now the three two-way interactions are up for grabs: Species:location, Species:disease and location:disease. The last of these is the least significant, so out it comes. I did some copying and pasting, but I had to remember which model I was working with and what I was removing:

model.3 <- update(model.2, . ~ . - location:disease)

drop1(model.3, test = "Chisq")Species:disease comes out, but it looks as if Species:location will have to stay:

model.4 <- update(model.3, . ~ . - Species:disease)

drop1(model.4, test = "Chisq")Species:location indeed stays. That means that anything “contained in” it also has to stay, regardless of its main effect. So the only candidate for removal now is disease: not significant, out it comes:

model.5 <- update(model.4, . ~ . - disease)

drop1(model.5, test = "Chisq")And now we have to stop.

What does this final model mean? Well, frequency depends significantly on the Species:location combination, but not on anything else. To see how, we can make a contingency table of species by location (totalling up over disease status, since that is not significant):

xtabs(frequency ~ Species + location, data = tbl.3) location

Species x y

A 82 22

B 48 40Another way is to make a graph. This is like a grouped bar chart, but slightly different because our dataframe tbl.3 already has frequencies in it, whereas geom_bar expects to be counting them up. As a consequence, we instead use geom_col, and feed that a y that contains the frequencies:13

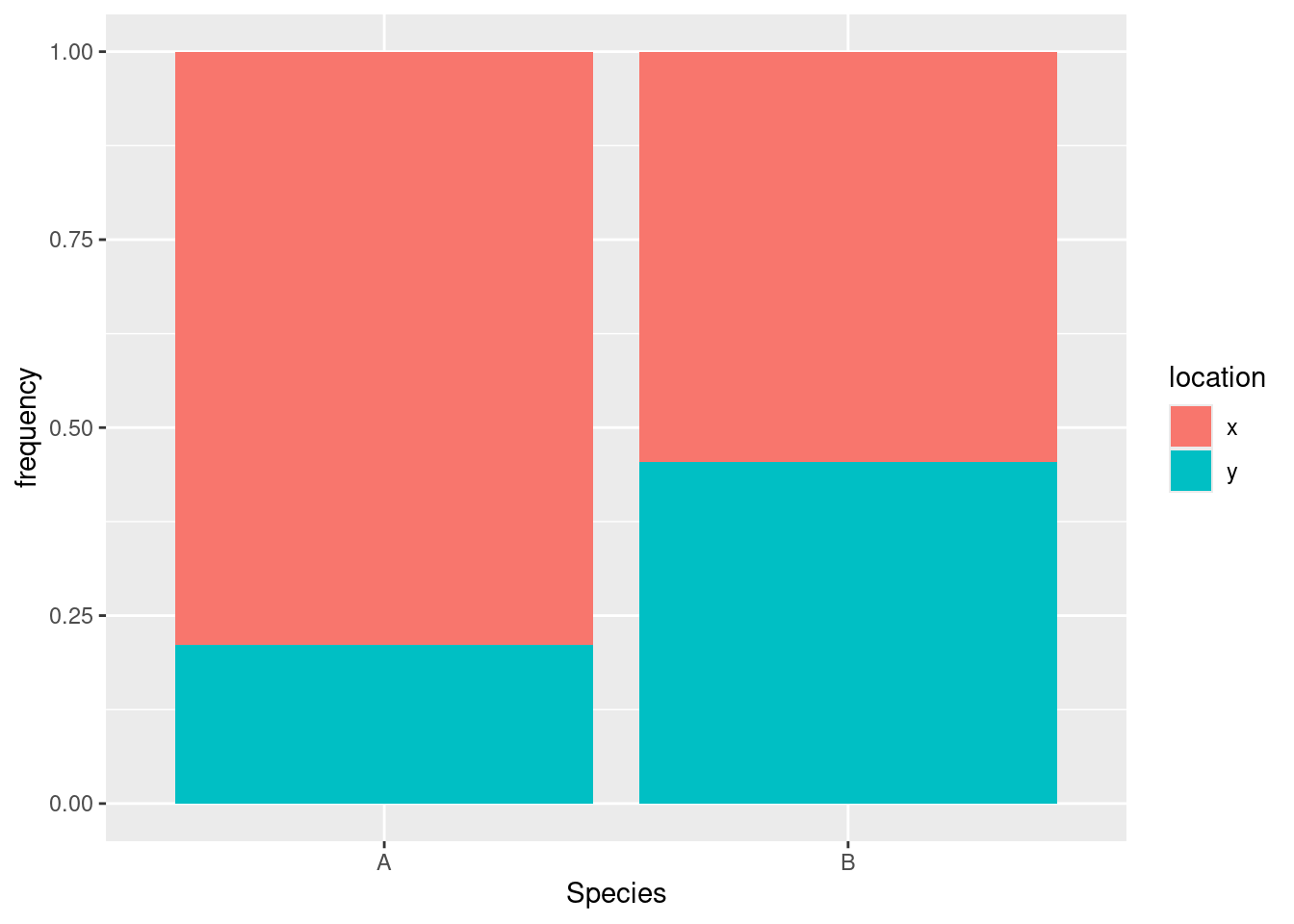

ggplot(tbl.3, aes(x = Species, y = frequency, fill = location)) + geom_col(position = "fill")

Most of the species A’s are at location X, but the species B’s are about evenly divided between the two locations. Or, if you prefer (equally good): location X has mostly species A, while location Y has mostly species B. You can condition on either variable and compare the conditional distribution of the other one.

Now, this is rather interesting, because this began as a study of disease, but disease has completely disappeared from our final model! That means that nothing in our final model has any relationship with disease. Indeed, if you check the original table, you’ll find that disease is present slightly more than it’s absent, for all combinations of species and location. That is, neither species nor location has any particular association with (effect on) disease, since disease prevalence doesn’t change appreciably if you change location, species or the combination of them.

A graph with disease in it would look something like the below. This time we’re thinking of disease as the response, so it goes in fill, and we have “too many” categorical explanatory variables, so one of them becomes facets (it doesn’t matter which one):

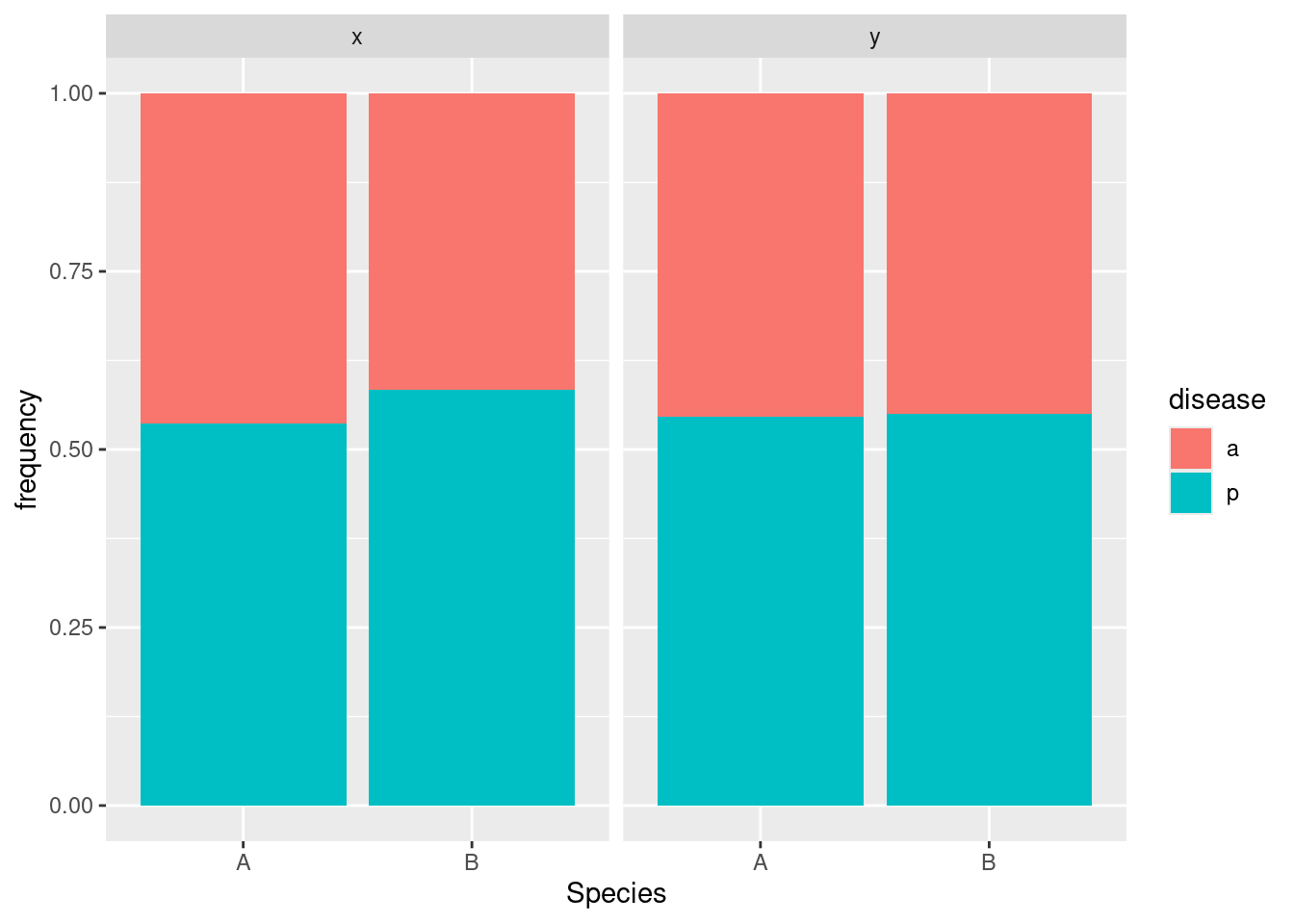

tbl.3ggplot(tbl.3, aes(x = Species, y = frequency, fill = disease)) + geom_col(position = "fill") +

facet_wrap(~ location)

Disease has nothing to do with Species because in both facets, the A and B bars are about the same; disease has nothing to do with location because the two facets are almost exactly the same.

The way an association with disease would show up is if a disease:something interaction had been significant and had stayed in the model, that something would have been associated with disease. For example, if the disease:Species table had looked like this:

disease <- c("a", "a", "p", "p")

Species <- c("A", "B", "A", "B")

frequency <- c(10, 50, 30, 30)

xx <- tibble(disease, Species, frequency)

xtabs(frequency ~ disease + Species, data=xx) Species

disease A B

a 10 50

p 30 30Or, if you like this better as a picture:

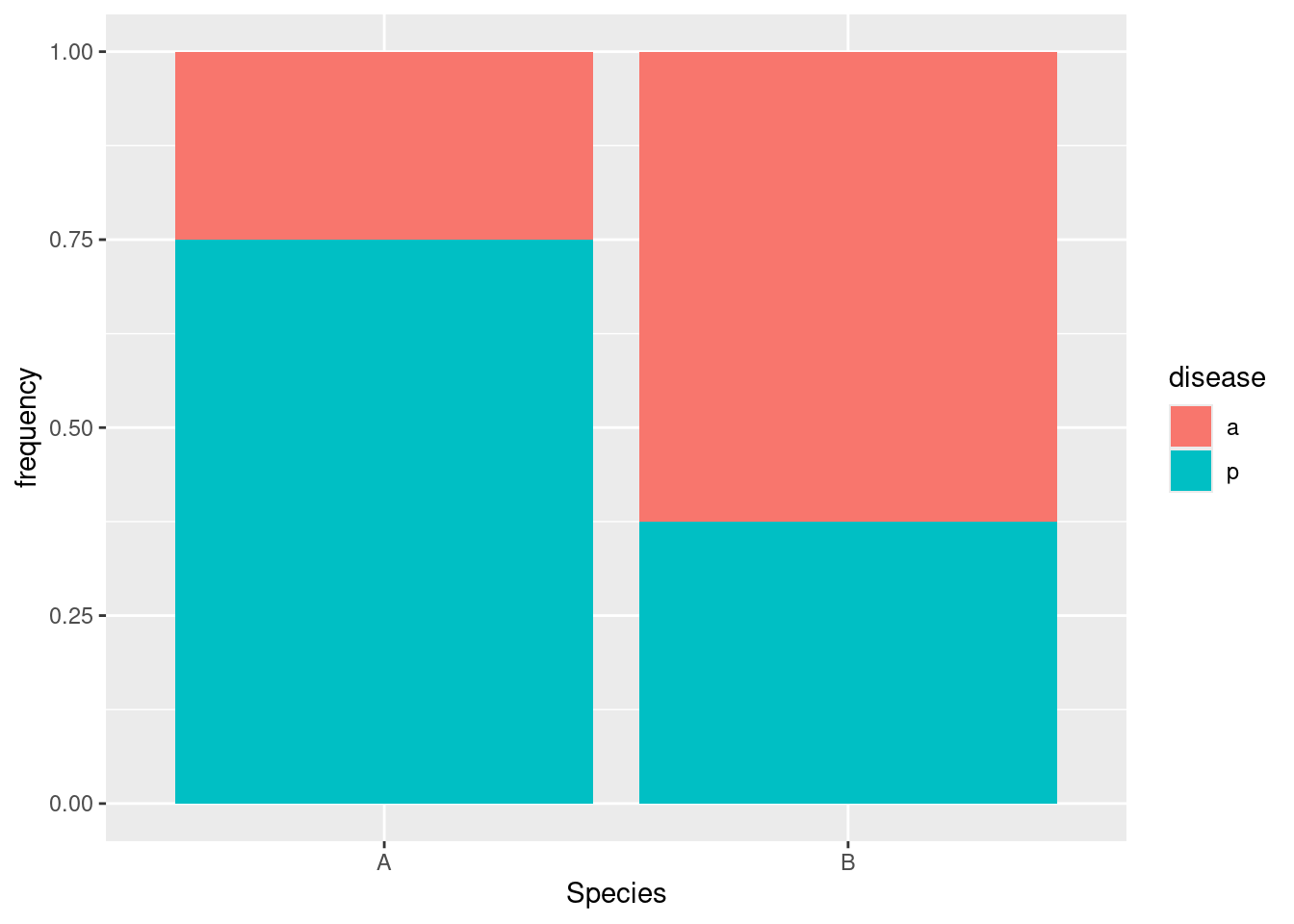

ggplot(xx, aes(x = Species, y = frequency, fill = disease)) + geom_col(position = "fill")

For species A, disease is present 75% of the time, but for species B it’s present less than 40% of the time. So in this one there ought to be a significant association between disease and species:

xx.1 <- glm(frequency ~ disease * Species, data = xx, family = "poisson")

drop1(xx.1, test = "Chisq")And so there is. Nothing can come out of the model. (This is the same kind of test as a chi-squared test for association.

The log-linear model is a multi-variable generalization of that.)

\(\blacksquare\)

17.19 Mating songs in crickets

Male tree crickets produce “mating songs” by rubbing their wings together to produce a chirping sound. It is hypothesized that female tree crickets identify males of the correct species by how fast (in chirps per second) the male’s mating song is. This is called the “pulse rate”. Some data for two species of crickets are in link. The columns, which are unlabelled, are temperature and pulse rate (respectively) for Oecanthus exclamationis (first two columns) and Oecanthus niveus (third and fourth columns). The columns are separated by tabs. There are some missing values in the first two columns because fewer exclamationis crickets than niveus crickets were measured. The research question is whether males of the different species have different average pulse rates. It is also of interest to see whether temperature has an effect, and if so, what. Before we get to that, however, we have some data organization to do.

- Read in the data, allowing for the fact that you have no column names. You’ll see that the columns have names

X1throughX4. This is OK.

Solution

Tab-separated, so read_tsv; no column names, so col_names=FALSE:

my_url <- "http://ritsokiguess.site/datafiles/crickets.txt"

crickets <- read_tsv(my_url, col_names = FALSE)Rows: 17 Columns: 4

── Column specification ────────────────────────────────────────────────────────

Delimiter: "\t"

dbl (4): X1, X2, X3, X4

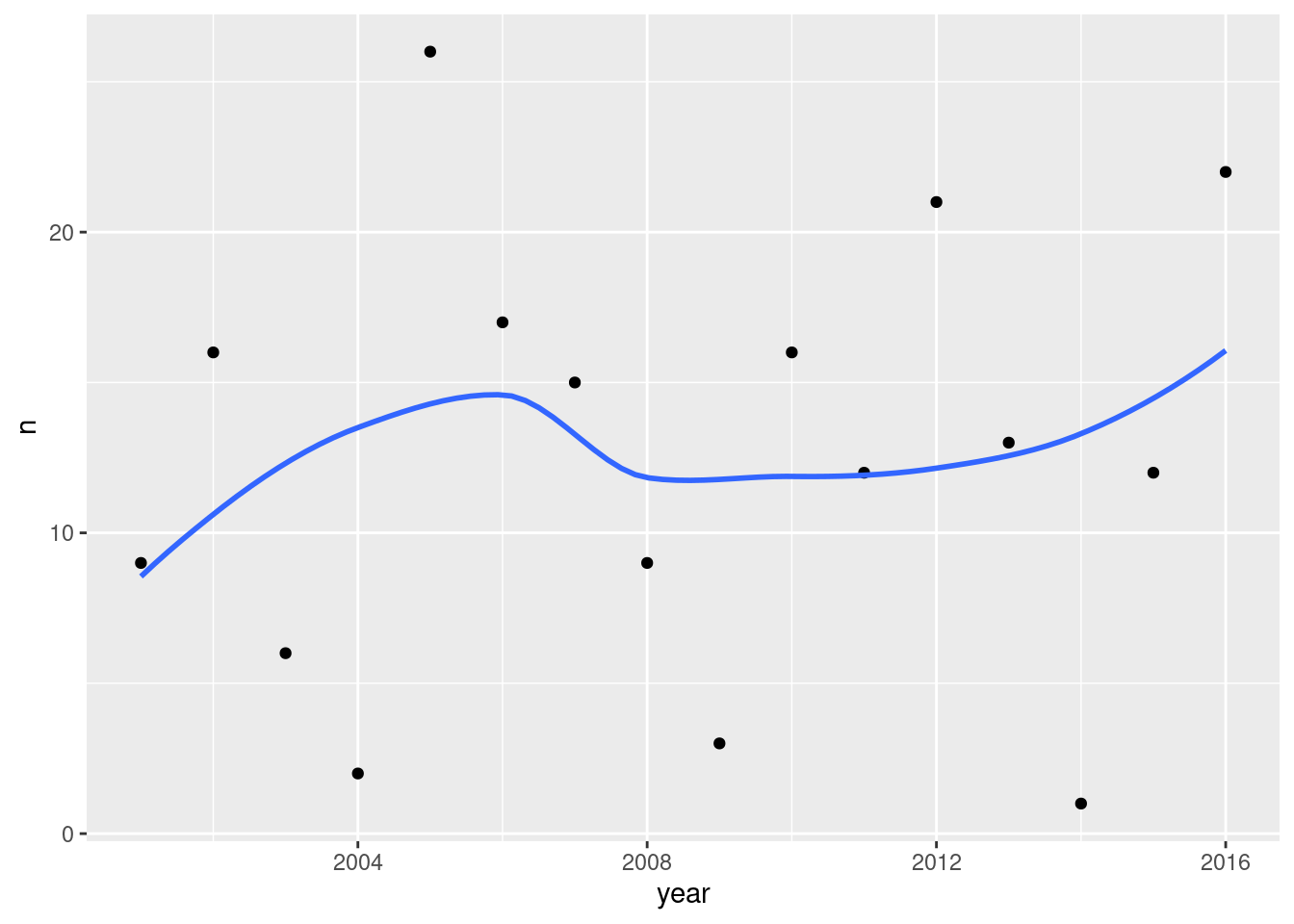

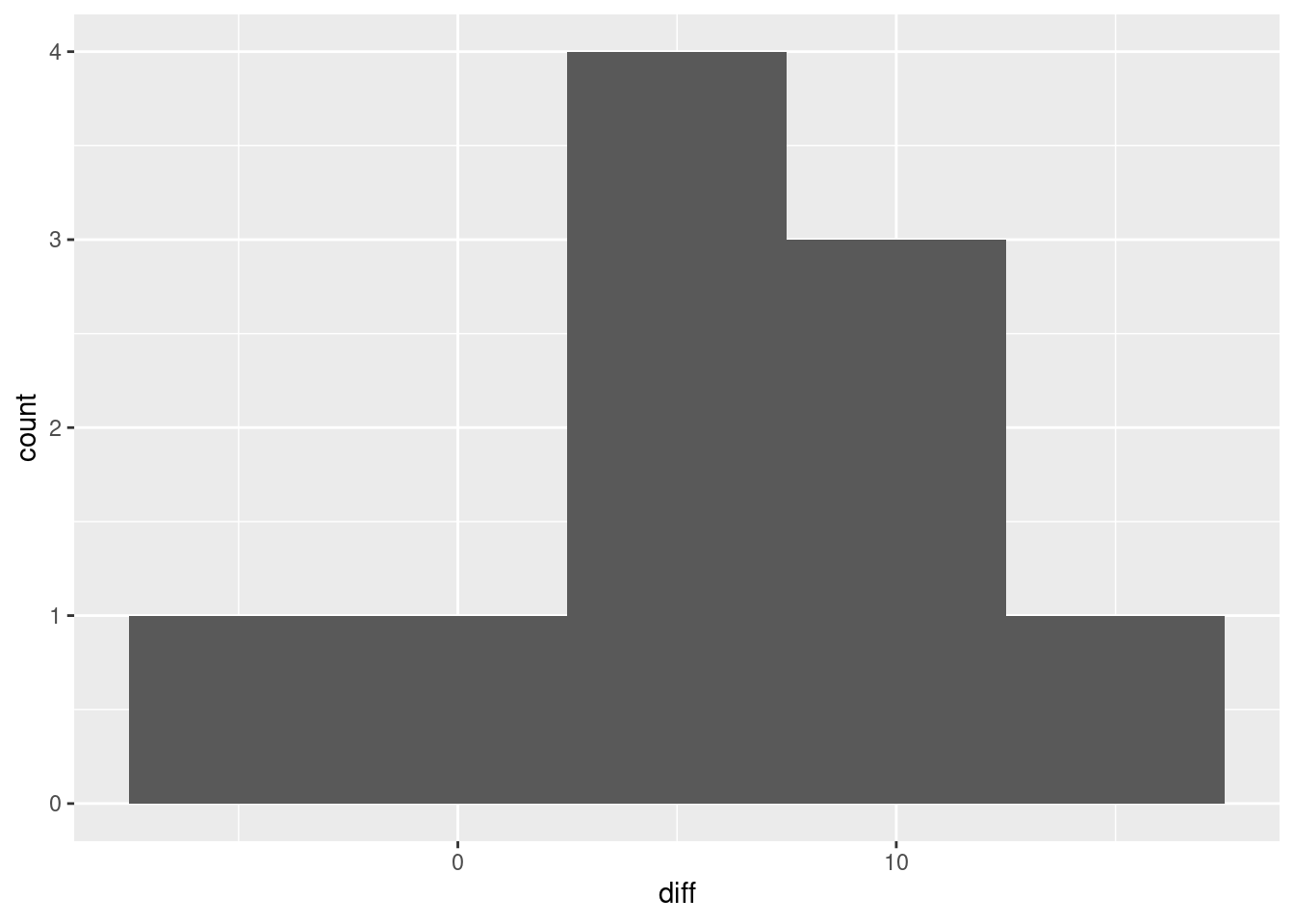

ℹ Use `spec()` to retrieve the full column specification for this data.