Chapter 30 Cluster analysis

Packages for this chapter:

30.1 Sites on the sea bed

Biologists investigate the prevalence of

species of organism by sampling sites where the organisms might be,

taking a “grab” from the site, and sending the grabs to a laboratory

for analysis. The data in this question come from the sea bed. There

were 30 sites, labelled s1 through s30. At each

site, five species of organism, labelled a through

e, were of interest; the data shown in those columns of the

data set were the number of organisms of that species identified in

the grab from that site. There are some other columns in the

(original) data set that will not concern us. Our interest is in

seeing which sites are similar to which other sites, so that a cluster

analysis will be suitable.

When the data are counts of different species, as they are here, biologists often measure the dissimilarity in species prevalence profiles between two sites using something called the Bray-Curtis dissimilarity. It is not important to understand this for this question (though I explain it in my solutions). I calculated the Bray-Curtis dissimilarity between each pair of sites and stored the results in link.

- Read in the dissimilarity data and check that you have 30 rows and 30 columns of dissimilarities.

Solution

##

## ── Column specification ──────────────────────────────────────────────────────────────────

## cols(

## .default = col_double()

## )

## ℹ Use `spec()` for the full column specifications.Check. The columns are labelled with

the site names. (As I originally set this question, the data file was

read in with read.csv instead, and the site names were read

in as row names as well: see discussion elsewhere about row names. But

in the tidyverse we don’t have row names.)

- Create a distance object out of your dissimilarities, bearing in mind that the values are distances (well, dissimilarities) already.

Solution

This one needs as.dist to convert already-distances into

a dist object. (dist would have

calculated distances from things that were not

distances/dissimilarities yet.)

If you check, you’ll see that the site names are being used to label rows and columns of the dissimilarity matrix as displayed. The lack of row names is not hurting us.

- Fit a cluster analysis using single-linkage, and display a dendrogram of the results.

Solution

This:

This is a base-graphics plot, it not having any of the nice

ggplot things. But it does the job.

Single-linkage tends to produce “stringy” clusters, since the individual being added to a cluster only needs to be close to one thing in the cluster. Here, that manifests itself in sites getting added to clusters one at a time: for example, sites 25 and 26 get joined together into a cluster, and then in sequence sites 6, 16, 27, 30 and 22 get joined on to it (rather than any of those sites being formed into clusters first).

You might

Conceivably. be wondering what else is in that

hclust object, and what it’s good for. Let’s take a look:

## List of 7

## $ merge : int [1:29, 1:2] -3 -25 -6 -9 -28 -16 -27 -1 -30 -24 ...

## $ height : num [1:29] 0.1 0.137 0.152 0.159 0.159 ...

## $ order : int [1:30] 21 14 29 23 15 1 19 18 2 7 ...

## $ labels : chr [1:30] "s1" "s2" "s3" "s4" ...

## $ method : chr "single"

## $ call : language hclust(d = d, method = "single")

## $ dist.method: NULL

## - attr(*, "class")= chr "hclust"You might guess that labels contains the names of the sites,

and you’d be correct. Of the other things, the most interesting are

merge and height. Let’s display them side by side:

## height

## [1,] 0.1000000 -3 -20

## [2,] 0.1369863 -25 -26

## [3,] 0.1523179 -6 2

## [4,] 0.1588785 -9 -12

## [5,] 0.1588785 -28 4

## [6,] 0.1617647 -16 3

## [7,] 0.1633987 -27 6

## [8,] 0.1692308 -1 -19

## [9,] 0.1807229 -30 7

## [10,] 0.1818182 -24 5

## [11,] 0.1956522 -5 10

## [12,] 0.2075472 -15 8

## [13,] 0.2083333 -14 -29

## [14,] 0.2121212 -7 11

## [15,] 0.2142857 -11 1

## [16,] 0.2149533 -2 14

## [17,] 0.2191781 -18 16

## [18,] 0.2205882 -22 9

## [19,] 0.2285714 17 18

## [20,] 0.2307692 12 19

## [21,] 0.2328767 -10 15

## [22,] 0.2558140 20 21

## [23,] 0.2658228 -23 22

## [24,] 0.2666667 13 23

## [25,] 0.3023256 -4 -13

## [26,] 0.3333333 24 25

## [27,] 0.3571429 -21 26

## [28,] 0.4285714 -8 -17

## [29,] 0.6363636 27 28height is the vertical scale of the dendrogram. The first

height is 0.1, and if you look at the bottom of the dendrogram, the

first sites to be joined together are sites 3 and 20 at height 0.1

(the horizontal bar joining sites 3 and 20 is what you are looking

for). In the last two columns, which came from merge, you see

what got joined together, with negative numbers meaning individuals

(individual sites), and positive numbers meaning clusters formed

earlier. So, if you look at the third line, at height 0.152, site 6

gets joined to the cluster formed on line 2, which (looking back) we

see consists of sites 25 and 26. Go back now to the dendrogram; about

\({3\over 4}\) of the way across, you’ll see sites 25 and 26 joined

together into a cluster, and a little higher up the page, site 6 joins

that cluster.

I said that single linkage produces stringy clusters, and the way that

shows up in merge is that you often get an individual site

(negative number) joined onto a previously-formed cluster (positive

number). This is in contrast to Ward’s method, below.

- Now fit a cluster analysis using Ward’s method, and display a dendrogram of the results.

Solution

Same thing, with small changes. The hard part is getting the name

of the method right:

The site numbers were a bit close together, so I printed them out

smaller than usual size (which is what the cex and a number

less than 1 is doing: 70% of normal size).

This is base-graphics code, which I learned a long time ago. There are a lot of options with weird names that are hard to remember, and that are sometimes inconsistent with each other. There is a package ggdendro that makes nice ggplot dendrograms, and another called dendextend that does all kinds of stuff with dendrograms. I decided that it wasn’t worth the trouble of teaching you (and therefore me) ggdendro, since the dendrograms look much the same.

This time, there is a greater tendency for sites to be joined into

small clusters first, then these small clusters are joined

together. It’s not perfect, but there is a greater tendency for it to

happen here.

This shows up in merge too:

## [,1] [,2]

## [1,] -3 -20

## [2,] -25 -26

## [3,] -9 -12

## [4,] -28 3

## [5,] -1 -19

## [6,] -6 2

## [7,] -14 -29

## [8,] -5 -7

## [9,] -18 -24

## [10,] -27 6

## [11,] -16 -22

## [12,] -2 4

## [13,] -30 10

## [14,] -15 5

## [15,] -23 8

## [16,] -4 -13

## [17,] -11 1

## [18,] 9 12

## [19,] -10 17

## [20,] -8 -17

## [21,] 11 13

## [22,] -21 15

## [23,] 7 22

## [24,] 14 19

## [25,] 16 24

## [26,] 18 21

## [27,] 20 23

## [28,] 26 27

## [29,] 25 28There are relatively few instances of a site being joined to a cluster of sites. Usually, individual sites get joined together (negative with a negative, mainly at the top of the list), or clusters get joined to clusters (positive with positive, mainly lower down the list).

- * On the Ward’s method clustering, how many clusters would you choose to divide the sites into? Draw rectangles around those clusters.

Solution

You may need to draw the plot again. In any case, a second line of code draws the rectangles. I think three clusters is good, but you can have a few more than that if you like:

What I want to see is a not-unreasonable choice of number of clusters (I think you could go up to about six), and then a depiction of that number of clusters on the plot. This is six clusters:

In all your plots, the cex is optional, but you can compare

the plots with it and without it and see which you prefer.

Looking at this, even seven clusters might work, but I doubt you’d want to go beyond that. The choice of the number of clusters is mainly an aesthetic This, I think, is the British spelling, with the North American one being esthetic. My spelling is where the aes in a ggplot comes from. decision.

- * The original data is in

link. Read in the

original data and verify that you again have 30 sites, variables

called

athrougheand some others.

Solution

Thus:

##

## ── Column specification ──────────────────────────────────────────────────────────────────

## cols(

## site = col_character(),

## a = col_double(),

## b = col_double(),

## c = col_double(),

## d = col_double(),

## e = col_double(),

## depth = col_double(),

## pollution = col_double(),

## temp = col_double(),

## sediment = col_character()

## )30 observations of 10 variables, including a through

e. Check.

I gave this a weird name so that it didn’t overwrite my original

seabed, the one I turned into a distance object, though I

don’t think I really needed to worry.

These data came from link, If you are a soccer fan, you might recognize BBVA as a former sponsor of the top Spanish soccer league, La Liga BBVA (as it was). BBVA is a Spanish bank that also has a Foundation that published this book. from which I also got the definition of the Bray-Curtis dissimilarity that I calculated for you. The data are in Exhibit 1.1 of that book.

- Go back to your Ward method dendrogram with the red rectangles and find two sites in the same cluster. Display the original data for your two sites and see if you can explain why they are in the same cluster. It doesn’t matter which two sites you choose; the grader will merely check that your results look reasonable.

Solution

I want my two sites to be very similar, so I’m looking at two sites

that were joined into a cluster very early on, sites s3 and

s20. As I said, I don’t mind which ones you pick, but being

in the same cluster will be easiest to justify if you pick sites

that were joined together early.

Then you need to display just those rows of the original data (that

you just read in), which is a filter with an “or” in it:

I think this odd-looking thing also works:

I’ll also take displaying the lines one at a time, though it is easier to compare them if they are next to each other.

Why are they in the same cluster? To be similar (that is, have a low

dissimilarity), the values of a through e should be

close together. Here, they certainly are: a and e

are both zero for both sites, and b, c and

d are around 10 for both sites. So I’d call that similar.

You will probably pick a different pair of sites, and thus your detailed discussion will differ from mine, but the general point of it should be the same: pick a pair of sites in the same cluster (1 mark), display those two rows of the original data (1 mark), some sensible discussion of how the sites are similar (1 mark). As long as you pick two sites in the same one of your clusters, I don’t mind which ones you pick. The grader will check that your two sites were indeed in the same one of your clusters, then will check that you do indeed display those two sites from the original data.

What happens if you pick sites from different clusters? Let’s pick two very dissimilar ones, sites 4 and 7 from opposite ends of my dendrogram:

Site s4 has no a or b at all, and site

s7 has quite a few; site s7 has no c at

all, while site s4 has a lot. These are very different sites.

Extra: now that you’ve seen what the original data look like, I should

explain how I got the Bray-Curtis dissimilarities. As I said, only the

counts of species a through e enter into the

calculation; the other variables have nothing to do with it.

Let’s simplify matters by pretending that we have only two species (we can call them A and B), and a vector like this:

which says that we have 10 organisms of species A and 3 of species B at a site. This is rather similar to this site:

but very different from this site:

The way you calculate the Bray-Curtis dissimilarity is to take the absolute difference of counts of organisms of each species:

## [1] 2 1and add those up:

## [1] 3and then divide by the total of all the frequencies:

## [1] 0.12The smaller this number is, the more similar the sites are. So you

might imagine that v1 and v3 would be more dissimilar:

## [1] 0.7and so it is. The scaling of the Bray-Curtis dissimilarity is that the smallest it can be is 0, if the frequencies of each of the species are exactly the same at the two sites, and the largest it can be is 1, if one site has only species A and the other has only species B. (I’ll demonstrate that in a moment.) You might imagine that we’ll be doing this calculation a lot, and so we should define a function to automate it. Hadley Wickham (in “R for Data Science”) says that you should copy and paste some code (as I did above) no more than twice; if you need to do it again, you should write a function instead. The thinking behind this is if you copy and paste and change something (like a variable name), you’ll need to make the change everywhere, and it’s so easy to miss one. So, my function is (copying and pasting my code from above into the body of the function, which is Wickham-approved since it’s only my second time):

Let’s test it on my made-up sites, making up one more:

## [1] 0.12## [1] 0.7## [1] 0## [1] 1These all check out. The first two are repeats of the ones we did

before. The third one says that if you calculate Bray-Curtis for two

sites with the exact same frequencies all the way along, you get the

minimum value of 0; the fourth one says that when site v3

only has species B and site v4 only has species A, you get

the maximum value of 1.

But note this:

## [1] 8 4## [1] 16 8## [1] 0.3333333You might say that v2 and 2*v2 are the same

distribution, and so they are, proportionately. But Bray-Curtis is

assessing whether the frequencies are the same (as opposed to

something like a chi-squared test that is assessing

proportionality).

You could make a table out of the sites and species, and use the test statistic from a chi-squared test as a measure of dissimilarity: the smallest it can be is zero, if the species counts are exactly proportional at the two sites. It doesn’t have an upper limit.

So far so good. Now we have to do this for the actual data. The first

issue

There are more issues. is that the data is some of the

row of the original data frame; specifically, it’s columns 2 through

6. For example, sites s3 and s20 of the original

data frame look like this:

and we don’t want to feed the whole of those into braycurtis,

just the second through sixth elements of them. So let’s write another

function that extracts the columns a through e of its

inputs for given rows, and passes those on to the braycurtis

that we wrote before. This is a little fiddly, but bear with me. The

input to the function is the data frame, then the two sites that we want:

First, though, what happens if filter site s3?

This is a one-row data frame, not a vector as our function expects. Do we need to worry about it? First, grab the right columns, so that we will know what our function has to do:

That leads us to this function, which is a bit repetitious, but for

two repeats I can handle it. I haven’t done anything about the fact

that x and y below are actually data frames:

braycurtis.spec <- function(d, i, j) {

d %>% filter(site == i) %>% select(a:e) -> x

d %>% filter(site == j) %>% select(a:e) -> y

braycurtis(x, y)

}The first time I did this, I had the filter and the

select in the opposite order, so I was neatly removing

the column I wanted to filter by before I did the

filter!

The first two lines pull out columns a through e of

(respectively) sites i and j.

If I were going to create more than two things like x and

y, I would have hived that off

into a separate function as well, but I didn’t.

Sites 3 and 20 were the two sites I chose before as being similar ones (in the same cluster). So the dissimilarity should be small:

## [1] 0.1and so it is. Is it about right? The c differ by 5, the

d differ by one, and the total frequency in both rows is

about 60, so the dissimilarity should be about \(6/60=0.1\), as it is

(exactly, in fact).

This, you will note, works. I think R has taken the attitude that it

can treat these one-row data frames as if they were vectors.

This is the cleaned-up version of my function. When I first wrote it,

I printed out x and y, so that I could

check that they were what I was expecting (they were).

I am a paid-up member of the print all the things school of debugging. You probably know how to do this better.

We have almost all the machinery we need. Now what we have to do is to

compare every site with every other site and compute the dissimilarity

between them. If you’re used to Python or another similar language,

you’ll recognize this as two loops, one inside the other. This can be done in R (and I’ll show you how), but I’d rather show you the Tidyverse way first.

The starting point is to make a vector containing all the sites, which is easier than you would guess:

## [1] "s1" "s2" "s3" "s4" "s5" "s6" "s7" "s8" "s9" "s10" "s11" "s12" "s13" "s14"

## [15] "s15" "s16" "s17" "s18" "s19" "s20" "s21" "s22" "s23" "s24" "s25" "s26" "s27" "s28"

## [29] "s29" "s30"Next, we need to make all possible pairs of sites, which we also know how to do:

Now, think about what to do in English first: “for each of the sites in site1, and for each of the sites in site2, taken in parallel, work out the Bray-Curtis distance.” This is, I hope,

making you think of rowwise:

(you might notice that this takes a noticeable time to run.)

This is a “long” data frame, but for the cluster analysis, we need a wide one with sites in rows and columns, so let’s create that:

That’s the data frame I shared with you.

The more Python-like way of doing it is a loop inside a loop. This

works in R, but it has more housekeeping and a few possibly unfamiliar

ideas. We are going to work with a matrix, and we access

elements of a matrix with two numbers inside square brackets, a row

number and a column number. We also have to initialize our matrix that

we’re going to fill with Bray-Curtis distances; I’ll fill it with \(-1\)

values, so that if any are left at the end, I’ll know I missed

something.

m <- matrix(-1, 30, 30)

for (i in 1:30) {

for (j in 1:30) {

m[i, j] <- braycurtis.spec(seabed.z, sites[i], sites[j])

}

}

rownames(m) <- sites

colnames(m) <- sites

head(m)## s1 s2 s3 s4 s5 s6 s7 s8

## s1 0.0000000 0.4567901 0.2962963 0.4666667 0.4769231 0.5221239 0.4545455 0.9333333

## s2 0.4567901 0.0000000 0.4814815 0.5555556 0.3478261 0.2285714 0.4146341 0.9298246

## s3 0.2962963 0.4814815 0.0000000 0.4666667 0.5076923 0.5221239 0.4909091 1.0000000

## s4 0.4666667 0.5555556 0.4666667 0.0000000 0.7857143 0.6923077 0.8695652 1.0000000

## s5 0.4769231 0.3478261 0.5076923 0.7857143 0.0000000 0.4193548 0.2121212 0.8536585

## s6 0.5221239 0.2285714 0.5221239 0.6923077 0.4193548 0.0000000 0.5087719 0.9325843

## s9 s10 s11 s12 s13 s14 s15 s16

## s1 0.3333333 0.4029851 0.3571429 0.3750000 0.5769231 0.6326531 0.2075472 0.8571429

## s2 0.2222222 0.4468085 0.5662651 0.2149533 0.6708861 0.4210526 0.3750000 0.4720000

## s3 0.4074074 0.3432836 0.2142857 0.3250000 0.6538462 0.6734694 0.3584906 0.7346939

## s4 0.6388889 0.3793103 0.5319149 0.5492958 0.3023256 0.8500000 0.4090909 0.9325843

## s5 0.1956522 0.5641026 0.3731343 0.3186813 0.7142857 0.2666667 0.4687500 0.5045872

## s6 0.2428571 0.5714286 0.5304348 0.2374101 0.6756757 0.5925926 0.5357143 0.2484076

## s17 s18 s19 s20 s21 s22 s23 s24

## s1 1.0000000 0.5689655 0.1692308 0.3333333 0.7333333 0.7346939 0.4411765 0.5714286

## s2 0.8620690 0.3146853 0.3695652 0.4022989 0.6666667 0.3760000 0.5368421 0.2432432

## s3 1.0000000 0.5344828 0.3230769 0.1000000 0.8222222 0.6326531 0.5294118 0.3809524

## s4 1.0000000 0.6635514 0.4642857 0.3333333 0.8333333 0.9325843 0.8644068 0.5200000

## s5 0.8095238 0.5118110 0.3947368 0.5211268 0.3571429 0.3761468 0.2658228 0.4105263

## s6 0.9111111 0.2571429 0.3870968 0.4621849 0.6730769 0.2993631 0.4488189 0.3006993

## s25 s26 s27 s28 s29 s30

## s1 0.7037037 0.6956522 0.6363636 0.3250000 0.4339623 0.6071429

## s2 0.3925926 0.3277311 0.3809524 0.2149533 0.3500000 0.3669065

## s3 0.6666667 0.6086957 0.6363636 0.5000000 0.4339623 0.5892857

## s4 0.9393939 0.9277108 0.9333333 0.5774648 0.5454545 0.8446602

## s5 0.5294118 0.4174757 0.3818182 0.3186813 0.3125000 0.4796748

## s6 0.1856287 0.1523179 0.2151899 0.2949640 0.5357143 0.2163743Because my loops work with site numbers and my function works with site names, I have to remember to refer to the site names when I call my function. I also have to supply row and column names (the site names).

That looks all right. Are all my Bray-Curtis distances between 0 and 1? I can smoosh my matrix into a vector and summarize it:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 0.0000 0.3571 0.5023 0.5235 0.6731 1.0000All the dissimilarities are correctly between 0 and 1. We can also check the one we did before:

or

## [1] 0.1Check.

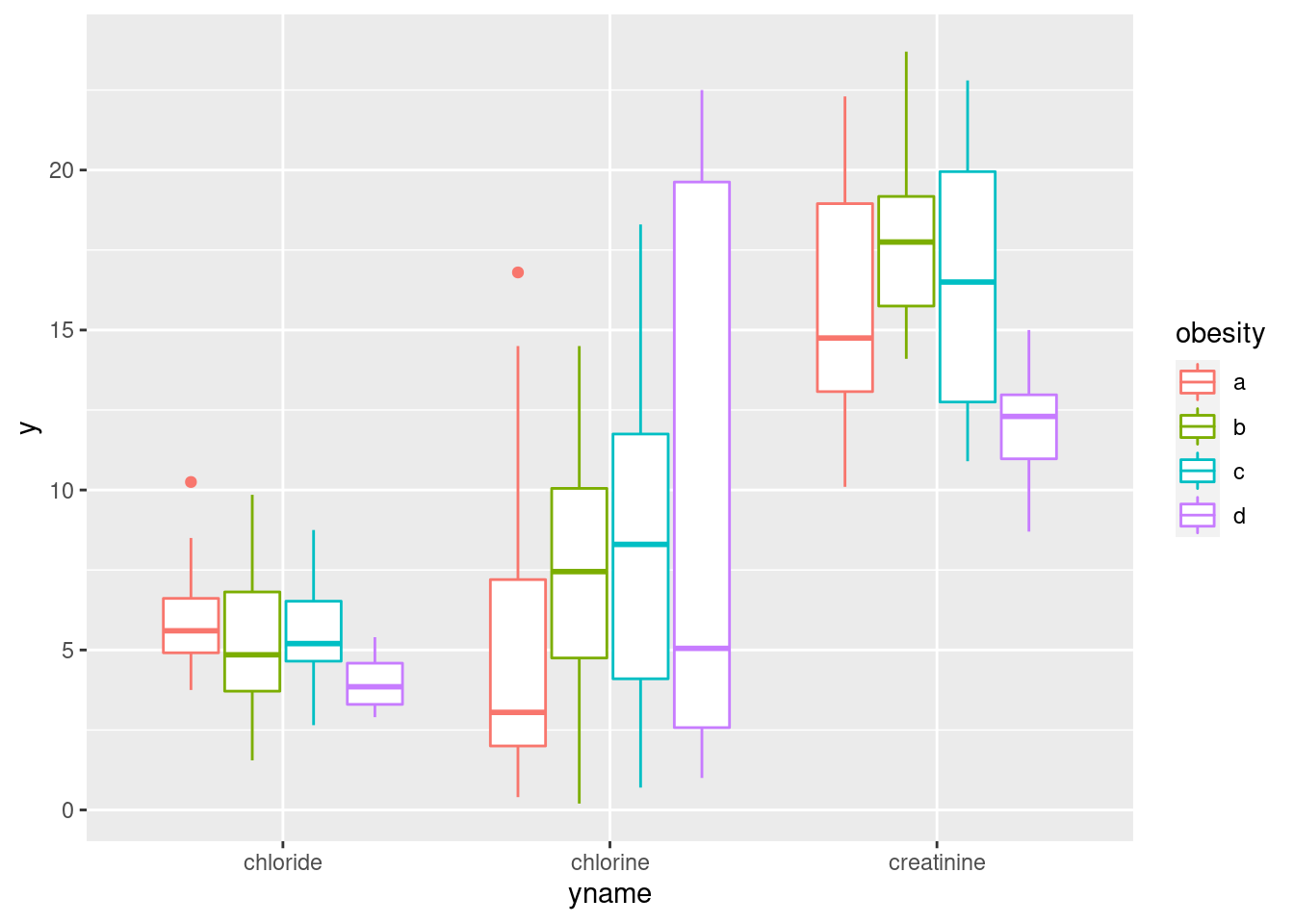

- Obtain the cluster memberships for each site, for your

preferred number of clusters from part (here). Add a

column to the original data that you read in, in part

(here), containing those cluster memberships, . Obtain a plot that will enable you to assess the

relationship between those clusters and

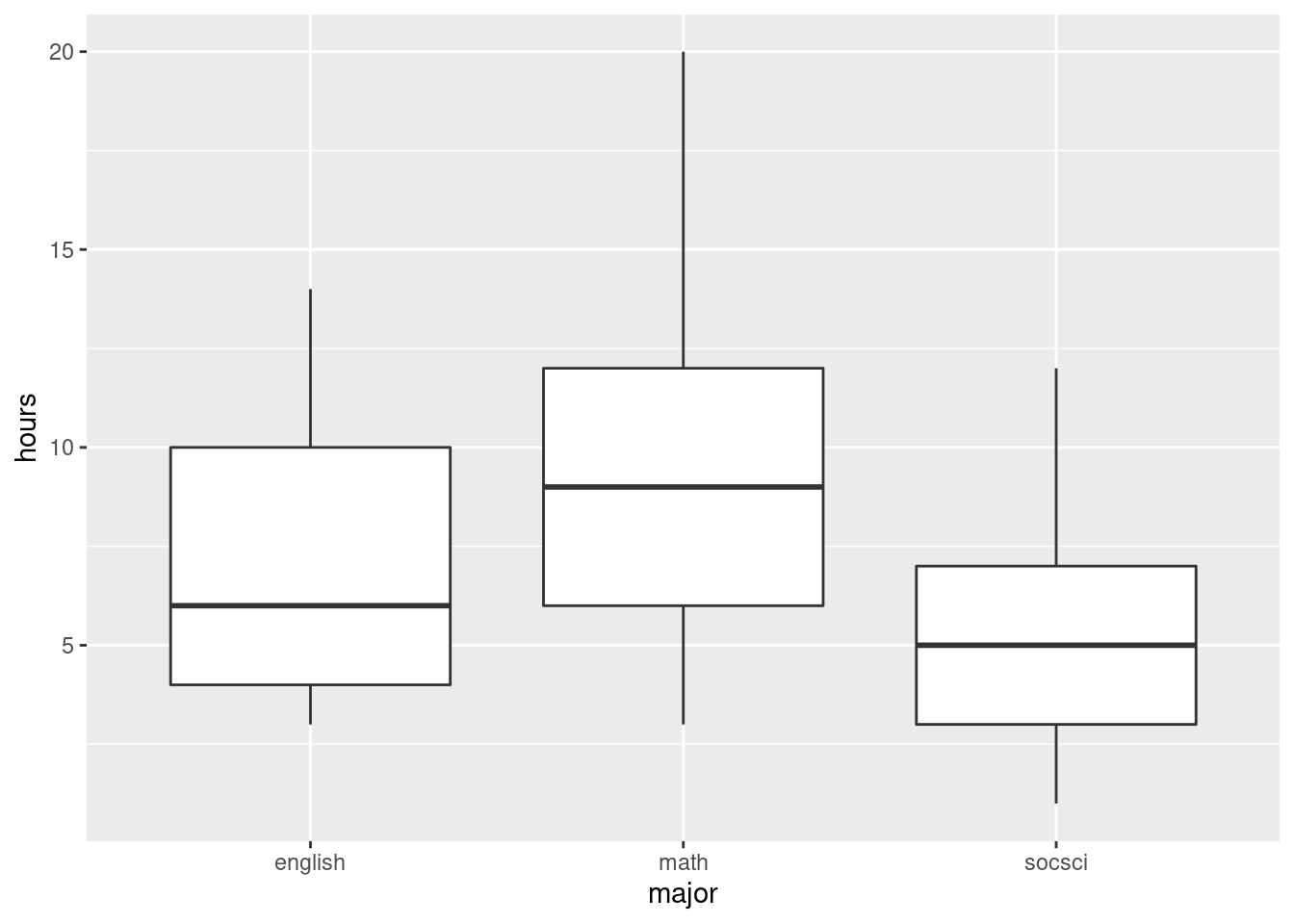

pollution. (Once you have the cluster memberships, you can add them to the data frame and make the graph using a pipe.) What do you see?

Solution

Start by getting the clusters with cutree. I’m going with 3

clusters, though you can use the number of clusters you chose

before. (This is again making the grader’s life a misery, but her

instructions from me are to check that you have done something

reasonable, with the actual answer being less important.)

## s1 s2 s3 s4 s5 s6 s7 s8 s9 s10 s11 s12 s13 s14 s15 s16 s17 s18 s19 s20 s21 s22

## 1 2 1 1 3 2 3 3 2 1 1 2 1 3 1 2 3 2 1 1 3 2

## s23 s24 s25 s26 s27 s28 s29 s30

## 3 2 2 2 2 2 3 2Now, we add that to the original data, the data frame I called

seabed.z, and make a plot. The best one is a boxplot:

seabed.z %>%

mutate(cluster = factor(cluster)) %>%

ggplot(aes(x = cluster, y = pollution)) + geom_boxplot()

The clusters differ substantially in terms of the amount of pollution, with my cluster 1 being highest and my cluster 2 being lowest. (Cluster 3 has a low outlier.)

Any sensible plot will do here. I think boxplots are the best, but you could also do something like vertically-faceted histograms:

seabed.z %>%

mutate(cluster = factor(cluster)) %>%

ggplot(aes(x = pollution)) + geom_histogram(bins = 8) +

facet_grid(cluster ~ .)

which to my mind doesn’t show the differences as dramatically. (The bins are determined from all the data together, so that each facet actually has fewer than 8 bins. You can see where the bins would be if they had any data in them.)

Here’s how 5 clusters looks:

## s1 s2 s3 s4 s5 s6 s7 s8 s9 s10 s11 s12 s13 s14 s15 s16 s17 s18 s19 s20 s21 s22

## 1 2 1 1 3 4 3 5 2 1 1 2 1 3 1 4 5 2 1 1 3 4

## s23 s24 s25 s26 s27 s28 s29 s30

## 3 2 4 4 4 2 3 4seabed.z %>%

mutate(cluster = factor(cluster)) %>%

ggplot(aes(x = cluster, y = pollution)) + geom_boxplot()

This time, the picture isn’t quite so clear-cut, but clusters 1 and 5 are the highest in terms of pollution and cluster 4 is the lowest. I’m guessing that whatever number of clusters you choose, you’ll see some differences in terms of pollution.

What is interesting is that pollution had nothing to

do with the original formation of the clusters: that was based only on

which species were found at each site. So, what we have shown here is that

the amount of pollution has some association with what species are found at a

site.

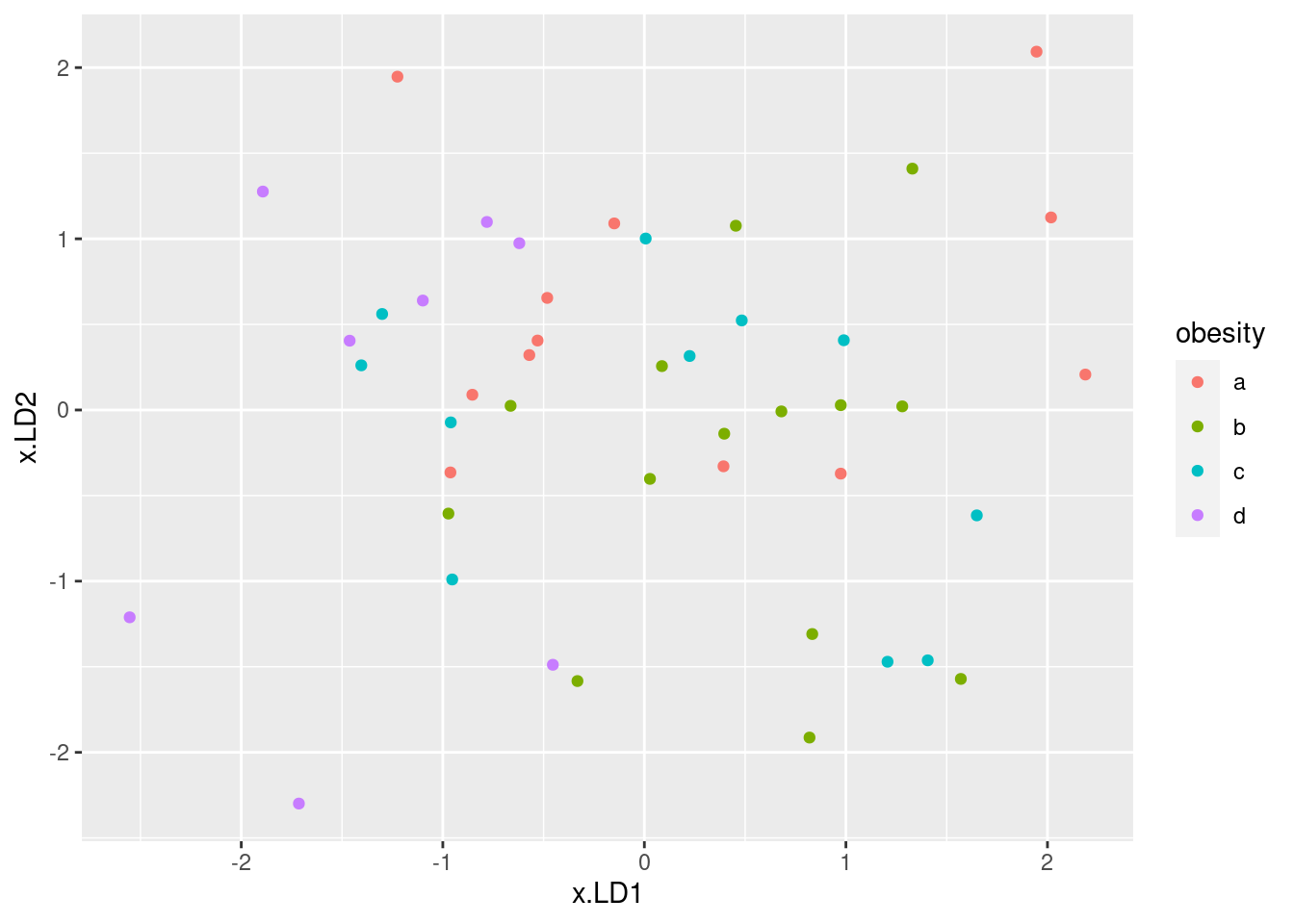

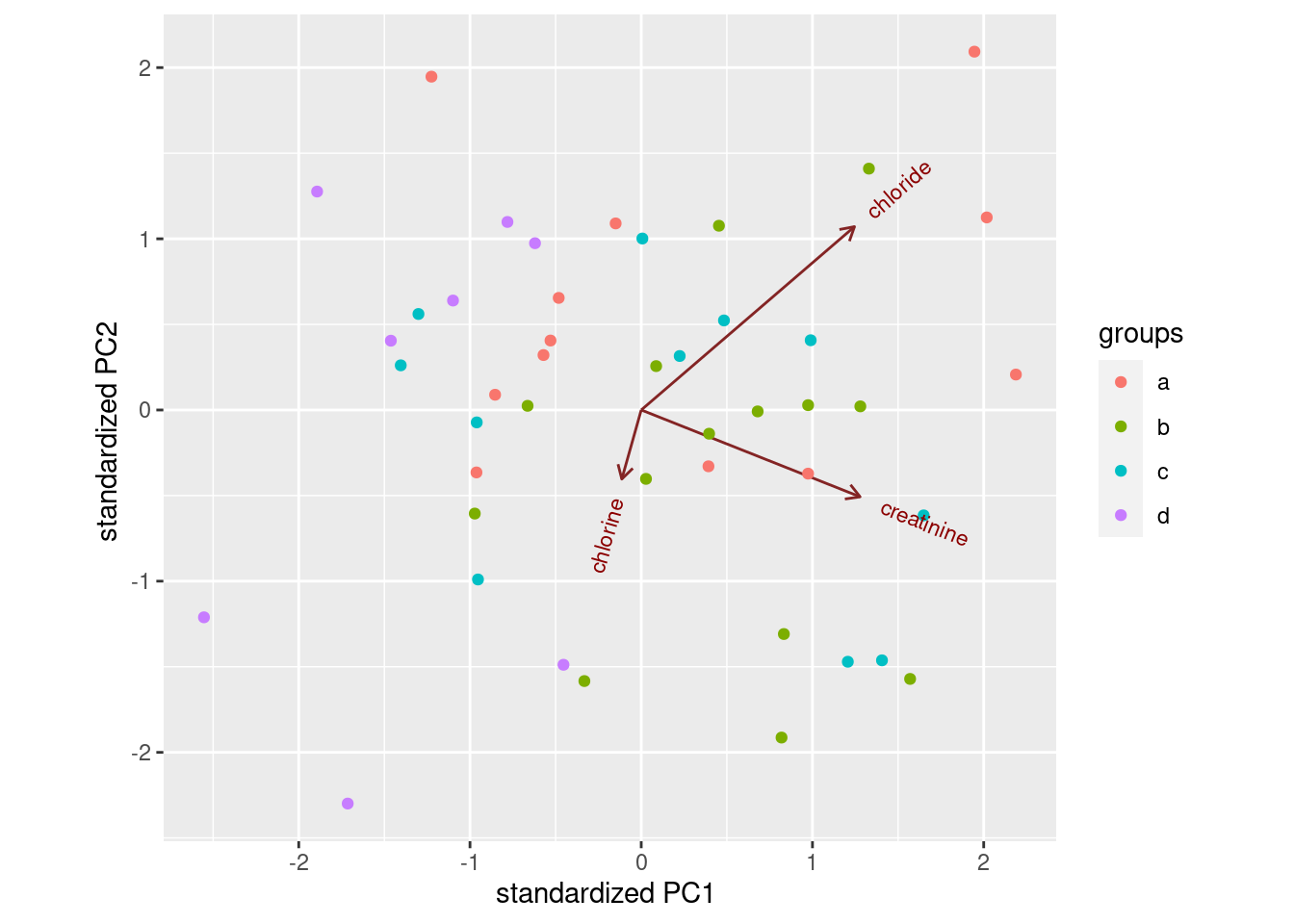

A way to go on with this is to use the clusters as “known groups”

and predict the cluster membership from depth,

pollution and temp using a discriminant

analysis. Then you could plot the sites, colour-coded by what cluster

they were in, and even though you had three variables, you could plot

it in two dimensions (or maybe even one dimension, depending how many

LD’s were important).

30.2 Dissimilarities between fruits

Consider the fruits apple, orange, banana, pear, strawberry, blueberry. We are going to work with these four properties of fruits:

has a round shape

Is sweet

Is crunchy

Is a berry

- Make a table with fruits as columns, and with rows “round shape”, “sweet”, “crunchy”, “berry”. In each cell of the table, put a 1 if the fruit has the property named in the row, and a 0 if it does not. (This is your opinion, and may not agree with mine. That doesn’t matter, as long as you follow through with whatever your choices were.)

Solution

Something akin to this:

Fruit Apple Orange Banana Pear Strawberry Blueberry

Round shape 1 1 0 0 0 1

Sweet 1 1 0 0 1 0

Crunchy 1 0 0 1 0 0

Berry 0 0 0 0 1 1

You’ll have to make a choice about “crunchy”. I usually eat pears before they’re fully ripe, so to me, they’re crunchy.

- We’ll define the dissimilarity between two fruits to be the number of qualities they disagree on. Thus, for example, the dissimilarity between Apple and Orange is 1 (an apple is crunchy and an orange is not, but they agree on everything else). Calculate the dissimilarity between each pair of fruits, and make a square table that summarizes the results. (To save yourself some work, note that the dissimilarity between a fruit and itself must be zero, and the dissimilarity between fruits A and B is the same as that between B and A.) Save your table of dissimilarities into a file for the next part.

Solution

I got this, by counting them:

Fruit Apple Orange Banana Pear Strawberry Blueberry

Apple 0 1 3 2 3 3

Orange 1 0 2 3 2 2

Banana 3 2 0 1 2 2

Pear 2 3 1 0 3 3

Strawberry 3 2 2 3 0 2

Blueberry 3 2 2 3 2 0

I copied this into a file fruits.txt. Note that (i) I

have aligned my columns, so that I will be able to use

read_table later, and (ii) I have given the first column

a name, since read_table wants the same number of column

names as columns.

Extra: yes, you can do this in R too. We’ve seen some of the tricks before.

Let’s start by reading in my table of fruits and properties, which I saved in link:

##

## ── Column specification ──────────────────────────────────────────────────────────────────

## cols(

## Property = col_character(),

## Apple = col_double(),

## Orange = col_double(),

## Banana = col_double(),

## Pear = col_double(),

## Strawberry = col_double(),

## Blueberry = col_double()

## )We don’t need the first column, so we’ll get rid of it:

The loop way is the most direct. We’re going to be looking at

combinations of fruits and other fruits, so we’ll need two loops one

inside the other. It’s easier for this to work with column numbers,

which here are 1 through 6, and we’ll make a matrix m with

the dissimilarities in it, which we have to initialize first. I’ll

initialize it to a \(6\times 6\) matrix of -1, since the final

dissimilarities are 0 or bigger, and this way I’ll know if I forgot

anything.

Here’s where we are at so far:

fruit_m <- matrix(-1, 6, 6)

for (i in 1:6) {

for (j in 1:6) {

fruit_m[i, j] <- 3 # dissim between fruit i and fruit j

}

}This, of course, doesn’t run yet. The sticking point is how to calculate the dissimilarity between two columns. I think that is a separate thought process that should be in a function of its own. The inputs are the two column numbers, and a data frame to get those columns from:

dissim <- function(i, j, d) {

x <- d %>% select(i)

y <- d %>% select(j)

sum(x != y)

}

dissim(1, 2, fruit2)## Note: Using an external vector in selections is ambiguous.

## ℹ Use `all_of(i)` instead of `i` to silence this message.

## ℹ See <https://tidyselect.r-lib.org/reference/faq-external-vector.html>.

## This message is displayed once per session.## Note: Using an external vector in selections is ambiguous.

## ℹ Use `all_of(j)` instead of `j` to silence this message.

## ℹ See <https://tidyselect.r-lib.org/reference/faq-external-vector.html>.

## This message is displayed once per session.## [1] 1Apple and orange differ by one (not being crunchy). The process is:

grab the \(i\)-th column and call it x, grab the \(j\)-th column

and call it y. These are two one-column data frames with four

rows each (the four properties). x!=y goes down the rows, and

for each one gives a TRUE if they’re different and a

FALSE if they’re the same. So x!=y is a collection

of four T-or-F values. This seems backwards, but I was thinking of

what we want to do: we want to count the number of different

ones. Numerically, TRUE counts as 1 and FALSE as 0,

so we should make the thing we’re counting (the different ones) come

out as TRUE. To count the number of TRUEs (1s), add

them up.

That was a complicated thought process, so it was probably wise to write a function to do it. Now, in our loop, we only have to call the function (having put some thought into getting it right):

fruit_m <- matrix(-1, 6, 6)

for (i in 1:6) {

for (j in 1:6) {

fruit_m[i, j] <- dissim(i, j, fruit2)

}

}

fruit_m## [,1] [,2] [,3] [,4] [,5] [,6]

## [1,] 0 1 3 2 3 3

## [2,] 1 0 2 3 2 2

## [3,] 3 2 0 1 2 2

## [4,] 2 3 1 0 3 3

## [5,] 3 2 2 3 0 2

## [6,] 3 2 2 3 2 0The last step is re-associate the fruit names with this matrix. This

is a matrix so it has a rownames and a

colnames. We set both of those, but first we have to get the

fruit names from fruit2:

fruit_names <- names(fruit2)

rownames(fruit_m) <- fruit_names

colnames(fruit_m) <- fruit_names

fruit_m## Apple Orange Banana Pear Strawberry Blueberry

## Apple 0 1 3 2 3 3

## Orange 1 0 2 3 2 2

## Banana 3 2 0 1 2 2

## Pear 2 3 1 0 3 3

## Strawberry 3 2 2 3 0 2

## Blueberry 3 2 2 3 2 0This is good to go into the cluster analysis (happening later).

There is a tidyverse way to do this also. It’s actually a lot

like the loop way in its conception, but the coding looks

different. We start by making all combinations of the fruit names with

each other, which is crossing:

Now, we want a function that, given any two fruit names, works out the dissimilarity between them. A happy coincidence is that we can use the function we had before, unmodified! How? Take a look:

dissim <- function(i, j, d) {

x <- d %>% select(i)

y <- d %>% select(j)

sum(x != y)

}

dissim("Apple", "Orange", fruit2)## [1] 1select can take a column number or a column name, so

that running it with column names gives the right answer.

Now, we want to run this function for each of the pairs in

combos. This is rowwise, since our function takes only one fruit and one other fruit at a time, not all of them at once:

This would work just as well using fruit1, with the column of properties, rather than

fruit2, since we are picking out the columns by name rather

than number.

To make this into something we can turn into a dist object

later, we need to pivot-wider the column other to make a

square array:

combos %>%

rowwise() %>%

mutate(dissim = dissim(fruit, other, fruit2)) %>%

pivot_wider(names_from = other, values_from = dissim) -> fruit_spread

fruit_spreadDone!

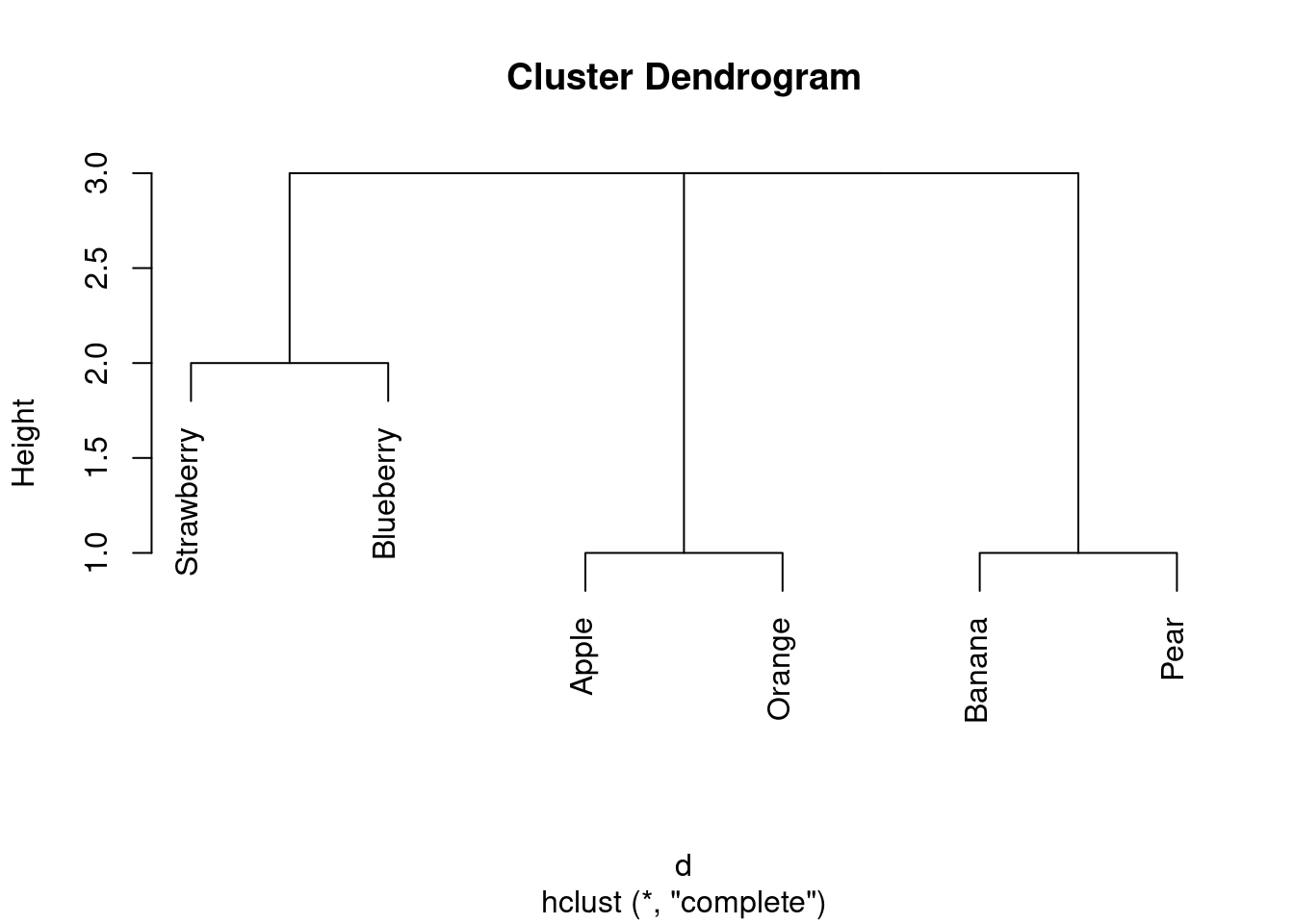

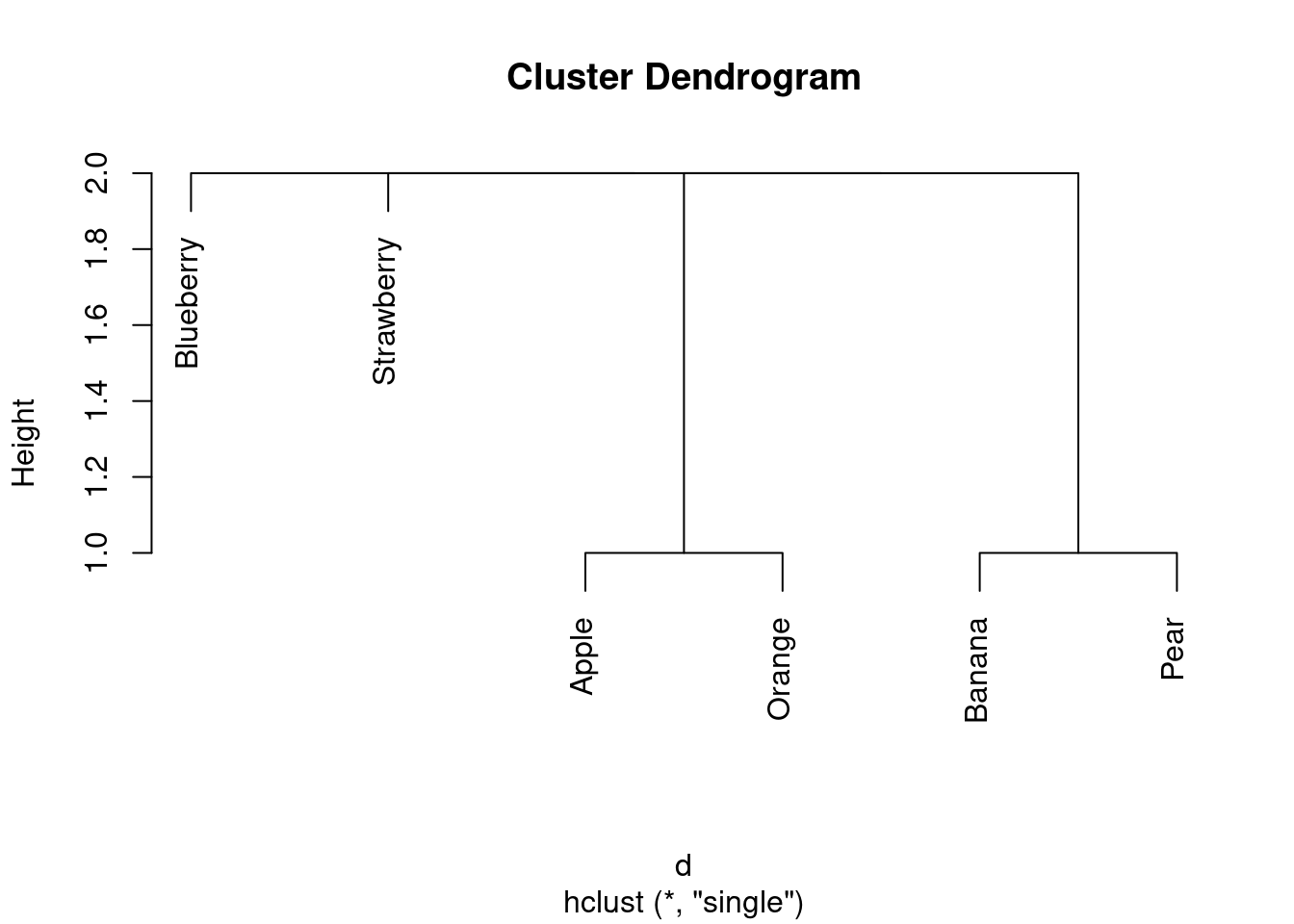

- Do a hierarchical cluster analysis using complete linkage. Display your dendrogram.

Solution

First, we need to take one of our matrices of dissimilarities

and turn it into a dist object. Since I asked you to

save yours into a file, let’s start from there. Mine is aligned

columns:

##

## ── Column specification ──────────────────────────────────────────────────────────────────

## cols(

## fruit = col_character(),

## Apple = col_double(),

## Orange = col_double(),

## Banana = col_double(),

## Pear = col_double(),

## Strawberry = col_double(),

## Blueberry = col_double()

## )Then turn it into a dist object. The first step is to take

off the first column, since as.dist can get the names from

the columns:

## Apple Orange Banana Pear Strawberry

## Orange 1

## Banana 3 2

## Pear 2 3 1

## Strawberry 3 2 2 3

## Blueberry 3 2 2 3 2If you forget to take off the first column, this happens:

## Warning in storage.mode(m) <- "numeric": NAs introduced by coercion## Warning in as.dist.default(dissims): non-square matrix## Error in dimnames(df) <- if (is.null(labels)) list(seq_len(size), seq_len(size)) else list(labels, : length of 'dimnames' [1] not equal to array extentThe key thing here is “non-square matrix”: you have one more column than you have rows, since you have a column of fruit names.

This one is as.dist since you already have dissimilarities

and you want to arrange them into the right type of

thing. dist is for calculating dissimilarities, which

we did before, so we don’t want to do that now.

Now, after all that work, the actual cluster analysis and dendrogram:

- How many clusters, of what fruits, do you seem to have? Explain briefly.

Solution

I reckon I have three clusters: strawberry and blueberry in one, apple and orange in the second, and banana and pear in the third. (If your dissimilarities were different from mine, your dendrogram will be different also.)

- Pick a pair of clusters (with at least 2 fruits in each) from your dendrogram. Verify that the complete-linkage distance on your dendrogram is correct.

Solution

I’ll pick strawberry-blueberry and and apple-orange. I’ll arrange the dissimilarities like this:

apple orange

strawberry 3 2

blueberry 3 2

The largest of those is 3, so that’s the complete-linkage

distance. That’s also what the dendrogram says.

(Likewise, the smallest of those is 2, so 2 is the

single-linkage distance.) That is to say, the largest distance or

dissimilarity

from anything in one cluster to anything in the other is 3, and

the smallest is 2.

I don’t mind which pair of clusters you take, as long as you spell

out the dissimilarity (distance) between each fruit in each

cluster, and take the maximum of those. Besides, if your

dissimilarities are different from mine, your complete-linkage

distance could be different from mine also. The grader will have

to use her judgement!

That’s two cups of coffee I owe the grader now.

The important point is that you assess the dissimilarities between

fruits in one cluster and fruits in the other. The dissimilarities

between fruits in the same cluster don’t enter into it xxx.

I now have a mental image of John Cleese saying it don’t enter into it in the infamous Dead Parrot sketch, https://www.youtube.com/watch?v=vnciwwsvNcc. Not to mention How to defend yourself against an assailant armed with fresh fruit, https://www.youtube.com/watch?v=4JgbOkLdRaE.

As it happens, all my complete-linkage distances between clusters

(of at least 2 fruits) are 3. The single-linkage ones are

different, though:

All the single-linkage cluster distances are 2. (OK, so this wasn’t a very interesting example, but I wanted to give you one where you could calculate what was going on.)

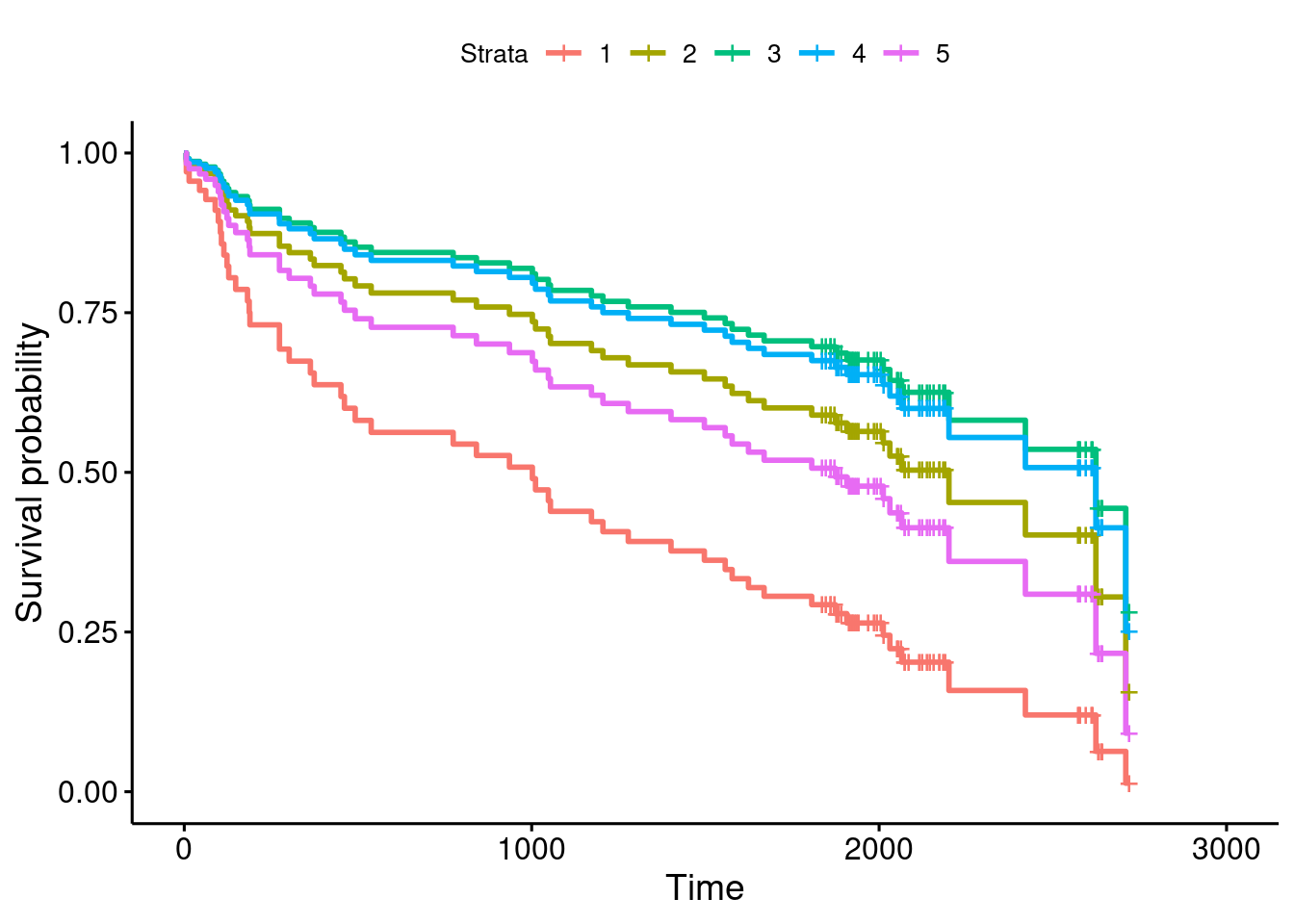

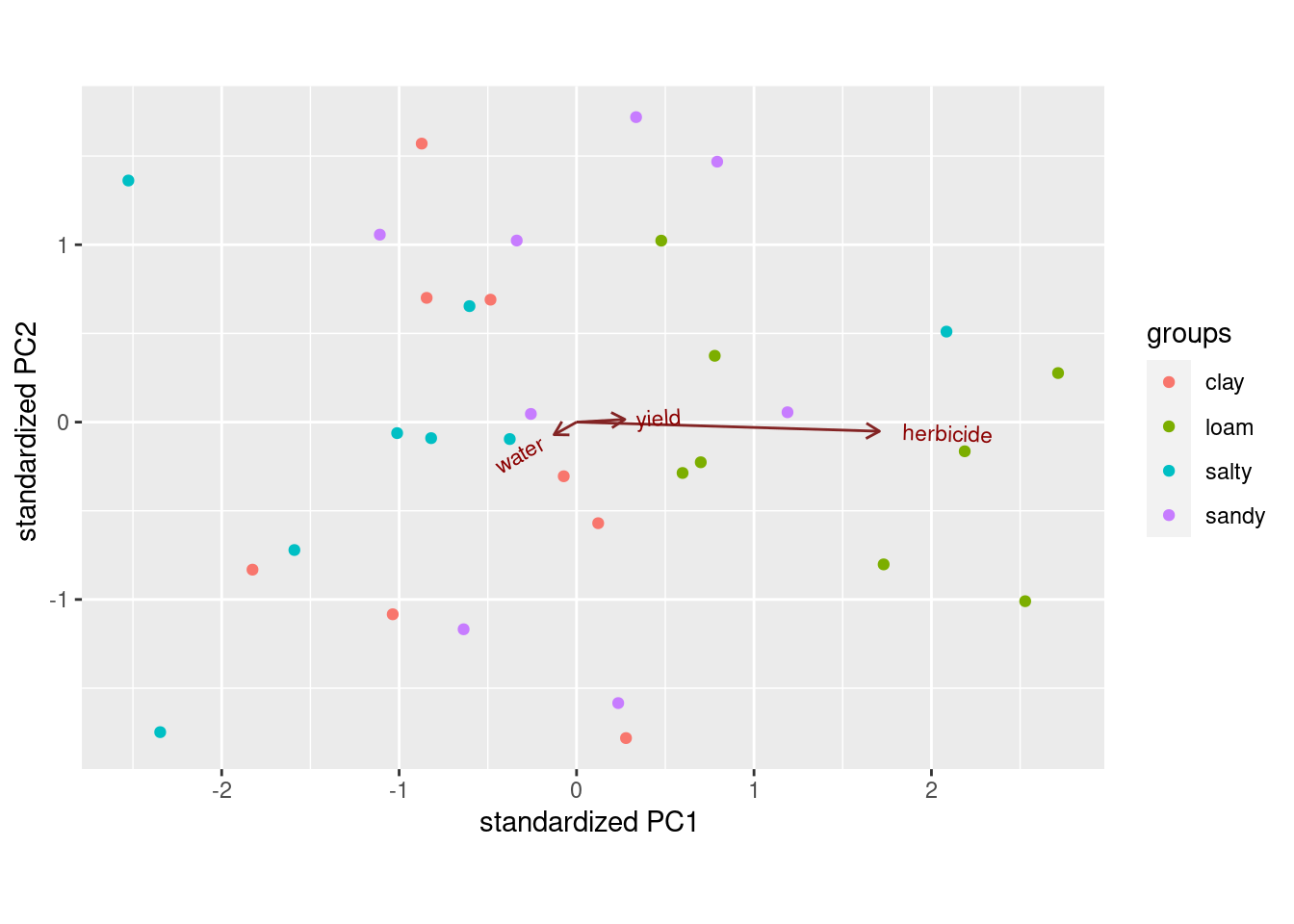

30.3 Similarity of species

Two scientists assessed the dissimilarity between a number of species by recording the number of positions in the protein molecule cytochrome-\(c\) where the two species being compared have different amino acids. The dissimilarities that they recorded are in link.

- Read the data into a data frame and take a look at it.

Solution

Nothing much new here:

##

## ── Column specification ──────────────────────────────────────────────────────────────────

## cols(

## what = col_character(),

## Man = col_double(),

## Monkey = col_double(),

## Horse = col_double(),

## Pig = col_double(),

## Pigeon = col_double(),

## Tuna = col_double(),

## Mould = col_double(),

## Fungus = col_double()

## )This is a square array of dissimilarities between the eight species.

The data set came from the 1960s, hence the use of “Man” rather than

“human”. It probably also came from the UK, judging by the spelling

of Mould.

(I gave the first column the name what so that you could

safely use species for the whole data frame.)

- Bearing in mind that the values you read in are

already dissimilarities, convert them into a

distobject suitable for running a cluster analysis on, and display the results. (Note that you need to get rid of any columns that don’t contain numbers.)

Solution

The point here is that the values you have are already

dissimilarities, so no conversion of the numbers is required. Thus

this is a job for as.dist, which merely changes how it

looks. Use a pipeline to get rid of the first column first:

## Man Monkey Horse Pig Pigeon Tuna Mould

## Monkey 1

## Horse 17 16

## Pig 13 12 5

## Pigeon 16 15 16 13

## Tuna 31 32 27 25 27

## Mould 63 62 64 64 59 72

## Fungus 66 65 68 67 66 69 61This doesn’t display anything that it doesn’t need to: we know that the dissimilarity between a species and itself is zero (no need to show that), and that the dissimilarity between B and A is the same as between A and B, so no need to show everything twice. It might look as if you are missing a row and a column, but one of the species (Fungus) appears only in a row and one of them (Man) only in a column.

This also works, to select only the numerical columns:

## Man Monkey Horse Pig Pigeon Tuna Mould

## Monkey 1

## Horse 17 16

## Pig 13 12 5

## Pigeon 16 15 16 13

## Tuna 31 32 27 25 27

## Mould 63 62 64 64 59 72

## Fungus 66 65 68 67 66 69 61Extra: data frames officially have an attribute called “row names”,

that is displayed where the row numbers display, but which isn’t

actually a column of the data frame. In the past, when we used

read.table with a dot, the first column of data read in from

the file could be nameless (that is, you could have one more column of

data than you had column names) and the first column would be treated

as row names. People used row names for things like identifier

variables. But row names have this sort of half-existence, and when

Hadley Wickham designed the tidyverse, he decided not to use

row names, taking the attitude that if it’s part of the data, it

should be in the data frame as a genuine column. This means that when

you use a read_ function, you have to have exactly as many

column names as columns.

For these data, I previously had the column here called

what as row names, and as.dist automatically got rid

of the row names when formatting the distances. Now, it’s a

genuine column, so I have to get rid of it before running

as.dist. This is more work, but it’s also more honest, and

doesn’t involve thinking about row names at all. So, on balance, I

think it’s a win.

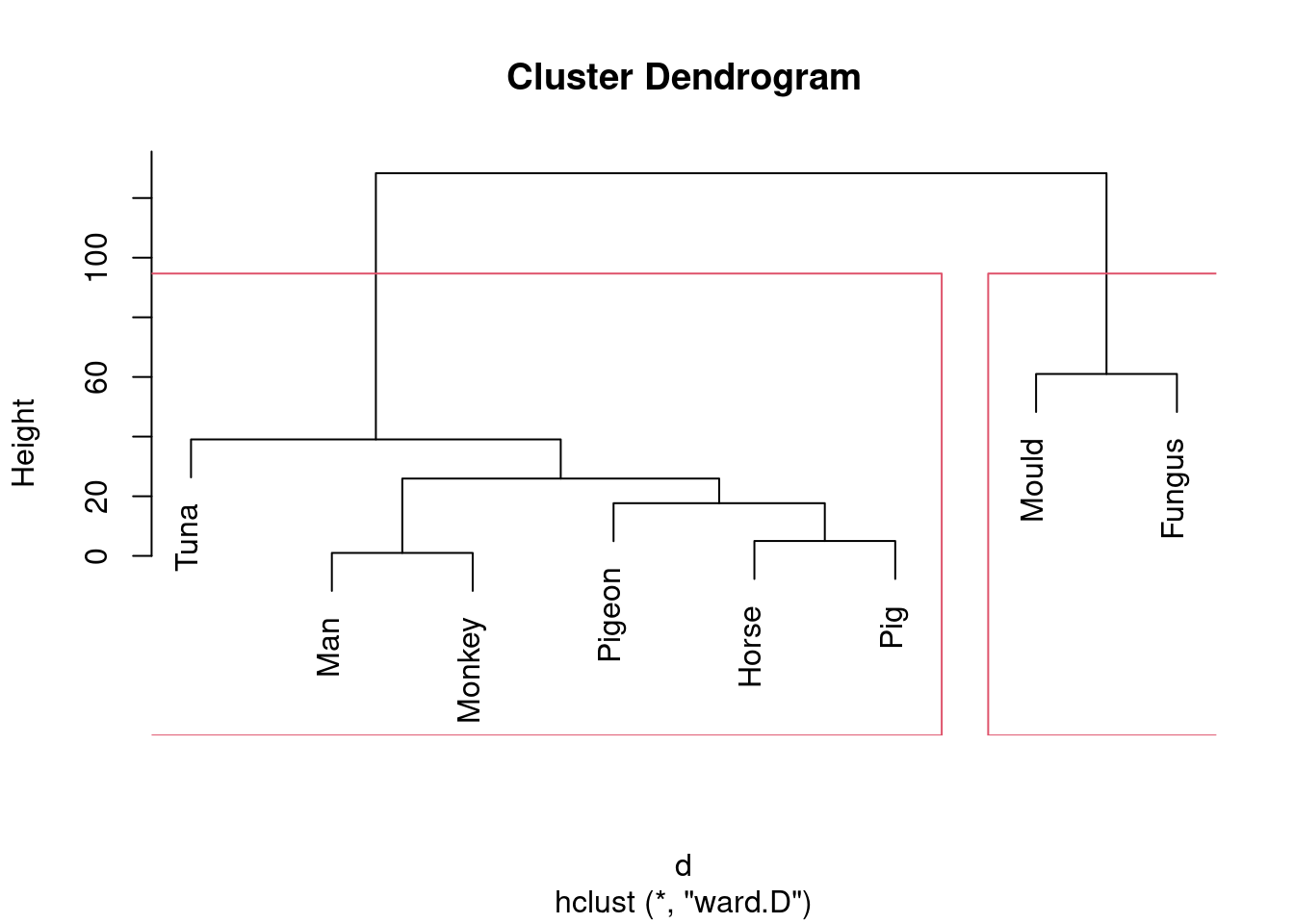

- Run a cluster analysis using single-linkage and obtain a dendrogram.

Solution

Something like this:

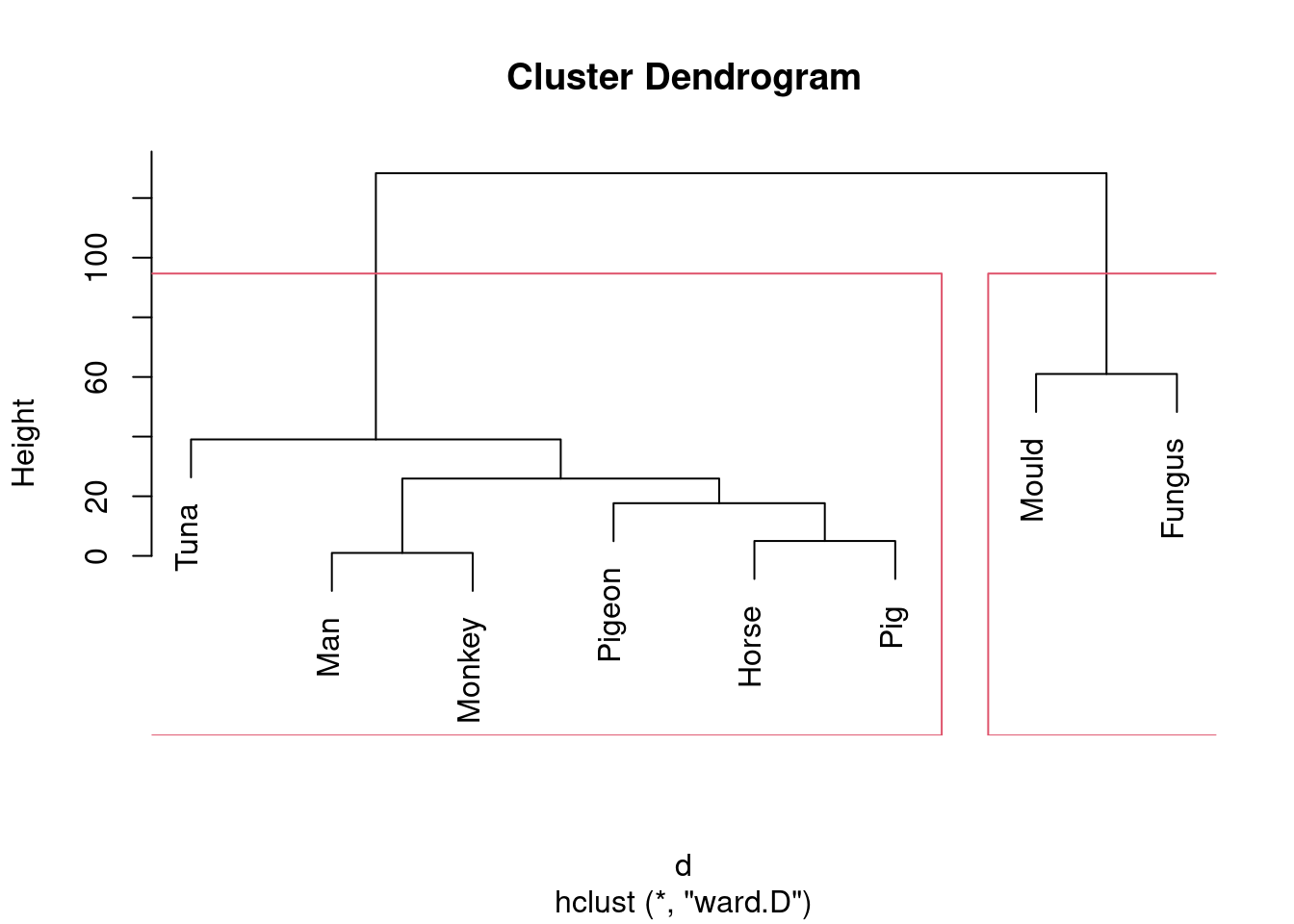

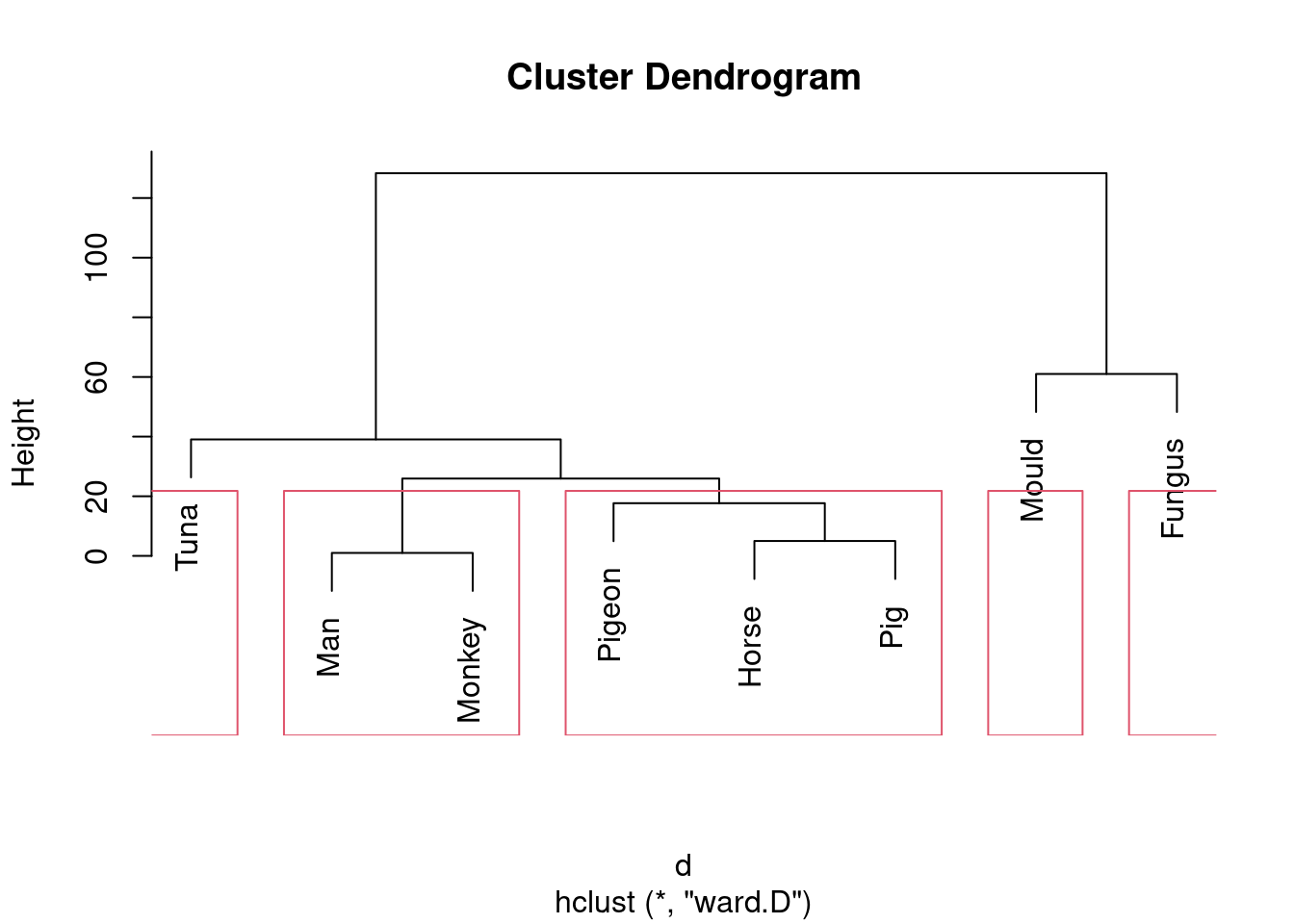

- Run a cluster analysis using Ward’s method and obtain a dendrogram.

Solution

Not much changes here in the code, but the result is noticeably different:

Don’t forget to take care with the method: it has to be

ward in lowercase (even though it’s someone’s name) followed

by a D in uppercase.

- Describe how the two dendrograms from the last two parts look different.

Solution

This is (as ever with this kind of thing) a judgement call. Your job is to come up with something reasonable. For myself, I was thinking about how single-linkage tends to produce “stringy” clusters that join single objects (species) onto already-formed clusters. Is that happening here? Apart from the first two clusters, man and monkey, horse and pig, everything that gets joined on is a single species joined on to a bigger cluster, including mould and fungus right at the end. Contrast that with the output from Ward’s method, where, for the most part, groups are formed first and then joined onto other groups. For example, in Ward’s method, mould and fungus are joined earlier, and also the man-monkey group is joined to the pigeon-horse-pig group. Tuna is an exception, but usually Ward tends to join fairly dissimilar things that are nonetheless more similar to each other than to anything else. This is like Hungarian and Finnish in the example in class: they are very dissimilar languages, but they are more similar to each other than to anything else. You might prefer to look at the specifics of what gets joined. I think the principal difference from this angle is that mould and fungus get joined together (much) earlier in Ward. Also, pigeon gets joined to horse and pig first under Ward, but after those have been joined to man and monkey under single-linkage. This is also a reasonable kind of observation.

- Looking at your clustering for Ward’s method, what seems to be a sensible number of clusters? Draw boxes around those clusters.

Solution

Pretty much any number of clusters bigger than 1 and smaller than

8 is ok here, but I would prefer to see something between 2 and

5, because a number of clusters of that sort offers (i) some

insight (“these things are like these other things”) and (ii) a

number of clusters of that sort is supported by the data.

To draw those clusters, you need rect.hclust, and

before that you’ll need to plot the cluster object again. For 2

clusters, that would look like this:

This one is “mould and fungus vs. everything else”. (My red boxes seem to have gone off the side, sorry.)

Or we could go to the other end of the scale:

Five is not really an insightful number of clusters with 8 species, but it seems to correspond (for me at least) with a reasonable division of these species into “kinds of living things”. That is, I am bringing some outside knowledge into my number-of-clusters division.

- List which cluster each species is in, for your preferred number of clusters (from Ward’s method).

Solution

This is cutree. For 2 clusters it would be this:

## Man Monkey Horse Pig Pigeon Tuna Mould Fungus

## 1 1 1 1 1 1 2 2For 5 it would be this:

## Man Monkey Horse Pig Pigeon Tuna Mould Fungus

## 1 1 2 2 2 3 4 5and anything in between is in between.

These ones came out sorted, so there is no need to sort them (so you don’t need the methods of the next question).

30.4 Rating beer

Thirty-two students each rated 10 brands of beer:

Anchor Steam

Bass

Beck’s

Corona

Gordon Biersch

Guinness

Heineken

Pete’s Wicked Ale

Sam Adams

Sierra Nevada

The ratings are on a scale of 1 to 9, with a higher rating being better. The data are in link. I abbreviated the beer names for the data file. I hope you can figure out which is which.

- Read in the data, and look at the first few rows.

Solution

Data values are aligned in columns, but the column headers are

not aligned with them, so read_table2:

##

## ── Column specification ──────────────────────────────────────────────────────────────────

## cols(

## student = col_character(),

## AnchorS = col_double(),

## Bass = col_double(),

## Becks = col_double(),

## Corona = col_double(),

## GordonB = col_double(),

## Guinness = col_double(),

## Heineken = col_double(),

## PetesW = col_double(),

## SamAdams = col_double(),

## SierraN = col_double()

## )32 rows (students), 11 columns (10 beers, plus a column of student IDs). All seems to be kosher. If beer can be kosher. I investigated. It can; in fact, I found a long list of kosher beers that included Anchor Steam.

- The researcher who collected the data wants to see which

beers are rated similarly to which other beers. Try to create a

distance matrix from these data and explain why it didn’t do what

you wanted. (Remember to get rid of the

studentcolumn first.)

Solution

The obvious thing is to feed these ratings into dist

(we are creating distances rather than re-formatting

things that are already distances). We need to skip the first

column, since those are student identifiers:

## 'dist' num [1:496] 9.8 8.49 6.56 8.89 8.19 ...

## - attr(*, "Size")= int 32

## - attr(*, "Diag")= logi FALSE

## - attr(*, "Upper")= logi FALSE

## - attr(*, "method")= chr "euclidean"

## - attr(*, "call")= language dist(x = .)The 496 distances are:

## [1] 496the number of ways of choosing 2 objects out of 32, when order does

not matter.

Feel free to be offended by my choice of the letter d to

denote both data frames (that I didn’t want to give a better name to)

and dissimilarities in dist objects.

You can look at the whole thing if you like, though it is rather

large. A dist object is stored internally as a long vector

(here of 496 values); it’s displayed as a nice triangle. The clue here

is the thing called Size, which indicates that we have a

\(32\times 32\) matrix of distances between the 32 students, so

that if we were to go on and do a cluster analysis based on this

d, we’d get a clustering of the students rather than

of the beers, as we want. (If you just print out d,

you’ll see that is of distances between 32 (unlabelled) objects, which

by inference must be the 32 students.)

It might be interesting to do a cluster analysis of the 32 students (it would tell you which of the students have similar taste in beer), but that’s not what we have in mind here.

- The R function

t()transposes a matrix: that is, it interchanges rows and columns. Feed the transpose of your read-in beer ratings intodist. Does this now give distances between beers?

Solution

Again, omit the first column. The pipeline code looks a bit weird:

so you should feel free to do it in a couple of steps. This way shows that you can also refer to columns by number:

Either way gets you to the same place:

## AnchorS Bass Becks Corona GordonB Guinness Heineken PetesW SamAdams

## Bass 15.19868

## Becks 16.09348 13.63818

## Corona 20.02498 17.83255 17.54993

## GordonB 13.96424 11.57584 14.42221 13.34166

## Guinness 14.93318 13.49074 16.85230 20.59126 14.76482

## Heineken 20.66398 15.09967 13.78405 14.89966 14.07125 18.54724

## PetesW 11.78983 14.00000 16.37071 17.72005 11.57584 14.28286 19.49359

## SamAdams 14.62874 11.61895 14.73092 14.93318 10.90871 15.90597 14.52584 14.45683

## SierraN 12.60952 15.09967 17.94436 16.97056 11.74734 13.34166 19.07878 13.41641 12.12436There are 10 beers with these names, so this is good.

- Try to explain briefly why I used

as.distin the class example (the languages one) butdisthere. (Think about the form of the input to each function.)

Solution

as.dist is used if you already have

dissimilarities (and you just want to format them right), but

dist is used if you have

data on variables and you want to calculate

dissimilarities.

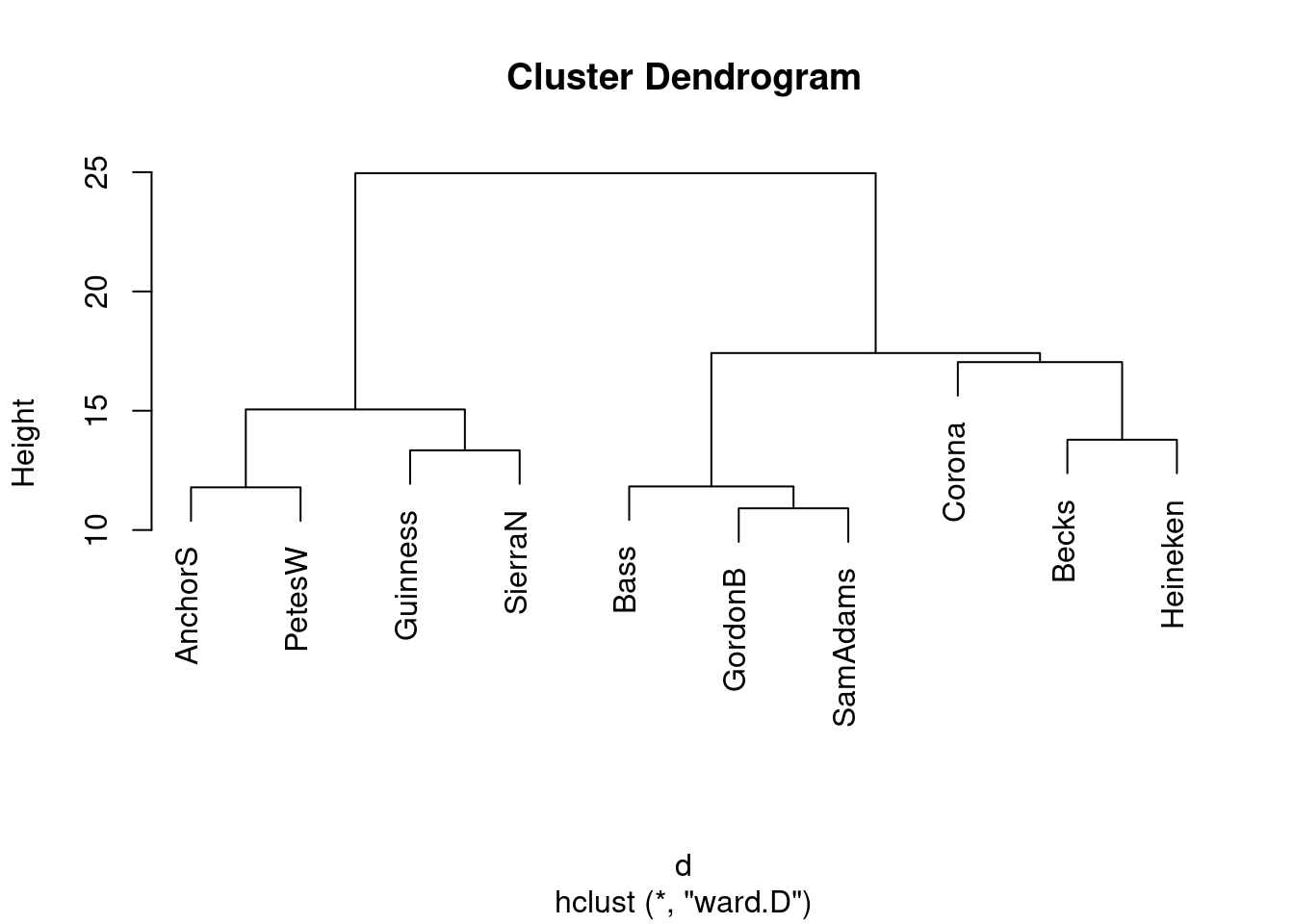

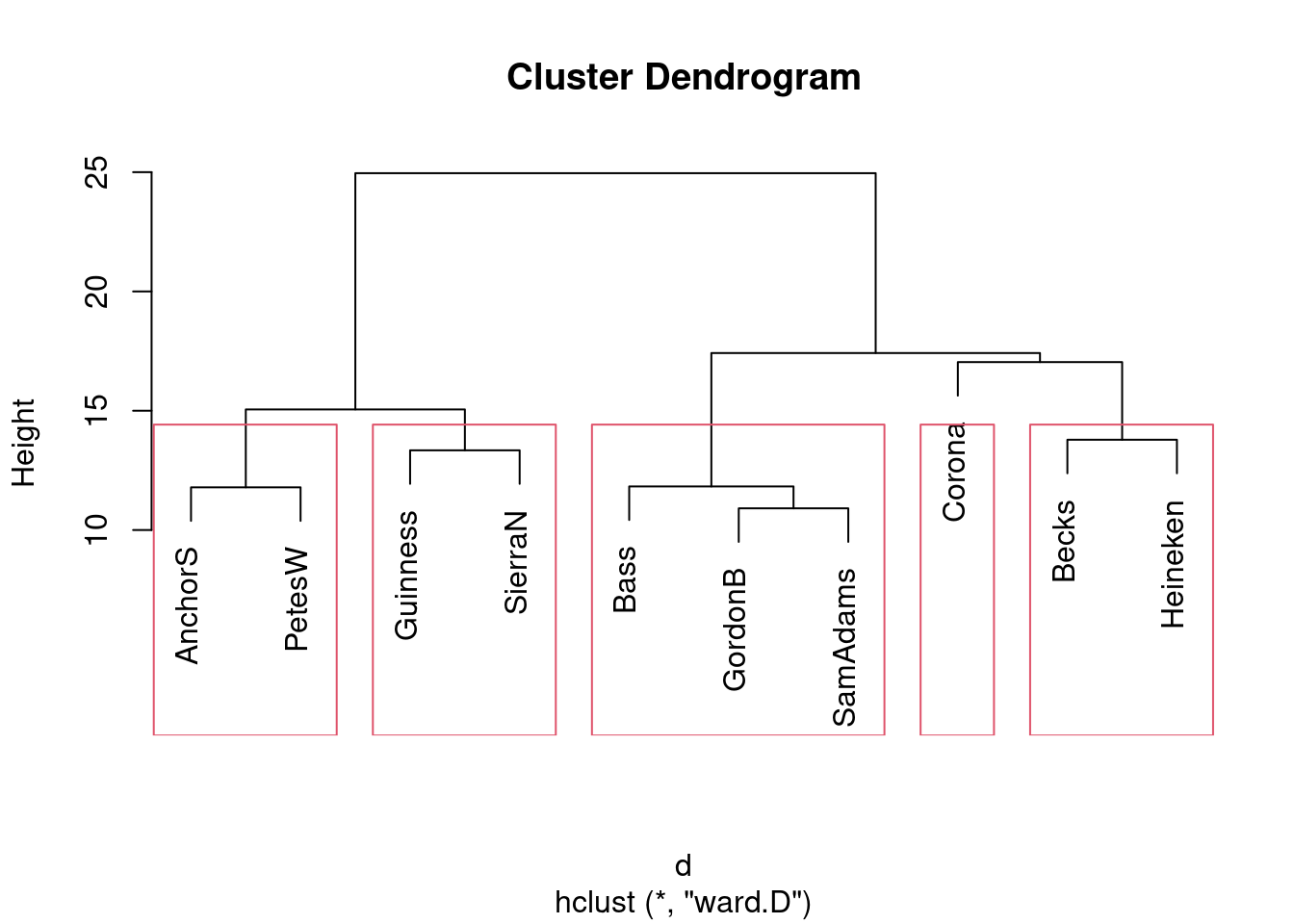

- * Obtain a clustering of the beers, using Ward’s method. Show the dendrogram.

Solution

This:

- What seems to be a sensible number of clusters? Which beers are in which cluster?

Solution

This is a judgement call. Almost anything sensible is reasonable. I personally think that two clusters is good, beers Anchor Steam, Pete’s Wicked Ale, Guinness and Sierra Nevada in the first, and Bass, Gordon Biersch, Sam Adams, Corona, Beck’s, and Heineken in the second. You could make a case for three clusters, splitting off Corona, Beck’s and Heineken into their own cluster, or even about 5 clusters as Anchor Steam, Pete’s Wicked Ale; Guinness, Sierra Nevada; Bass, Gordon Biersch, Sam Adams; Corona; Beck’s, Heineken.

The idea is to have a number of clusters sensibly smaller than the 10 observations, so that you are getting some actual insight. Having 8 clusters for 10 beers wouldn’t be very informative! (This is where you use your own knowledge about beer to help you rationalize your choice of number of clusters.)

Extra: as to why the clusters split up like this, I think the four beers on the left of my dendrogram are “dark” and the six on the right are “light” (in colour), and I would expect the students to tend to like all the beers of one type and not so much all the beers of the other type.

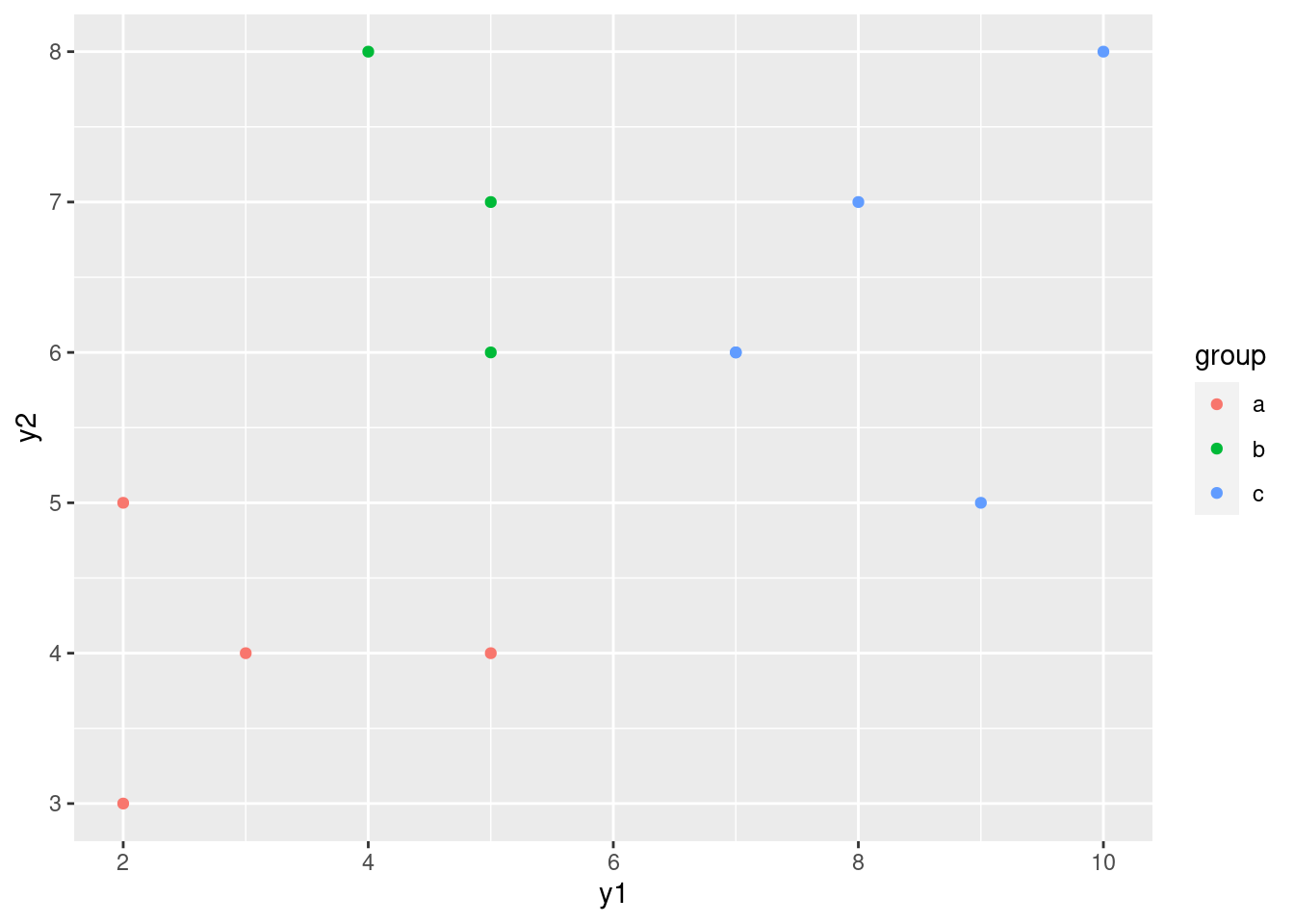

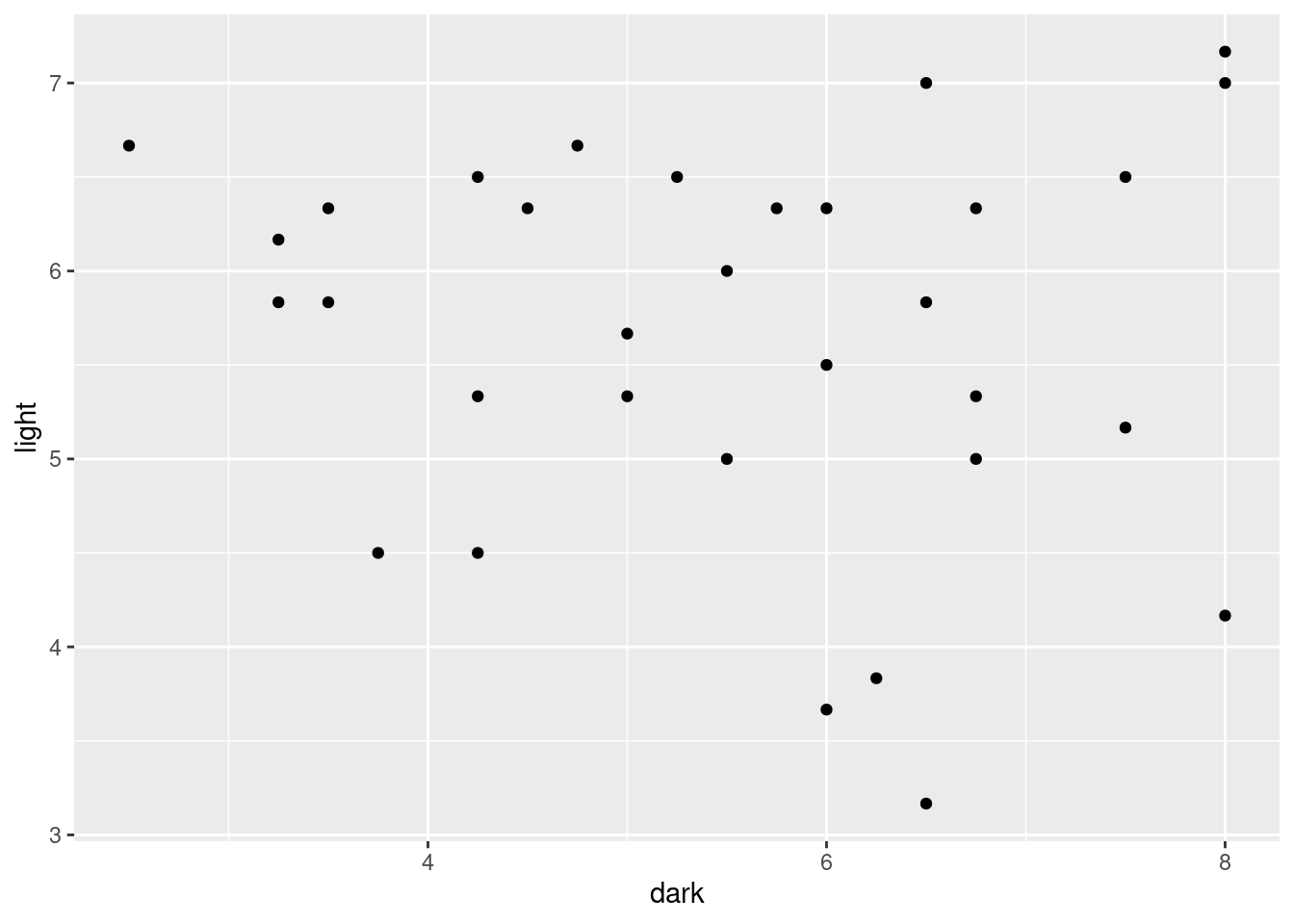

You knew I would have to investigate this, didn’t you? Let’s aim for a scatterplot of all the ratings for the dark beers, against the ones for the light beers.

Start with the data frame read in from the file:

The aim is to find the average rating for a dark beer and a light beer for each student, and then plot them against each other. Does a student who likes dark beer tend not to like light beer, and vice versa?

Let’s think about what to do first.

We need to: pivot_longer all the rating columns into one, labelled

by name of beer. Then create a variable that is dark

if we’re looking at one of the dark beers and light

otherwise. ifelse works like “if” in a spreadsheet: a

logical thing that is either true or false, followed by a value if

true and a value if false. There is a nice R command %in%

which is TRUE if the thing in the first variable is to be

found somewhere in the list of things given next (here, one of the

apparently dark beers). (Another way to do this, which will appeal to

you more if you like databases, is to create a second data frame with

two columns, the first being the beer names, and the second being

dark or light as appropriate for that beer. Then you

use a “left join” to look up beer type from beer name.)

Next, group by beer type within student. Giving two things to

group_by does it this way: the second thing within

(or “for each of”) the first.

Then calculate the mean rating within each group. This gives one column of students, one column of beer types, and one column of rating means.

Then we need to pivot_wider beer type

into two columns so that we can make a scatterplot of the mean ratings

for light and dark against

each other.

Finally, we make a scatterplot.

You’ll see the final version of this that worked, but rest assured that there were many intervening versions of this that didn’t!

I urge you to examine the chain one line at a time and see what each line does. That was how I debugged it.

Off we go:

beer %>%

pivot_longer(-student, names_to="name", values_to="rating") %>%

mutate(beer.type = ifelse(name %in%

c("AnchorS", "PetesW", "Guinness", "SierraN"), "dark", "light")) %>%

group_by(student, beer.type) %>%

summarize(mean.rat = mean(rating)) %>%

pivot_wider(names_from=beer.type, values_from=mean.rat) %>%

ggplot(aes(x = dark, y = light)) + geom_point()

After all that work, not really. There are some students who like light beer but not dark beer (top left), there is a sort of vague straggle down to the bottom right, where some students like dark beer but not light beer, but there are definitely students at the top right, who just like beer!

The only really empty part of this plot is the bottom left, which says that these students don’t hate both kinds of beer; they like either dark beer, or light beer, or both.

The reason a ggplot fits into this “workflow” is that the

first thing you feed into ggplot is a data frame, the one

created by the chain here. Because it’s in a pipeline,

you don’t have the

first thing on ggplot, so you can concentrate on the

aes (“what to plot”) and then the “how to plot it”.

Now back to your regularly-scheduled programming.

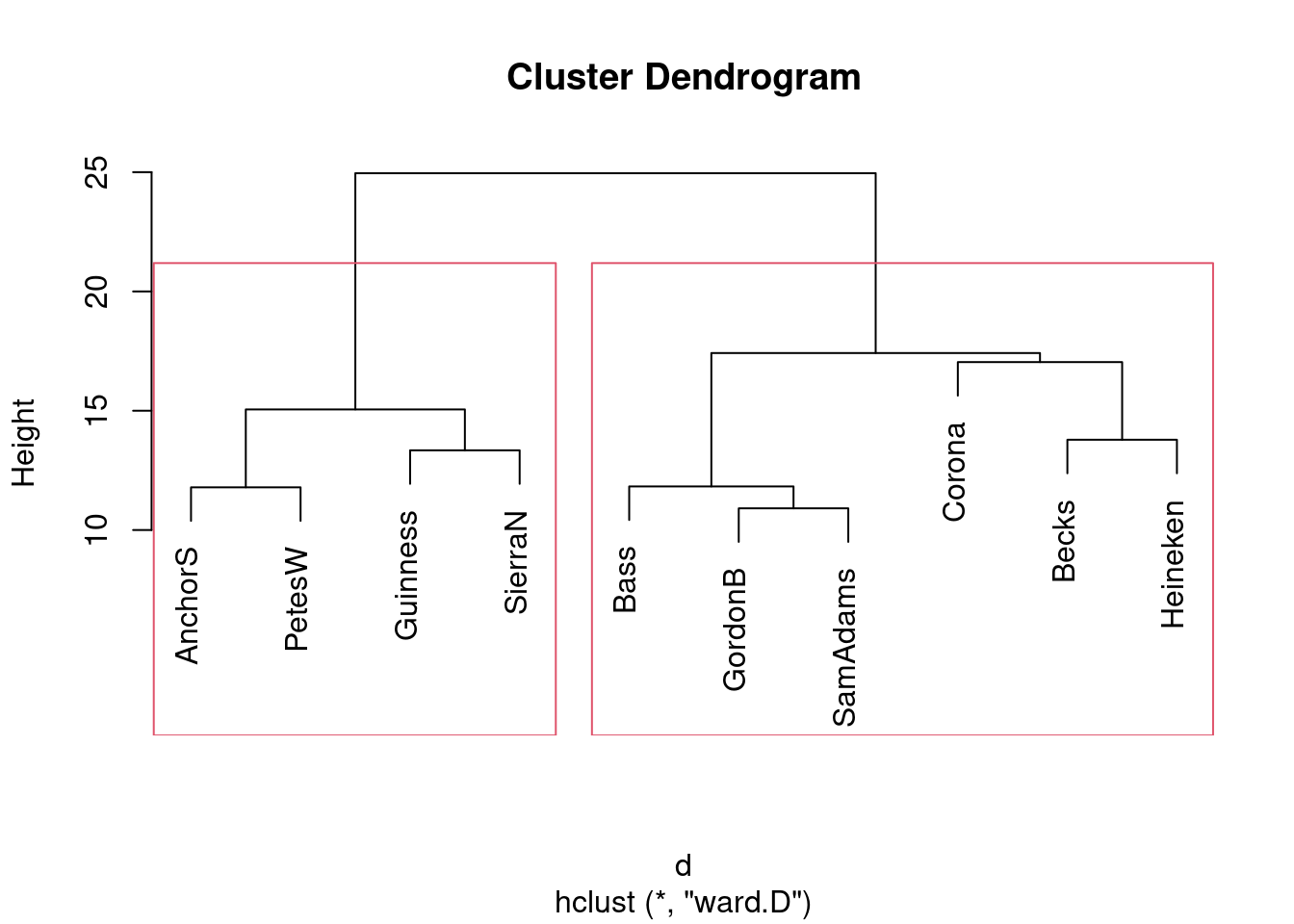

- Re-draw your dendrogram with your clusters indicated.

Solution

rect.hclust, with your chosen number of clusters:

Or if you prefer 5 clusters, like this:

Same idea with any other number of clusters. If you follow through with your preferred number of clusters from the previous part, I’m good.

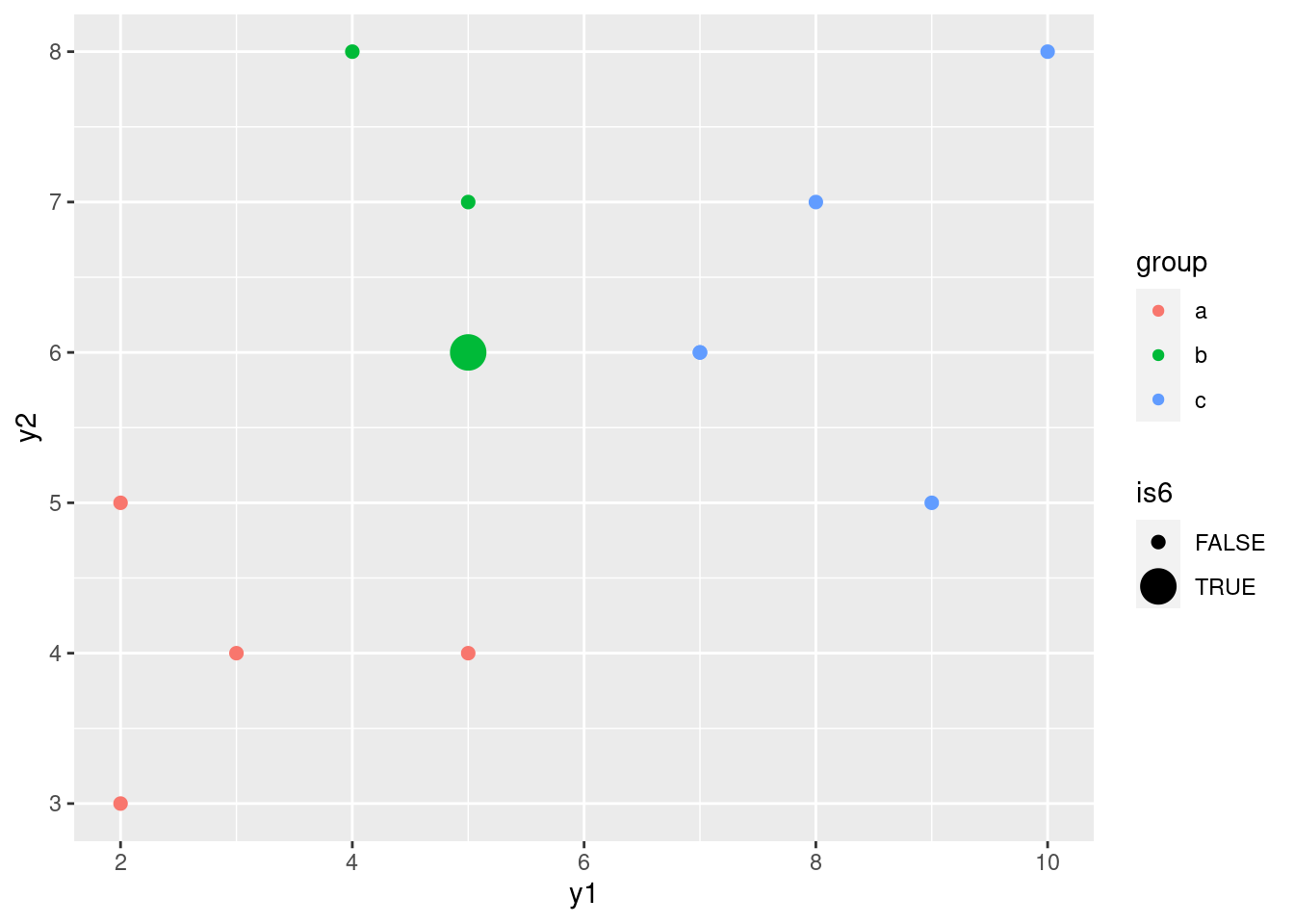

- Obtain a K-means

clustering with 2 clusters.

If you haven’t gotten to K-means clustering yet, leave this and save it for later.

Note that you will need to use the (transposed)

original data, not the distances. Use a suitably large value of

nstart. (The data are ratings all on the same scale, so there is no need forscalehere. In case you were wondering.)

Solution

I used 20 for nstart. This is the pipe way:

Not everyone (probably) will get the same answer, because of the random nature of the procedure, but the above code should be good whatever output it produces.

- How many beers are in each cluster?

Solution

On mine:

## [1] 6 4You might get the same numbers the other way around.

- Which beers are in each cluster? You can do this

simply by obtaining the cluster memberships and using

sortas in the last question, or you can do it as I did in class by obtaining the names of the things to be clustered and picking out the ones of them that are in cluster 1, 2, 3, .)

Solution

The cluster numbers of each beer are these:

## AnchorS Bass Becks Corona GordonB Guinness Heineken PetesW SamAdams SierraN

## 2 1 1 1 1 2 1 2 1 2This is what is known in the business as a “named vector”: it has values (the cluster numbers) and each value has a name attached to it (the name of a beer).

Named vectors are handily turned into a data frame with enframe:

Or, to go back the other way, deframe:

## AnchorS Bass Becks Corona GordonB Guinness Heineken PetesW SamAdams SierraN

## 2 1 1 1 1 2 1 2 1 2or, give the columns better names and arrange them by cluster:

These happen to be the same clusters as in my 2-cluster solution using Ward’s method.

30.5 Clustering the Swiss bills

This question is about the Swiss bank counterfeit bills again. This time we’re going to ignore whether each bill is counterfeit or not, and see what groups they break into. Then, at the end, we’ll see whether cluster analysis was able to pick out the counterfeit ones or not.

- Read the data in again (just like last time), and look at the first few rows. This is just the same as before.

Solution

The data file was aligned in columns, so:

##

## ── Column specification ──────────────────────────────────────────────────────────────────

## cols(

## length = col_double(),

## left = col_double(),

## right = col_double(),

## bottom = col_double(),

## top = col_double(),

## diag = col_double(),

## status = col_character()

## )- The variables in this data frame are on different

scales. Standardize them so that they all have mean 0 and standard

deviation 1. (Don’t try to standardize the

statuscolumn!)

Solution

What kind of thing do we have?

## [1] "matrix" "array"so something like this is needed to display some of it (rather than all of it):

## length left right bottom top diag

## [1,] -0.2549435 2.433346 2.8299417 -0.2890067 -1.1837648 0.4482473

## [2,] -0.7860757 -1.167507 -0.6347880 -0.9120152 -1.4328473 1.0557460

## [3,] -0.2549435 -1.167507 -0.6347880 -0.4966762 -1.3083061 1.4896737

## [4,] -0.2549435 -1.167507 -0.8822687 -1.3273542 -0.3119759 1.3161027

## [5,] 0.2761888 -1.444496 -0.6347880 0.6801176 -3.6745902 1.1425316

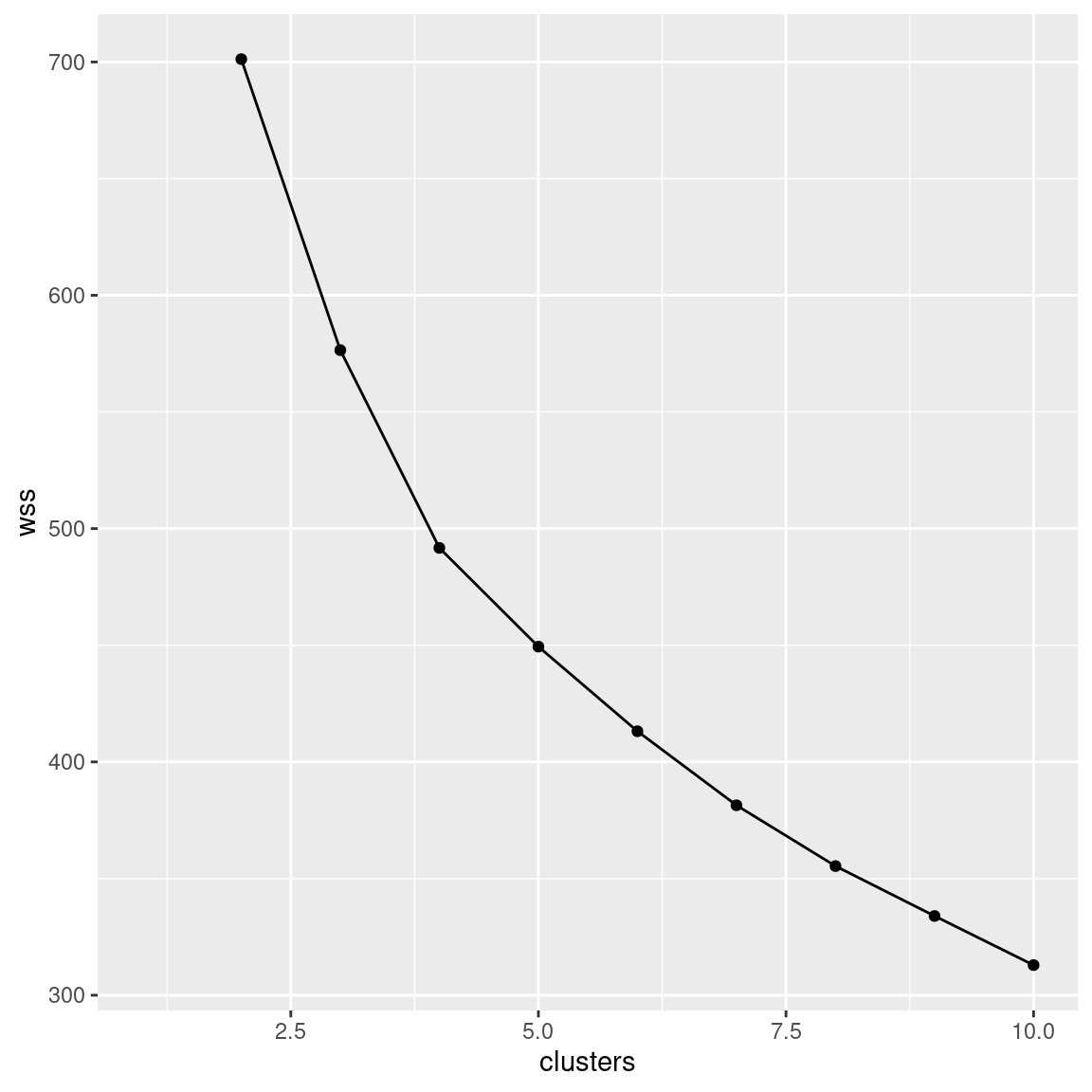

## [6,] 2.1351516 1.879368 1.3450576 -0.2890067 -0.6855997 0.7953894- We are going to make a scree plot. First, calculate the total within-cluster SS for each number of clusters from 2 to 10.

Solution

When I first made this problem (some years ago),

I thought the obvious answer was a loop, but now that I’ve been

steeped in the Tidyverse a while, I think rowwise is much

clearer, so I’ll do that first.

Start by making a tibble that has one column called clusters containing the numbers 2 through 10:

Now, for each of these numbers of clusters, calculate the total within-cluster sum of squares for it (that number of clusters). To do that, think about how you’d do it for something like three clusters:

## [1] 576.1284and then use that within your rowwise:

tibble(clusters = 2:10) %>%

rowwise() %>%

mutate(wss = kmeans(swiss.s, clusters, nstart = 20)$tot.withinss) -> wssq

wssqAnother way is to save all the output from the kmeans, in a list-column, and then extract the thing you want, thus:

tibble(clusters = 2:10) %>%

rowwise() %>%

mutate(km = list(kmeans(swiss.s, clusters, nstart = 20))) %>%

mutate(wss = km$tot.withinss) -> wssq.2

wssq.2The output from kmeans is a collection of things, not just a single number, so when you create the column km, you need to put list around the kmeans, and then you’ll create a list-column. wss, on the other hand, is a single number each time, so no list is needed, and wss is an ordinary column of numbers, labelled dbl at the top.

The most important thing in both of these is to remember the rowwise. Without it, everything will go horribly wrong! This is because kmeans expects a single number for the number of clusters, and rowwise will provide that single number (for the row you are looking at). If you forget the rowwise, the whole column clusters will get fed into kmeans all at once, and kmeans will get horribly confused.

If you insist, do it Python-style as a loop, like this:

clus <- 2:10

wss.1 <- numeric(0)

for (i in clus)

{

wss.1[i] <- kmeans(swiss.s, i, nstart = 20)$tot.withinss

}

wss.1## [1] NA 701.2054 576.1284 491.7085 449.3900 412.9139 381.3926 355.3338 338.5621

## [10] 318.1799Note that there are 10 wss values, but the first one is

missing, since we didn’t do one cluster.

R vectors start from 1, unlike C arrays or Python lists, which start from 0.

The numeric(0) says “wss has nothing in it, but if it had anything, it would be numbers”. Or, you can initialize

wss to however long it’s going to be (here 10), which is

actually more efficient (R doesn’t have to keep making it

“a bit longer”). If you initialize it to length 10, the 10 values will have

NAs in them when you start.

It doesn’t matter what nstart is: Ideally, big enough to have a decent

chance of finding the best clustering, but small enough that it

doesn’t take too long to run.

Whichever way you create your total within-cluster sums of squares, you can use it to make a scree plot (next part).

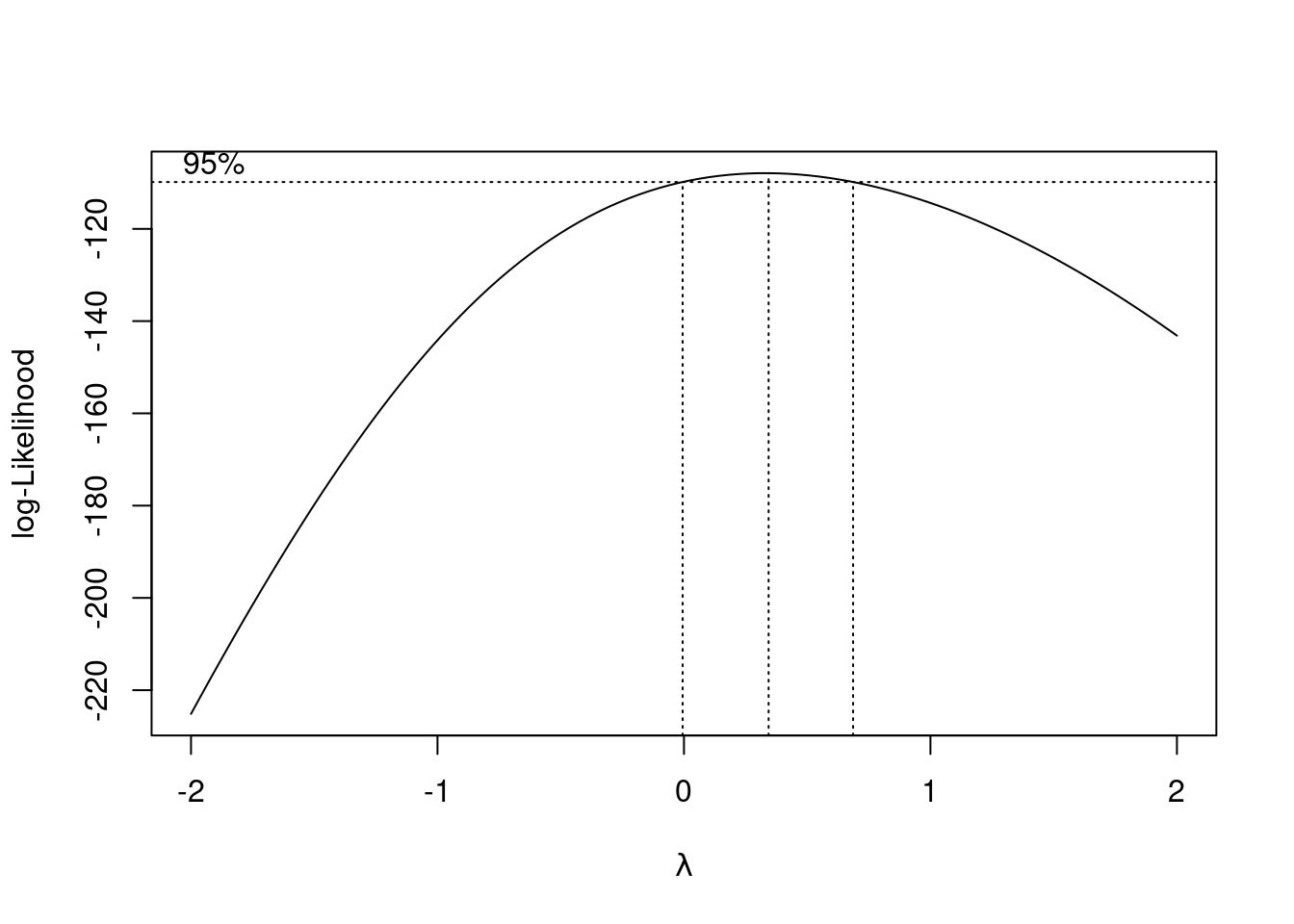

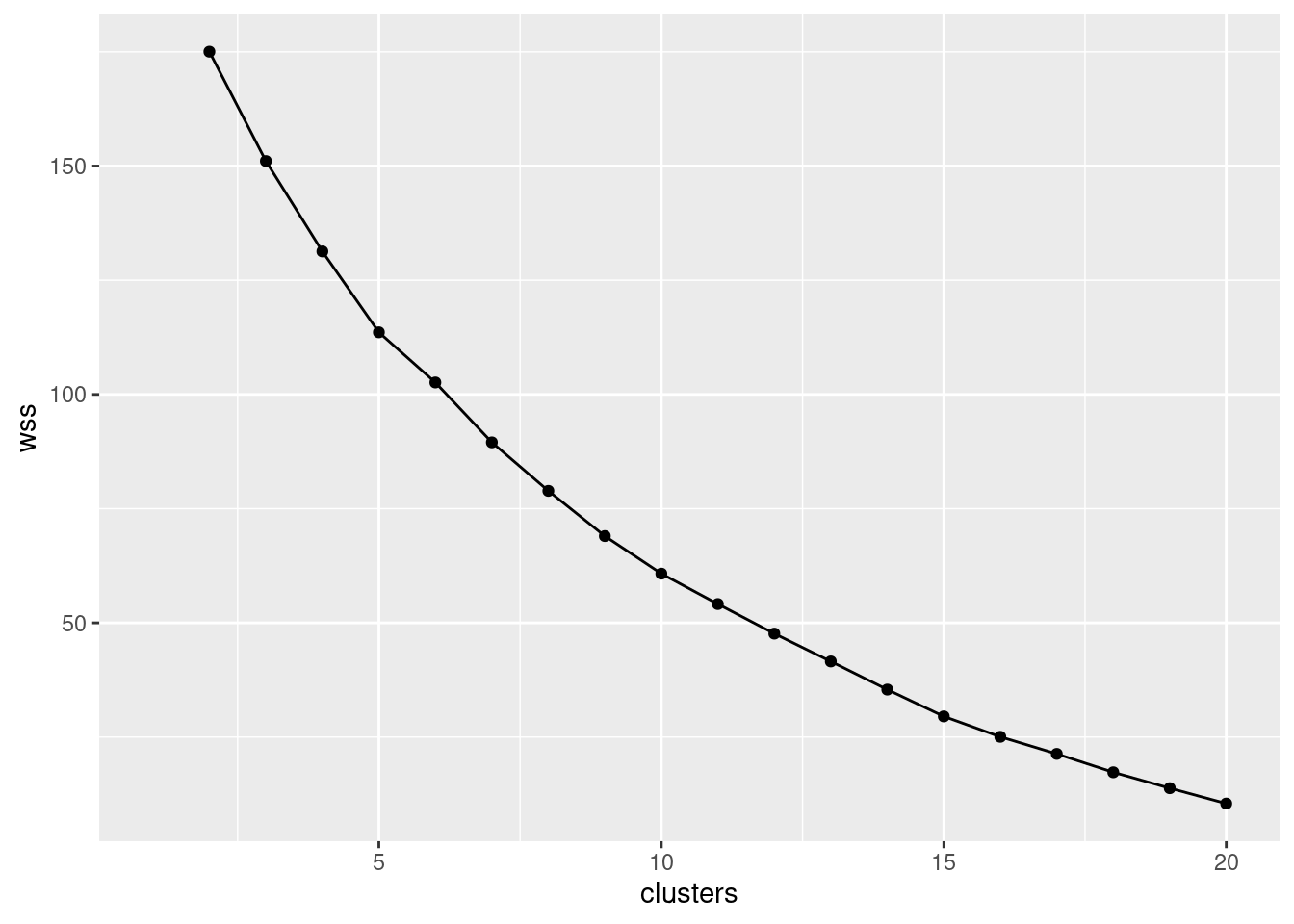

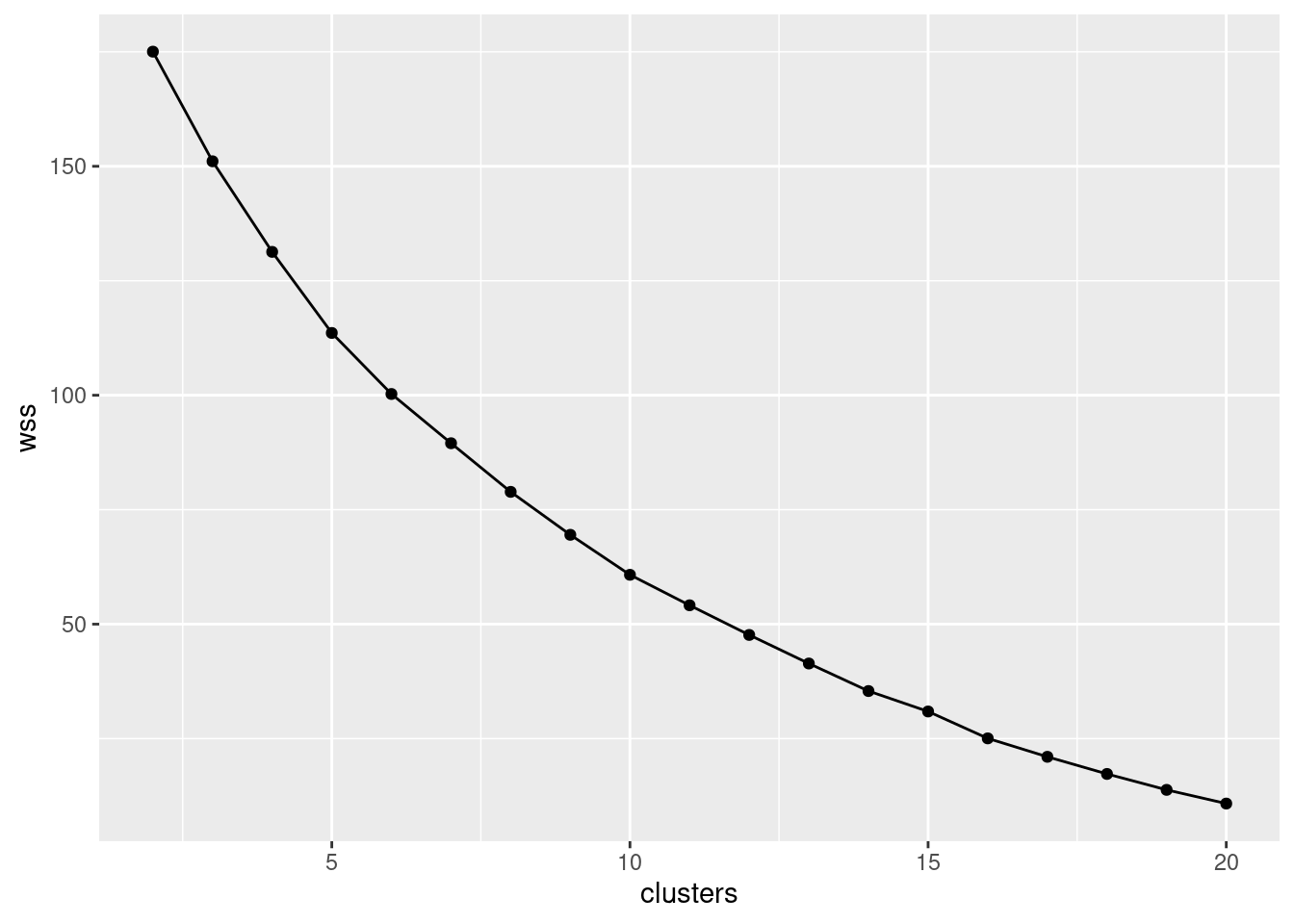

- * Make a scree plot (creating a data frame first if you need). How many clusters do you think you should use?

Solution

The easiest is to use the output from the rowwise,

which I called wssq, this already being a dataframe:

If you did it the loop way, you’ll have to make a data frame

first, which you can then pipe into ggplot:

tibble(clusters = 1:10, wss = wss.1) %>%

ggplot(aes(x = clusters, y = wss)) + geom_point() + geom_line()## Warning: Removed 1 rows containing missing values (geom_point).## Warning: Removed 1 row(s) containing missing values (geom_path).

If you started at 2 clusters, your wss will start at 2

clusters also, and you’ll need to be careful to have something like

clusters=2:10 (not 1:10) in the definition of your

data frame.

Interpretation: I see a small elbow at 4 clusters, so that’s how many I think we should use. Any place you can reasonably see an elbow is good.

The warning is about the missing within-cluster total sum of squares for one cluster, since the loop way didn’t supply a total within-cluster sum of squares for one cluster.

- Run K-means with the number of clusters that you found in (here). How many bills are in each cluster?

Solution

I’m going to start by setting the random number seed (so that my results don’t change every time I run this). You don’t need to do that, though you might want to in something like R Markdown code (for example, in an R Notebook):

Now, down to business:

## [1] 50 32 68 50This many. Note that my clusters 1 and 4 (and also 2 and 3) add up to 100 bills. There were 100 genuine and 100 counterfeit bills in the original data set. I don’t know why “7”. I just felt like it. Extra: you might remember that back before I actually ran K-means on each of the numbers of clusters from 2 to 10. How can we extract that output? Something like this. Here’s where the output was:

Now we need to pull out the 4th row and the km column. We need the output as an actual thing, not a data frame, so:

Is that the right thing?

## [[1]]

## K-means clustering with 4 clusters of sizes 32, 50, 50, 68

##

## Cluster means:

## length left right bottom top diag

## 1 1.1475776 0.6848546 0.2855308 -0.5788787 -0.40538184 0.7764051

## 2 -0.5683115 0.2617543 0.3254371 1.3197396 0.04670298 -0.8483286

## 3 0.1062264 0.6993965 0.8352473 0.1927865 1.18251937 -0.9316427

## 4 -0.2002681 -1.0290130 -0.9878119 -0.8397381 -0.71307204 0.9434354

##

## Clustering vector:

## [1] 1 4 4 4 4 1 4 4 4 1 1 4 1 4 4 4 4 4 4 4 4 1 1 1 4 1 1 1 1 4 1 4 4 1 1 1 1 4 1 4 4 4

## [43] 4 1 4 4 4 4 4 4 4 1 4 1 4 4 1 4 1 4 4 4 4 4 4 1 4 4 4 3 4 4 4 4 4 4 4 4 1 4 4 4 4 1

## [85] 1 4 4 4 1 4 4 1 4 4 4 1 1 4 4 4 3 3 3 3 2 2 3 3 3 3 3 3 3 2 2 3 2 2 2 3 3 2 3 3 2 3

## [127] 3 3 3 3 2 2 3 3 2 2 2 3 2 2 3 2 2 3 2 2 2 3 2 3 2 2 2 2 2 2 2 2 2 3 3 2 2 2 2 3 1 3

## [169] 3 2 3 2 2 2 2 2 2 3 3 3 2 3 3 3 2 2 3 2 3 2 3 3 2 3 2 3 3 3 3 2

##

## Within cluster sum of squares by cluster:

## [1] 92.37757 95.51948 137.68573 166.12573

## (between_SS / total_SS = 58.8 %)

##

## Available components:

##

## [1] "cluster" "centers" "totss" "withinss" "tot.withinss"

## [6] "betweenss" "size" "iter" "ifault"Looks like it. But I should check:

## NULLAh. swiss.7a is actually a list, as evidenced by the [[1]] at the top of the output, so I get things from it thus:

## length left right bottom top diag

## 1 1.1475776 0.6848546 0.2855308 -0.5788787 -0.40538184 0.7764051

## 2 -0.5683115 0.2617543 0.3254371 1.3197396 0.04670298 -0.8483286

## 3 0.1062264 0.6993965 0.8352473 0.1927865 1.18251937 -0.9316427

## 4 -0.2002681 -1.0290130 -0.9878119 -0.8397381 -0.71307204 0.9434354This would be because it came from a list-column; using pull removed the data-frameness from swiss.7a, but not its listness.

- Make a table showing cluster membership against actual status (counterfeit or genuine). Are the counterfeit bills mostly in certain clusters?

Solution

table. swiss.7$cluster shows the actual

cluster numbers:

##

## 1 2 3 4

## counterfeit 50 1 0 49

## genuine 0 31 68 1Or, if you prefer,

or even

tibble(obs = swiss$status, pred = swiss.7$cluster) %>%

count(obs, pred) %>%

pivot_wider(names_from = obs, values_from = n, values_fill = 0)In my case (yours might be different), 99 of the 100 counterfeit bills are in clusters 1 and 4, and 99 of the 100 genuine bills are in clusters 2 and 3. This is again where set.seed is valuable: write this text once and it never needs to change. So the clustering has done a very good job of distinguishing the genuine bills from the counterfeit ones. (You could imagine, if you were an employee at the bank, saying that a bill in cluster 1 or 4 is counterfeit, and being right 99% of the time.) This is kind of a by-product of the clustering, though: we weren’t trying to distinguish counterfeit bills (that would have been the discriminant analysis that we did before); we were just trying to divide them into groups of different ones, and part of what made them different was that some of them were genuine bills and some of them were counterfeit.

30.6 Grouping similar cars

The file link contains information on seven variables for 32 different cars. The variables are:

Carname: name of the car (duh!)mpg: gas consumption in miles per US gallon (higher means the car uses less gas)disp: engine displacement (total volume of cylinders in engine): higher is more powerfulhp: engine horsepower (higher means a more powerful engine)drat: rear axle ratio (higher means more powerful but worse gas mileage)wt: car weight in US tonsqsec: time needed for the car to cover a quarter mile (lower means faster)

- Read in the data and display its structure. Do you have the right number of cars and variables?

my_url <- "https://raw.githubusercontent.com/nxskok/datafiles/master/car-cluster.csv"

cars <- read_csv(my_url)##

## ── Column specification ──────────────────────────────────────────────────────────────────

## cols(

## Carname = col_character(),

## mpg = col_double(),

## disp = col_double(),

## hp = col_double(),

## drat = col_double(),

## wt = col_double(),

## qsec = col_double()

## )Check, both on number of cars and number of variables.

- The variables are all measured on different scales. Use

scaleto produce a matrix of standardized (\(z\)-score) values for the columns of your data that are numbers.

Solution

All but the first column needs to be scaled, so:

This is a matrix, as we’ve seen before.

Another way is like this:

I would prefer to have a look at my result, so that I can see that it has sane things in it:

## mpg disp hp drat wt qsec

## [1,] 0.1508848 -0.57061982 -0.5350928 0.5675137 -0.610399567 -0.7771651

## [2,] 0.1508848 -0.57061982 -0.5350928 0.5675137 -0.349785269 -0.4637808

## [3,] 0.4495434 -0.99018209 -0.7830405 0.4739996 -0.917004624 0.4260068

## [4,] 0.2172534 0.22009369 -0.5350928 -0.9661175 -0.002299538 0.8904872

## [5,] -0.2307345 1.04308123 0.4129422 -0.8351978 0.227654255 -0.4637808

## [6,] -0.3302874 -0.04616698 -0.6080186 -1.5646078 0.248094592 1.3269868or,

## mpg disp hp drat wt qsec

## [1,] 0.1508848 -0.57061982 -0.5350928 0.5675137 -0.610399567 -0.7771651

## [2,] 0.1508848 -0.57061982 -0.5350928 0.5675137 -0.349785269 -0.4637808

## [3,] 0.4495434 -0.99018209 -0.7830405 0.4739996 -0.917004624 0.4260068

## [4,] 0.2172534 0.22009369 -0.5350928 -0.9661175 -0.002299538 0.8904872

## [5,] -0.2307345 1.04308123 0.4129422 -0.8351978 0.227654255 -0.4637808

## [6,] -0.3302874 -0.04616698 -0.6080186 -1.5646078 0.248094592 1.3269868These look right. Or, perhaps better, this:

## mpg disp hp drat

## Min. :-1.6079 Min. :-1.2879 Min. :-1.3810 Min. :-1.5646

## 1st Qu.:-0.7741 1st Qu.:-0.8867 1st Qu.:-0.7320 1st Qu.:-0.9661

## Median :-0.1478 Median :-0.2777 Median :-0.3455 Median : 0.1841

## Mean : 0.0000 Mean : 0.0000 Mean : 0.0000 Mean : 0.0000

## 3rd Qu.: 0.4495 3rd Qu.: 0.7688 3rd Qu.: 0.4859 3rd Qu.: 0.6049

## Max. : 2.2913 Max. : 1.9468 Max. : 2.7466 Max. : 2.4939

## wt qsec

## Min. :-1.7418 Min. :-1.87401

## 1st Qu.:-0.6500 1st Qu.:-0.53513

## Median : 0.1101 Median :-0.07765

## Mean : 0.0000 Mean : 0.00000

## 3rd Qu.: 0.4014 3rd Qu.: 0.58830

## Max. : 2.2553 Max. : 2.82675The mean is exactly zero, for all variables, which is as it should be. Also, the standardized values look about as they should; even the extreme ones don’t go beyond \(\pm 3\).

This doesn’t show the standard deviation of each variable, though, which should be exactly 1 (since that’s what “standardizing” means). To get that, this:

The idea here is “take the matrix cars.s, turn it into a data frame, and for each column, calculate the SD of it”.

The scale function can take a data frame, as here, but always produces a matrix. That’s why we had to turn it back into a data frame.

As you realize now, the same idea will get the mean of each column too:

and we see that the means are all zero, to about 15 decimals, anyway.

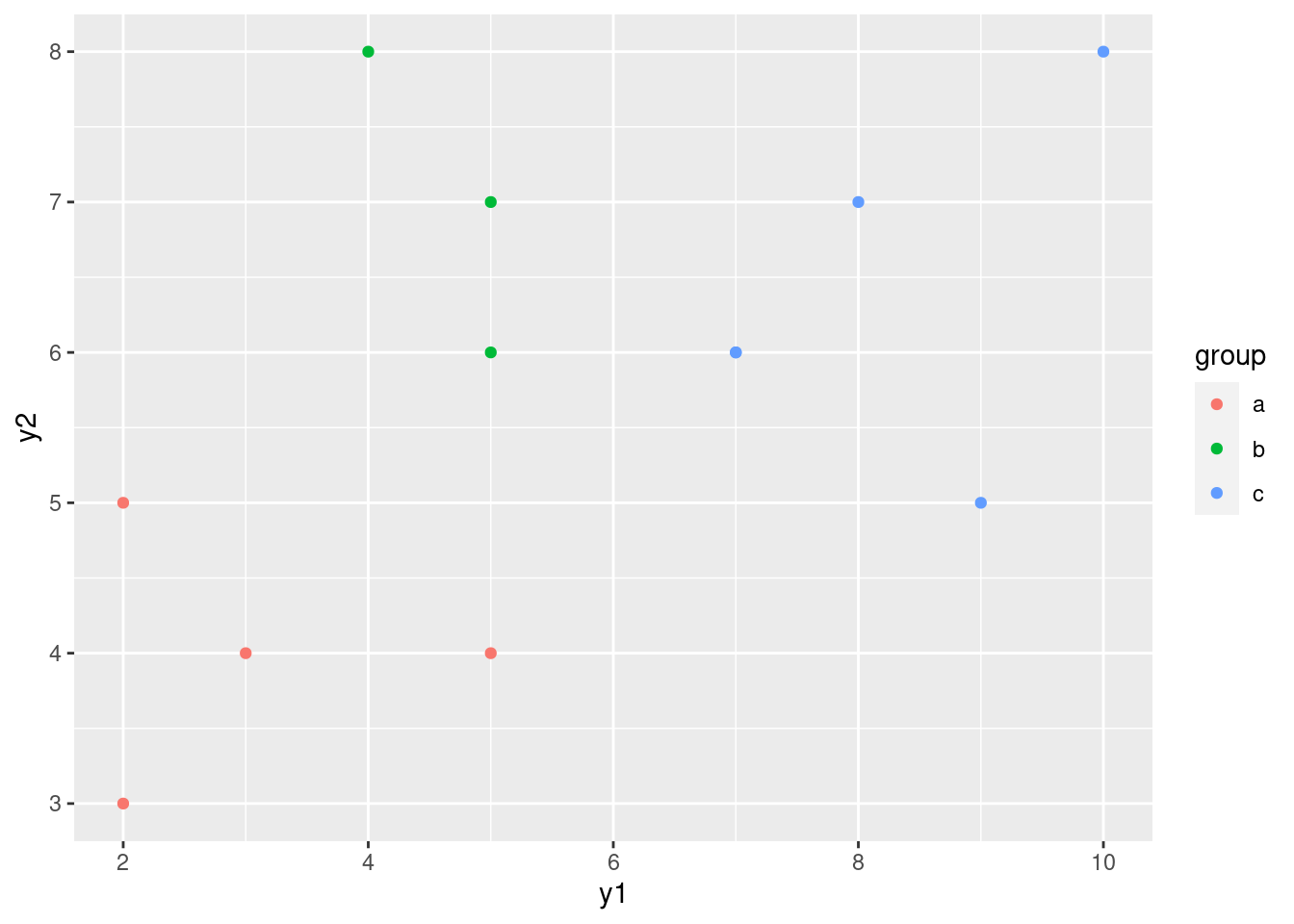

- Run a K-means cluster analysis for these data, obtaining 3 clusters, and display the results. Take whatever action you need to obtain the best (random) result from a number of runs.

Solution

The hint at the end says “use nstart”, so something like this:

## K-means clustering with 3 clusters of sizes 12, 6, 14

##

## Cluster means:

## mpg disp hp drat wt qsec

## 1 0.1384407 -0.5707543 -0.5448163 0.1887816 -0.2454544 0.5491221

## 2 1.6552394 -1.1624447 -1.0382807 1.2252295 -1.3738462 0.3075550

## 3 -0.8280518 0.9874085 0.9119628 -0.6869112 0.7991807 -0.6024854

##

## Clustering vector:

## [1] 1 1 1 1 3 1 3 1 1 1 1 3 3 3 3 3 3 2 2 2 1 3 3 3 3 2 2 2 3 1 3 1

##

## Within cluster sum of squares by cluster:

## [1] 24.95528 7.76019 33.37849

## (between_SS / total_SS = 64.5 %)

##

## Available components:

##

## [1] "cluster" "centers" "totss" "withinss" "tot.withinss"

## [6] "betweenss" "size" "iter" "ifault"You don’t need the set.seed, but if you run again, you’ll get

a different answer. With the nstart, you’ll probably get the

same clustering every time you run, but the clusters might have

different numbers, so that when you talk about “cluster 1” and then

re-run, what you were talking about might have moved to cluster 3, say.

If you are using R Markdown, for this reason, having a

set.seed before anything involving random number generation

is a smart move.

I forgot this, and then realized that I would have to rewrite a whole paragraph. In case you think I remember everything the first time.

- Display the car names together with which cluster they are in. If you display them all at once, sort by cluster so that it’s easier to see which clusters contain which cars. (You may have to make a data frame first.)

Solution

As below. The car names are in the Carname column of the

original cars data frame, and the cluster numbers are in

the cluster part of the output from kmeans. You’ll

need to take some action to display everything (there are only 32

cars, so it’s perfectly all right to display all of them):

Or start from the original data frame as read in from the file and grab only what you want:

This time we want to keep the car names and throw away everything else.

- I have no idea whether 3 is a sensible number of clusters. To find out, we will draw a scree plot (in a moment). Write a function that accepts the number of clusters and the (scaled) data, and returns the total within-cluster sum of squares.

Solution

I failed to guess (in conversation with students, back when this was a question to be handed in) what you might do. There are two equally good ways to tackle this part and the next:

Write a function to calculate the total within-cluster sum of squares (in this part) and somehow use it in the next part, eg. via

rowwise, to get the total within-cluster sum of squares for each number of clusters.Skip the function-writing part and go directly to a loop in the next part.

I’m good with either approach: as long as you obtain, somehow, the total within-cluster sum of squares for each number of clusters, and use them for making a scree plot, I think you should get the points for this part and the next. I’ll talk about the function way here and the loop way in the next part.

The function way is just like the one in the previous question:

The data and number of clusters can have any names, as long as you use whatever input names you chose within the function.

I should probably check that this works, at least on 3 clusters. Before we had

## [1] 66.09396and the function gives

## [1] 66.09396Check.

I need to make sure that I used my scaled cars data, but I

don’t need to say anything about nstart, since that defaults

to the perfectly suitable 20.

- Calculate the total within-group sum of squares for each number of clusters from 2 to 10, using the function you just wrote.

Solution

The loop way. I like to define my possible numbers of clusters into a vector first:

## [1] NA 87.29448 66.09396 50.94273 38.22004 29.28816 24.23138 20.76061 17.97491

## [10] 15.19850Now that I look at this again, it occurs to me that there is no great need to write a function to do this: you can just do what you need to do within the loop, like this:

w <- numeric(0)

nclus <- 2:10

for (i in nclus) {

w[i] <- kmeans(cars.s, i, nstart = 20)$tot.withinss

}

w## [1] NA 87.29448 66.09396 50.94273 38.22004 29.28816 24.23138 20.76061 17.33653

## [10] 15.19850You ought to have an nstart somewhere to make sure that

kmeans gets run a number of times and the best result taken.

If you initialize your w with numeric(10) rather

than numeric(0), it apparently gets filled with zeroes rather

than NA values. This means, later, when you come to plot your

w-values, the within-cluster total sum of squares will appear

to be zero, a legitimate value, for one cluster, even though it is

definitely not. (Or, I suppose, you could start your loop at 1

cluster, and get a legitimate, though very big, value for it.)

In both of the above cases, the curly brackets are optional because

there is only one line within the loop.

I am m accustomed to using the curly brackets all the time, partly because my single-line loops have a habit of expanding to more than one line as I embellish what they do, and partly because I’m used to the programming language Perl where the curly brackets are obligatory even with only one line. Curly brackets in Perl serve the same purpose as indentation serves in Python: figuring out what is inside a loop or an if and what is outside.

What is actually happening here is an implicit

loop-within-a-loop. There is a loop over i that goes over all

clusters, and then there is a loop over another variable, j

say, that loops over the nstart runs that we’re doing for

i clusters, where we find the tot.withinss for

i clusters on the jth run, and if it’s the best one

so far for i clusters, we save it. Or, at least,

kmeans saves it.

Or, using rowwise, which I like better:

Note that w starts at 1, but wwx starts at 2. For

this way, you have to define a function first to calculate the

total within-cluster sum of squares for a given number of clusters. If

you must, you can do the calculation in the mutate rather than writing a function,

but I find that very confusing to read, so I’d rather define the

function first, and then use it later. (The principle is to keep the mutate simple, and put the complexity in the function where it belongs.)

As I say, if you must:

tibble(clusters = 2:10) %>%

rowwise() %>%

mutate(wss = kmeans(cars.s,

clusters,

nstart = 20)$tot.withinss) -> wwx

wwxThe upshot of all of this is that if you had obtained a total within-cluster sum of squares for each number of clusters, somehow, and it’s correct, you should have gotten some credit When this was a question to hand in, which it is not any more. for this part and the last part. This is a common principle of mine, and works on exams as well as assignments; it goes back to the idea of “get the job done” that you first saw in C32.

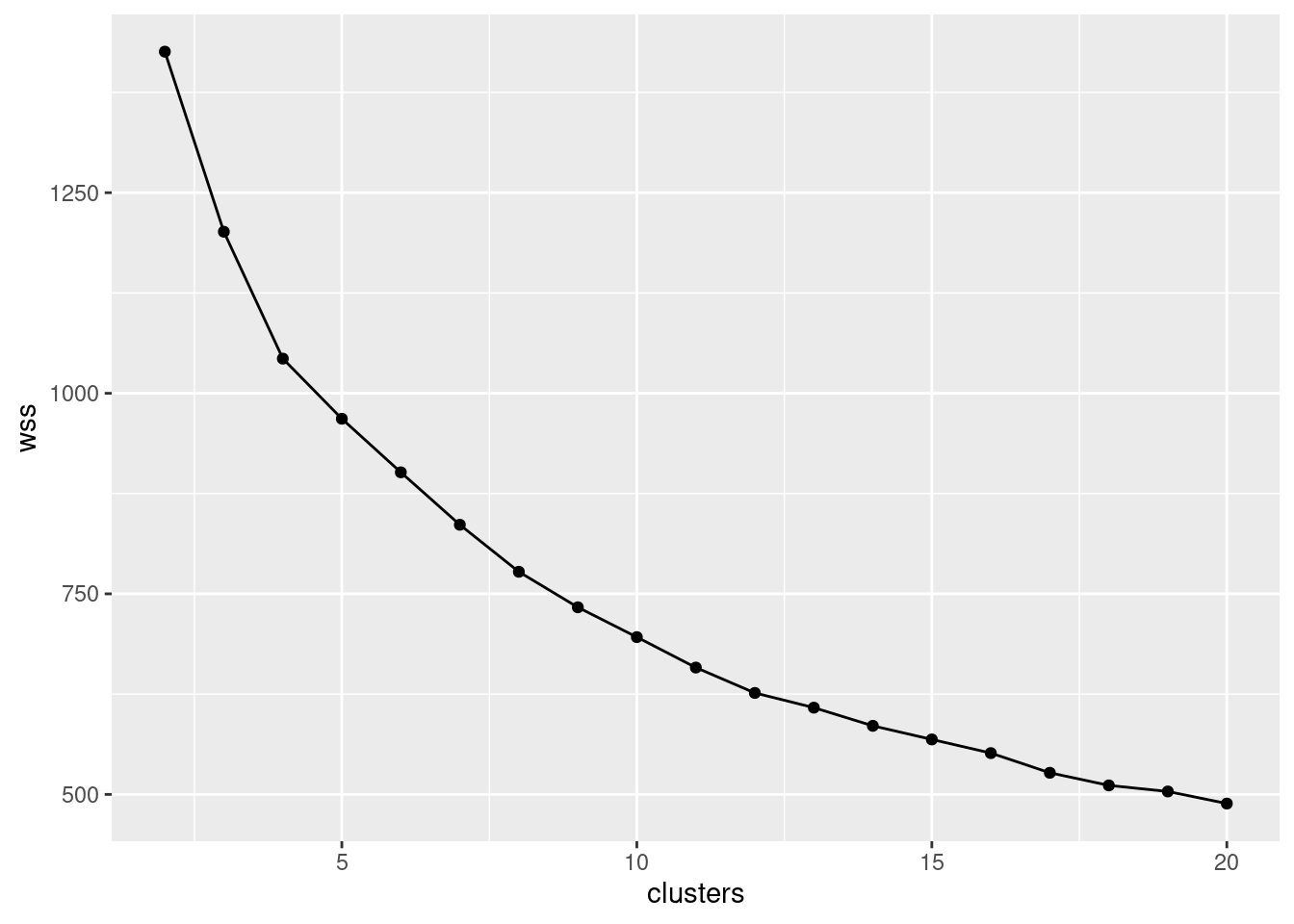

- Make a scree plot, using the total within-cluster sums of squares values that you calculated in the previous part.

Solution

If you did this the loop way, it’s tempting to leap into this:

## Error in data.frame(clusters = nclus, wss = w): arguments imply differing number of rows: 9, 10and then wonder why it doesn’t work. The problem is that w

has 10 things in it, including an NA at the front (as a

placeholder for 1 cluster):

## [1] NA 87.29448 66.09396 50.94273 38.22004 29.28816 24.23138 20.76061 17.33653

## [10] 15.19850## [1] 2 3 4 5 6 7 8 9 10while nclus only has 9. So do something like this instead:

tibble(clusters = 1:10, wss = w) %>%

ggplot(aes(x = clusters, y = wss)) + geom_point() + geom_line()## Warning: Removed 1 rows containing missing values (geom_point).## Warning: Removed 1 row(s) containing missing values (geom_path).

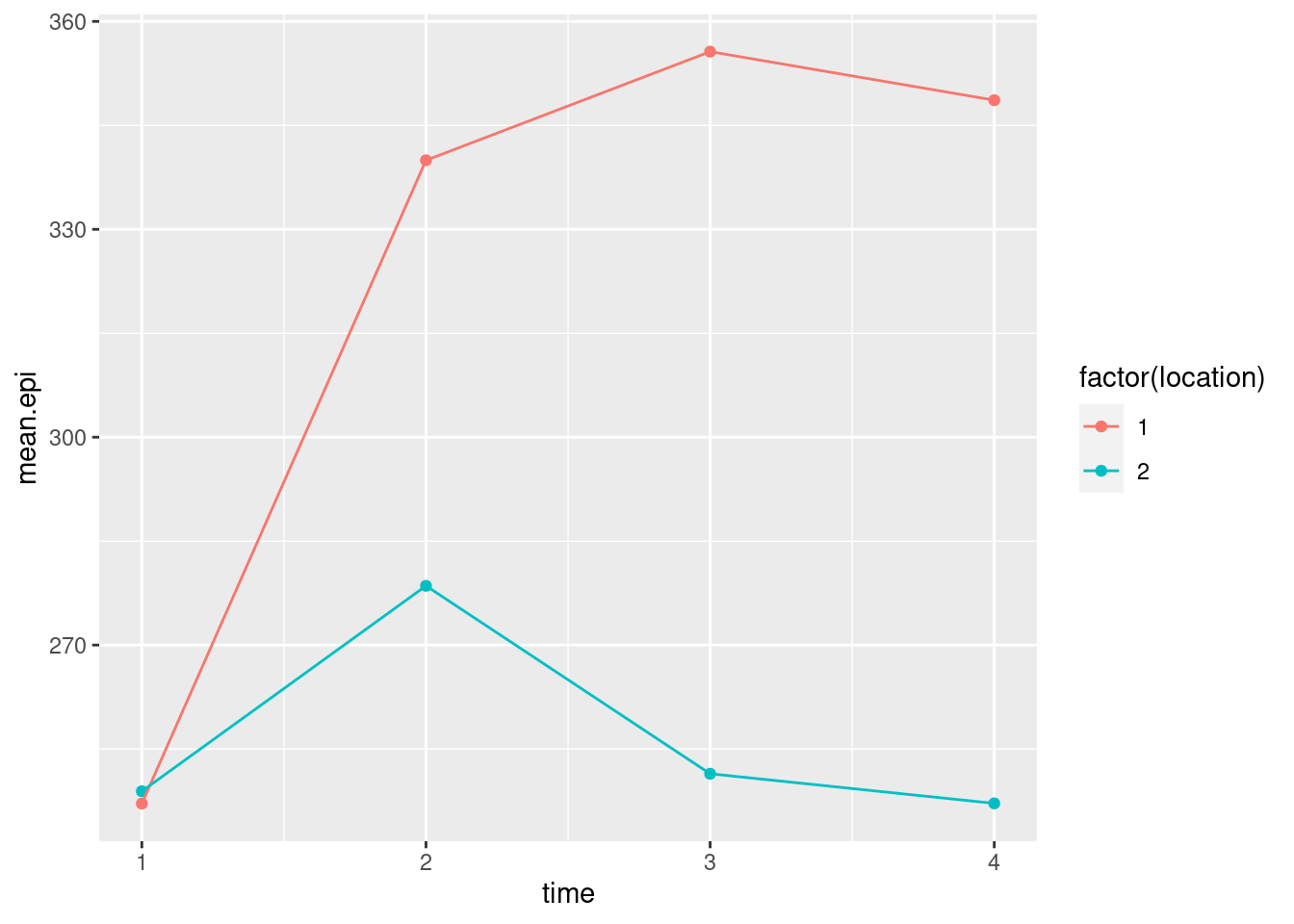

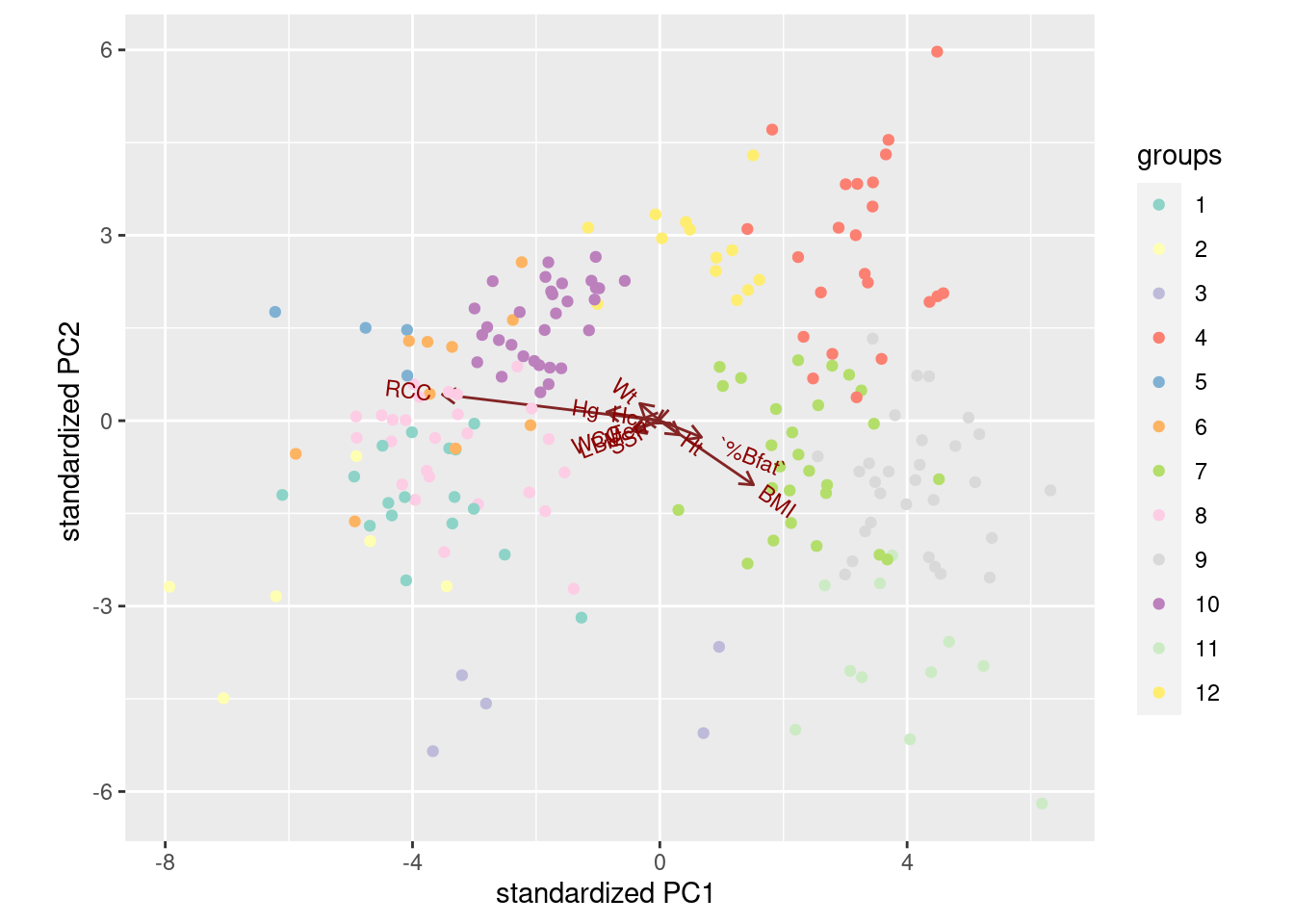

This gives a warning because there is no 1-cluster w-value,