Chapter 34 Discriminant analysis

Packages for this chapter:

library(ggbiplot)

library(MASS)

library(tidyverse)

library(car)(Note: ggbiplot loads plyr, which overlaps a lot with dplyr

(filter, select etc.). We want the dplyr stuff elsewhere, so we

load ggbiplot first, and the things in plyr get hidden, as shown

in the Conflicts. This, despite appearances, is what we want.)

34.1 Telling whether a banknote is real or counterfeit

* A Swiss bank collected a number of known counterfeit (fake) bills over time, and sampled a number of known genuine bills of the same denomination. Is it possible to tell, from measurements taken from a bill, whether it is genuine or not? We will explore that issue here. The variables measured were:

length

right-hand width

left-hand width

top margin

bottom margin

diagonal

Read in the data from link, and check that you have 200 rows and 7 columns altogether.

Run a multivariate analysis of variance. What do you conclude? Is it worth running a discriminant analysis? (This is the same procedure as with basic MANOVAs before.)

Run a discriminant analysis. Display the output.

How many linear discriminants did you get? Is that making sense? Explain briefly.

* Using your output from the discriminant analysis, describe how each of the linear discriminants that you got is related to your original variables. (This can, maybe even should, be done crudely: “does each variable feature in each linear discriminant: yes or no?”.)

What values of your variable(s) would make

LD1large and positive?* Find the means of each variable for each group (genuine and counterfeit bills). You can get this from your fitted linear discriminant object.

Plot your linear discriminant(s), however you like. Bear in mind that there is only one linear discriminant.

What kind of score on

LD1do genuine bills typically have? What kind of score do counterfeit bills typically have? What characteristics of a bill, therefore, would you look at to determine if a bill is genuine or counterfeit?

34.2 Urine and obesity: what makes a difference?

A study was made of the characteristics of urine of young

men. The men were classified into four groups based on their degree of

obesity. (The groups are labelled a, b, c, d.) Four variables

were measured, x (which you can ignore), pigment creatinine,

chloride and chlorine. The data are in

link as a

.csv file. There are 45 men altogether.

Yes, you may have seen this one before. What you found was something like this, probably also with the Box M test (which has a P-value that is small, but not small enough to be a concern):

my_url <- "http://ritsokiguess.site/datafiles/urine.csv"

urine <- read_csv(my_url)## Rows: 45 Columns: 5

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: ","

## chr (1): obesity

## dbl (4): x, creatinine, chloride, chlorine

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.response <- with(urine, cbind(creatinine, chlorine, chloride))

urine.1 <- manova(response ~ obesity, data = urine)

summary(urine.1)## Df Pillai approx F num Df den Df Pr(>F)

## obesity 3 0.43144 2.2956 9 123 0.02034 *

## Residuals 41

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Our aim is to understand why this result was significant.

Read in the data again (copy the code from above) and obtain a discriminant analysis.

How many linear discriminants were you expecting? Explain briefly.

Why do you think we should pay attention to the first two linear discriminants but not the third? Explain briefly.

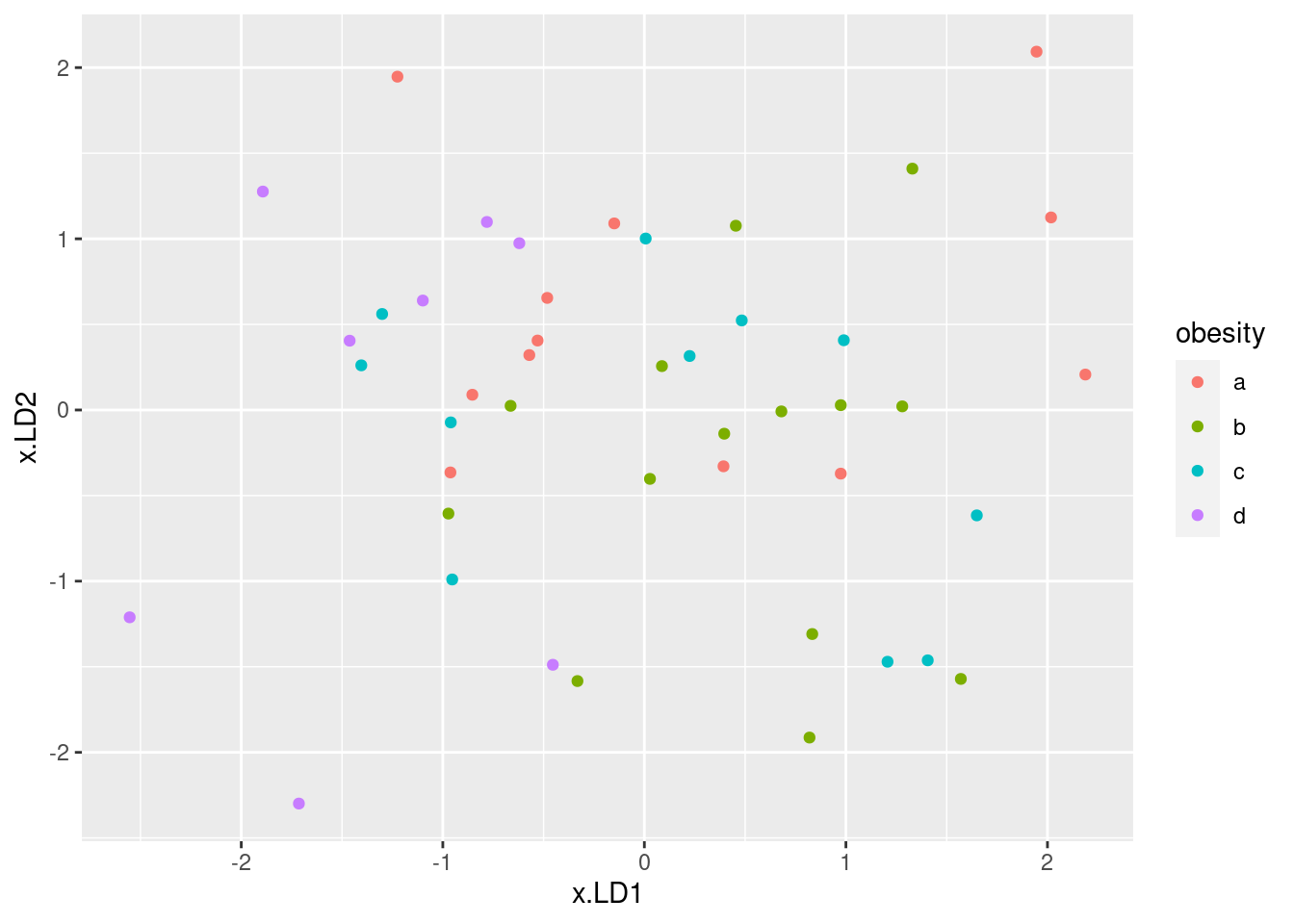

Plot the first two linear discriminant scores (against each other), with each obesity group being a different colour.

* Looking at your plot, discuss how (if at all) the discriminants separate the obesity groups. (Where does each obesity group fall on the plot?)

* Obtain a table showing observed and predicted obesity groups. Comment on the accuracy of the predictions.

Do your conclusions from (here) and (here) appear to be consistent?

34.3 Understanding a MANOVA

One use of discriminant analysis is to

understand the results of a MANOVA. This question is a followup to a

previous MANOVA that we did, the one with two variables y1

and y2 and three groups a through c. The

data were in link.

Read the data in again and run the MANOVA that you did before.

Run a discriminant analysis “predicting” group from the two response variables. Display the output.

* In the output from the discriminant analysis, why are there exactly two linear discriminants

LD1andLD2?* From the output, how would you say that the first linear discriminant

LD1compares in importance to the second oneLD2: much more important, more important, equally important, less important, much less important? Explain briefly.Obtain a plot of the discriminant scores.

Describe briefly how

LD1and/orLD2separate the groups. Does your picture confirm the relative importance ofLD1andLD2that you found back in part (here)? Explain briefly.What makes group

ahave a low score onLD1? There are two steps that you need to make: consider the means of groupaon variablesy1andy2and how they compare to the other groups, and consider howy1andy2play into the score onLD1.Obtain predictions for the group memberships of each observation, and make a table of the actual group memberships against the predicted ones. How many of the observations were wrongly classified?

34.4 What distinguishes people who do different jobs?

24436 people work at a

certain company.

They each have one of three jobs: customer service, mechanic,

dispatcher. In the data set, these are labelled 1, 2 and 3

respectively. In addition, they each are rated on scales called

outdoor, social and conservative. Do people

with different jobs tend to have different scores on these scales, or,

to put it another way, if you knew a person’s scores on

outdoor, social and conservative, could you

say something about what kind of job they were likely to hold? The

data are in link.

Read in the data and display some of it.

Note the types of each of the variables, and create any new variables that you need to.

Run a multivariate analysis of variance to convince yourself that there are some differences in scale scores among the jobs.

Run a discriminant analysis and display the output.

Which is the more important,

LD1orLD2? How much more important? Justify your answer briefly.Describe what values for an individual on the scales will make each of

LD1andLD2high.The first group of employees, customer service, have the highest mean on

socialand the lowest mean on both of the other two scales. Would you expect the customer service employees to score high or low onLD1? What aboutLD2?Plot your discriminant scores (which you will have to obtain first), and see if you were right about the customer service employees in terms of

LD1andLD2. The job names are rather long, and there are a lot of individuals, so it is probably best to plot the scores as coloured circles with a legend saying which colour goes with which job (rather than labelling each individual with the job they have).* Obtain predicted job allocations for each individual (based on their scores on the three scales), and tabulate the true jobs against the predicted jobs. How would you describe the quality of the classification? Is that in line with what the plot would suggest?

Consider an employee with these scores: 20 on

outdoor, 17 onsocialand 8 onconservativeWhat job do you think they do, and how certain are you about that? Usepredict, first making a data frame out of the values to predict for.Since I am not making you hand this one in, I’m going to keep going. Re-run the analysis to incorporate cross-validation, and make a table of the predicted group memberships. Is it much different from the previous one you had? Why would that be?

34.5 Observing children with ADHD

A number of children with ADHD were observed by their mother

or their father (only one parent observed each child). Each parent was

asked to rate occurrences of behaviours of four different types,

labelled q1 through q4 in the data set. Also

recorded was the identity of the parent doing the observation for each

child: 1 is father, 2 is mother.

Can we tell (without looking at the parent column) which

parent is doing the observation? Research suggests that rating the

degree of impairment in different categories depends on who is doing

the rating: for example, mothers may feel that a child has difficulty

sitting still, while fathers, who might do more observing of a child

at play, might think of such a child as simply being “active” or

“just being a kid”. The data are in

link.

Read in the data and confirm that you have four ratings and a column labelling the parent who made each observation.

Run a suitable discriminant analysis and display the output.

Which behaviour item or items seem to be most helpful at distinguishing the parent making the observations? Explain briefly.

Obtain the predictions from the

lda, and make a suitable plot of the discriminant scores, bearing in mind that you only have oneLD. Do you think there will be any misclassifications? Explain briefly.Obtain the predicted group memberships and make a table of actual vs. predicted. Were there any misclassifications? Explain briefly.

Re-run the discriminant analysis using cross-validation, and again obtain a table of actual and predicted parents. Is the pattern of misclassification different from before? Hints: (i) Bear in mind that there is no

predictstep this time, because the cross-validation output includes predictions; (ii) use a different name for the predictions this time because we are going to do a comparison in a moment.Display the original data (that you read in from the data file) side by side with two sets of posterior probabilities: the ones that you obtained with

predictbefore, and the ones from the cross-validated analysis. Comment briefly on whether the two sets of posterior probabilities are similar. Hints: (i) usedata.framerather thancbind, for reasons that I explain elsewhere; (ii) round the posterior probabilities to 3 decimals before you display them. There are only 29 rows, so look at them all. I am going to add theLD1scores to my output and sort by that, but you don’t need to. (This is for something I am going to add later.)Row 17 of your (original) data frame above, row 5 of the output in the previous part, is the mother that was misclassified as a father. Why is it that the cross-validated posterior probabilities are 1 and 0, while the previous posterior probabilities are a bit less than 1 and a bit more than 0?

Find the parents where the cross-validated posterior probability of being a father is “non-trivial”: that is, not close to zero and not close to 1. (You will have to make a judgement about what “close to zero or 1” means for you.) What do these parents have in common, all of them or most of them?

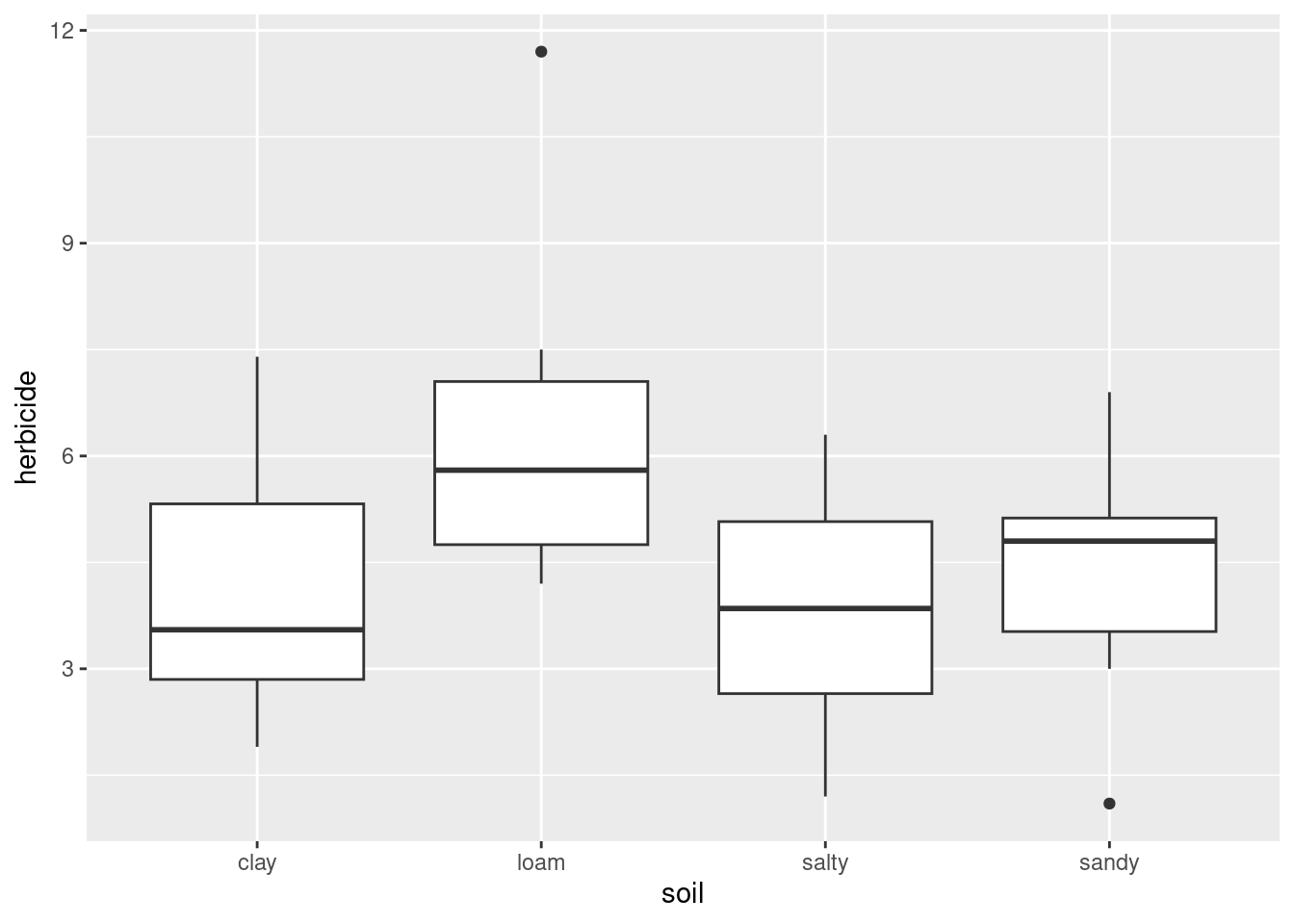

34.6 Growing corn

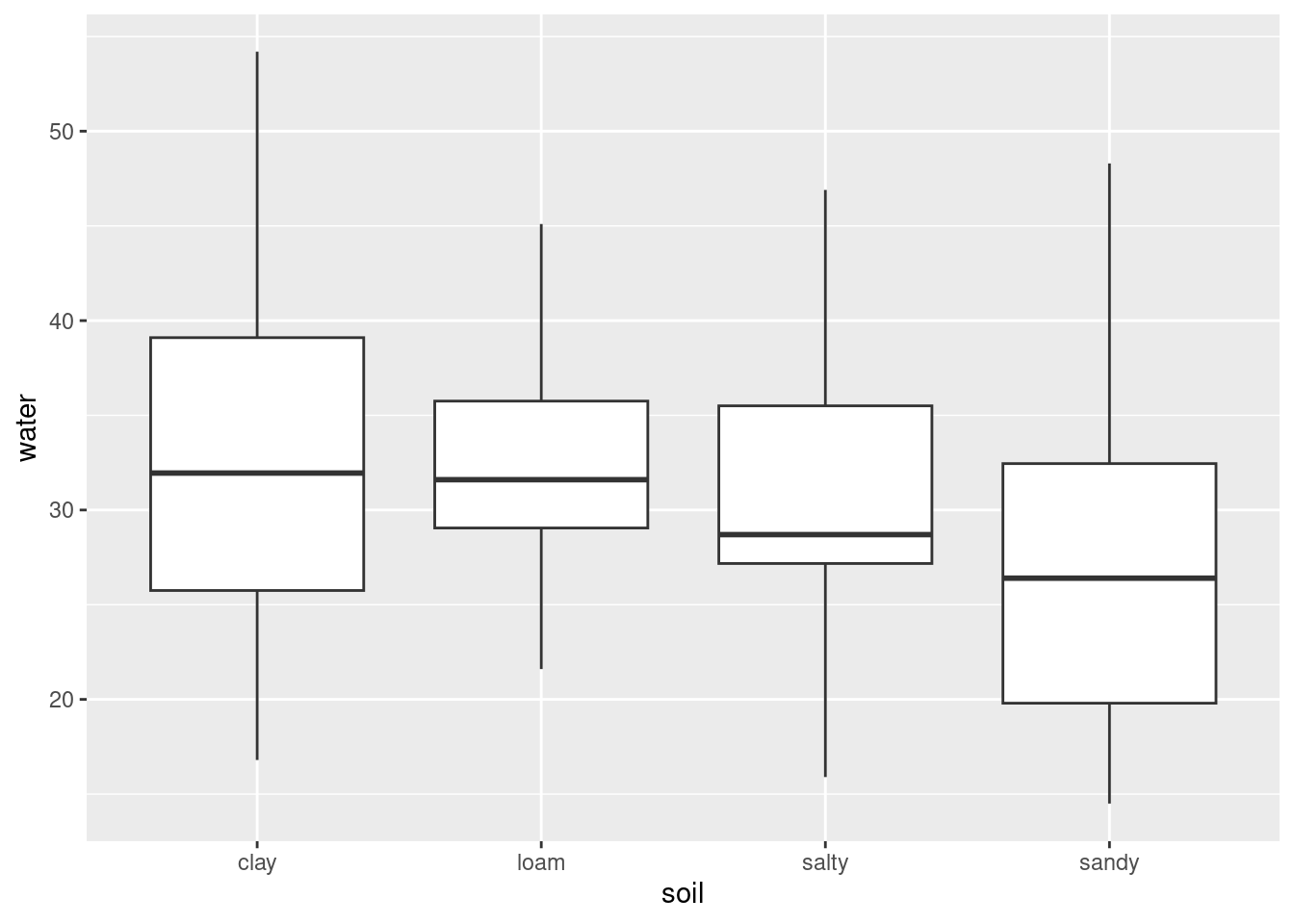

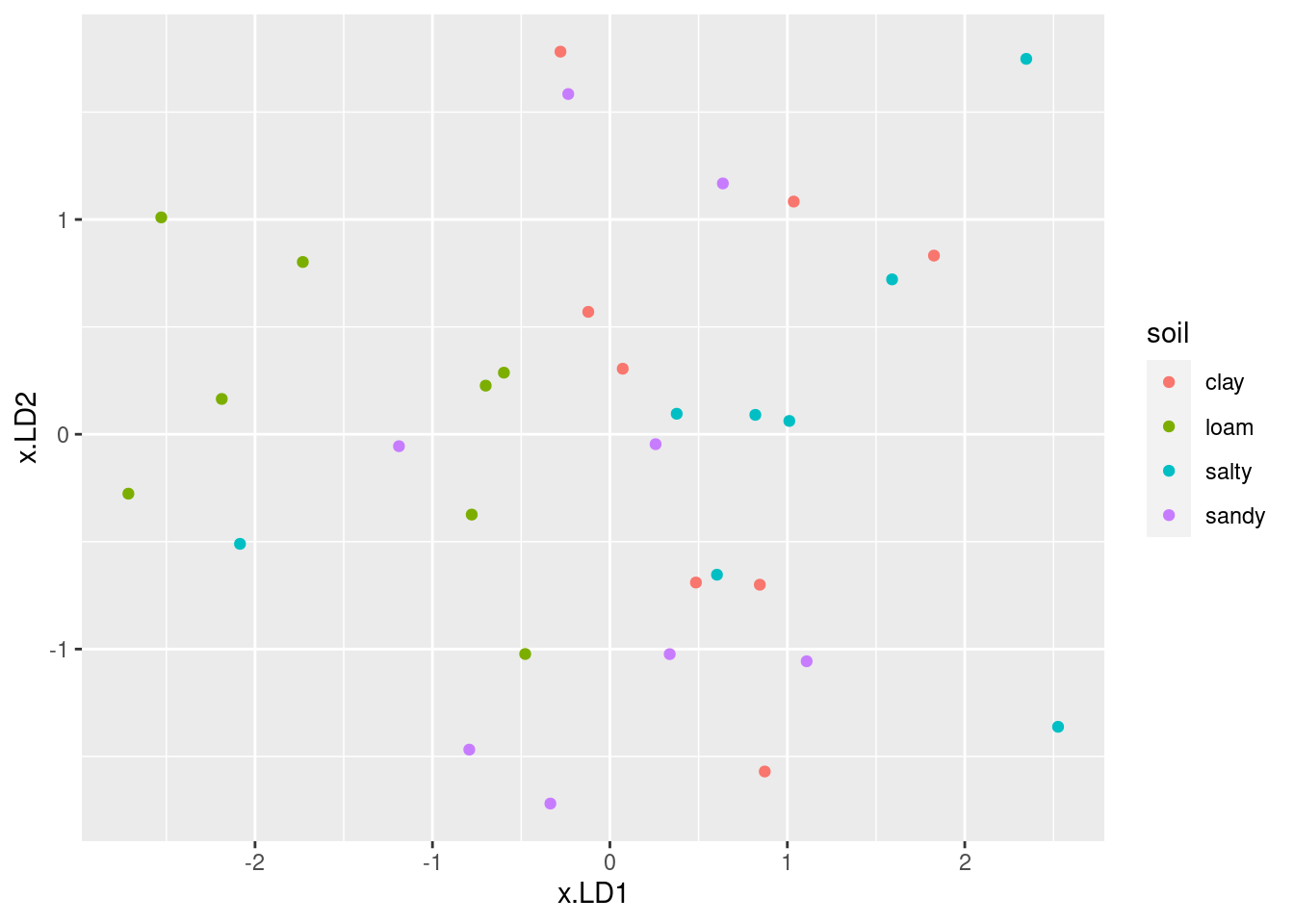

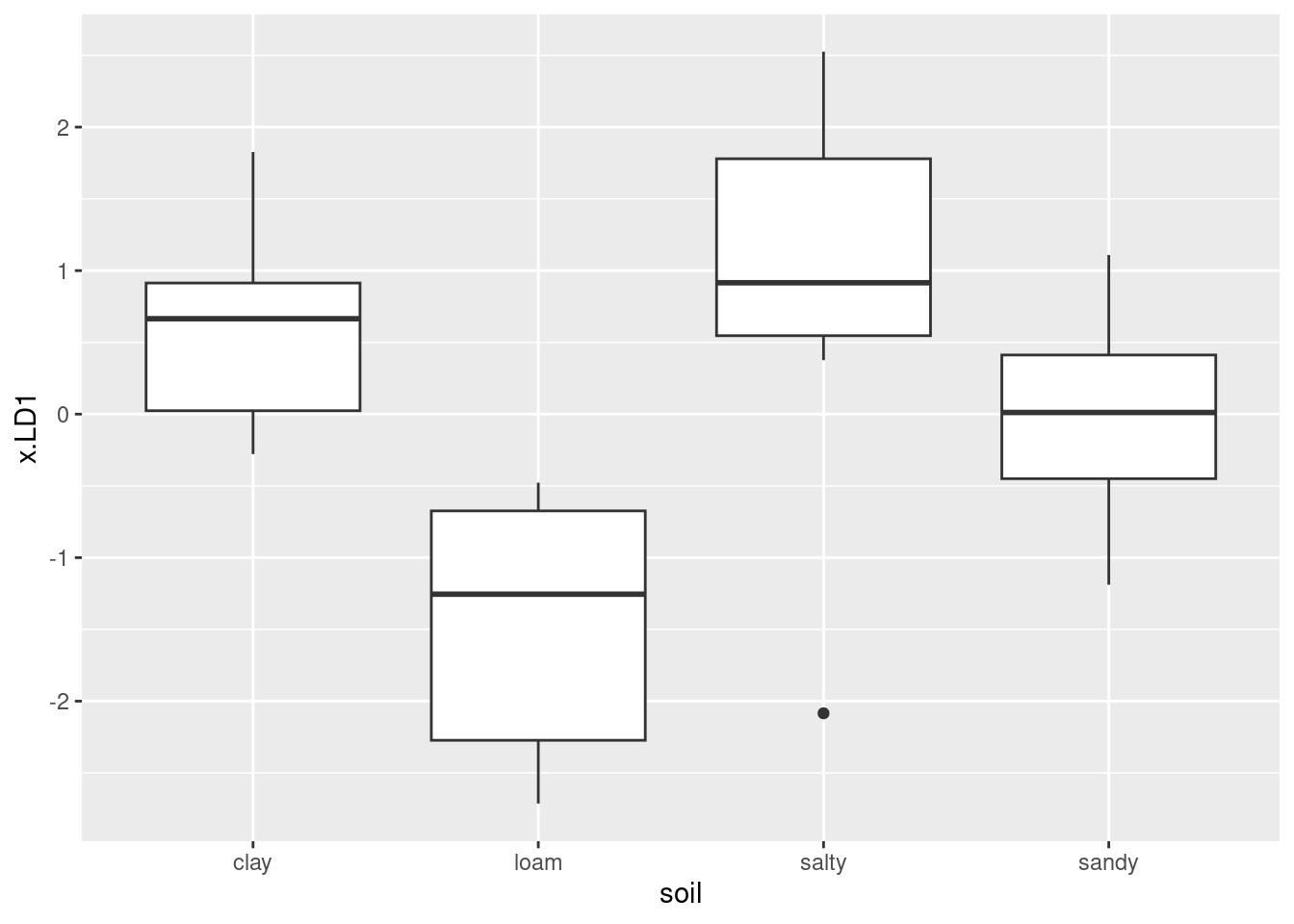

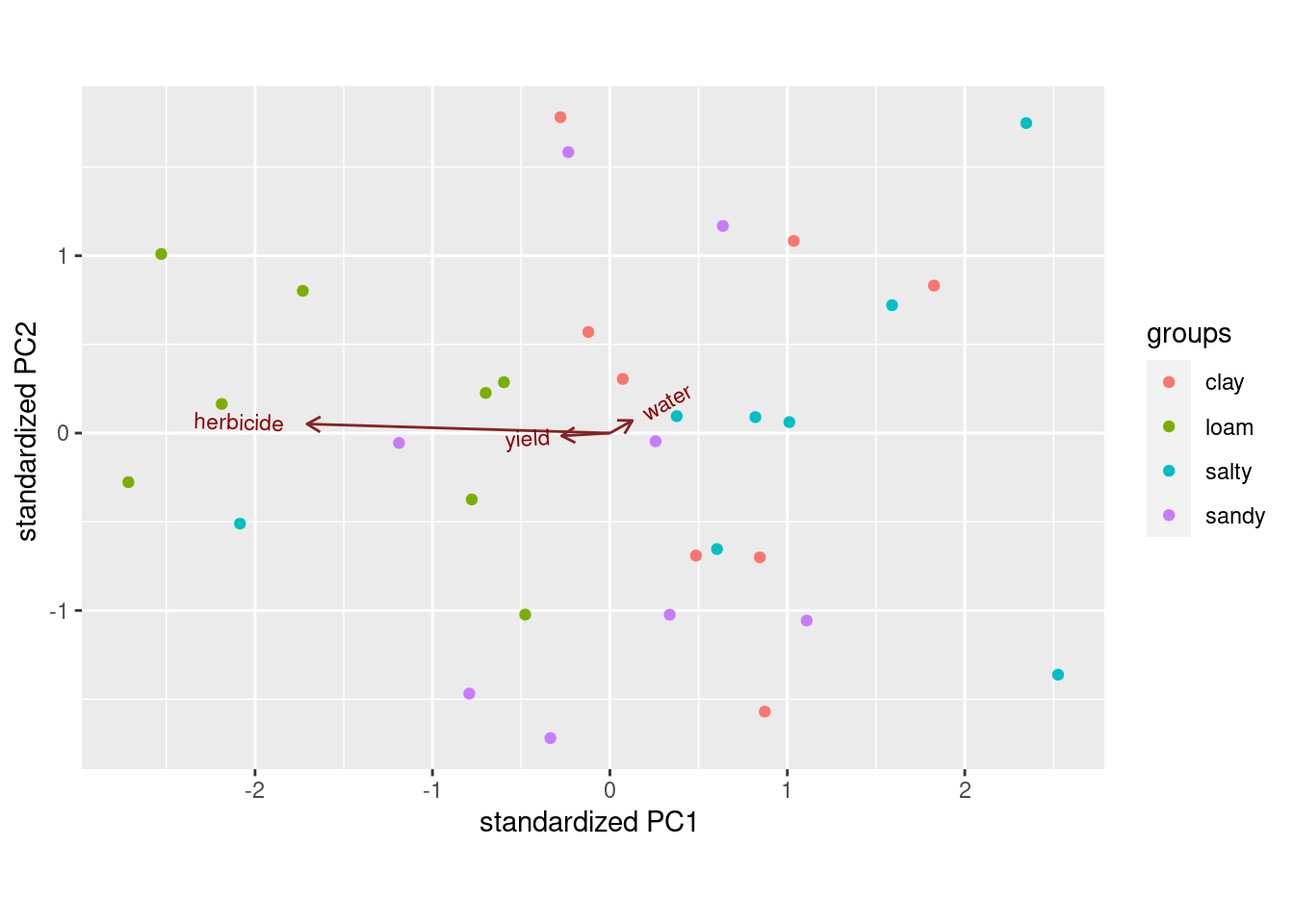

A new type of corn seed has been developed. The people developing it want to know if the type of soil the seed is planted in has an impact on how well the seed performs, and if so, what kind of impact. Three outcome measures were used: the yield of corn produced (from a fixed amount of seed), the amount of water needed, and the amount of herbicide needed. The data are in link. 32 fields were planted with the seed, 8 fields with each soil type.

Read in the data and verify that you have 32 observations with the correct variables.

Run a multivariate analysis of variance to see whether the type of soil has any effect on any of the variables. What do you conclude from it?

Run a discriminant analysis on these data, “predicting” soil type from the three response variables. Display the results.

* Which linear discriminants seem to be worth paying attention to? Why did you get three linear discriminants? Explain briefly.

Which response variables do the important linear discriminants depend on? Answer this by extracting something from your discriminant analysis output.

Obtain predictions for the discriminant analysis. (You don’t need to do anything with them yet.)

Plot the first two discriminant scores against each other, coloured by soil type. You’ll have to start by making a data frame containing what you need.

On your plot that you just made, explain briefly how

LD1distinguishes at least one of the soil types.On your plot, does

LD2appear to do anything to separate the groups? Is this surprising given your earlier findings? Explain briefly.Make a table of actual and predicted

soilgroup. Which soil type was classified correctly the most often?

34.7 Understanding athletes’ height, weight, sport and gender

On a previous assignment, we used MANOVA on the athletes data to demonstrate that there was a significant relationship between the combination of the athletes’ height and weight, with the sport they play and the athlete’s gender. The problem with MANOVA is that it doesn’t give any information about the kind of relationship. To understand that, we need to do discriminant analysis, which is the purpose of this question.

The data can be found at link.

Once again, read in and display (some of) the data, bearing in mind that the data values are separated by tabs. (This ought to be a free two marks.)

Use

uniteto make a new column in your data frame which contains the sport-gender combination. Display it. (You might like to display only a few columns so that it is clear that you did the right thing.) Hint: you’ve seenunitein the peanuts example in class.Run a discriminant analysis “predicting” sport-gender combo from height and weight. Display the results. (No comment needed yet.)

What kind of height and weight would make an athlete have a large (positive) score on

LD1? Explain briefly.Make a guess at the sport-gender combination that has the highest score on LD1. Why did you choose the combination you did?

What combination of height and weight would make an athlete have a small* (that is, very negative) score on LD2? Explain briefly.

Obtain predictions for the discriminant analysis, and use these to make a plot of

LD1score againstLD2score, with the individual athletes distinguished by what sport they play and gender they are. (You can use colour to distinguish them, or you can use shapes. If you want to go the latter way, there are clues in my solutions to the MANOVA question about these athletes.)Look on your graph for the four athletes with the smallest (most negative) scores on

LD2. What do they have in common? Does this make sense, given your answer to part (here)? Explain briefly.Obtain a (very large) square table, or a (very long) table with frequencies, of actual and predicted sport-gender combinations. You will probably have to make the square table very small to fit it on the page. For that, displaying the columns in two or more sets is OK (for example, six columns and all the rows, six more columns and all the rows, then the last five columns for all the rows). Are there any sport-gender combinations that seem relatively easy to classify correctly? Explain briefly.

My solutions follow:

34.8 Telling whether a banknote is real or counterfeit

* A Swiss bank collected a number of known counterfeit (fake) bills over time, and sampled a number of known genuine bills of the same denomination. Is it possible to tell, from measurements taken from a bill, whether it is genuine or not? We will explore that issue here. The variables measured were:

length

right-hand width

left-hand width

top margin

bottom margin

diagonal

- Read in the data from link, and check that you have 200 rows and 7 columns altogether.

Solution

Check the data file first. It’s aligned in columns, thus:

my_url <- "http://ritsokiguess.site/datafiles/swiss1.txt"

swiss <- read_table(my_url)##

## ── Column specification ────────────────────────────────────────────────────────

## cols(

## length = col_double(),

## left = col_double(),

## right = col_double(),

## bottom = col_double(),

## top = col_double(),

## diag = col_double(),

## status = col_character()

## )swiss## # A tibble: 200 × 7

## length left right bottom top diag status

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <chr>

## 1 215. 131 131. 9 9.7 141 genuine

## 2 215. 130. 130. 8.1 9.5 142. genuine

## 3 215. 130. 130. 8.7 9.6 142. genuine

## 4 215. 130. 130. 7.5 10.4 142 genuine

## 5 215 130. 130. 10.4 7.7 142. genuine

## 6 216. 131. 130. 9 10.1 141. genuine

## 7 216. 130. 130. 7.9 9.6 142. genuine

## 8 214. 130. 129. 7.2 10.7 142. genuine

## 9 215. 129. 130. 8.2 11 142. genuine

## 10 215. 130. 130. 9.2 10 141. genuine

## # … with 190 more rowsYep, 200 rows and 7 columns.

\(\blacksquare\)

- Run a multivariate analysis of variance. What do you conclude? Is it worth running a discriminant analysis? (This is the same procedure as with basic MANOVAs before.)

Solution

Small-m manova will do here:

response <- with(swiss, cbind(length, left, right, bottom, top, diag))

swiss.1 <- manova(response ~ status, data = swiss)

summary(swiss.1)## Df Pillai approx F num Df den Df Pr(>F)

## status 1 0.92415 391.92 6 193 < 2.2e-16 ***

## Residuals 198

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1summary(BoxM(response, swiss$status))## Box's M Test

##

## Chi-Squared Value = 121.8991 , df = 21 and p-value: 3.2e-16That is a very significant Box’s M test, which means that we shouldn’t trust the MANOVA at all. It is only the fact that the MANOVA is so significant that provides any evidence that the discriminant analysis is worth doing.

Extra: you might be wondering whether you had to go to all that trouble to make the response variable. Would this work?

response2 <- swiss %>% select(length:diag)

swiss.1a <- manova(response2 ~ status, data = swiss)## Error in model.frame.default(formula = response2 ~ status, data = swiss, : invalid type (list) for variable 'response2'No, because response2 needs to be an R matrix, and it isn’t:

class(response2)## [1] "tbl_df" "tbl" "data.frame"The error message was a bit cryptic (nothing unusual there), but a

data frame (to R) is a special kind of list, so that R didn’t

like response2 being a data frame, which it

thought was a list.

This, however, works, since it turns the data frame into a matrix:

response4 <- swiss %>% select(length:diag) %>% as.matrix()

swiss.2a <- manova(response4 ~ status, data = swiss)

summary(swiss.2a)## Df Pillai approx F num Df den Df Pr(>F)

## status 1 0.92415 391.92 6 193 < 2.2e-16 ***

## Residuals 198

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Anyway, the conclusion: the status of a bill (genuine or counterfeit) definitely has an influence on some or all of those other variables, since the P-value \(2.2 \times 10^{-16}\) (or less) is really small. So it is apparently worth running a discriminant analysis to figure out where the differences lie.

As a piece of strategy, for creating the response matrix, you can

always either use cbind, which creates a matrix

directly, or you can use select, which is often easier but

creates a data frame, and then turn that into a matrix

using as.matrix. As long as you end up with a

matrix, it’s all good.

\(\blacksquare\)

- Run a discriminant analysis. Display the output.

Solution

Now we forget about all that

response stuff. For a discriminant analysis, the

grouping variable (or combination of the grouping variables)

is the “response”, and the quantitative ones are

“explanatory”:

swiss.3 <- lda(status ~ length + left + right + bottom + top + diag, data = swiss)

swiss.3## Call:

## lda(status ~ length + left + right + bottom + top + diag, data = swiss)

##

## Prior probabilities of groups:

## counterfeit genuine

## 0.5 0.5

##

## Group means:

## length left right bottom top diag

## counterfeit 214.823 130.300 130.193 10.530 11.133 139.450

## genuine 214.969 129.943 129.720 8.305 10.168 141.517

##

## Coefficients of linear discriminants:

## LD1

## length 0.005011113

## left 0.832432523

## right -0.848993093

## bottom -1.117335597

## top -1.178884468

## diag 1.556520967\(\blacksquare\)

- How many linear discriminants did you get? Is that making sense? Explain briefly.

Solution

I got one discriminant, which makes sense because there are two groups, and the smaller of 6 (variables, not counting the grouping one) and \(2-1\) is 1.

\(\blacksquare\)

- * Using your output from the discriminant analysis, describe how each of the linear discriminants that you got is related to your original variables. (This can, maybe even should, be done crudely: “does each variable feature in each linear discriminant: yes or no?”.)

Solution

This is the Coefficients of Linear Discriminants. Make a call about whether each of those coefficients is close to zero (small in size compared to the others), or definitely positive or definitely negative.

These are judgement calls: either you can say that LD1

depends mainly on diag (treating the other coefficients

as “small” or close to zero), or you can say that LD1

depends on everything except length.

\(\blacksquare\)

- What values of your variable(s) would make

LD1large and positive?

Solution

Depending on your answer to the previous part:

If you said that only diag was important, diag

being large would make LD1 large and positive.

If you said that everything but length was important,

then it’s a bit more complicated: left and

diag large, right, bottom and

top small (since their coefficients are negative).

\(\blacksquare\)

- * Find the means of each variable for each group (genuine and counterfeit bills). You can get this from your fitted linear discriminant object.

Solution

swiss.3$means## length left right bottom top diag

## counterfeit 214.823 130.300 130.193 10.530 11.133 139.450

## genuine 214.969 129.943 129.720 8.305 10.168 141.517\(\blacksquare\)

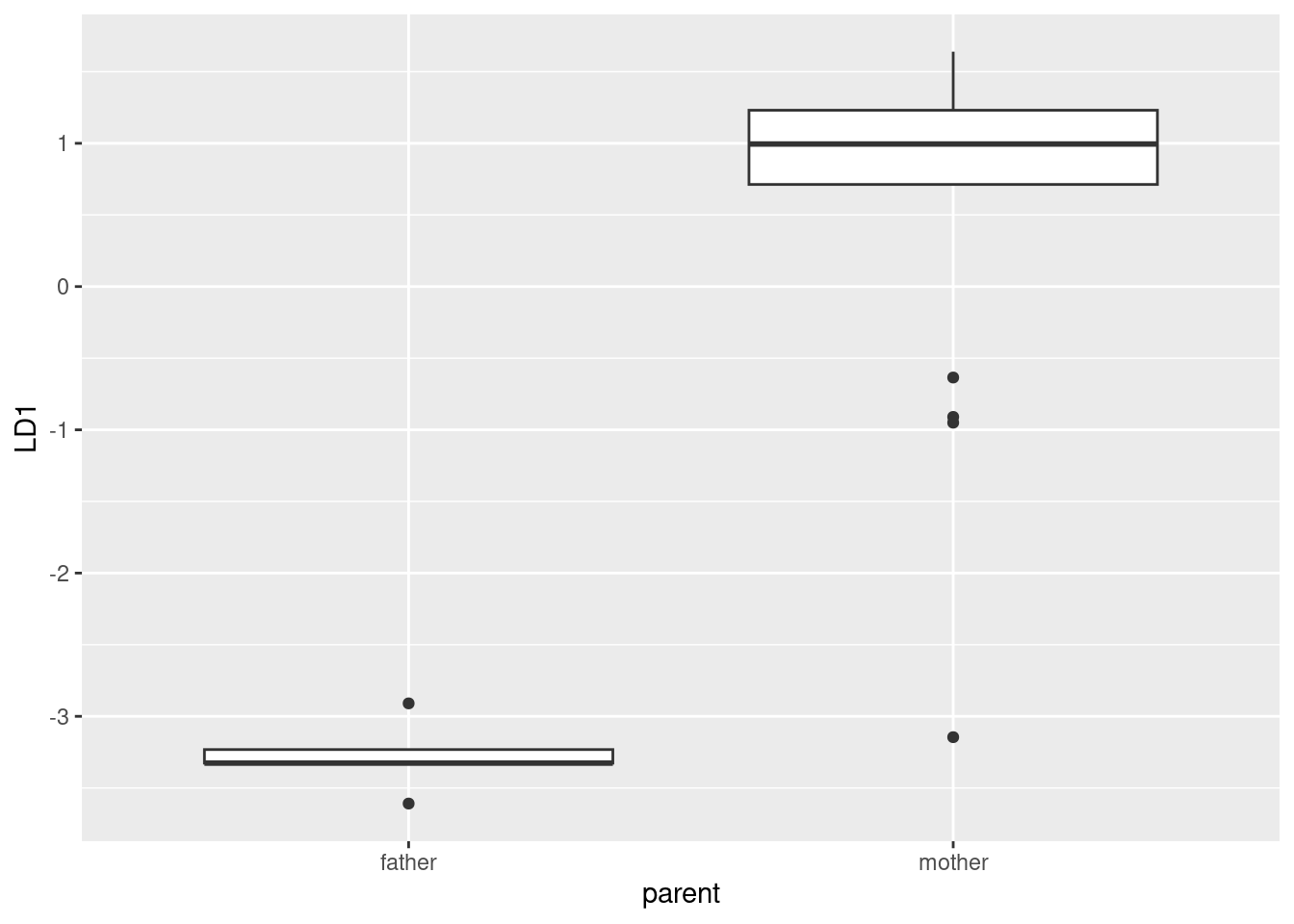

- Plot your linear discriminant(s), however you like. Bear in mind that there is only one linear discriminant.

Solution

With only one linear discriminant, we can plot LD1 scores on

the \(y\)-axis and the grouping variable on the \(x\)-axis. How

you do that is up to you.

Before we start, though, we need the LD1 scores. This means

doing predictions. The discriminant scores are in there. We take the

prediction output and make a data frame with all the things in the

original data. My current preference (it changes) is to store the

predictions, and then cbind them with the original data,

thus:

swiss.pred <- predict(swiss.3)

d <- cbind(swiss, swiss.pred)

head(d)## length left right bottom top diag status class posterior.counterfeit

## 1 214.8 131.0 131.1 9.0 9.7 141.0 genuine genuine 3.245560e-07

## 2 214.6 129.7 129.7 8.1 9.5 141.7 genuine genuine 1.450624e-14

## 3 214.8 129.7 129.7 8.7 9.6 142.2 genuine genuine 1.544496e-14

## 4 214.8 129.7 129.6 7.5 10.4 142.0 genuine genuine 4.699587e-15

## 5 215.0 129.6 129.7 10.4 7.7 141.8 genuine genuine 1.941700e-13

## 6 215.7 130.8 130.5 9.0 10.1 141.4 genuine genuine 1.017550e-08

## posterior.genuine LD1

## 1 0.9999997 2.150948

## 2 1.0000000 4.587317

## 3 1.0000000 4.578290

## 4 1.0000000 4.749580

## 5 1.0000000 4.213851

## 6 1.0000000 2.649422I needed head because cbind makes an old-fashioned

data.frame rather than a tibble, so if you display

it, you get all of it.

This gives the LD1 scores, predicted groups, and posterior

probabilities as well. That saves us having to pick out the other

things later.

The obvious thing is a boxplot. By examining d above (didn’t

you?), you saw that the LD scores were in a column called

LD1:

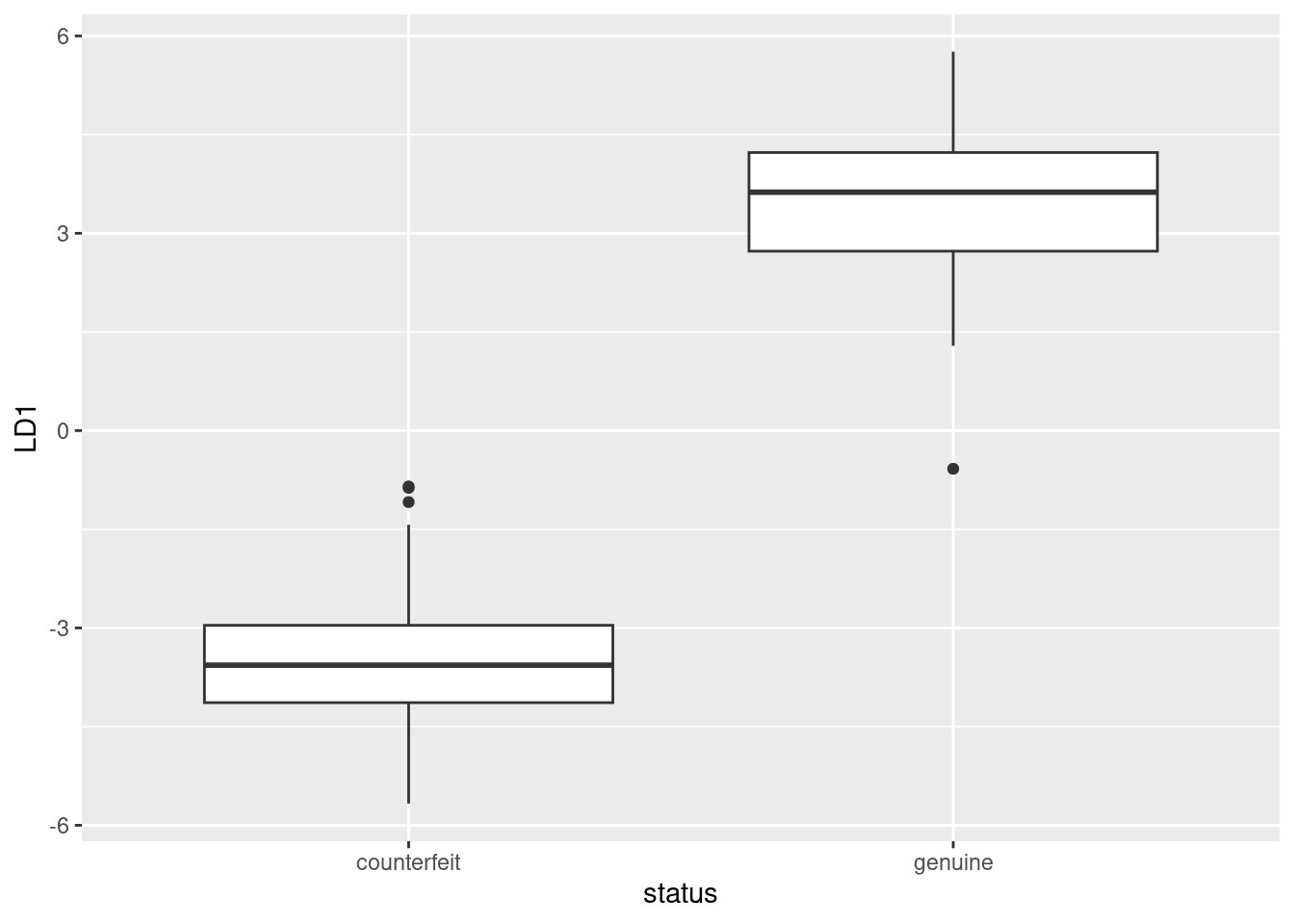

ggplot(d, aes(x = status, y = LD1)) + geom_boxplot()

This shows that positive LD1 scores go (almost without exception) with genuine bills, and negative ones with counterfeit bills. It also shows that there are three outlier bills, two counterfeit ones with unusually high LD1 score, and one genuine one with unusually low LD1 score, at least for a genuine bill.

This goes to show that (the Box M test notwithstanding) the two types of bill really are different in a way that is worth investigating.

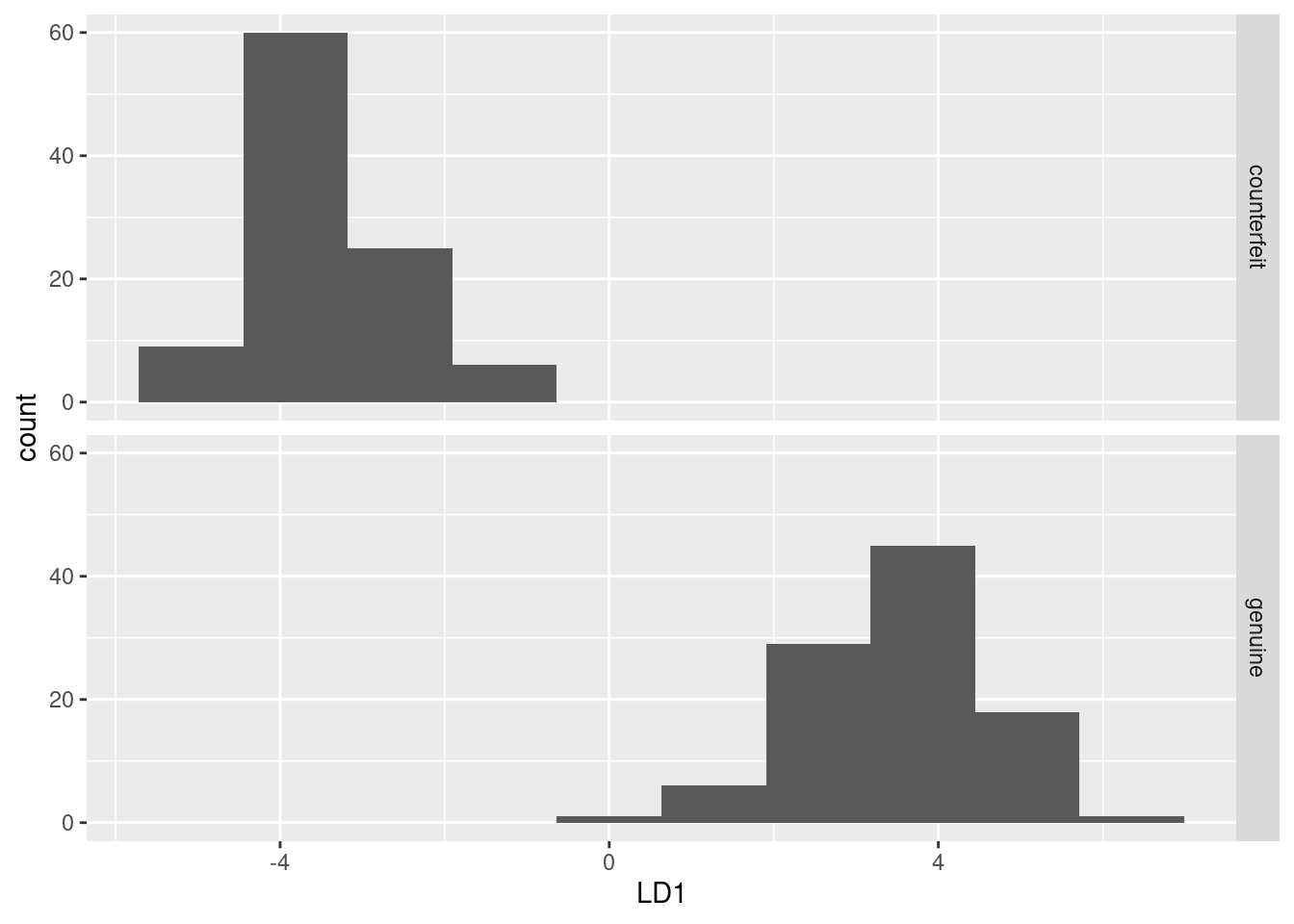

Or you could do faceted histograms of LD1 by status:

ggplot(d, aes(x = LD1)) + geom_histogram(bins = 10) + facet_grid(status ~ .)

This shows much the same thing as plot(swiss.3) does (try it).

\(\blacksquare\)

- What kind of score on

LD1do genuine bills typically have? What kind of score do counterfeit bills typically have? What characteristics of a bill, therefore, would you look at to determine if a bill is genuine or counterfeit?

Solution

The genuine bills almost all have a positive score on

LD1, while the counterfeit ones all have a negative one.

This means that the genuine bills (depending on your answer to

(here)) have a large diag, or they have a

large left and diag, and a small

right, bottom and top.

If you look at your table of means in (here), you’ll

see that the genuine bills do indeed have a large diag,

or, depending on your earlier answer, a small right,

bottom and top, but not actually a small

left (the left values are very close for the

genuine and counterfeit coins).

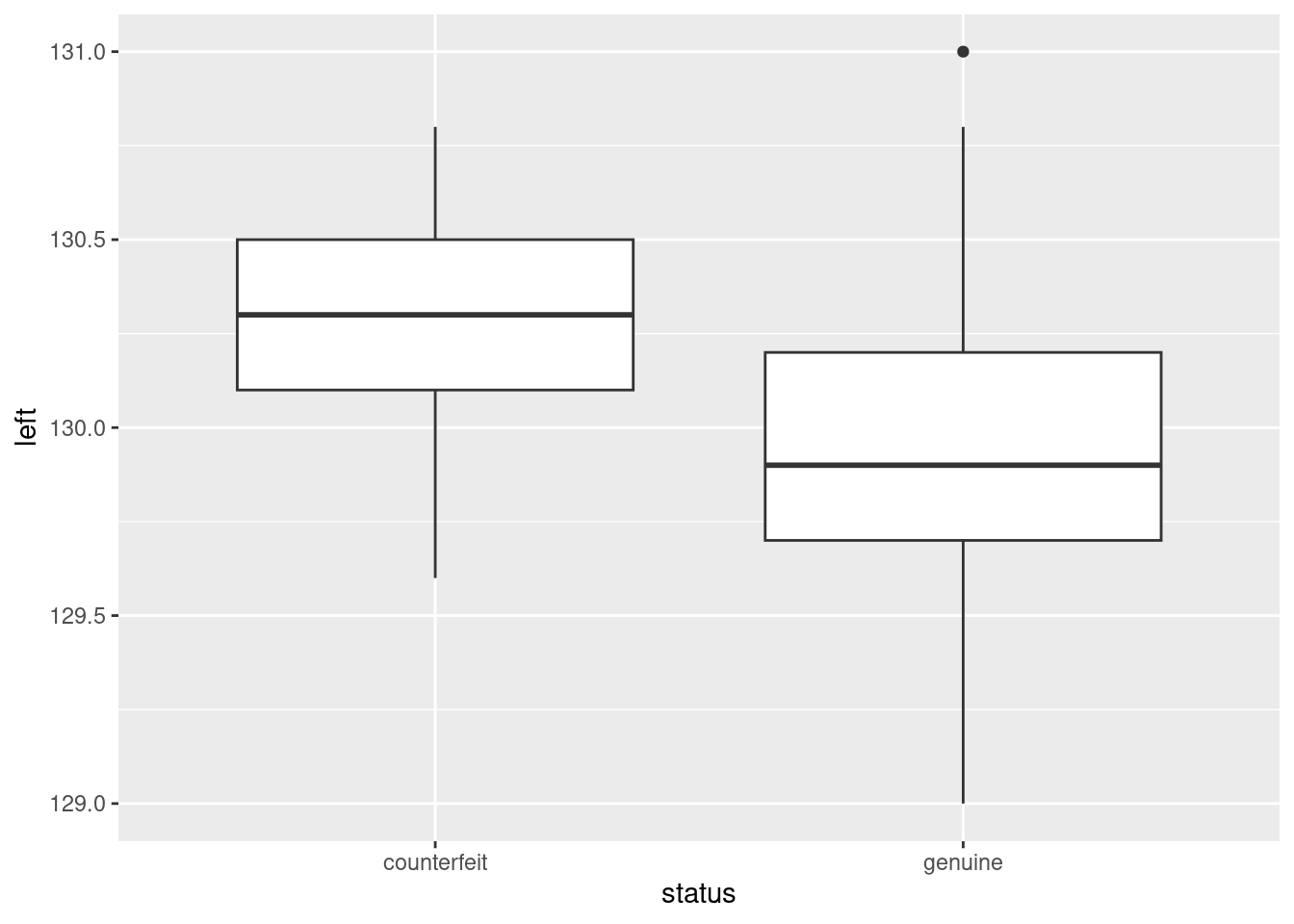

Extra: as to that last point, this is easy enough to think about. A boxplot seems a nice way to display it:

ggplot(d, aes(y = left, x = status)) + geom_boxplot()

There is a fair bit of overlap: the median is higher for the counterfeit bills, but the highest value actually belongs to a genuine one.

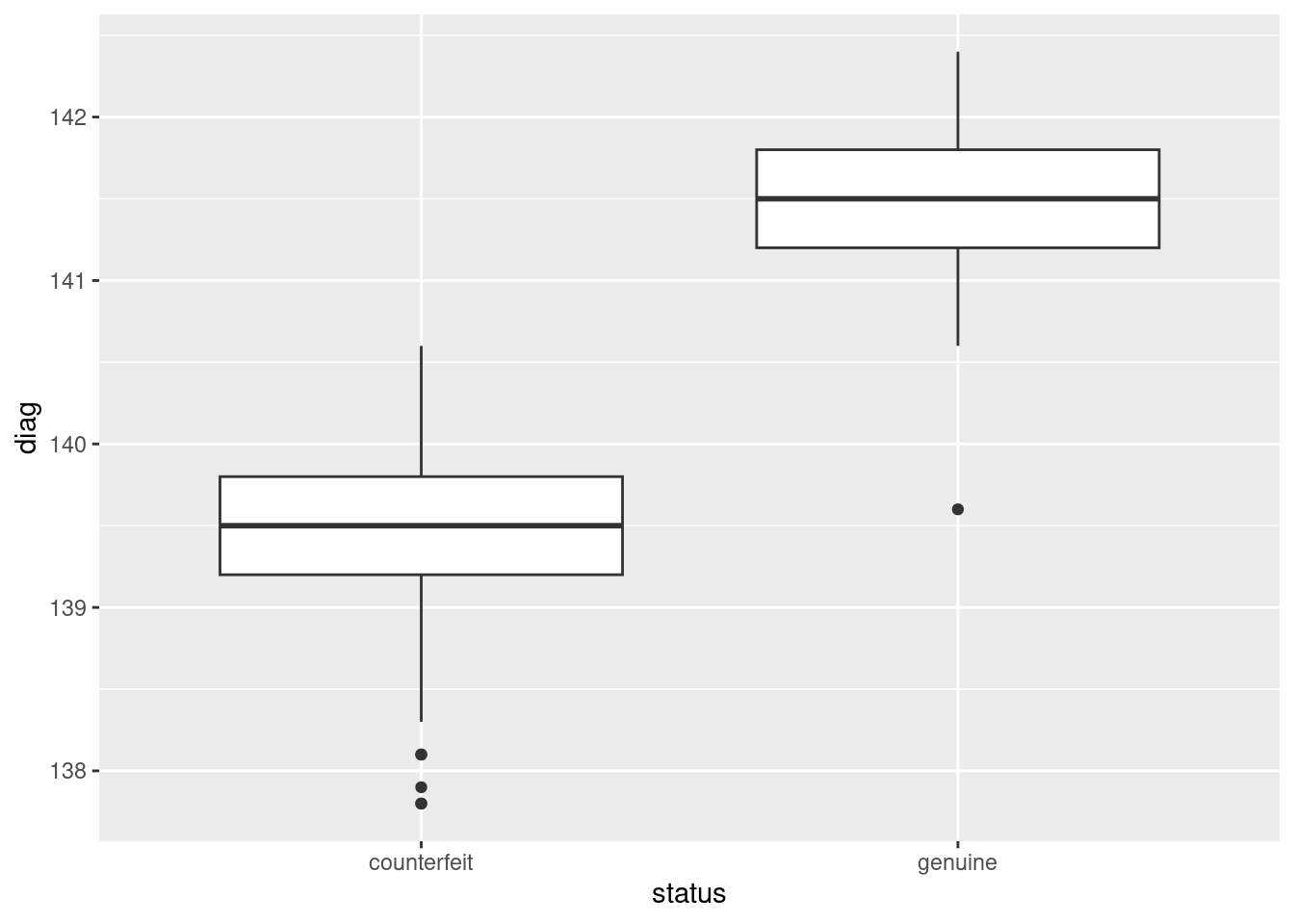

Compare that to diag:

ggplot(d, aes(y = diag, x = status)) + geom_boxplot()

Here, there is an almost complete separation of the genuine and

counterfeit bills, with just one low outlier amongst the genuine bills

spoiling the pattern.

I didn’t look at the predictions (beyond the discriminant scores),

since this question (as set on an assignment a couple of years ago)

was already too long, but there is no difficulty in doing so.

Everything is in the data frame I called d:

with(d, table(obs = status, pred = class))## pred

## obs counterfeit genuine

## counterfeit 100 0

## genuine 1 99(this labels the rows and columns, which is not necessary but is nice.)

The tidyverse way is to make a data frame out of the actual

and predicted statuses, and then count what’s in there:

d %>% count(status, class)## status class n

## 1 counterfeit counterfeit 100

## 2 genuine counterfeit 1

## 3 genuine genuine 99This gives a “long” table, with frequencies for each of the combinations for which anything was observed.

Frequency tables are usually wide, and we can make this one so by pivot-wider-ing pred:

d %>%

count(status, class) %>%

pivot_wider(names_from = class, values_from = n)## # A tibble: 2 × 3

## status counterfeit genuine

## <chr> <int> <int>

## 1 counterfeit 100 NA

## 2 genuine 1 99One of the genuine bills is incorrectly classified as a counterfeit one (evidently that low outlier on LD1), but every single one of the counterfeit bills is classified correctly. That missing value is actually a frequency that is zero, which you can fix up thus:

d %>%

count(status, class) %>%

pivot_wider(names_from = class, values_from = n, values_fill = 0) ## # A tibble: 2 × 3

## status counterfeit genuine

## <chr> <int> <int>

## 1 counterfeit 100 0

## 2 genuine 1 99which turns any missing values into the zeroes they should be in this kind of problem. It would be interesting to see what the posterior probabilities look like for that misclassified bill:

d %>% filter(status != class)## length left right bottom top diag status class

## 70 214.9 130.2 130.2 8 11.2 139.6 genuine counterfeit

## posterior.counterfeit posterior.genuine LD1

## 70 0.9825773 0.01742267 -0.5805239On the basis of the six measured variables, this looks a lot more like a counterfeit bill than a genuine one. Are there any other bills where there is any doubt? One way to find out is to find the maximum of the two posterior probabilities. If this is small, there is some doubt about whether the bill is real or fake. 0.99 seems like a very stringent cutoff, but let’s try it and see:

d %>%

mutate(max.post = pmax(posterior.counterfeit, posterior.genuine)) %>%

filter(max.post < 0.99) %>%

dplyr::select(-c(length:diag))## status class posterior.counterfeit posterior.genuine LD1

## 70 genuine counterfeit 0.9825773 0.01742267 -0.5805239

## max.post

## 70 0.9825773The only one is the bill that was misclassified: it was actually genuine, but was classified as counterfeit. The posterior probabilities say that it was pretty unlikely to be genuine, but it was the only bill for which there was any noticeable doubt at all.

I had to use pmax rather than max there, because I

wanted max.post to contain the larger of the two

corresponding entries: that is, the first entry in max.post

is the larger of the first entry of counterfeit and the first

entry in genuine. If I used max instead, I’d get the

largest of all the entries in counterfeit and

all the entries in genuine, repeated 200 times. (Try

it and see.) pmax stands for “parallel maximum”, that is,

for each row separately. This also should work:

d %>%

rowwise() %>%

mutate(max.post = max(posterior.counterfeit, posterior.genuine)) %>%

filter(max.post < 0.99) %>%

select(-c(length:diag))## # A tibble: 1 × 6

## # Rowwise:

## status class posterior.counterfeit posterior.genuine LD1 max.post

## <chr> <fct> <dbl> <dbl> <dbl> <dbl>

## 1 genuine counterfeit 0.983 0.0174 -0.581 0.983Because we’re using rowwise, max is applied to the pairs

of values of posterior.counterfeit and posterior.genuine,

taken one row at a time.

\(\blacksquare\)

34.9 Urine and obesity: what makes a difference?

A study was made of the characteristics of urine of young

men. The men were classified into four groups based on their degree of

obesity. (The groups are labelled a, b, c, d.) Four variables

were measured, x (which you can ignore), pigment creatinine,

chloride and chlorine. The data are in

link as a

.csv file. There are 45 men altogether.

Yes, you may have seen this one before. What you found was something like this:

my_url <- "http://ritsokiguess.site/datafiles/urine.csv"

urine <- read_csv(my_url)## Rows: 45 Columns: 5

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: ","

## chr (1): obesity

## dbl (4): x, creatinine, chloride, chlorine

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.response <- with(urine, cbind(creatinine, chlorine, chloride))

urine.1 <- manova(response ~ obesity, data = urine)

summary(urine.1)## Df Pillai approx F num Df den Df Pr(>F)

## obesity 3 0.43144 2.2956 9 123 0.02034 *

## Residuals 41

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1summary(BoxM(response, urine$obesity))## Box's M Test

##

## Chi-Squared Value = 30.8322 , df = 18 and p-value: 0.0301Our aim is to understand why this result was significant. (Remember that the P-value on Box’s M test is not small enough to be worried about.)

- Read in the data again (copy the code from above) and obtain a discriminant analysis.

Solution

As above, plus:

urine.1 <- lda(obesity ~ creatinine + chlorine + chloride, data = urine)

urine.1## Call:

## lda(obesity ~ creatinine + chlorine + chloride, data = urine)

##

## Prior probabilities of groups:

## a b c d

## 0.2666667 0.3111111 0.2444444 0.1777778

##

## Group means:

## creatinine chlorine chloride

## a 15.89167 5.275000 6.012500

## b 17.82143 7.450000 5.214286

## c 16.34545 8.272727 5.372727

## d 11.91250 9.675000 3.981250

##

## Coefficients of linear discriminants:

## LD1 LD2 LD3

## creatinine 0.24429462 -0.1700525 -0.02623962

## chlorine -0.02167823 -0.1353051 0.11524045

## chloride 0.23805588 0.3590364 0.30564592

##

## Proportion of trace:

## LD1 LD2 LD3

## 0.7476 0.2430 0.0093\(\blacksquare\)

- How many linear discriminants were you expecting? Explain briefly.

Solution

There are 3 variables and 4 groups, so the smaller of 3 and \(4-1=3\): that is, 3.

\(\blacksquare\)

- Why do you think we should pay attention to the first two linear discriminants but not the third? Explain briefly.

Solution

The first two “proportion of trace” values are a lot bigger than the third (or, the third one is close to 0).

\(\blacksquare\)

- Plot the first two linear discriminant scores (against each other), with each obesity group being a different colour.

Solution

First obtain the predictions, and then make a data frame out of the original data and the predictions.

urine.pred <- predict(urine.1)

d <- cbind(urine, urine.pred)

head(d)## obesity x creatinine chloride chlorine class posterior.a posterior.b

## 1 a 24 17.6 5.15 7.5 b 0.2327008 0.4124974

## 2 a 32 13.4 5.75 7.1 a 0.3599095 0.2102510

## 3 a 17 20.3 4.35 2.3 b 0.2271118 0.4993603

## 4 a 30 22.3 7.55 4.0 b 0.2935374 0.4823766

## 5 a 30 20.5 8.50 2.0 a 0.4774623 0.3258104

## 6 a 27 18.5 10.25 2.0 a 0.6678748 0.1810762

## posterior.c posterior.d x.LD1 x.LD2 x.LD3

## 1 0.3022445 0.052557333 0.3926519 -0.3290621 -0.0704284

## 2 0.2633959 0.166443708 -0.4818807 0.6547023 0.1770694

## 3 0.2519562 0.021571722 0.9745295 -0.3718462 -0.9850425

## 4 0.2211991 0.002886957 2.1880446 0.2069465 0.1364540

## 5 0.1933571 0.003370286 2.0178238 1.1247359 0.2435680

## 6 0.1482500 0.002799004 1.9458323 2.0931546 0.8309276urine produced the first five columns and urine.pred

produced the rest.

To go a more tidyverse way, we can combine the original data frame and

the predictions using bind_cols, but we have to be more

careful that the things we are gluing together are both data frames:

class(urine)## [1] "spec_tbl_df" "tbl_df" "tbl" "data.frame"class(urine.pred)## [1] "list"urine is a tibble all right, but urine.pred is a list. What does it look like?

glimpse(urine.pred)## List of 3

## $ class : Factor w/ 4 levels "a","b","c","d": 2 1 2 2 1 1 3 3 1 1 ...

## $ posterior: num [1:45, 1:4] 0.233 0.36 0.227 0.294 0.477 ...

## ..- attr(*, "dimnames")=List of 2

## .. ..$ : chr [1:45] "1" "2" "3" "4" ...

## .. ..$ : chr [1:4] "a" "b" "c" "d"

## $ x : num [1:45, 1:3] 0.393 -0.482 0.975 2.188 2.018 ...

## ..- attr(*, "dimnames")=List of 2

## .. ..$ : chr [1:45] "1" "2" "3" "4" ...

## .. ..$ : chr [1:3] "LD1" "LD2" "LD3"A data frame is a list for which all the items are the same length,

but some of the things in here are matrices. You can tell because they

have a number of rows, 45, and a number of columns, 3 or

4. They do have the right number of rows, though, so something

like as.data.frame (a base R function) will smoosh them all

into one data frame, grabbing the columns from the matrices:

head(as.data.frame(urine.pred))## class posterior.a posterior.b posterior.c posterior.d x.LD1 x.LD2

## 1 b 0.2327008 0.4124974 0.3022445 0.052557333 0.3926519 -0.3290621

## 2 a 0.3599095 0.2102510 0.2633959 0.166443708 -0.4818807 0.6547023

## 3 b 0.2271118 0.4993603 0.2519562 0.021571722 0.9745295 -0.3718462

## 4 b 0.2935374 0.4823766 0.2211991 0.002886957 2.1880446 0.2069465

## 5 a 0.4774623 0.3258104 0.1933571 0.003370286 2.0178238 1.1247359

## 6 a 0.6678748 0.1810762 0.1482500 0.002799004 1.9458323 2.0931546

## x.LD3

## 1 -0.0704284

## 2 0.1770694

## 3 -0.9850425

## 4 0.1364540

## 5 0.2435680

## 6 0.8309276You see that the columns that came from matrices have gained two-part names, the first part from the name of the matrix, the second part from the column name within that matrix. Then we can do this:

dd <- bind_cols(urine, as.data.frame(urine.pred))

dd## # A tibble: 45 × 13

## obesity x creatinine chloride chlorine class posterior.a posterior.b

## * <chr> <dbl> <dbl> <dbl> <dbl> <fct> <dbl> <dbl>

## 1 a 24 17.6 5.15 7.5 b 0.233 0.412

## 2 a 32 13.4 5.75 7.1 a 0.360 0.210

## 3 a 17 20.3 4.35 2.3 b 0.227 0.499

## 4 a 30 22.3 7.55 4 b 0.294 0.482

## 5 a 30 20.5 8.5 2 a 0.477 0.326

## 6 a 27 18.5 10.2 2 a 0.668 0.181

## 7 a 25 12.1 5.95 16.8 c 0.167 0.208

## 8 a 30 12 6.3 14.5 c 0.230 0.197

## 9 a 28 10.1 5.45 0.9 a 0.481 0.0752

## 10 a 24 14.7 3.75 2 a 0.323 0.247

## # … with 35 more rows, and 5 more variables: posterior.c <dbl>,

## # posterior.d <dbl>, x.LD1 <dbl>, x.LD2 <dbl>, x.LD3 <dbl>If you want to avoid base R altogether, though, and go straight to

bind_cols, you have to be more careful about the types of

things. bind_cols only works with vectors and data

frames, not matrices, so that is what it is up to you to make sure you

have. That means pulling out the pieces, turning them from matrices

into data frames, and then gluing everything back together:

post <- as_tibble(urine.pred$posterior)

ld <- as_tibble(urine.pred$x)

ddd <- bind_cols(urine, class = urine.pred$class, ld, post)

ddd## # A tibble: 45 × 13

## obesity x creatinine chloride chlorine class LD1 LD2 LD3 a

## <chr> <dbl> <dbl> <dbl> <dbl> <fct> <dbl> <dbl> <dbl> <dbl>

## 1 a 24 17.6 5.15 7.5 b 0.393 -0.329 -0.0704 0.233

## 2 a 32 13.4 5.75 7.1 a -0.482 0.655 0.177 0.360

## 3 a 17 20.3 4.35 2.3 b 0.975 -0.372 -0.985 0.227

## 4 a 30 22.3 7.55 4 b 2.19 0.207 0.136 0.294

## 5 a 30 20.5 8.5 2 a 2.02 1.12 0.244 0.477

## 6 a 27 18.5 10.2 2 a 1.95 2.09 0.831 0.668

## 7 a 25 12.1 5.95 16.8 c -0.962 -0.365 1.39 0.167

## 8 a 30 12 6.3 14.5 c -0.853 0.0890 1.23 0.230

## 9 a 28 10.1 5.45 0.9 a -1.23 1.95 -0.543 0.481

## 10 a 24 14.7 3.75 2 a -0.530 0.406 -1.06 0.323

## # … with 35 more rows, and 3 more variables: b <dbl>, c <dbl>, d <dbl>That’s a lot of work, but you might say that it’s worth it because you

are now absolutely sure what kind of thing everything is. I also had

to be slightly careful with the vector of class values; in

ddd it has to have a name, so I have to make sure I give it

one.37

Any of these ways (in general) is good. The last way is a more

careful approach, since you are making sure things are of the right

type rather than relying on R to convert them for you, but I don’t

mind which way you go.

Now make the plot, making sure that you are using columns with the right names. I’m using my first data frame, with the two-part names:

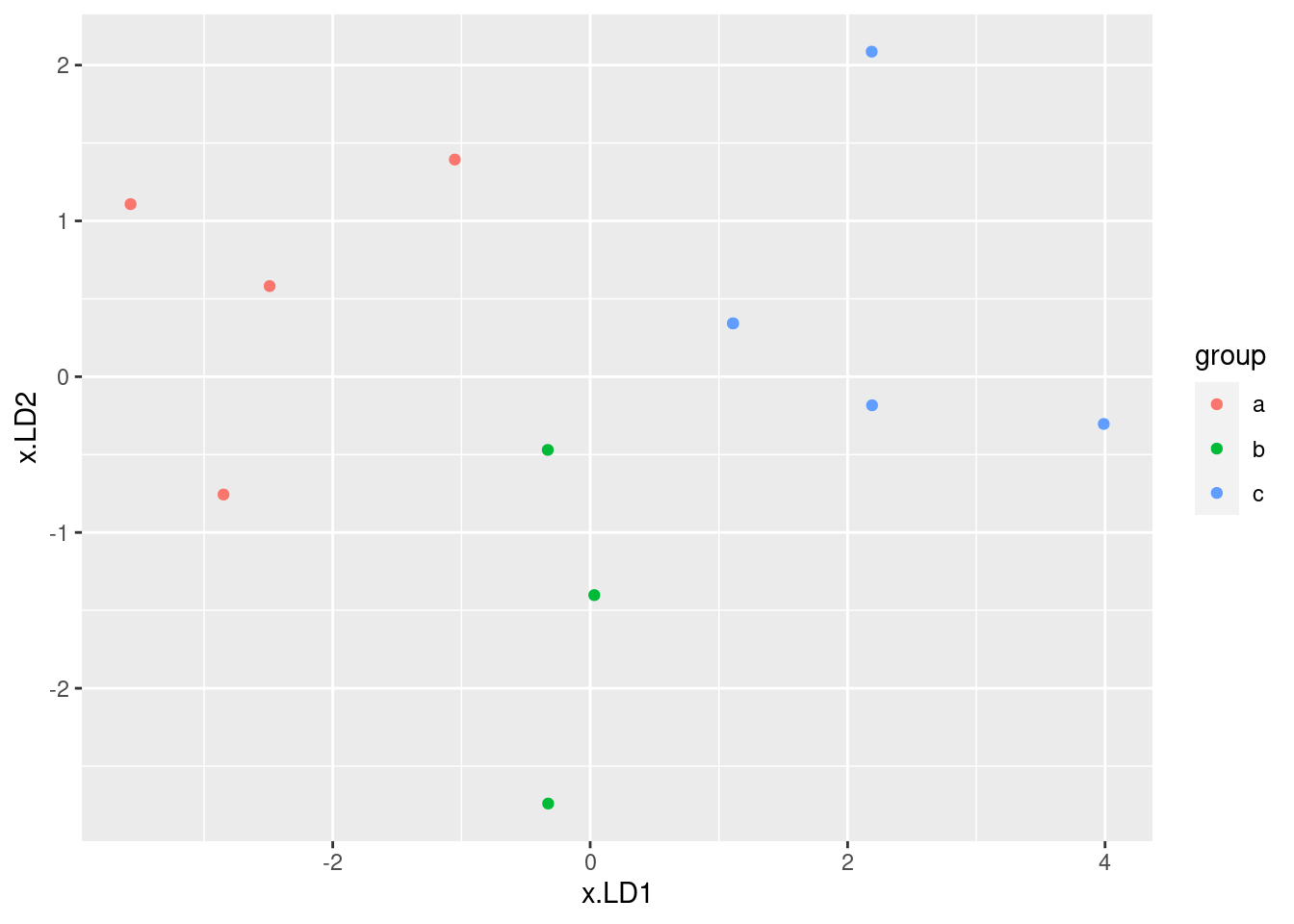

ggplot(d, aes(x = x.LD1, y = x.LD2, colour = obesity)) + geom_point()

\(\blacksquare\)

- * Looking at your plot, discuss how (if at all) the discriminants separate the obesity groups. (Where does each obesity group fall on the plot?)

Solution

My immediate reaction was

“they don’t much”. If you look a bit more closely, the

b group, in green, is on the right (high

LD1) and the d group (purple) is on the

left (low LD1). The a group, red, is

mostly at the top (high LD2) but the c

group, blue, really is all over the place.

The way to tackle interpreting a plot like this is to look for each group individually and see if that group is only or mainly found on a certain part of the plot.

This can be rationalized by looking at

the “coefficients of linear discriminants” on the output. LD1 is

low if creatinine and chloride are low (it has nothing

much to do with chlorine since that coefficient

is near zero). Group d is lowest on both

creatinine and chloride, so that will be lowest on

LD1. LD2 is high if chloride

is high, or creatinine and chlorine are

low. Out of the groups a, b, c, a has

the highest mean on chloride and lowest means on the other

two variables, so this should be highest on LD2

and (usually) is.

Looking at the means is only part of the story; if the

individuals within a group are very variable, as they are

here (especially group c), then that group will

appear all over the plot. The table of means only says how

the average individual within a group stacks up.

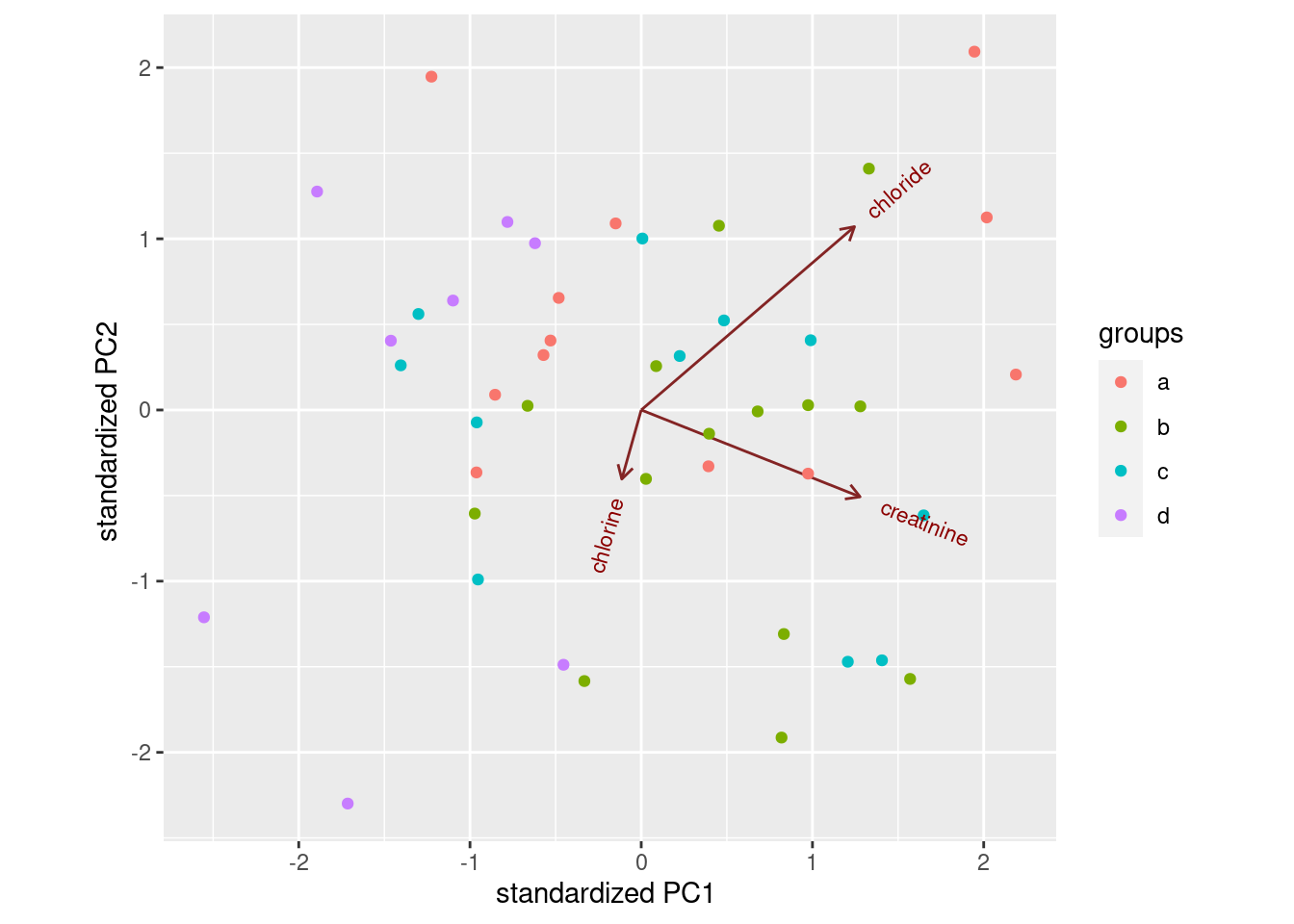

ggbiplot(urine.1, groups = urine$obesity)

This shows (in a way that is perhaps easier to see) how the linear

discriminants are related to the original variables, and thus how the

groups differ in terms of the original variables.38

Most of the B’s are high creatinine and high chloride (on the right); most of the D’s are low on both (on the left). LD2 has a bit of chloride, but not much of anything else.

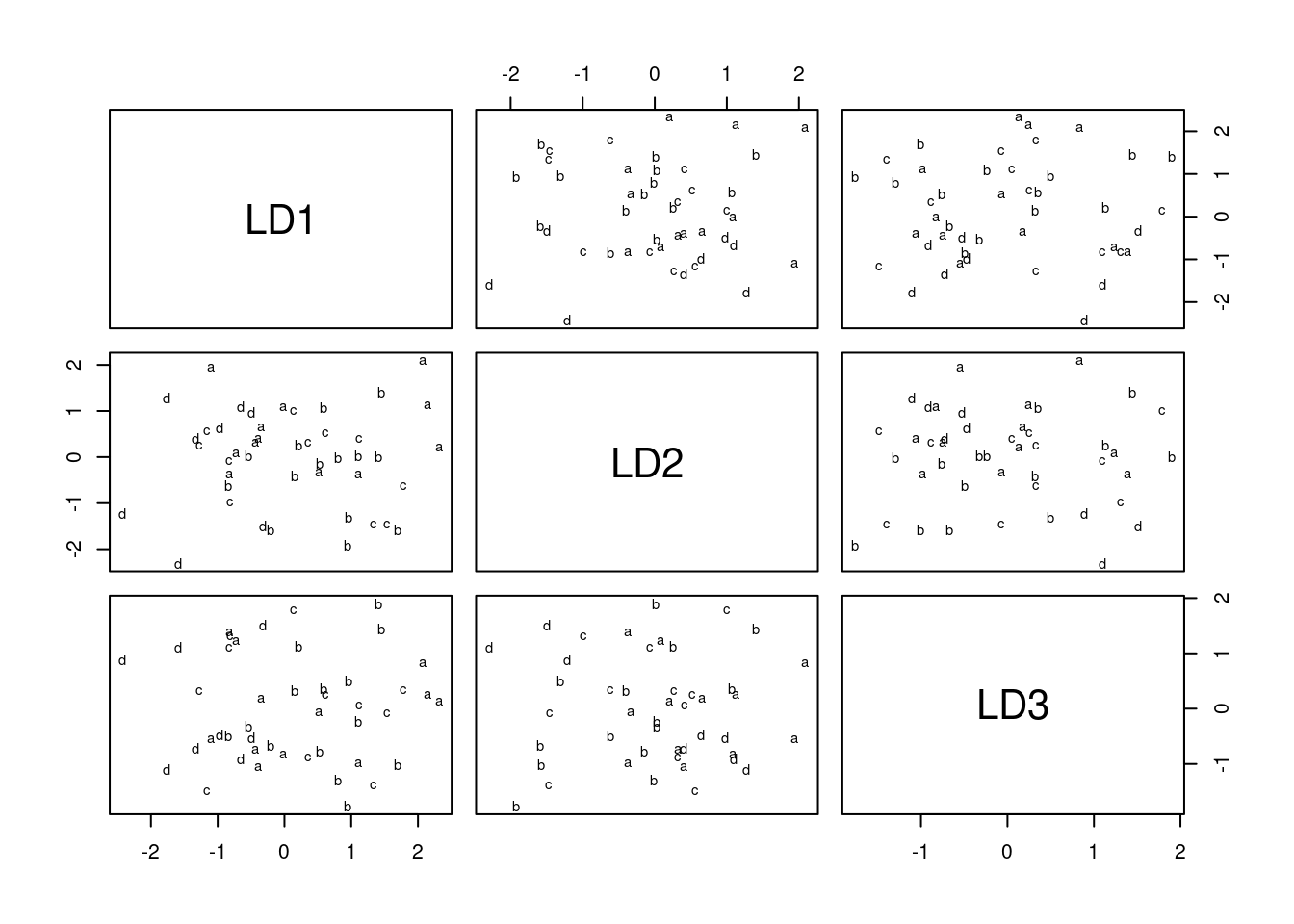

Extra: the way we used to do this was with “base graphics”, which involved plotting the lda output itself:

plot(urine.1)

which is a plot of each discriminant score against each other one. You can plot just the first two, like this:

plot(urine.1, dimen = 2)

This is easier than using ggplot, but (i) less flexible and

(ii) you have to figure out how it works rather than doing things the

standard ggplot way. So I went with constructing a data frame

from the predictions, and then

ggplotting that. It’s a matter of taste which way is better.

\(\blacksquare\)

- * Obtain a table showing observed and predicted obesity groups. Comment on the accuracy of the predictions.

Solution

Make a table, one way or another:

tab <- with(d, table(obesity, class))

tab## class

## obesity a b c d

## a 7 3 2 0

## b 2 9 2 1

## c 3 4 1 3

## d 2 0 1 5class is always the predicted group in these. You can

also name things in table.

Or, if you prefer (equally good), the tidyverse way of

counting all the combinations of true obesity and predicted

class, which can be done all in one go, or in

two steps by saving the data frame first. I’m saving my results for

later:

d %>% count(obesity, class) -> tab

tab## obesity class n

## 1 a a 7

## 2 a b 3

## 3 a c 2

## 4 b a 2

## 5 b b 9

## 6 b c 2

## 7 b d 1

## 8 c a 3

## 9 c b 4

## 10 c c 1

## 11 c d 3

## 12 d a 2

## 13 d c 1

## 14 d d 5or if you prefer to make it look more like a table of frequencies:

tab %>% pivot_wider(names_from=class, values_from=n, values_fill = list(n=0))## # A tibble: 4 × 5

## obesity a b c d

## <chr> <int> <int> <int> <int>

## 1 a 7 3 2 0

## 2 b 2 9 2 1

## 3 c 3 4 1 3

## 4 d 2 0 1 5The thing on the end fills in zero frequencies as such (they would

otherwise be NA, which they are not: we know they are zero).

My immediate reaction to this is “it’s terrible”! But at least some

of the men have their obesity group correctly predicted: 7 of the

\(7+3+2+0=12\)

men that are actually in group a are predicted to be in

a; 9 of the 14 actual b’s are predicted to be

b’s; 5 of the 8 actual d’s are predicted to be

d’s. These are not so awful. But only 1 of the 11

c’s is correctly predicted to be a c!

As for what I want to see: I am looking for some kind of statement about how good you think the predictions are (the word “terrible” is fine for this) with some kind of support for your statement. For example, “the predictions are not that good, but at least group B is predicted with some accuracy (9 out of 14).”

I think looking at how well the individual groups were predicted is

the most incisive way of getting at this, because the c men

are the hardest to get right and the others are easier, but you could

also think about an overall misclassification rate. This comes most

easily from the “tidy” table:

tab %>% count(correct = (obesity == class), wt = n)## correct n

## 1 FALSE 23

## 2 TRUE 22You can count anything, not just columns that already exist. This one

is a kind of combined mutate-and-count to create the (logical) column

called correct.

It’s a shortcut for this:

tab %>%

mutate(is_correct = (obesity == class)) %>%

count(is_correct, wt = n)## is_correct n

## 1 FALSE 23

## 2 TRUE 22If I don’t put the wt, count counts the number of

rows for which the true and predicted obesity group is the

same. But that’s not what I want here: I want the number of

observations totalled up, which is what the wt=

does. It says “use the things in the given column as weights”, which

means to total them up rather than count up the number of rows.

This says that 22 men were classified correctly and 23 were gotten wrong. We can find the proportions correct and wrong:

tab %>%

count(correct = (obesity == class), wt = n) %>%

mutate(proportion = n / sum(n))## correct n proportion

## 1 FALSE 23 0.5111111

## 2 TRUE 22 0.4888889and we see that 51% of men had their obesity group predicted wrongly. This is the overall misclassification rate, which is a simple summary of how good a job the discriminant analysis did.

There is a subtlety here. n has changed its meaning in the

middle of this calculation! In tab, n is counting

the number of obesity observed and predicted combinations, but now it

is counting the number of men classified correctly and

incorrectly. The wt=n uses the first n, but the

mutate line uses the new n, the result of the

count line here. (I think count used to use

nn for the result of the second count, so that you

could tell them apart, but it no longer seems to do so.)

I said above that the obesity groups were not equally easy to

predict. A small modification of the above will get the

misclassification rates by (true) obesity group. This is done by

putting an appropriate group_by in at the front, before we

do any summarizing:

tab %>%

group_by(obesity) %>%

count(correct = (obesity == class), wt = n) %>%

mutate(proportion = n / sum(n))## # A tibble: 8 × 4

## # Groups: obesity [4]

## obesity correct n proportion

## <chr> <lgl> <int> <dbl>

## 1 a FALSE 5 0.417

## 2 a TRUE 7 0.583

## 3 b FALSE 5 0.357

## 4 b TRUE 9 0.643

## 5 c FALSE 10 0.909

## 6 c TRUE 1 0.0909

## 7 d FALSE 3 0.375

## 8 d TRUE 5 0.625This gives the proportion wrong and correct for each (true) obesity group. I’m going to do the one more cosmetic thing to make it easier to read, a kind of “untidying”:

tab %>%

group_by(obesity) %>%

count(correct = (obesity == class), wt = n) %>%

mutate(proportion = n / sum(n)) %>%

select(-n) %>%

pivot_wider(names_from=correct, values_from=proportion)## # A tibble: 4 × 3

## # Groups: obesity [4]

## obesity `FALSE` `TRUE`

## <chr> <dbl> <dbl>

## 1 a 0.417 0.583

## 2 b 0.357 0.643

## 3 c 0.909 0.0909

## 4 d 0.375 0.625Looking down the TRUE column, groups A, B and D were gotten

about 60% correct (and 40% wrong), but group C is much worse. The

overall misclassification rate is made bigger by the fact that C is so

hard to predict.

Find out for yourself what happens if I fail to remove the n

column before doing the pivot_wider.

A slightly more elegant look is obtained this way, by making nicer values than TRUE and FALSE:

tab %>%

group_by(obesity) %>%

mutate(prediction_stat = ifelse(obesity == class, "correct", "wrong")) %>%

count(prediction_stat, wt = n) %>%

mutate(proportion = n / sum(n)) %>%

select(-n) %>%

pivot_wider(names_from=prediction_stat, values_from=proportion)## # A tibble: 4 × 3

## # Groups: obesity [4]

## obesity correct wrong

## <chr> <dbl> <dbl>

## 1 a 0.583 0.417

## 2 b 0.643 0.357

## 3 c 0.0909 0.909

## 4 d 0.625 0.375\(\blacksquare\)

Solution

On the plot of (here), we said that there was a

lot of scatter, but that groups a, b and

d tended to be found at the top, right and left

respectively of the plot. That suggests that these three

groups should be somewhat predictable. The c’s, on

the other hand, were all over the place on the plot, and

were mostly predicted wrong.

The idea is that the stories you pull from the plot and the predictions should be more or less consistent. There are several ways you might say that: another approach is to say that the observations are all over the place on the plot, and the predictions are all bad. This is not as insightful as my comments above, but if that’s what the plot told you, that’s what the predictions would seem to be saying as well. (Or even, the predictions are not so bad compared to the apparently random pattern on the plot, if that’s what you saw. There are different ways to say something more or less sensible.)

\(\blacksquare\)

34.10 Understanding a MANOVA

One use of discriminant analysis is to

understand the results of a MANOVA. This question is a followup to a

previous MANOVA that we did, the one with two variables y1

and y2 and three groups a through c. The

data were in link.

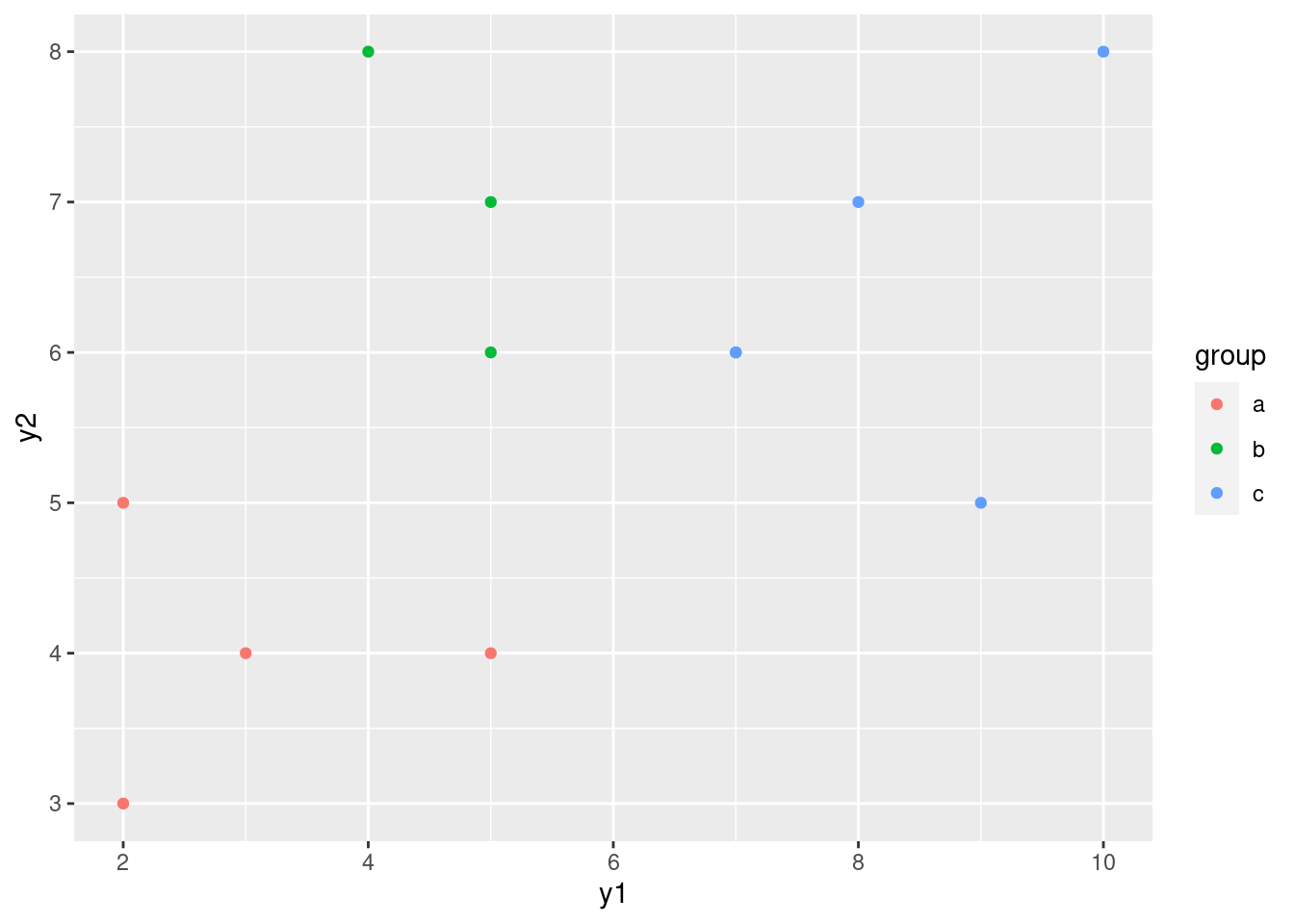

- Read the data in again and run the MANOVA that you did before.

Solution

This is an exact repeat of what you did before:

my_url <- "https://raw.githubusercontent.com/nxskok/datafiles/master/simple-manova.txt"

simple <- read_delim(my_url, " ")## Rows: 12 Columns: 3

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: " "

## chr (1): group

## dbl (2): y1, y2

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.simple## # A tibble: 12 × 3

## group y1 y2

## <chr> <dbl> <dbl>

## 1 a 2 3

## 2 a 3 4

## 3 a 5 4

## 4 a 2 5

## 5 b 4 8

## 6 b 5 6

## 7 b 5 7

## 8 c 7 6

## 9 c 8 7

## 10 c 10 8

## 11 c 9 5

## 12 c 7 6response <- with(simple, cbind(y1, y2))

simple.3 <- manova(response ~ group, data = simple)

summary(simple.3)## Df Pillai approx F num Df den Df Pr(>F)

## group 2 1.3534 9.4196 4 18 0.0002735 ***

## Residuals 9

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This P-value is small, so there is some way in which some of the groups differ on some of the variables.39

We should check that we believe this, using Box’s M test:40

summary(BoxM(response, simple$group))## Box's M Test

##

## Chi-Squared Value = 3.517357 , df = 6 and p-value: 0.742There is no problem here: no evidence that any of the response variables differ in spread across the groups.

\(\blacksquare\)

- Run a discriminant analysis “predicting” group from the two response variables. Display the output.

Solution

This:

simple.4 <- lda(group ~ y1 + y2, data = simple)

simple.4## Call:

## lda(group ~ y1 + y2, data = simple)

##

## Prior probabilities of groups:

## a b c

## 0.3333333 0.2500000 0.4166667

##

## Group means:

## y1 y2

## a 3.000000 4.0

## b 4.666667 7.0

## c 8.200000 6.4

##

## Coefficients of linear discriminants:

## LD1 LD2

## y1 0.7193766 0.4060972

## y2 0.3611104 -0.9319337

##

## Proportion of trace:

## LD1 LD2

## 0.8331 0.1669Note that this is the other way around from MANOVA: here, we are “predicting the group” from the response variables, in the same manner as one of the flavours of logistic regression: “what makes the groups different, in terms of those response variables?”.

\(\blacksquare\)

- * In the output from the discriminant analysis,

why are there exactly two linear discriminants

LD1andLD2?

Solution

There are two linear discriminants because there are 3 groups and two variables, so there are the smaller of \(3-1\) and 2 discriminants.

\(\blacksquare\)

- * From the output, how would you say that the

first linear discriminant

LD1compares in importance to the second oneLD2: much more important, more important, equally important, less important, much less important? Explain briefly.

Solution

Look at the Proportion of trace at the bottom of the output.

The first number is much bigger than the second, so the first linear

discriminant is much more important than the second. (I care about

your reason; you can say it’s “more important” rather than

“much more important” and I’m good with that.)

\(\blacksquare\)

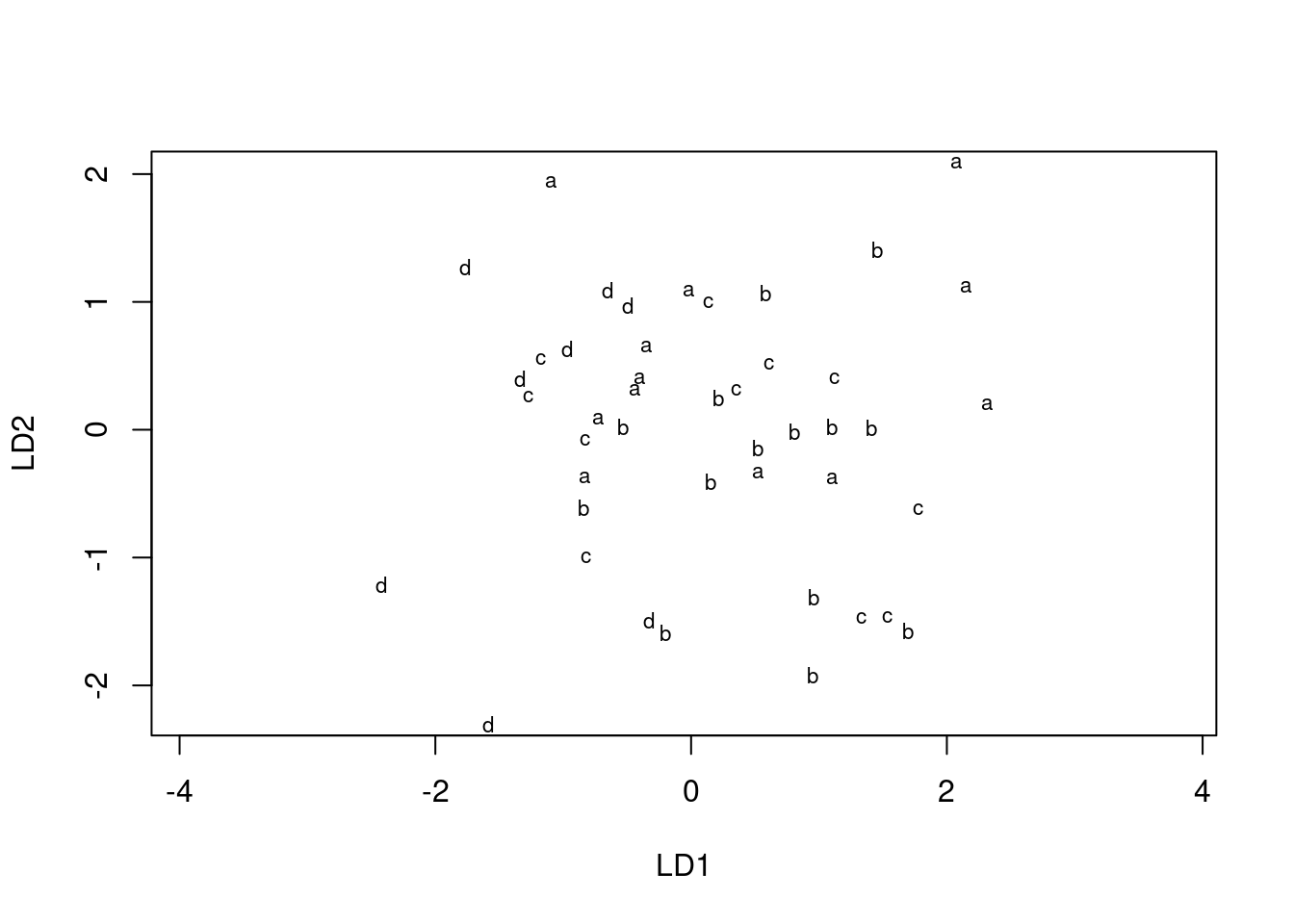

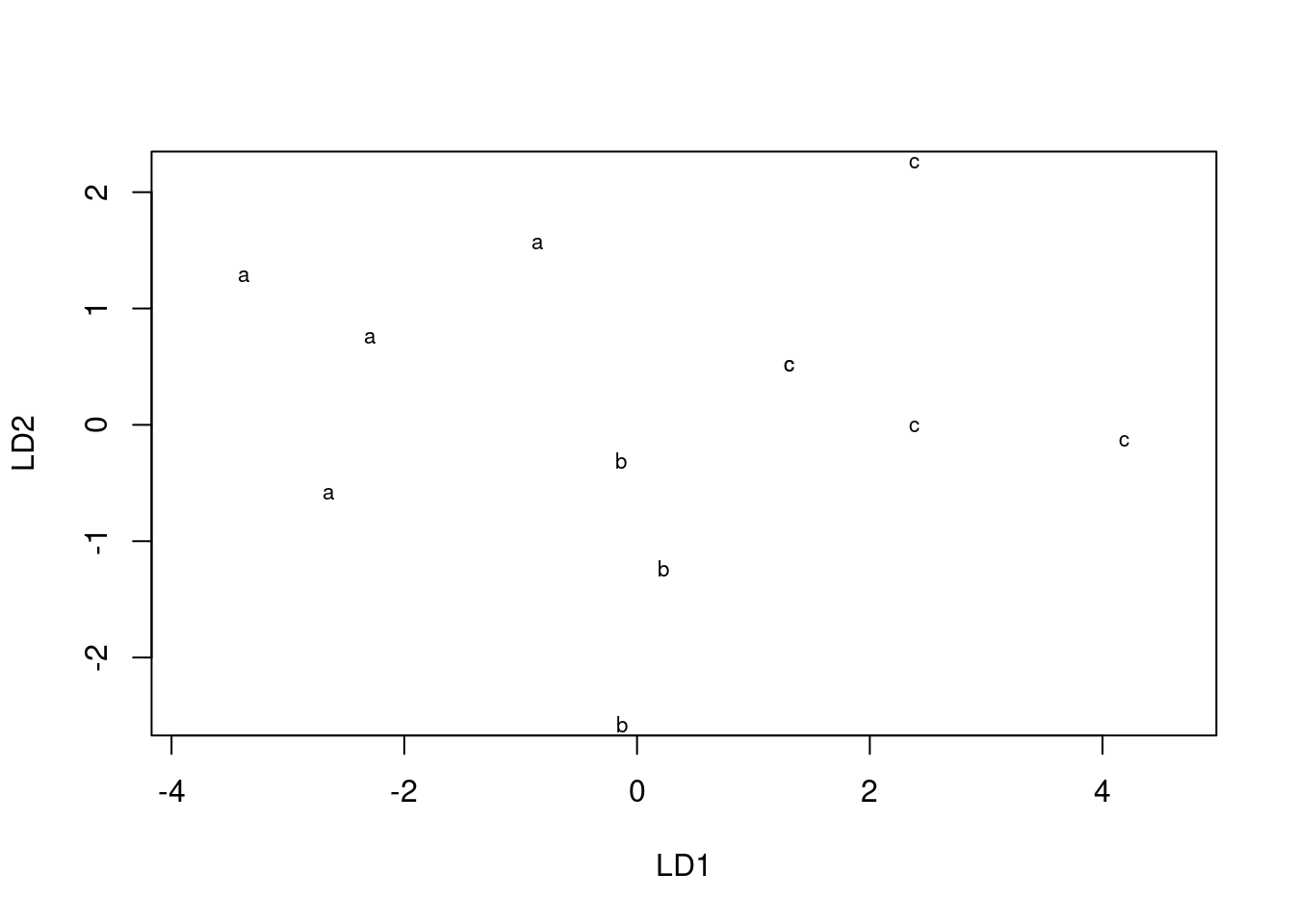

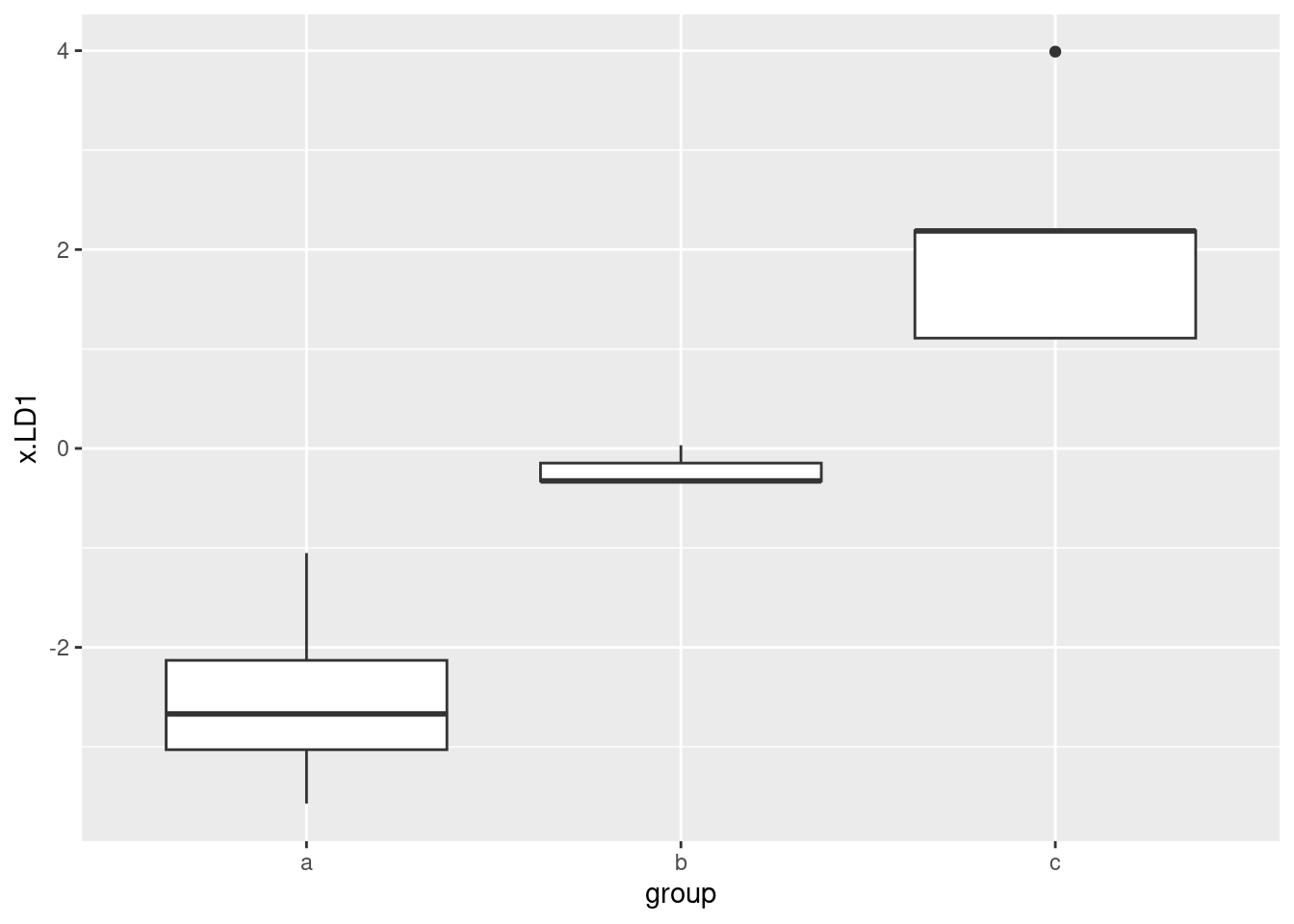

- Obtain a plot of the discriminant scores.

Solution

This was the old-fashioned way:

plot(simple.4)

It needs cajoling to produce colours, but we can do better. The first thing is to obtain the predictions:

simple.pred <- predict(simple.4)Then we make a data frame out of the discriminant scores and the true

groups, using cbind:

d <- cbind(simple, simple.pred)

head(d)## group y1 y2 class posterior.a posterior.b posterior.c x.LD1 x.LD2

## 1 a 2 3 a 0.999836110 0.0001636933 1.964310e-07 -3.5708196 1.1076359

## 2 a 3 4 a 0.994129686 0.0058400248 3.028912e-05 -2.4903326 0.5817994

## 3 a 5 4 a 0.953416498 0.0267238544 1.985965e-02 -1.0515795 1.3939939

## 4 a 2 5 a 0.957685668 0.0423077129 6.618865e-06 -2.8485988 -0.7562315

## 5 b 4 8 b 0.001068057 0.9978789644 1.052978e-03 -0.3265145 -2.7398380

## 6 b 5 6 b 0.107572389 0.8136017106 7.882590e-02 -0.3293587 -0.4698735or like this, for fun:41

ld <- as_tibble(simple.pred$x)

post <- as_tibble(simple.pred$posterior)

dd <- bind_cols(simple, class = simple.pred$class, ld, post)

dd## # A tibble: 12 × 9

## group y1 y2 class LD1 LD2 a b c

## <chr> <dbl> <dbl> <fct> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 a 2 3 a -3.57 1.11 1.00 0.000164 0.000000196

## 2 a 3 4 a -2.49 0.582 0.994 0.00584 0.0000303

## 3 a 5 4 a -1.05 1.39 0.953 0.0267 0.0199

## 4 a 2 5 a -2.85 -0.756 0.958 0.0423 0.00000662

## 5 b 4 8 b -0.327 -2.74 0.00107 0.998 0.00105

## 6 b 5 6 b -0.329 -0.470 0.108 0.814 0.0788

## 7 b 5 7 b 0.0318 -1.40 0.00772 0.959 0.0335

## 8 c 7 6 c 1.11 0.342 0.00186 0.0671 0.931

## 9 c 8 7 c 2.19 -0.184 0.0000127 0.0164 0.984

## 10 c 10 8 c 3.99 -0.303 0.00000000317 0.000322 1.00

## 11 c 9 5 c 2.19 2.09 0.0000173 0.000181 1.00

## 12 c 7 6 c 1.11 0.342 0.00186 0.0671 0.931After that, we plot the first one against the second one, colouring by true groups:

ggplot(d, aes(x = x.LD1, y = x.LD2, colour = group)) + geom_point()

I wanted to compare this plot with the original plot of y1

vs. y2, coloured by groups:

ggplot(simple, aes(x = y1, y = y2, colour = group)) + geom_point()

The difference between this plot and the one of LD1 vs.

LD2 is that things have been rotated a bit so that most of

the separation of groups is done by LD1. This is reflected in

the fact that LD1 is quite a bit more important than

LD2: the latter doesn’t help much in separating the groups.

With that in mind, we could also plot just LD1, presumably

against groups via boxplot:

ggplot(d, aes(x = group, y = x.LD1)) + geom_boxplot()

This shows that LD1 does a pretty fine job of separating the groups,

and LD2 doesn’t really have much to add to the picture.

\(\blacksquare\)

- Describe briefly how

LD1and/orLD2separate the groups. Does your picture confirm the relative importance ofLD1andLD2that you found back in part (here)? Explain briefly.

Solution

LD1 separates the groups left to right: group a is

low on LD1, b is in the middle and c is

high on LD1. (There is no intermingling of the groups on

LD1, so it separates the groups perfectly.)

As for LD2, all it does (possibly) is to distinguish

b (low) from a and c (high). Or you can,

just as reasonably, take the view that it doesn’t really separate

any of the groups.

Back in part (here), you said (I hope) that LD1

was (very) important compared to LD2. This shows up here in

that LD1 does a very good job of distinguishing the groups,

while LD2 does a poor to non-existent job of separating any

groups. (If you didn’t

say that before, here is an invitation to reconsider what you

did say there.)

\(\blacksquare\)

- What makes group

ahave a low score onLD1? There are two steps that you need to make: consider the means of groupaon variablesy1andy2and how they compare to the other groups, and consider howy1andy2play into the score onLD1.

Solution

The information you need is in the big output.

The means of y1 and y2 for group a are 3

and 4 respectively, which are the lowest of all the groups. That’s

the first thing.

The second thing is the coefficients of

LD1 in terms of y1 and y2, which are both

positive. That means, for any observation, if its y1

and y2 values are large, that observation’s score on

LD1 will be large as well. Conversely, if its values are

small, as the ones in group a are, its score on

LD1 will be small.

You need these two things.

This explains why the group a observations are on the left

of the plot. It also explains why the group c observations

are on the right: they are large on both y1 and

y2, and so large on LD1.

What about LD2? This is a little more confusing (and thus I

didn’t ask you about that). Its “coefficients of linear discriminant”

are positive on y1 and negative on

y2, with the latter being bigger in size. Group b

is about average on y1 and distinctly high on

y2; the second of these coupled with the negative

coefficient on y2 means that the LD2 score for

observations in group b will be negative.

For LD2, group a has a low mean on both variables

and group c has a high mean, so for both groups there is a

kind of cancelling-out happening, and neither group a nor

group c will be especially remarkable on LD2.

\(\blacksquare\)

- Obtain predictions for the group memberships of each observation, and make a table of the actual group memberships against the predicted ones. How many of the observations were wrongly classified?

Solution

Use the

simple.pred that you got earlier. This is the

table way:

with(d, table(obs = group, pred = class))## pred

## obs a b c

## a 4 0 0

## b 0 3 0

## c 0 0 5Every single one of the 12 observations has been classified into its

correct group. (There is nothing off the diagonal of this table.)

The alternative to table is the tidyverse way:

d %>% count(group, class)## group class n

## 1 a a 4

## 2 b b 3

## 3 c c 5or

d %>%

count(group, class) %>%

pivot_wider(names_from=class, values_from=n, values_fill = list(n=0))## # A tibble: 3 × 4

## group a b c

## <chr> <int> <int> <int>

## 1 a 4 0 0

## 2 b 0 3 0

## 3 c 0 0 5if you want something that looks like a frequency table.

All the as got classified as a, and so on.

That’s the end of what I asked you to do, but as ever I wanted to

press on. The next question to ask after getting the predicted groups

is “what are the posterior probabilities of being in each group for each observation”:

that is, not just which group do I think it

belongs in, but how sure am I about that call? The posterior

probabilities in my d start with posterior. These

have a ton of decimal places which I like to round off first before I

display them, eg. to 3 decimals here:

d %>%

select(y1, y2, group, class, starts_with("posterior")) %>%

mutate(across(starts_with("posterior"), \(post) round(post, 3)))## y1 y2 group class posterior.a posterior.b posterior.c

## 1 2 3 a a 1.000 0.000 0.000

## 2 3 4 a a 0.994 0.006 0.000

## 3 5 4 a a 0.953 0.027 0.020

## 4 2 5 a a 0.958 0.042 0.000

## 5 4 8 b b 0.001 0.998 0.001

## 6 5 6 b b 0.108 0.814 0.079

## 7 5 7 b b 0.008 0.959 0.034

## 8 7 6 c c 0.002 0.067 0.931

## 9 8 7 c c 0.000 0.016 0.984

## 10 10 8 c c 0.000 0.000 1.000

## 11 9 5 c c 0.000 0.000 1.000

## 12 7 6 c c 0.002 0.067 0.931You see that the posterior probability of an observation being in the

group it actually was in is close to 1 all the way down. The

only one with any doubt at all is observation #6, which is actually

in group b, but has “only” probability 0.814 of being a

b based on its y1 and y2 values. What else

could it be? Well, it’s about equally split between being a

and c. Let me see if I can display this observation on the

plot in a different way. First I need to make a new column picking out

observation 6, and then I use this new variable as the size

of the point I plot:

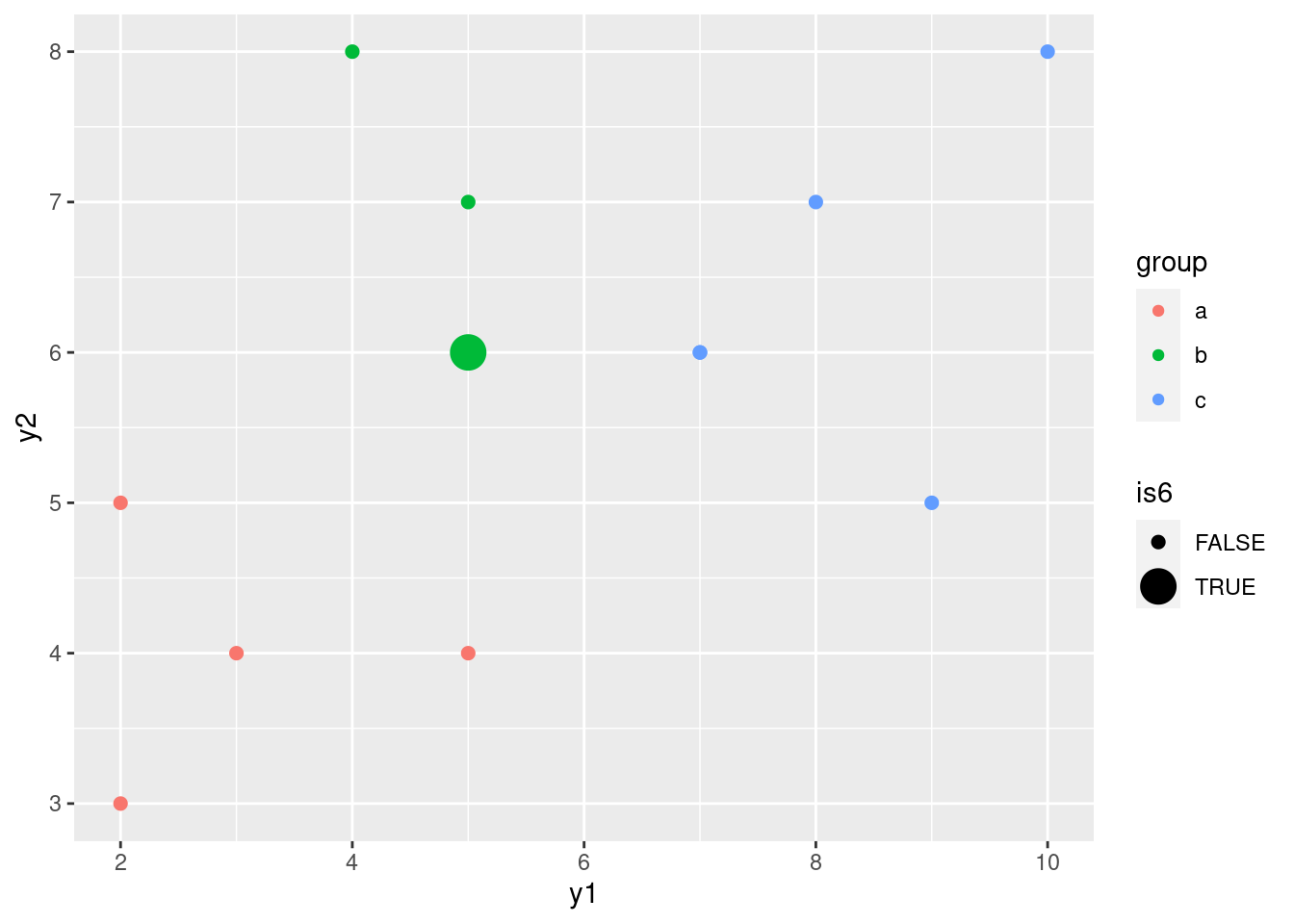

simple %>%

mutate(is6 = (row_number() == 6)) %>%

ggplot(aes(x = y1, y = y2, colour = group, size = is6)) +

geom_point()## Warning: Using size for a discrete variable is not advised.

That makes it stand out.

As the legend indicates, observation #6 is plotted as a big circle,

with the rest being plotted as small circles as usual. Since observation #6

is in group b, it appears as a big green circle. What makes it

least like a b? Well, it has the smallest y2 value

of any of the b’s (which makes it most like an a of

any of the b’s), and it has the largest y1 value (which makes it

most like a c of any of the b’s). But still, it’s nearer the

greens than anything else, so it’s still more like a b than

it is like any of the other groups.

\(\blacksquare\)

34.11 What distinguishes people who do different jobs?

24442 people work at a

certain company.

They each have one of three jobs: customer service, mechanic,

dispatcher. In the data set, these are labelled 1, 2 and 3

respectively. In addition, they each are rated on scales called

outdoor, social and conservative. Do people

with different jobs tend to have different scores on these scales, or,

to put it another way, if you knew a person’s scores on

outdoor, social and conservative, could you

say something about what kind of job they were likely to hold? The

data are in link.

- Read in the data and display some of it.

Solution

The usual. This one is aligned columns. I’m using a “temporary” name for my read-in data frame, since I’m going to create the proper one in a moment.

my_url <- "https://raw.githubusercontent.com/nxskok/datafiles/master/jobs.txt"

jobs0 <- read_table(my_url)## Warning: Missing column names filled in: 'X6' [6]##

## ── Column specification ────────────────────────────────────────────────────────

## cols(

## outdoor = col_double(),

## social = col_double(),

## conservative = col_double(),

## job = col_double(),

## id = col_double(),

## X6 = col_character()

## )## Warning: 244 parsing failures.

## row col expected actual file

## 1 -- 6 columns 5 columns 'https://raw.githubusercontent.com/nxskok/datafiles/master/jobs.txt'

## 2 -- 6 columns 5 columns 'https://raw.githubusercontent.com/nxskok/datafiles/master/jobs.txt'

## 3 -- 6 columns 5 columns 'https://raw.githubusercontent.com/nxskok/datafiles/master/jobs.txt'

## 4 -- 6 columns 5 columns 'https://raw.githubusercontent.com/nxskok/datafiles/master/jobs.txt'

## 5 -- 6 columns 5 columns 'https://raw.githubusercontent.com/nxskok/datafiles/master/jobs.txt'

## ... ... ......... ......... ....................................................................

## See problems(...) for more details.jobs0## # A tibble: 244 × 6

## outdoor social conservative job id X6

## <dbl> <dbl> <dbl> <dbl> <dbl> <chr>

## 1 10 22 5 1 1 <NA>

## 2 14 17 6 1 2 <NA>

## 3 19 33 7 1 3 <NA>

## 4 14 29 12 1 4 <NA>

## 5 14 25 7 1 5 <NA>

## 6 20 25 12 1 6 <NA>

## 7 6 18 4 1 7 <NA>

## 8 13 27 7 1 8 <NA>

## 9 18 31 9 1 9 <NA>

## 10 16 35 13 1 10 <NA>

## # … with 234 more rowsWe got all that was promised, plus a label id for each

employee, which we will from here on ignore.43

\(\blacksquare\)

- Note the types of each of the variables, and create any new variables that you need to.

Solution

These are all int or whole numbers. But, the job ought

to be a factor: the labels 1, 2 and 3 have no meaning

as such, they just label the three different jobs. (I gave you a

hint of this above.) So we need to turn job into a

factor.

I think the best way to do that is via mutate, and then

we save the new data frame into one called jobs that we

actually use for the analysis below:

job_labels <- c("custserv", "mechanic", "dispatcher")

jobs <- jobs0 %>%

mutate(job = factor(job, labels = job_labels))I lived on the edge and saved my factor job into a variable

with the same name as the numeric one. I should check that I now have

the right thing:

jobs## # A tibble: 244 × 6

## outdoor social conservative job id X6

## <dbl> <dbl> <dbl> <fct> <dbl> <chr>

## 1 10 22 5 custserv 1 <NA>

## 2 14 17 6 custserv 2 <NA>

## 3 19 33 7 custserv 3 <NA>

## 4 14 29 12 custserv 4 <NA>

## 5 14 25 7 custserv 5 <NA>

## 6 20 25 12 custserv 6 <NA>

## 7 6 18 4 custserv 7 <NA>

## 8 13 27 7 custserv 8 <NA>

## 9 18 31 9 custserv 9 <NA>

## 10 16 35 13 custserv 10 <NA>

## # … with 234 more rowsI like this better because you see the actual factor levels rather than the underlying numeric values by which they are stored.

All is good here. If you forget the labels thing, you’ll get

a factor, but its levels will be 1, 2, and 3, and you will have to

remember which jobs they go with. I’m a fan of giving factors named

levels, so that you can remember what stands for what.44

Extra: another way of doing this is to make a lookup table, that is, a little table that shows which job goes with which number:

lookup_tab <- tribble(

~job, ~jobname,

1, "custserv",

2, "mechanic",

3, "dispatcher"

)

lookup_tab## # A tibble: 3 × 2

## job jobname

## <dbl> <chr>

## 1 1 custserv

## 2 2 mechanic

## 3 3 dispatcherI carefully put the numbers in a column called job because I want to match these with the column called job in jobs0:

jobs0 %>%

left_join(lookup_tab) %>%

sample_n(20)## Joining, by = "job"## # A tibble: 20 × 7

## outdoor social conservative job id X6 jobname

## <dbl> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 11 23 5 1 35 <NA> custserv

## 2 17 25 7 1 13 <NA> custserv

## 3 19 16 6 2 93 <NA> mechanic

## 4 19 23 12 2 55 <NA> mechanic

## 5 14 16 7 3 41 <NA> dispatcher

## 6 15 21 10 1 49 <NA> custserv

## 7 16 22 2 1 59 <NA> custserv

## 8 20 28 8 1 65 <NA> custserv

## 9 20 15 7 3 12 <NA> dispatcher

## 10 23 27 11 2 31 <NA> mechanic

## 11 17 17 8 2 85 <NA> mechanic

## 12 14 17 6 1 2 <NA> custserv

## 13 12 12 6 3 23 <NA> dispatcher

## 14 0 27 11 1 17 <NA> custserv

## 15 18 31 9 1 9 <NA> custserv

## 16 18 16 15 3 10 <NA> dispatcher

## 17 12 16 10 2 18 <NA> mechanic

## 18 13 16 7 3 27 <NA> dispatcher

## 19 13 15 18 3 21 <NA> dispatcher

## 20 13 20 10 2 22 <NA> mechanicYou see that each row has the name of the job that employee has, in the column jobname, because the job id was looked up in our lookup table. (I displayed some random rows so you could see that it worked.)

\(\blacksquare\)

- Run a multivariate analysis of variance to convince yourself that there are some differences in scale scores among the jobs.

Solution

You know how to do this, right? This one is the easy way:

response <- with(jobs, cbind(social, outdoor, conservative))

response.1 <- manova(response ~ job, data = jobs)

summary(response.1)## Df Pillai approx F num Df den Df Pr(>F)

## job 2 0.76207 49.248 6 480 < 2.2e-16 ***

## Residuals 241

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Or you can use Manova. That is mostly for practice here,

since there is no reason to make things difficult for yourself:

library(car)

response.2 <- lm(response ~ job, data = jobs)

summary(Manova(response.2))##

## Type II MANOVA Tests:

##

## Sum of squares and products for error:

## social outdoor conservative

## social 4406.2994 334.90273 235.46301

## outdoor 334.9027 4082.46302 64.76334

## conservative 235.4630 64.76334 2683.25695

##

## ------------------------------------------

##

## Term: job

##

## Sum of squares and products for the hypothesis:

## social outdoor conservative

## social 2889.1228 -794.3945 -1405.8401

## outdoor -794.3945 1609.7993 283.1711

## conservative -1405.8401 283.1711 691.7594

##

## Multivariate Tests: job

## Df test stat approx F num Df den Df Pr(>F)

## Pillai 2 0.7620657 49.24757 6 480 < 2.22e-16 ***

## Wilks 2 0.3639880 52.38173 6 478 < 2.22e-16 ***

## Hotelling-Lawley 2 1.4010307 55.57422 6 476 < 2.22e-16 ***

## Roy 2 1.0805270 86.44216 3 240 < 2.22e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This version gives the four different versions of the test (rather than just the Pillai test that manova gives), but the results are in this case identical for all of them.

So: oh yes, there are differences (on some or all of the variables, for some or all of the groups). So we need something like discriminant analysis to understand the differences.

We really ought to follow this up with Box’s M test, to be sure that the variances and correlations for each variable are equal enough across the groups, but we note off the top that the P-values (all of them) are really small, so there ought not to be much doubt about the conclusion anyway:

summary(BoxM(response, jobs$job))## Box's M Test

##

## Chi-Squared Value = 25.64176 , df = 12 and p-value: 0.0121This is small, but not (for this test) small enough to worry about (it’s not less than 0.001).

This, and the lda below, actually works perfectly well if you use the

original (integer) job, but then you have to remember which job number

is which.

\(\blacksquare\)

- Run a discriminant analysis and display the output.

Solution

Now job

is the “response”:

job.1 <- lda(job ~ social + outdoor + conservative, data = jobs)

job.1## Call:

## lda(job ~ social + outdoor + conservative, data = jobs)

##

## Prior probabilities of groups:

## custserv mechanic dispatcher

## 0.3483607 0.3811475 0.2704918

##

## Group means:

## social outdoor conservative

## custserv 24.22353 12.51765 9.023529

## mechanic 21.13978 18.53763 10.139785

## dispatcher 15.45455 15.57576 13.242424

##

## Coefficients of linear discriminants:

## LD1 LD2

## social -0.19427415 -0.04978105

## outdoor 0.09198065 -0.22501431

## conservative 0.15499199 0.08734288

##

## Proportion of trace:

## LD1 LD2

## 0.7712 0.2288\(\blacksquare\)

- Which is the more important,

LD1orLD2? How much more important? Justify your answer briefly.

Solution

Look at the “proportion of trace” at the bottom. The value for

LD1 is quite a bit higher, so LD1 is quite a

bit more important when it comes to separating the groups.

LD2 is, as I said, less important, but is not

completely worthless, so it will be worth taking a look at it.

\(\blacksquare\)

- Describe what values for an individual on the scales will make

each of

LD1andLD2high.

Solution

This is a two-parter: decide whether each scale makes a

positive, negative or zero contribution to the linear

discriminant (looking at the “coefficients of linear discriminants”),

and then translate that into what would make

each LD high. Let’s start with LD1:

Its coefficients on the three scales are respectively negative

(\(-0.19\)), zero (0.09; my call) and positive (0.15). Where you draw the

line is up to you: if you want to say that outdoor’s

contribution is positive, go ahead. This means that LD1

will be high if social is low and if

conservative is high. (If you thought that

outdoor’s coefficient was positive rather than zero, if

outdoor is high as well.)

Now for LD2: I’m going to call outdoor’s

coefficient of \(-0.22\) negative and the other two zero, so that

LD2 is high if outdoor is low. Again,

if you made a different judgement call, adapt your answer accordingly.

\(\blacksquare\)

- The first group of employees, customer service, have the

highest mean on

socialand the lowest mean on both of the other two scales. Would you expect the customer service employees to score high or low onLD1? What aboutLD2?

Solution

In the light of what we said in the previous part, the customer

service employees, who are high on social and low on

conservative, should be low (negative) on

LD1, since both of these means are pointing that way.

As I called it, the only thing that matters to LD2 is

outdoor, which is low for the customer service

employees, and thus LD2 for them will be high

(negative coefficient).

\(\blacksquare\)

- Plot your discriminant scores (which you will have to obtain

first), and see if you were right about the customer service

employees in terms of

LD1andLD2. The job names are rather long, and there are a lot of individuals, so it is probably best to plot the scores as coloured circles with a legend saying which colour goes with which job (rather than labelling each individual with the job they have).

Solution

Predictions first, then make a data frame combining the predictions with the original data:

p <- predict(job.1)

d <- cbind(jobs, p)

head(d)## outdoor social conservative job id X6 class posterior.custserv

## 1 10 22 5 custserv 1 <NA> custserv 0.9037622

## 2 14 17 6 custserv 2 <NA> mechanic 0.3677743

## 3 19 33 7 custserv 3 <NA> custserv 0.7302117

## 4 14 29 12 custserv 4 <NA> custserv 0.8100756

## 5 14 25 7 custserv 5 <NA> custserv 0.7677607

## 6 20 25 12 custserv 6 <NA> mechanic 0.1682521

## posterior.mechanic posterior.dispatcher x.LD1 x.LD2

## 1 0.08894785 0.0072899882 -1.6423155 0.71477348

## 2 0.48897890 0.1432467601 -0.1480302 0.15096436

## 3 0.26946971 0.0003186265 -2.6415213 -1.68326115

## 4 0.18217319 0.0077512155 -1.5493681 0.07764901

## 5 0.22505382 0.0071854904 -1.5472314 -0.15994117

## 6 0.78482488 0.0469230463 -0.2203876 -1.07331266Following my suggestion, plot these the standard way with

colour distinguishing the jobs:

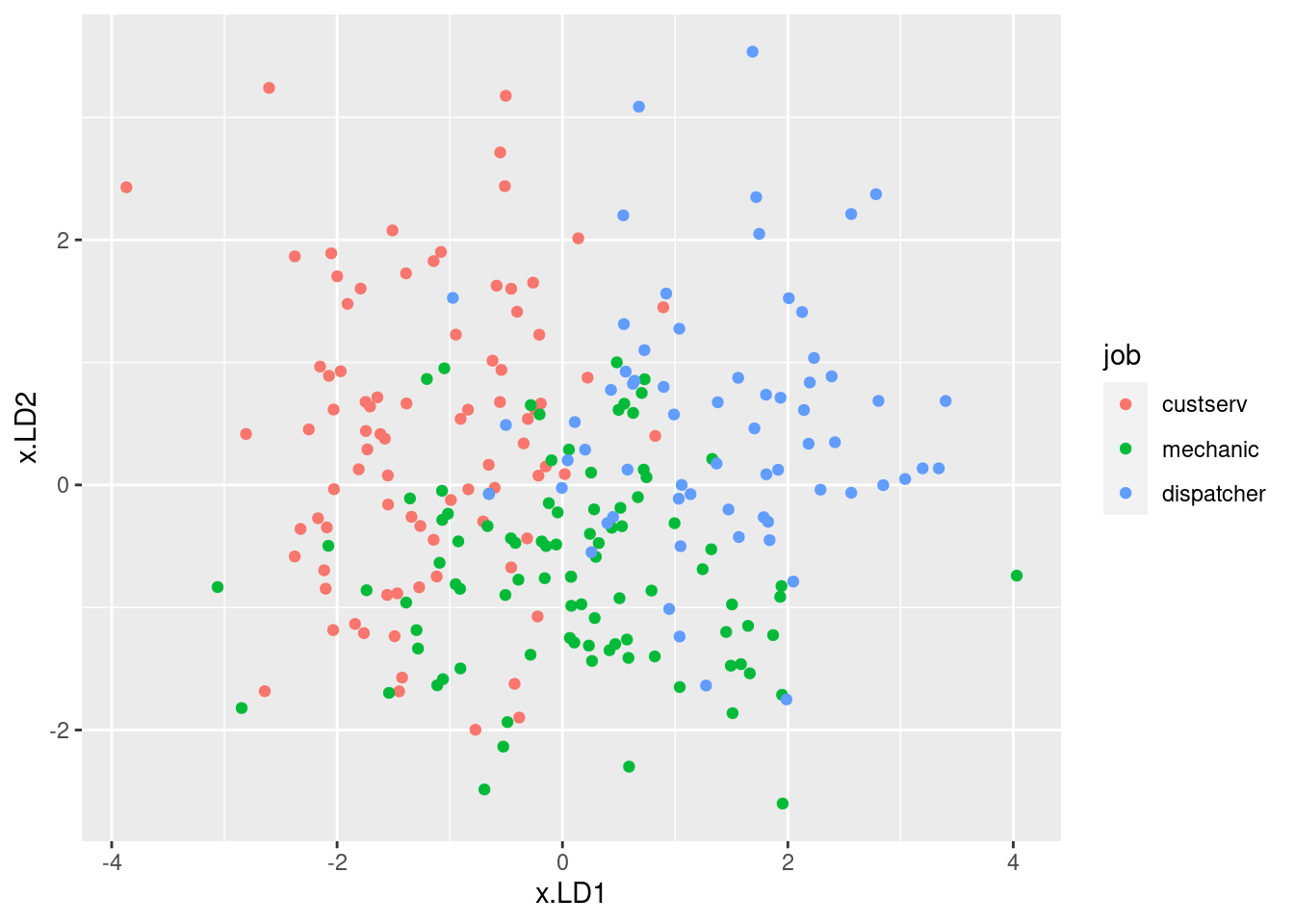

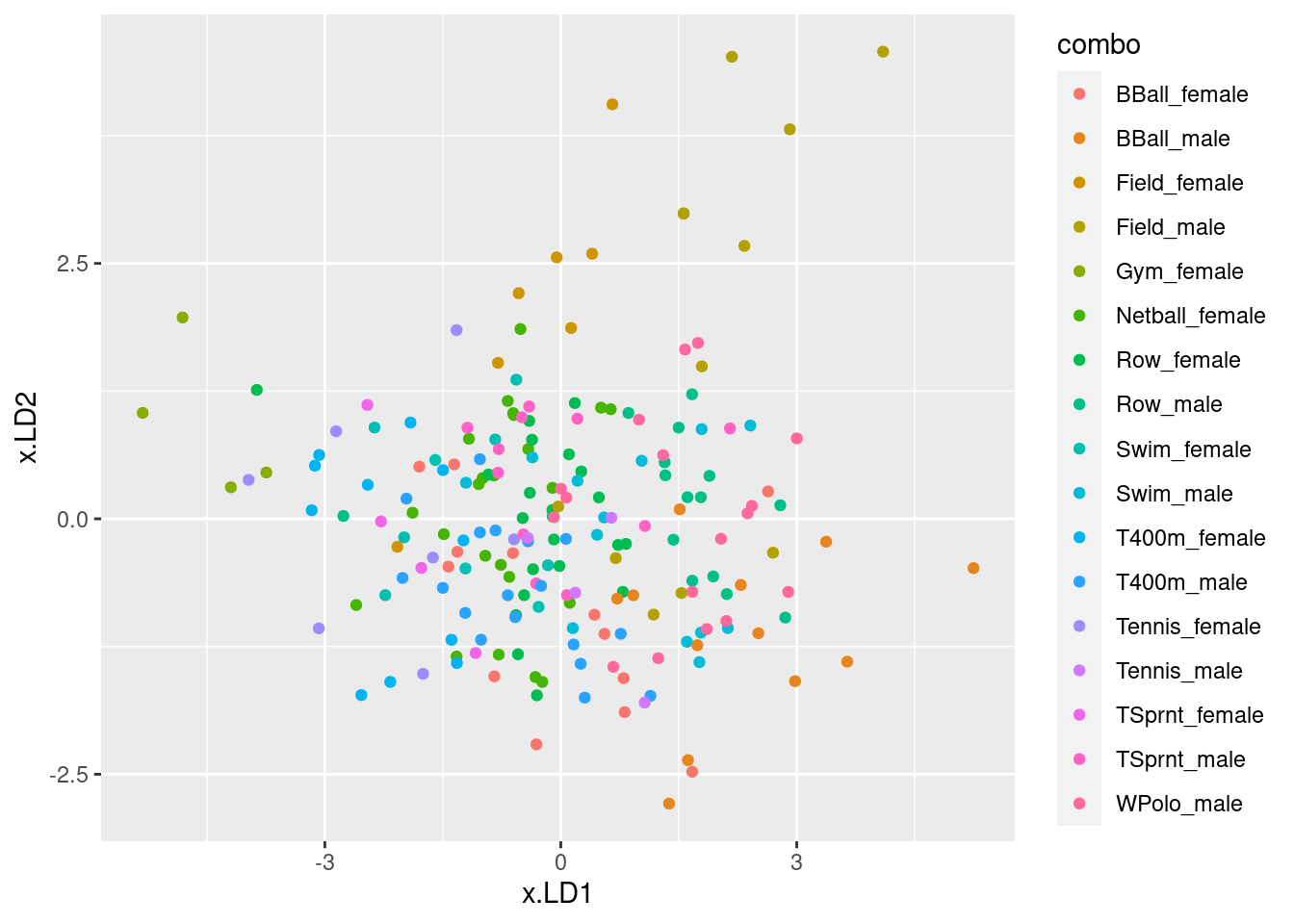

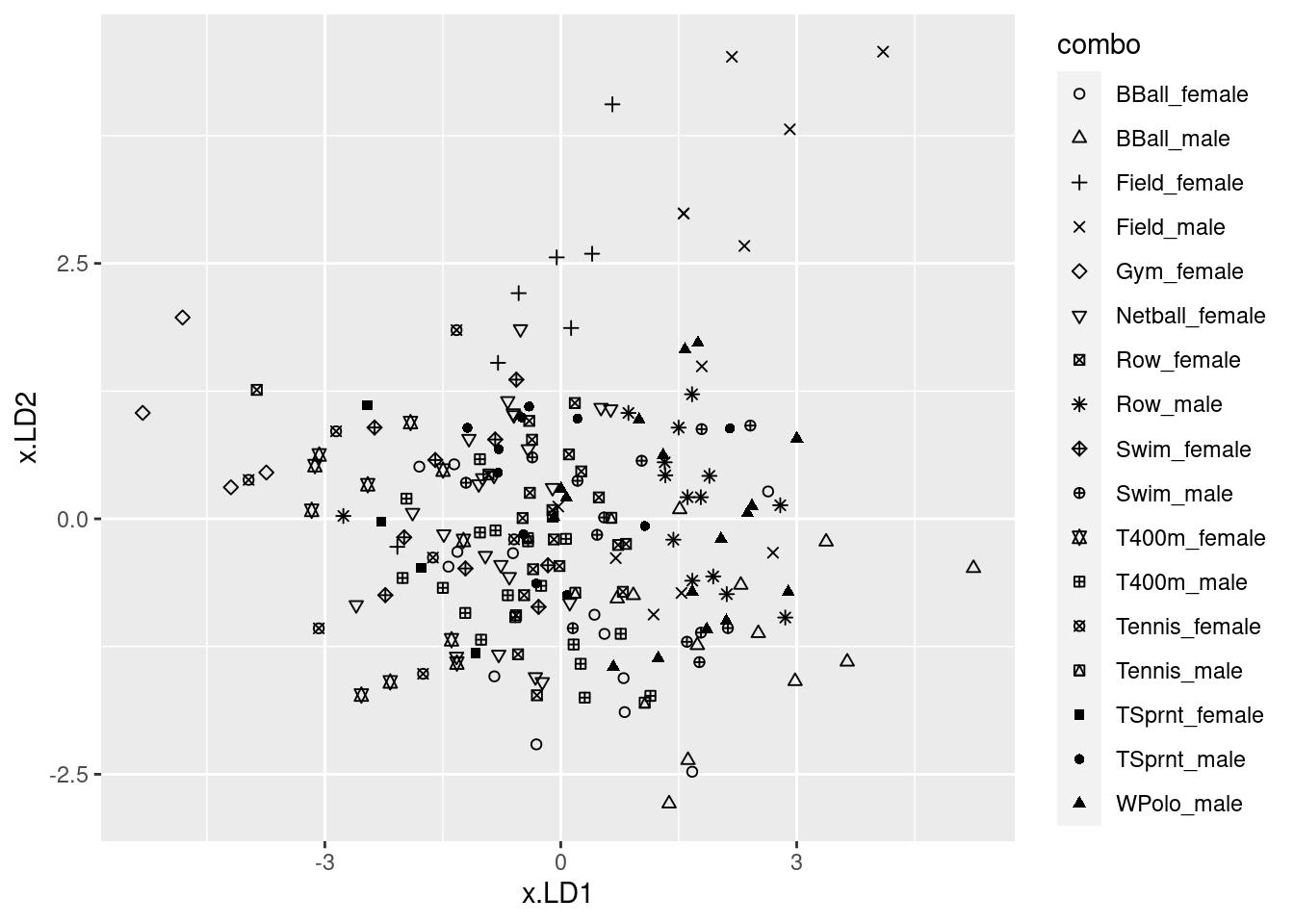

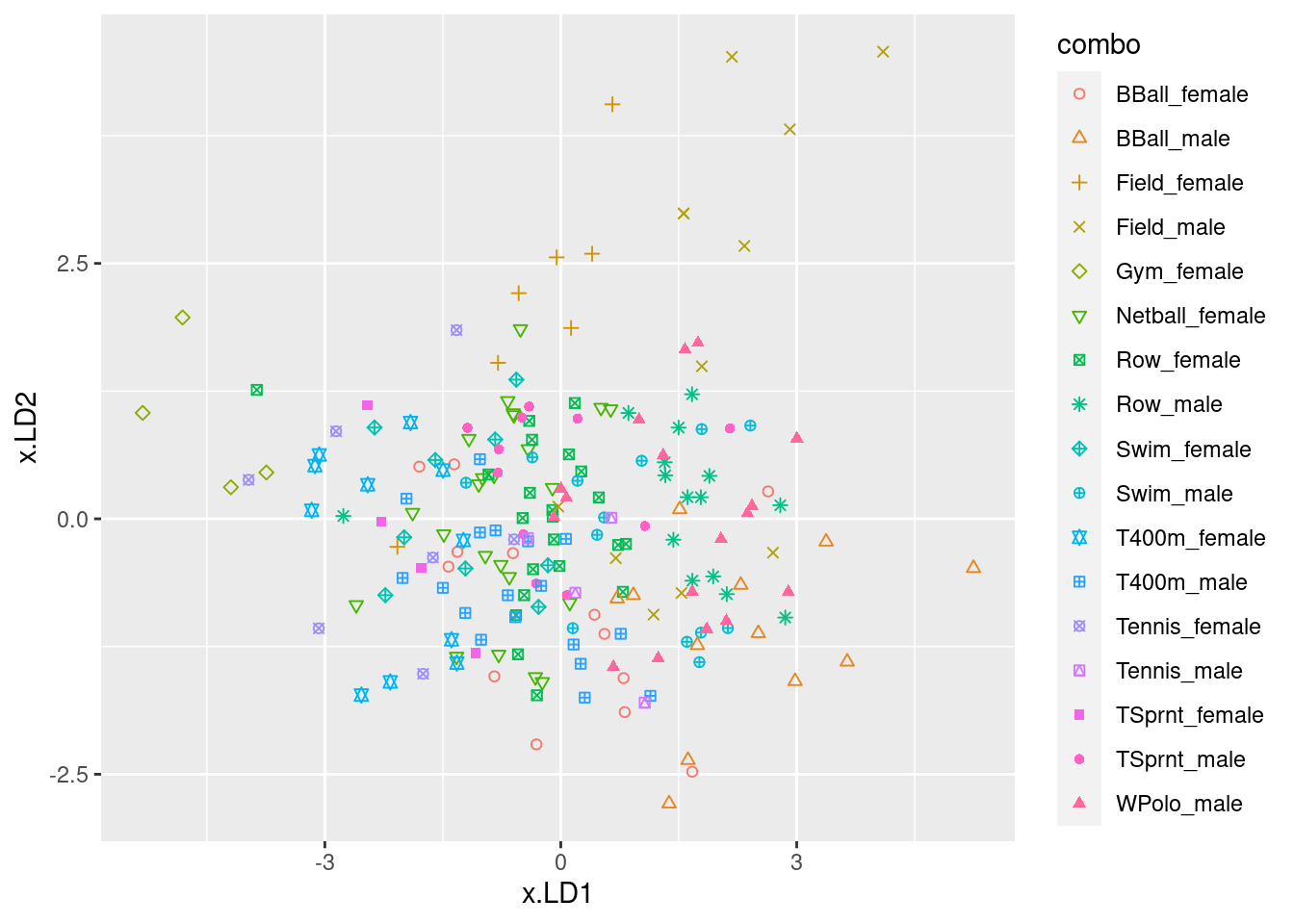

ggplot(d, aes(x = x.LD1, y = x.LD2, colour = job)) + geom_point()

I was mostly right about the customer service people: small

LD1 definitely, large LD2 kinda. I wasn’t more right

because the group means don’t tell the whole story: evidently, the

customer service people vary quite a bit on outdoor, so the

red dots are all over the left side of the plot.

There is quite a bit of intermingling of the three employee groups on the plot, but the point of the MANOVA is that the groups are (way) more separated than you’d expect by chance, that is if the employees were just randomly scattered across the plot.

To think back to that trace thing: here, it seems that

LD1 mainly separates customer service (left) from dispatchers

(right); the mechanics are all over the place on LD1, but

they tend to be low on LD2. So LD2 does have

something to say.

\(\blacksquare\)

- * Obtain predicted job allocations for each individual (based on their scores on the three scales), and tabulate the true jobs against the predicted jobs. How would you describe the quality of the classification? Is that in line with what the plot would suggest?

Solution