Chapter 28 Logistic regression with nominal response

library(nnet)

library(tidyverse)

28.1 Finding non-missing values

* This is to prepare you for something in the next question. It’s meant to be easy.

In R, the code NA stands for “missing value” or “value not known”. In R, NA should not have quotes around it. (It is a special code, not a piece of text.)

Create a vector v that contains some numbers and some missing values, using c(). Put those values into a one-column data frame.

Obtain a new column containing is.na(v). When is this true and when is this false?

The symbol ! means “not” in R (and other programming languages). What does !is.na(v) do? Create a new column containing that.

Use filter to display just the rows of your data frame that have a non-missing value of v.

28.3 Alligator food

What do alligators most like to eat? 219 alligators were captured in four Florida lakes. Each alligator’s stomach contents were observed, and the food that the alligator had eaten was classified into one of five categories: fish, invertebrates (such as snails or insects), reptiles (such as turtles), birds, and “other” (such as amphibians, plants or rocks). The researcher noted for each alligator what that alligator had most of in its stomach, as well as the gender of each alligator and whether it was “large” or “small” (greater or less than 2.3 metres in length). The data can be found in link. The numbers in the data set (apart from the first column) are all frequencies. (You can ignore that first column “profile”.)

Our aim is to predict food type from the other variables.

Read in the data and display the first few lines. Describe how the data are not “tidy”.

Use pivot_longer to arrange the data suitably for analysis (which will be using multinom). Demonstrate (by looking at the first few rows of your new data frame) that you now have something tidy.

What is different about this problem, compared to Question here, that would make multinom the right tool to use?

Fit a suitable multinomial model predicting food type from gender, size and lake. Does each row represent one alligator or more than one? If more than one, account for this in your modelling.

Do a test to see whether Gender should stay in the model. (This will entail fitting another model.) What do you conclude?

Predict the probability that an alligator prefers each food type, given its size, gender (if necessary) and the lake it was found in, using the more appropriate of the two models that you have fitted so far. This means (i) making a data frame for prediction, and (ii) obtaining and displaying the predicted probabilities in a way that is easy to read.

What do you think is the most important way in which the lakes differ? (Hint: look at where the biggest predicted probabilities are.)

How would you describe the major difference between the diets of the small and large alligators?

28.4 Crimes in San Francisco

The data in link is a subset of a huge dataset of crimes committed in San Francisco between 2003 and 2015. The variables are:

- Dates: the date and time of the crime
- Category: the category of crime, e.g. “larceny” or “vandalism” (response).
- Descript: detailed description of crime.
- DayOfWeek: the day of the week of the crime.
- PdDistrict: the name of the San Francisco Police Department district in which the crime took place.
- Resolution: how the crime was resolved
- Address: approximate street address of crime
- X: longitude
- Y: latitude

Our aim is to see whether the category of crime depends on the day of the week and the district in which it occurred. However, there are a lot of crime categories, so we will focus on the top four “interesting” ones, which are the ones included in this data file.

Some of the model-fitting takes a while (you’ll see why below). If you’re using R Markdown, you can wait for the models to fit each time you re-run your document, or insert cache=T in the top line of your code chunk (the one with r in curly brackets in it, above the actual code). Put a comma and the cache=T inside the curly brackets. What that does is to re-run that code chunk only if it changes; if it hasn’t changed it will use the saved results from last time it was run. That can save you a lot of waiting around.

Read in the data and display the dataset (or, at least, part of it).

Fit a multinomial logistic regression that predicts crime category from day of week and district. (You don’t need to look at it.) The model-fitting produces some output. (If you’re using R Markdown, that will come with it.)

Fit a model that predicts Category from only the district. Hand in the output from the fitting process as well.

Use anova to compare the two models you just obtained. What does the anova tell you?

Using your preferred model, obtain predicted probabilities that a crime will be of each of these four categories for each day of the week in the TENDERLOIN district (the name is ALL CAPS). This will mean constructing a data frame to predict from, obtaining the predictions and then displaying them suitably.

Describe briefly how the weekend days Saturday and Sunday differ from the rest.

28.5 Crimes in San Francisco – the data

The data in link is a huge dataset of crimes committed in San Francisco between 2003 and 2015. The variables are:

- Dates: the date and time of the crime
- Category: the category of crime, e.g. “larceny” or “vandalism” (response).
- Descript: detailed description of crime.
- DayOfWeek: the day of the week of the crime.
- PdDistrict: the name of the San Francisco Police Department district in which the crime took place.
- Resolution: how the crime was resolved
- Address: approximate street address of crime
- X: longitude
- Y: latitude

Our aim is to see whether the category of crime depends on the day of the week and the district in which it occurred. However, there are a lot of crime categories, so we will focus on the top four “interesting” ones, which we will have to discover.

Read in the data and verify that you have these columns and a lot of rows. (The data may take a moment to read in. You will see why.)

How is the response variable here different to the one in the question about steak preferences (and therefore why would multinom from package nnet be the method of choice)?

Find out which crime categories there are, and arrange them in order of how many crimes there were in each category.

Which are the four most frequent “interesting” crime categories, that is to say, not including “other offenses” and “non-criminal”? Get them into a vector called my.crimes. See if you can find a way of doing this that doesn’t involve typing them in (for full marks).

(Digression, but needed for the next part.) The R vector letters contains the lowercase letters from a to z. Consider the vector ('a','m',3,'Q'). Some of these are found amongst the lowercase letters, and some not. Type these into a vector v and explain briefly why v %in% letters produces what it does.

We are going to filter only the rows of our data frame that have one of the crimes in my.crimes as their Category. Also, select only the columns Category, DayOfWeek and PdDistrict. Save the resulting data frame and display its structure. (You should have a lot fewer rows than you did before.)

Save these data in a file sfcrime1.csv.

28.6 What sports do these athletes play?

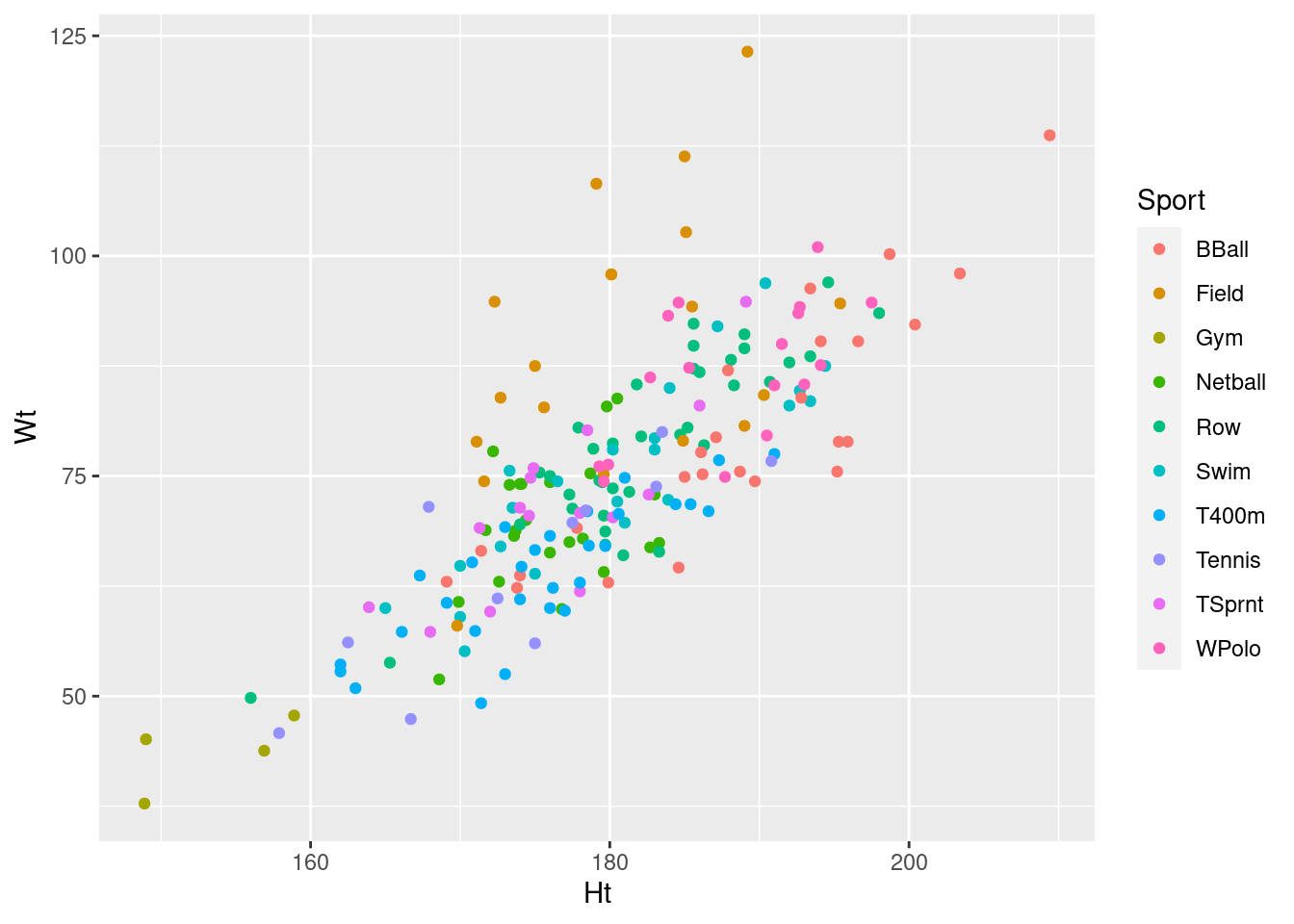

The data at link are physical and physiological measurements of 202 male and female Australian elite athletes. The data values are separated by tabs. We are going to see whether we can predict the sport an athlete plays from their height and weight.

The sports, if you care, are respectively basketball, “field athletics” (e.g. shot put, javelin throw, long jump etc.), gymnastics, netball, rowing, swimming, 400m running, tennis, sprinting (100m or 200m running), water polo.

Read in the data and display the first few rows.

Make a scatterplot of height vs. weight, with the points coloured by what sport the athlete plays. Put height on the \(x\)-axis and weight on the \(y\)-axis.

Explain briefly why a multinomial model (multinom from nnet) would be the best thing to use to predict sport played from the other variables.

Fit a suitable model for predicting sport played from height and weight. (You don’t need to look at the results.) 100 steps isn’t quite enough, so set maxit equal to a larger number to allow the estimation to finish.

Demonstrate using anova that Wt should not be removed from this model.

Make a data frame consisting of all combinations of Ht 160, 180 and 200 (cm), and Wt 50, 75, and 100 (kg), and use it to obtain predicted probabilities of athletes of those heights and weights playing each of the sports. Display the results. You might have to display them smaller, or reduce the number of decimal places36 to fit them on the page.

For an athlete who is 180 cm tall and weighs 100 kg, what sport would you guess they play? How sure are you that you are right? Explain briefly.

My solutions follow:

28.7 Finding non-missing values

* This is to prepare you for something in the next question. It’s meant to be easy.

In R, the code NA stands for “missing value” or “value not known”. In R, NA should not have quotes around it. (It is a special code, not a piece of text.)
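To see why the quotes matter, here is a tiny base-R check. The vectors x and y are made up just for this illustration; the point is that the unquoted NA is treated as missing, while the quoted "NA" is an ordinary two-character piece of text:

```r
# Unquoted NA is R's missing-value code
x <- c(3, NA, 7)
x_missing <- is.na(x)   # only the middle element is missing

# Quoted "NA" is just text, so is.na() does not flag it
y <- c("a", NA, "NA")
y_missing <- is.na(y)   # only the second element (the real NA) is missing

# NA on its own is a special logical value, not a character string
na_class <- class(NA)   # "logical"
```

If you ever read in a file where missing values were written as the text NA and they are not being recognized, this difference is the first thing to check.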

- Create a vector v that contains some numbers and some missing values, using c(). Put those values into a one-column data frame.

Solution

Like this. The arrangement of numbers and missing values doesn’t matter, as long as you have some of each:

v <- c(1, 2, NA, 4, 5, 6, 9, NA, 11)

mydata <- tibble(v)

mydata

## # A tibble: 9 × 1

## v

## <dbl>

## 1 1

## 2 2

## 3 NA

## 4 4

## 5 5

## 6 6

## 7 9

## 8 NA

## 9 11

This has one column called v.

\(\blacksquare\)

- Obtain a new column containing is.na(v). When is this true and when is this false?

Solution

mydata <- mydata %>% mutate(isna = is.na(v))

mydata

## # A tibble: 9 × 2

## v isna

## <dbl> <lgl>

## 1 1 FALSE

## 2 2 FALSE

## 3 NA TRUE

## 4 4 FALSE

## 5 5 FALSE

## 6 6 FALSE

## 7 9 FALSE

## 8 NA TRUE

## 9 11 FALSE

This is TRUE if the corresponding element of v is missing (in my case, the third value and the second-last one), and FALSE otherwise (when there is an actual value there).
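Because is.na(v) is a logical vector, it can be summarized directly. Here is a small base-R sketch, reusing the same v as above. It relies on TRUE counting as 1 and FALSE as 0 in arithmetic:

```r
v <- c(1, 2, NA, 4, 5, 6, 9, NA, 11)
isna <- is.na(v)

# Summing a logical vector counts its TRUE values, i.e. the missing values
n_missing <- sum(isna)

# which() returns the positions where the logical vector is TRUE
missing_positions <- which(isna)
```

Here n_missing is 2 and missing_positions is 3 and 8, which matches the two TRUE rows in the data frame above.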

\(\blacksquare\)

- The symbol ! means “not” in R (and other programming languages). What does !is.na(v) do? Create a new column containing that.

Solution

Try it and see. Give it whatever name you like. My name reflects that I know what it’s going to do:

mydata <- mydata %>% mutate(notisna = !is.na(v))

mydata

## # A tibble: 9 × 3

## v isna notisna

## <dbl> <lgl> <lgl>

## 1 1 FALSE TRUE

## 2 2 FALSE TRUE

## 3 NA TRUE FALSE

## 4 4 FALSE TRUE

## 5 5 FALSE TRUE

## 6 6 FALSE TRUE

## 7 9 FALSE TRUE

## 8 NA TRUE FALSE

## 9 11 FALSE TRUE

This is the logical opposite of is.na: it’s true if there is a value, and false if it’s missing.
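A quick base-R check of that claim, using the same v. The operator ! flips every element, so each position is TRUE in exactly one of is.na(v) and !is.na(v). Taking the mean of a logical vector gives the proportion of TRUE values:

```r
v <- c(1, 2, NA, 4, 5, 6, 9, NA, 11)
notisna <- !is.na(v)

# Every element disagrees with is.na(v): ! is an element-by-element "not"
always_opposite <- all(notisna != is.na(v))

# Proportion of values that are actually present (7 out of 9)
prop_present <- mean(notisna)
```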

\(\blacksquare\)

- Use filter to display just the rows of your data frame that have a non-missing value of v.

Solution

You can give filter a column that says which rows to pick, in which case the column should contain values that are either TRUE or FALSE, or something that can be interpreted that way:

mydata %>% filter(notisna)

## # A tibble: 7 × 3

## v isna notisna

## <dbl> <lgl> <lgl>

## 1 1 FALSE TRUE

## 2 2 FALSE TRUE

## 3 4 FALSE TRUE

## 4 5 FALSE TRUE

## 5 6 FALSE TRUE

## 6 9 FALSE TRUE

## 7 11 FALSE TRUE

or you can provide filter with something that can be calculated from what’s in the data frame, and that also returns something that is either true or false:

mydata %>% filter(!is.na(v))

## # A tibble: 7 × 3

## v isna notisna

## <dbl> <lgl> <lgl>

## 1 1 FALSE TRUE

## 2 2 FALSE TRUE

## 3 4 FALSE TRUE

## 4 5 FALSE TRUE

## 5 6 FALSE TRUE

## 6 9 FALSE TRUE

## 7 11 FALSE TRUE

In either case, I only have non-missing values of v.
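Two related shortcuts are worth knowing about. First, tidyr’s drop_na() removes rows with missing values in the columns you name. Second, filter keeps only the rows where the condition is TRUE, so any row where the condition comes out as NA (such as a comparison involving a missing v) is dropped as well. A small sketch, using the same data:

```r
library(tibble)
library(dplyr)
library(tidyr)

v <- c(1, 2, NA, 4, 5, 6, 9, NA, 11)
mydata <- tibble(v)

# Two ways of keeping only the rows where v is present
kept_filter <- mydata %>% filter(!is.na(v))
kept_dropna <- mydata %>% drop_na(v)

# NA > 3 is NA, not TRUE, so filter() drops the missing rows here too
big <- mydata %>% filter(v > 3)
```

Both kept_filter and kept_dropna have the same seven values of v. The data frame big has five rows and no missing values.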

\(\blacksquare\)

28.9 Alligator food

What do alligators most like to eat? 219 alligators were captured in four Florida lakes. Each alligator’s stomach contents were observed, and the food that the alligator had eaten was classified into one of five categories: fish, invertebrates (such as snails or insects), reptiles (such as turtles), birds, and “other” (such as amphibians, plants or rocks). The researcher noted for each alligator what that alligator had most of in its stomach, as well as the gender of each alligator and whether it was “large” or “small” (greater or less than 2.3 metres in length). The data can be found in link. The numbers in the data set (apart from the first column) are all frequencies. (You can ignore that first column “profile”.)

Our aim is to predict food type from the other variables.

- Read in the data and display the first few lines. Describe how the data are not “tidy”.

Solution

Separated by exactly one space:

my_url <- "http://ritsokiguess.site/datafiles/alligator.txt"

gators.orig <- read_delim(my_url, " ")

## Rows: 16 Columns: 9

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: " "

## chr (3): Gender, Size, Lake

## dbl (6): profile, Fish, Invertebrate, Reptile, Bird, Other

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

gators.orig

## # A tibble: 16 × 9

## profile Gender Size Lake Fish Invertebrate Reptile Bird Other

## <dbl> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 f <2.3 george 3 9 1 0 1

## 2 2 m <2.3 george 13 10 0 2 2

## 3 3 f >2.3 george 8 1 0 0 1

## 4 4 m >2.3 george 9 0 0 1 2

## 5 5 f <2.3 hancock 16 3 2 2 3

## 6 6 m <2.3 hancock 7 1 0 0 5

## 7 7 f >2.3 hancock 3 0 1 2 3

## 8 8 m >2.3 hancock 4 0 0 1 2

## 9 9 f <2.3 oklawaha 3 9 1 0 2

## 10 10 m <2.3 oklawaha 2 2 0 0 1

## 11 11 f >2.3 oklawaha 0 1 0 1 0

## 12 12 m >2.3 oklawaha 13 7 6 0 0

## 13 13 f <2.3 trafford 2 4 1 1 4

## 14 14 m <2.3 trafford 3 7 1 0 1

## 15 15 f >2.3 trafford 0 1 0 0 0

## 16 16 m >2.3 trafford 8 6 6 3 5

The last five columns are all frequencies. Or, one of the variables (food type) is spread over five columns instead of being contained in one. Either is good.

My choice of “temporary” name reflects that I’m going to obtain a “tidy” data frame called gators in a moment.

\(\blacksquare\)

- Use pivot_longer to arrange the data suitably for analysis (which will be using multinom). Demonstrate (by looking at the first few rows of your new data frame) that you now have something tidy.

Solution

I’m creating my “official” data frame here:

gators.orig %>%

pivot_longer(Fish:Other, names_to = "Food.type", values_to = "Frequency") -> gators

gators

## # A tibble: 80 × 6

## profile Gender Size Lake Food.type Frequency

## <dbl> <chr> <chr> <chr> <chr> <dbl>

## 1 1 f <2.3 george Fish 3

## 2 1 f <2.3 george Invertebrate 9

## 3 1 f <2.3 george Reptile 1

## 4 1 f <2.3 george Bird 0

## 5 1 f <2.3 george Other 1

## 6 2 m <2.3 george Fish 13

## 7 2 m <2.3 george Invertebrate 10

## 8 2 m <2.3 george Reptile 0

## 9 2 m <2.3 george Bird 2

## 10 2 m <2.3 george Other 2

## # … with 70 more rows

I gave my column names Capital Letters to make them consistent with the others (and in an attempt to stop infesting my brain with annoying variable-name errors when I fit models later).

Looking at the first few lines reveals that I now have a column of food types and one column of frequencies, both of which are what I wanted. I can check that I have all the different food types by finding the distinct ones:

gators %>% distinct(Food.type)

## # A tibble: 5 × 1

## Food.type

## <chr>

## 1 Fish

## 2 Invertebrate

## 3 Reptile

## 4 Bird

## 5 Other

(Think about why count would be confusing here.)

Note that Food.type is text (chr) rather than being a factor. I’ll hold my breath and see what happens when I fit a model where it is supposed to be a factor.
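As a sanity check on what pivot_longer does, here is a small sketch on just the first two rows of gators.orig, typed in from the display above (the name two_rows is only for illustration). Each wide row should become five long rows, one per food type, and the total frequency should not change:

```r
library(tibble)
library(tidyr)

# First two rows of gators.orig, copied from the output above
two_rows <- tribble(
  ~profile, ~Gender, ~Size, ~Lake, ~Fish, ~Invertebrate, ~Reptile, ~Bird, ~Other,
  1, "f", "<2.3", "george", 3, 9, 1, 0, 1,
  2, "m", "<2.3", "george", 13, 10, 0, 2, 2
)

# Same pivot_longer call as above, applied to the two-row version
long <- pivot_longer(two_rows, Fish:Other,
                     names_to = "Food.type", values_to = "Frequency")

# 2 wide rows x 5 food types = 10 long rows; 14 + 27 = 41 alligators either way
n_long <- nrow(long)
total_long <- sum(long$Frequency)
```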

\(\blacksquare\)

- What is different about this problem, compared to Question here, that would make multinom the right tool to use?

Solution

Look at the response variable Food.type (or whatever you called it): this has multiple categories, but they are not ordered in any logical way. Thus, in short, a nominal response.

\(\blacksquare\)

- Fit a suitable multinomial model predicting food type from gender, size and lake. Does each row represent one alligator or more than one? If more than one, account for this in your modelling.

Solution

Each row of the tidy gators represents as many alligators as are in the Frequency column. That is, if you look at female small alligators in Lake George that ate mainly fish, there are three of those.42

This is to remind you to include the weights piece, otherwise multinom will assume that you have one observation per line and not as many as the number in Frequency.

That is the reason that count earlier would have been confusing: it would have told you how many rows contained each food type, rather than how many alligators, and these would have been different:

gators %>% count(Food.type)

## # A tibble: 5 × 2

## Food.type n

## <chr> <int>

## 1 Bird 16

## 2 Fish 16

## 3 Invertebrate 16

## 4 Other 16

## 5 Reptile 16

gators %>% count(Food.type, wt = Frequency)

## # A tibble: 5 × 2

## Food.type n

## <chr> <dbl>

## 1 Bird 13

## 2 Fish 94

## 3 Invertebrate 61

## 4 Other 32

## 5 Reptile 19

Each food type appears on 16 rows, but is the favoured diet of very different numbers of alligators. Note the use of wt= to specify a frequency variable.43

You ought to understand why those are different.
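One way to convince yourself is to rebuild the data and count it three ways. The sketch below types in the frequencies from gators.orig as displayed above (leaving out profile). It also uses tidyr’s uncount(), which expands each row into Frequency copies, so that you get one row per alligator:

```r
library(tibble)
library(dplyr)
library(tidyr)

# Frequencies typed in from the display of gators.orig above
gators.orig <- tribble(
  ~Gender, ~Size, ~Lake, ~Fish, ~Invertebrate, ~Reptile, ~Bird, ~Other,
  "f", "<2.3", "george",    3,  9, 1, 0, 1,
  "m", "<2.3", "george",   13, 10, 0, 2, 2,
  "f", ">2.3", "george",    8,  1, 0, 0, 1,
  "m", ">2.3", "george",    9,  0, 0, 1, 2,
  "f", "<2.3", "hancock",  16,  3, 2, 2, 3,
  "m", "<2.3", "hancock",   7,  1, 0, 0, 5,
  "f", ">2.3", "hancock",   3,  0, 1, 2, 3,
  "m", ">2.3", "hancock",   4,  0, 0, 1, 2,
  "f", "<2.3", "oklawaha",  3,  9, 1, 0, 2,
  "m", "<2.3", "oklawaha",  2,  2, 0, 0, 1,
  "f", ">2.3", "oklawaha",  0,  1, 0, 1, 0,
  "m", ">2.3", "oklawaha", 13,  7, 6, 0, 0,
  "f", "<2.3", "trafford",  2,  4, 1, 1, 4,
  "m", "<2.3", "trafford",  3,  7, 1, 0, 1,
  "f", ">2.3", "trafford",  0,  1, 0, 0, 0,
  "m", ">2.3", "trafford",  8,  6, 6, 3, 5
)

gators <- gators.orig %>%
  pivot_longer(Fish:Other, names_to = "Food.type", values_to = "Frequency")

# Unweighted: number of rows per food type (16 each)
rows_per_type <- gators %>% count(Food.type)

# Weighted by Frequency: number of alligators per food type
gators_per_type <- gators %>% count(Food.type, wt = Frequency)

# One row per alligator; rows with Frequency 0 disappear entirely
one_per_gator <- gators %>% uncount(Frequency)
```

The weighted counts add up to 219, and so does the number of rows in one_per_gator. That total is the number of alligators in the study, which is what the weights in multinom need to reflect.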

All right, back to modelling:

library(nnet)

gators.1 <- multinom(Food.type ~ Gender + Size + Lake,
  weights = Frequency, data = gators
)

## # weights: 35 (24 variable)

## initial value 352.466903

## iter 10 value 270.228588

## iter 20 value 268.944257

## final value 268.932741

## converged

This worked, even though Food.type was actually text. I guess it got converted to a factor. The ordering of the levels doesn’t matter here anyway, since this is not an ordinal model: the order only decides which category is used as the baseline.

No need to look at it, since the output is kind of confusing anyway:

summary(gators.1)

## Call:

## multinom(formula = Food.type ~ Gender + Size + Lake, data = gators,

## weights = Frequency)

##

## Coefficients:

## (Intercept) Genderm Size>2.3 Lakehancock Lakeoklawaha

## Fish 2.4322304 0.60674971 -0.7308535 -0.5751295 0.5513785

## Invertebrate 2.6012531 0.14378459 -2.0671545 -2.3557377 1.4645820

## Other 1.0014505 0.35423803 -1.0214847 0.1914537 0.5775317

## Reptile -0.9829064 -0.02053375 -0.1741207 0.5534169 3.0807416

## Laketrafford

## Fish -1.23681053

## Invertebrate -0.08096493

## Other 0.32097943

## Reptile 1.82333205

##

## Std. Errors:

## (Intercept) Genderm Size>2.3 Lakehancock Lakeoklawaha

## Fish 0.7706940 0.6888904 0.6523273 0.7952147 1.210229

## Invertebrate 0.7917210 0.7292510 0.7084028 0.9463640 1.232835

## Other 0.8747773 0.7623738 0.7250455 0.9072182 1.374545

## Reptile 1.2827234 0.9088217 0.8555051 1.3797755 1.591542

## Laketrafford

## Fish 0.8661187

## Invertebrate 0.8814625

## Other 0.9589807

## Reptile 1.3388017

##

## Residual Deviance: 537.8655

## AIC: 585.8655

You get one coefficient for each variable (along the top) and for each response group (down the side), using the first group, Bird, as a baseline everywhere; that is why Bird has no row of its own. These numbers are hard to interpret; doing predictions is much easier.
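To see how these coefficients turn into probabilities, here is a hand calculation. It is a sketch that uses only numbers copied from the output above, and it covers two points. First, factor() sorts text levels alphabetically, which is consistent with Bird being the baseline. Second, for an alligator at the baseline of every explanatory variable (Gender f, Size <2.3, Lake george), each food type’s logit is just its intercept, with Bird fixed at 0. The probabilities come from exponentiating the logits and rescaling them to add up to 1:

```r
# factor() sorts text levels alphabetically, so Bird comes first
food <- c("Fish", "Invertebrate", "Reptile", "Bird", "Other")
food_levels <- levels(factor(food))

# Intercepts copied from summary(gators.1); the baseline category Bird has logit 0.
# These apply to a female, small (<2.3) alligator in Lake George.
logits <- c(Bird = 0, Fish = 2.4322304, Invertebrate = 2.6012531,
            Other = 1.0014505, Reptile = -0.9829064)

# Baseline-category logits -> probabilities: exponentiate, then rescale to sum to 1
probs <- exp(logits) / sum(exp(logits))
round(probs, 3)
```

For any other combination, you would first add the relevant coefficients (for example Genderm or Lakehancock) to each food type’s logit. In practice, predict(gators.1, type = "probs") does all of this for you.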

\(\blacksquare\)

- Do a test to see whether Gender should stay in the model. (This will entail fitting another model.) What do you conclude?

Solution

The other model to fit is the one without the variable you’re testing:

gators.2 <- update(gators.1, . ~ . - Gender)

## # weights: 30 (20 variable)

## initial value 352.466903

## iter 10 value 272.246275

## iter 20 value 270.046891

## final value 270.040139

## converged

I did update here to show you that it works, but of course there’s no problem in just writing out the whole model again and taking out Gender, preferably by copying and pasting:

gators.2x <- multinom(Food.type ~ Size + Lake,
  weights = Frequency, data = gators
)

## # weights: 30 (20 variable)

## initial value 352.466903

## iter 10 value 272.246275

## iter 20 value 270.046891

## final value 270.040139

## converged

and then you compare the models with and without Gender using anova:

anova(gators.2, gators.1)

## Likelihood ratio tests of Multinomial Models

##

## Response: Food.type

## Model Resid. df Resid. Dev Test Df LR stat. Pr(Chi)

## 1 Size + Lake 300 540.0803

## 2 Gender + Size + Lake 296 537.8655 1 vs 2 4 2.214796 0.6963214

The P-value is not small, so the model without Gender fits no significantly worse than the model with it, and therefore we should go with the smaller, simpler one: that is, the one without Gender.
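The numbers in that anova table can be reproduced by hand from the fitting output. The residual deviance is twice the “final value” that multinom prints (2 × 268.932741 = 537.8655). The LR statistic is the difference in deviances, and its degrees of freedom are the difference in the number of parameters (24 vs. 20, from the “variable” counts). A base-R sketch:

```r
# Residual deviances: twice the "final value" printed by multinom
dev_with_gender    <- 2 * 268.932741   # gators.1: Gender + Size + Lake
dev_without_gender <- 2 * 270.040139   # gators.2: Size + Lake

# Likelihood-ratio statistic and its chi-squared P-value
lr_stat <- dev_without_gender - dev_with_gender
p_value <- pchisq(lr_stat, df = 24 - 20, lower.tail = FALSE)
```

This gives an LR statistic of about 2.2148 on 4 df, with a P-value of about 0.696, matching the anova output.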

Sometimes drop1 works here too (and sometimes it doesn’t, for reasons I haven’t figured out):

drop1(gators.1, test = "Chisq")

## trying - Gender

## Error in if (trace) {: argument is not interpretable as logical

I don’t even know what this error message means, never mind what to do about it.

\(\blacksquare\)

- Predict the probability that an alligator prefers each food type, given its size, gender (if necessary) and the lake it was found in, using the more appropriate of the two models that you have fitted so far. This means (i) making a data frame for prediction, and (ii) obtaining and displaying the predicted probabilities in a way that is easy to read.

Solution

Our best model gators.2 contains size and lake, so we need to predict for all combinations of those.
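For reference, “all combinations” here means two sizes times four lakes, which is eight rows. Here is a small sketch that lays them out with tidyr’s crossing(); the name new is just an illustrative choice, and the level spellings are copied from the data:

```r
library(tidyr)

# Every combination of the two explanatory variables left in gators.2
new <- crossing(
  Size = c("<2.3", ">2.3"),
  Lake = c("george", "hancock", "oklawaha", "trafford")
)

# With gators.2 fitted as above, predict(gators.2, new, type = "probs")
# would return one row of five food-type probabilities per row of new.
```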

You might think of this first, which is easiest:

library(marginaleffects)

predictions(gators.2, variables = c("Size", "Lake"), type = "probs")

## rowid type group predicted std.error Food.type Size Lake

## 1 1 probs Bird 0.029671502 0.026490803 Fish <2.3 george

## 2 2 probs Bird 0.029671502 0.026490803 Fish <2.3 george

## 3 3 probs Bird 0.029671502 0.026490803 Fish <2.3 george

## 4 4 probs Bird 0.029671502 0.026490803 Fish <2.3 george

## 5 5 probs Bird 0.070400215 0.014591121 Fish <2.3 hancock

## 6 6 probs Bird 0.070400215 0.014591121 Fish <2.3 hancock

## 7 7 probs Bird 0.070400215 0.014591121 Fish <2.3 hancock

## 8 8 probs Bird 0.070400215 0.014591121 Fish <2.3 hancock

## 9 9 probs Bird 0.008818267 0.031423530 Fish <2.3 oklawaha

## 10 10 probs Bird 0.008818267 0.031423530 Fish <2.3 oklawaha

## 11 11 probs Bird 0.008818267 0.031423530 Fish <2.3 oklawaha

## 12 12 probs Bird 0.008818267 0.031423530 Fish <2.3 oklawaha

## 13 13 probs Bird 0.035892547 0.030455498 Fish <2.3 trafford

## 14 14 probs Bird 0.035892547 0.030455498 Fish <2.3 trafford

## 15 15 probs Bird 0.035892547 0.030455498 Fish <2.3 trafford

## 16 16 probs Bird 0.035892547 0.030455498 Fish <2.3 trafford

## 17 17 probs Bird 0.081071082 0.028409610 Fish >2.3 george

## 18 18 probs Bird 0.081071082 0.028409610 Fish >2.3 george

## 19 19 probs Bird 0.081071082 0.028409610 Fish >2.3 george

## 20 20 probs Bird 0.081071082 0.028409610 Fish >2.3 george

## 21 21 probs Bird 0.140898571 0.033231369 Fish >2.3 hancock

## 22 22 probs Bird 0.140898571 0.033231369 Fish >2.3 hancock

## 23 23 probs Bird 0.140898571 0.033231369 Fish >2.3 hancock

## 24 24 probs Bird 0.140898571 0.033231369 Fish >2.3 hancock

## 25 25 probs Bird 0.029419560 0.043739196 Fish >2.3 oklawaha

## 26 26 probs Bird 0.029419560 0.043739196 Fish >2.3 oklawaha

## 27 27 probs Bird 0.029419560 0.043739196 Fish >2.3 oklawaha

## 28 28 probs Bird 0.029419560 0.043739196 Fish >2.3 oklawaha

## 29 29 probs Bird 0.108222209 0.049665725 Fish >2.3 trafford

## 30 30 probs Bird 0.108222209 0.049665725 Fish >2.3 trafford

## 31 31 probs Bird 0.108222209 0.049665725 Fish >2.3 trafford

## 32 32 probs Bird 0.108222209 0.049665725 Fish >2.3 trafford

## 33 1 probs Fish 0.452103200 0.190911359 Fish <2.3 george

## 34 2 probs Fish 0.452103200 0.190911359 Fish <2.3 george

## 35 3 probs Fish 0.452103200 0.190911359 Fish <2.3 george

## 36 4 probs Fish 0.452103200 0.190911359 Fish <2.3 george

## 37 5 probs Fish 0.535303986 0.053136442 Fish <2.3 hancock

## 38 6 probs Fish 0.535303986 0.053136442 Fish <2.3 hancock

## 39 7 probs Fish 0.535303986 0.053136442 Fish <2.3 hancock

## 40 8 probs Fish 0.535303986 0.053136442 Fish <2.3 hancock

## 41 9 probs Fish 0.258187239 0.137187952 Fish <2.3 oklawaha

## 42 10 probs Fish 0.258187239 0.137187952 Fish <2.3 oklawaha

## 43 11 probs Fish 0.258187239 0.137187952 Fish <2.3 oklawaha

## 44 12 probs Fish 0.258187239 0.137187952 Fish <2.3 oklawaha

## 45 13 probs Fish 0.184299727 0.500835571 Fish <2.3 trafford

## 46 14 probs Fish 0.184299727 0.500835571 Fish <2.3 trafford

## 47 15 probs Fish 0.184299727 0.500835571 Fish <2.3 trafford

## 48 16 probs Fish 0.184299727 0.500835571 Fish <2.3 trafford

## 49 17 probs Fish 0.657439424 0.251626520 Fish >2.3 george

## 50 18 probs Fish 0.657439424 0.251626520 Fish >2.3 george

## 51 19 probs Fish 0.657439424 0.251626520 Fish >2.3 george

## 52 20 probs Fish 0.657439424 0.251626520 Fish >2.3 george

## 53 21 probs Fish 0.570196832 0.288825624 Fish >2.3 hancock

## 54 22 probs Fish 0.570196832 0.288825624 Fish >2.3 hancock

## 55 23 probs Fish 0.570196832 0.288825624 Fish >2.3 hancock

## 56 24 probs Fish 0.570196832 0.288825624 Fish >2.3 hancock

## 57 25 probs Fish 0.458436758 0.314296880 Fish >2.3 oklawaha

## 58 26 probs Fish 0.458436758 0.314296880 Fish >2.3 oklawaha

## 59 27 probs Fish 0.458436758 0.314296880 Fish >2.3 oklawaha

## 60 28 probs Fish 0.458436758 0.314296880 Fish >2.3 oklawaha

## 61 29 probs Fish 0.295752571 0.220261657 Fish >2.3 trafford

## 62 30 probs Fish 0.295752571 0.220261657 Fish >2.3 trafford

## 63 31 probs Fish 0.295752571 0.220261657 Fish >2.3 trafford

## 64 32 probs Fish 0.295752571 0.220261657 Fish >2.3 trafford

## 65 1 probs Invertebrate 0.412856987 0.028347945 Fish <2.3 george

## 66 2 probs Invertebrate 0.412856987 0.028347945 Fish <2.3 george

## 67 3 probs Invertebrate 0.412856987 0.028347945 Fish <2.3 george

## 68 4 probs Invertebrate 0.412856987 0.028347945 Fish <2.3 george

## 69 5 probs Invertebrate 0.093098852 0.011544508 Fish <2.3 hancock

## 70 6 probs Invertebrate 0.093098852 0.011544508 Fish <2.3 hancock

## 71 7 probs Invertebrate 0.093098852 0.011544508 Fish <2.3 hancock

## 72 8 probs Invertebrate 0.093098852 0.011544508 Fish <2.3 hancock

## 73 9 probs Invertebrate 0.601895180 0.072899406 Fish <2.3 oklawaha

## 74 10 probs Invertebrate 0.601895180 0.072899406 Fish <2.3 oklawaha

## 75 11 probs Invertebrate 0.601895180 0.072899406 Fish <2.3 oklawaha

## 76 12 probs Invertebrate 0.601895180 0.072899406 Fish <2.3 oklawaha

## 77 13 probs Invertebrate 0.516837696 0.031040421 Fish <2.3 trafford

## 78 14 probs Invertebrate 0.516837696 0.031040421 Fish <2.3 trafford

## 79 15 probs Invertebrate 0.516837696 0.031040421 Fish <2.3 trafford

## 80 16 probs Invertebrate 0.516837696 0.031040421 Fish <2.3 trafford

## 81 17 probs Invertebrate 0.139678770 0.266063328 Fish >2.3 george

## 82 18 probs Invertebrate 0.139678770 0.266063328 Fish >2.3 george

## 83 19 probs Invertebrate 0.139678770 0.266063328 Fish >2.3 george

## 84 20 probs Invertebrate 0.139678770 0.266063328 Fish >2.3 george

## 85 21 probs Invertebrate 0.023071788 0.253432814 Fish >2.3 hancock

## 86 22 probs Invertebrate 0.023071788 0.253432814 Fish >2.3 hancock

## 87 23 probs Invertebrate 0.023071788 0.253432814 Fish >2.3 hancock

## 88 24 probs Invertebrate 0.023071788 0.253432814 Fish >2.3 hancock

## 89 25 probs Invertebrate 0.248644081 0.340363100 Fish >2.3 oklawaha

## 90 26 probs Invertebrate 0.248644081 0.340363100 Fish >2.3 oklawaha

## 91 27 probs Invertebrate 0.248644081 0.340363100 Fish >2.3 oklawaha

## 92 28 probs Invertebrate 0.248644081 0.340363100 Fish >2.3 oklawaha

## 93 29 probs Invertebrate 0.192961478 0.293280651 Fish >2.3 trafford

## 94 30 probs Invertebrate 0.192961478 0.293280651 Fish >2.3 trafford

## 95 31 probs Invertebrate 0.192961478 0.293280651 Fish >2.3 trafford

## 96 32 probs Invertebrate 0.192961478 0.293280651 Fish >2.3 trafford

## 97 1 probs Other 0.093801903 0.030785528 Fish <2.3 george

## 98 2 probs Other 0.093801903 0.030785528 Fish <2.3 george

## 99 3 probs Other 0.093801903 0.030785528 Fish <2.3 george

## 100 4 probs Other 0.093801903 0.030785528 Fish <2.3 george

## 101 5 probs Other 0.253741634 0.025649228 Fish <2.3 hancock

## 102 6 probs Other 0.253741634 0.025649228 Fish <2.3 hancock

## 103 7 probs Other 0.253741634 0.025649228 Fish <2.3 hancock

## 104 8 probs Other 0.253741634 0.025649228 Fish <2.3 hancock

## 105 9 probs Other 0.053872406 0.048740534 Fish <2.3 oklawaha

## 106 10 probs Other 0.053872406 0.048740534 Fish <2.3 oklawaha

## 107 11 probs Other 0.053872406 0.048740534 Fish <2.3 oklawaha

## 108 12 probs Other 0.053872406 0.048740534 Fish <2.3 oklawaha

## 109 13 probs Other 0.174203303 0.287237268 Fish <2.3 trafford

## 110 14 probs Other 0.174203303 0.287237268 Fish <2.3 trafford

## 111 15 probs Other 0.174203303 0.287237268 Fish <2.3 trafford

## 112 16 probs Other 0.174203303 0.287237268 Fish <2.3 trafford

## 113 17 probs Other 0.097911930 0.005970406 Fish >2.3 george

## 114 18 probs Other 0.097911930 0.005970406 Fish >2.3 george

## 115 19 probs Other 0.097911930 0.005970406 Fish >2.3 george

## 116 20 probs Other 0.097911930 0.005970406 Fish >2.3 george

## 117 21 probs Other 0.194008990 0.008762261 Fish >2.3 hancock

## 118 22 probs Other 0.194008990 0.008762261 Fish >2.3 hancock

## 119 23 probs Other 0.194008990 0.008762261 Fish >2.3 hancock

## 120 24 probs Other 0.194008990 0.008762261 Fish >2.3 hancock

## 121 25 probs Other 0.068662061 0.007119469 Fish >2.3 oklawaha

## 122 26 probs Other 0.068662061 0.007119469 Fish >2.3 oklawaha

## 123 27 probs Other 0.068662061 0.007119469 Fish >2.3 oklawaha

## 124 28 probs Other 0.068662061 0.007119469 Fish >2.3 oklawaha

## 125 29 probs Other 0.200662408 0.106641288 Fish >2.3 trafford

## 126 30 probs Other 0.200662408 0.106641288 Fish >2.3 trafford

## 127 31 probs Other 0.200662408 0.106641288 Fish >2.3 trafford

## 128 32 probs Other 0.200662408 0.106641288 Fish >2.3 trafford

## 129 1 probs Reptile 0.011566408 0.137747240 Fish <2.3 george

## 130 2 probs Reptile 0.011566408 0.137747240 Fish <2.3 george

## 131 3 probs Reptile 0.011566408 0.137747240 Fish <2.3 george

## 132 4 probs Reptile 0.011566408 0.137747240 Fish <2.3 george

## 133 5 probs Reptile 0.047455313 0.011939732 Fish <2.3 hancock

## 134 6 probs Reptile 0.047455313 0.011939732 Fish <2.3 hancock

## 135 7 probs Reptile 0.047455313 0.011939732 Fish <2.3 hancock

## 136 8 probs Reptile 0.047455313 0.011939732 Fish <2.3 hancock

## 137 9 probs Reptile 0.077226908 0.055750872 Fish <2.3 oklawaha

## 138 10 probs Reptile 0.077226908 0.055750872 Fish <2.3 oklawaha

## 139 11 probs Reptile 0.077226908 0.055750872 Fish <2.3 oklawaha

## 140 12 probs Reptile 0.077226908 0.055750872 Fish <2.3 oklawaha

## 141 13 probs Reptile 0.088766727 0.321998572 Fish <2.3 trafford

## 142 14 probs Reptile 0.088766727 0.321998572 Fish <2.3 trafford

## 143 15 probs Reptile 0.088766727 0.321998572 Fish <2.3 trafford

## 144 16 probs Reptile 0.088766727 0.321998572 Fish <2.3 trafford

## 145 17 probs Reptile 0.023898795 0.025910578 Fish >2.3 george

## 146 18 probs Reptile 0.023898795 0.025910578 Fish >2.3 george

## 147 19 probs Reptile 0.023898795 0.025910578 Fish >2.3 george

## 148 20 probs Reptile 0.023898795 0.025910578 Fish >2.3 george

## 149 21 probs Reptile 0.071823819 0.003294197 Fish >2.3 hancock

## 150 22 probs Reptile 0.071823819 0.003294197 Fish >2.3 hancock

## 151 23 probs Reptile 0.071823819 0.003294197 Fish >2.3 hancock

## 152 24 probs Reptile 0.071823819 0.003294197 Fish >2.3 hancock

## 153 25 probs Reptile 0.194837540 0.005228529 Fish >2.3 oklawaha

## 154 26 probs Reptile 0.194837540 0.005228529 Fish >2.3 oklawaha

## 155 27 probs Reptile 0.194837540 0.005228529 Fish >2.3 oklawaha

## 156 28 probs Reptile 0.194837540 0.005228529 Fish >2.3 oklawaha

## 157 29 probs Reptile 0.202401335 0.119901577 Fish >2.3 trafford

## 158 30 probs Reptile 0.202401335 0.119901577 Fish >2.3 trafford

## 159 31 probs Reptile 0.202401335 0.119901577 Fish >2.3 trafford

## 160 32 probs Reptile 0.202401335 0.119901577 Fish >2.3 trafford

## Frequency

## 1 0.0

## 2 1.0

## 3 3.5

## 4 16.0

## 5 0.0

## 6 1.0

## 7 3.5

## 8 16.0

## 9 0.0

## 10 1.0

## 11 3.5

## 12 16.0

## 13 0.0

## 14 1.0

## 15 3.5

## 16 16.0

## 17 0.0

## 18 1.0

## 19 3.5

## 20 16.0

## 21 0.0

## 22 1.0

## 23 3.5

## 24 16.0

## 25 0.0

## 26 1.0

## 27 3.5

## 28 16.0

## 29 0.0

## 30 1.0

## 31 3.5

## 32 16.0

## 33 0.0

## 34 1.0

## 35 3.5

## 36 16.0

## 37 0.0

## 38 1.0

## 39 3.5

## 40 16.0

## 41 0.0

## 42 1.0

## 43 3.5

## 44 16.0

## 45 0.0

## 46 1.0

## 47 3.5

## 48 16.0

## 49 0.0

## 50 1.0

## 51 3.5

## 52 16.0

## 53 0.0

## 54 1.0

## 55 3.5

## 56 16.0

## 57 0.0

## 58 1.0

## 59 3.5

## 60 16.0

## 61 0.0

## 62 1.0

## 63 3.5

## 64 16.0

## 65 0.0

## 66 1.0

## 67 3.5

## 68 16.0

## 69 0.0

## 70 1.0

## 71 3.5

## 72 16.0

## 73 0.0

## 74 1.0

## 75 3.5

## 76 16.0

## 77 0.0

## 78 1.0

## 79 3.5

## 80 16.0

## 81 0.0

## 82 1.0

## 83 3.5

## 84 16.0

## 85 0.0

## 86 1.0

## 87 3.5

## 88 16.0

## 89 0.0

## 90 1.0

## 91 3.5

## 92 16.0

## 93 0.0

## 94 1.0

## 95 3.5

## 96 16.0

## 97 0.0

## 98 1.0

## 99 3.5

## 100 16.0

## 101 0.0

## 102 1.0

## 103 3.5

## 104 16.0

## 105 0.0

## 106 1.0

## 107 3.5

## 108 16.0

## 109 0.0

## 110 1.0

## 111 3.5

## 112 16.0

## 113 0.0

## 114 1.0

## 115 3.5

## 116 16.0

## 117 0.0

## 118 1.0

## 119 3.5

## 120 16.0

## 121 0.0

## 122 1.0

## 123 3.5

## 124 16.0

## 125 0.0

## 126 1.0

## 127 3.5

## 128 16.0

## 129 0.0

## 130 1.0

## 131 3.5

## 132 16.0

## 133 0.0

## 134 1.0

## 135 3.5

## 136 16.0

## 137 0.0

## 138 1.0

## 139 3.5

## 140 16.0

## 141 0.0

## 142 1.0

## 143 3.5

## 144 16.0

## 145 0.0

## 146 1.0

## 147 3.5

## 148 16.0

## 149 0.0

## 150 1.0

## 151 3.5

## 152 16.0

## 153 0.0

## 154 1.0

## 155 3.5

## 156 16.0

## 157 0.0

## 158 1.0

## 159 3.5

## 160 16.0

except that this uses the original data frame, in which the sizes and lakes are repeated (once for each food type, of which there are five):

gators

## # A tibble: 80 × 6

## profile Gender Size Lake Food.type Frequency

## <dbl> <chr> <chr> <chr> <chr> <dbl>

## 1 1 f <2.3 george Fish 3

## 2 1 f <2.3 george Invertebrate 9

## 3 1 f <2.3 george Reptile 1

## 4 1 f <2.3 george Bird 0

## 5 1 f <2.3 george Other 1

## 6 2 m <2.3 george Fish 13

## 7 2 m <2.3 george Invertebrate 10

## 8 2 m <2.3 george Reptile 0

## 9 2 m <2.3 george Bird 2

## 10 2 m <2.3 george Other 2

## # … with 70 more rows

You can persist with the above, if you are careful:

predictions(gators.2, variables = c("Size", "Lake"), type = "probs") %>%

select(group, predicted, Size, Lake) %>%

pivot_wider(names_from = group, values_from = predicted)

## Warning: Values from `predicted` are not uniquely identified; output will contain list-cols.

## * Use `values_fn = list` to suppress this warning.

## * Use `values_fn = {summary_fun}` to summarise duplicates.

## * Use the following dplyr code to identify duplicates.

## {data} %>%

## dplyr::group_by(Size, Lake, group) %>%

## dplyr::summarise(n = dplyr::n(), .groups = "drop") %>%

## dplyr::filter(n > 1L)

## # A tibble: 8 × 7

## Size Lake Bird Fish Invertebrate Other Reptile

## <chr> <chr> <list> <list> <list> <list> <list>

## 1 <2.3 george <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]>

## 2 <2.3 hancock <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]>

## 3 <2.3 oklawaha <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]>

## 4 <2.3 trafford <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]>

## 5 >2.3 george <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]>

## 6 >2.3 hancock <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]>

## 7 >2.3 oklawaha <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]>

## 8 >2.3 trafford <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]> <dbl [4]>

and now you have four numbers in each cell (that are actually all the same), corresponding to the four repeats of size and lake in the original dataset.

A little reading of the help for pivot_wider reveals that there is an option values_fn. This is for exactly this case, where pivoting wider has resulted in multiple values per cell. The input to values_fn is a function that will be used to summarize the four values in each cell. Since those four values are all the same in each case (they are predictions for the same values of size and lake), you could summarize them by any of mean, median, max, min, etc:44

predictions(gators.2, variables = c("Size", "Lake"), type = "probs") %>%

select(group, predicted, Size, Lake) %>%

pivot_wider(names_from = group, values_from = predicted, values_fn = mean) -> preds1

preds1

## # A tibble: 8 × 7

## Size Lake Bird Fish Invertebrate Other Reptile

## <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 <2.3 george 0.0297 0.452 0.413 0.0938 0.0116

## 2 <2.3 hancock 0.0704 0.535 0.0931 0.254 0.0475

## 3 <2.3 oklawaha 0.00882 0.258 0.602 0.0539 0.0772

## 4 <2.3 trafford 0.0359 0.184 0.517 0.174 0.0888

## 5 >2.3 george 0.0811 0.657 0.140 0.0979 0.0239

## 6 >2.3 hancock 0.141 0.570 0.0231 0.194 0.0718

## 7 >2.3 oklawaha 0.0294 0.458 0.249 0.0687 0.195

## 8 >2.3 trafford 0.108 0.296 0.193 0.201 0.202

So that works, but you might not have thought of all that.
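If values_fn is new to you, here is the same situation in miniature, on made-up data (the names toy and wide exist only for this illustration). Each Size and group pair appears twice with the same predicted value, so pivoting wider would normally give list-columns; values_fn = mean collapses each pair to a single number.

```r
library(tibble)
library(tidyr)

# Made-up long data: each Size/group pair appears twice with the same value,
# like the repeated rows in the predictions output above
toy <- tibble(
  Size      = rep(c("<2.3", ">2.3"), each = 4),
  group     = rep(c("Fish", "Fish", "Bird", "Bird"), times = 2),
  predicted = c(0.45, 0.45, 0.03, 0.03, 0.66, 0.66, 0.08, 0.08)
)

# Without values_fn, each cell would hold two values (a list-column, plus a warning);
# values_fn = mean summarizes each cell down to one number
wide <- pivot_wider(toy, names_from = group, values_from = predicted,
                    values_fn = mean)
wide
```

Since the duplicated values are identical, median, max or min would give the same wide table.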

The other way is to get hold of the different lake and size names, and then use datagrid. Here is one way to get the names:

Lakes <- gators %>% distinct(Lake) %>% pull(Lake)

Lakes

## [1] "george" "hancock" "oklawaha" "trafford"

Sizes <- gators %>% distinct(Size) %>% pull(Size)

Sizes

## [1] "<2.3" ">2.3"

I didn’t need to think about Genders because that’s not in the better model. See below for what happens if you include it anyway.

I have persisted with the Capital Letters, for consistency.

Then:

new <- datagrid(model = gators.2, Size = Sizes, Lake = Lakes)

new

## Frequency Size Lake

## 1: 2.7375 <2.3 george

## 2: 2.7375 <2.3 hancock

## 3: 2.7375 <2.3 oklawaha

## 4: 2.7375 <2.3 trafford

## 5: 2.7375 >2.3 george

## 6: 2.7375 >2.3 hancock

## 7: 2.7375 >2.3 oklawaha

## 8: 2.7375 >2.3 trafford

The Frequency column is the mean Frequency observed (219 alligators spread over 80 rows gives 219/80 = 2.7375). Frequency was (kinda) part of the model, as the weights, but it won’t affect the predictions that we get.
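For comparison, here is a rough equivalent of the grid that datagrid produced, built with crossing from tidyr instead (a swapped-in tool; this is not a description of how datagrid works internally). It makes every combination of the supplied sizes and lakes and holds Frequency at its mean. The small data frame gators_toy is made up, standing in for gators.

```r
library(tibble)
library(dplyr)
library(tidyr)

# Hypothetical stand-in for gators (values made up for illustration)
gators_toy <- tibble(
  Size      = c("<2.3", "<2.3", ">2.3"),
  Lake      = c("george", "hancock", "george"),
  Frequency = c(3, 9, 0)
)

# Distinct values of each explanatory variable, as in the text
Lakes <- gators_toy %>% distinct(Lake) %>% pull(Lake)
Sizes <- gators_toy %>% distinct(Size) %>% pull(Size)

# All Size/Lake combinations, with Frequency held at its mean
grid <- crossing(Size = Sizes, Lake = Lakes) %>%
  mutate(Frequency = mean(gators_toy$Frequency))
grid
```

Note that crossing sorts its inputs, so the row order may differ from what datagrid gives; the set of combinations is the same.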

Next:

predictions(gators.2, newdata = new, type = "probs")

## rowid type group predicted std.error Frequency Size Lake

## 1 1 probs Bird 0.029671502 0.026490803 2.7375 <2.3 george

## 2 2 probs Bird 0.070400215 0.014591121 2.7375 <2.3 hancock

## 3 3 probs Bird 0.008818267 0.031423530 2.7375 <2.3 oklawaha

## 4 4 probs Bird 0.035892547 0.030455498 2.7375 <2.3 trafford

## 5 5 probs Bird 0.081071082 0.028409610 2.7375 >2.3 george

## 6 6 probs Bird 0.140898571 0.033231369 2.7375 >2.3 hancock

## 7 7 probs Bird 0.029419560 0.043739196 2.7375 >2.3 oklawaha

## 8 8 probs Bird 0.108222209 0.049665725 2.7375 >2.3 trafford

## 9 1 probs Fish 0.452103200 0.190911359 2.7375 <2.3 george

## 10 2 probs Fish 0.535303986 0.053136442 2.7375 <2.3 hancock

## 11 3 probs Fish 0.258187239 0.137187952 2.7375 <2.3 oklawaha

## 12 4 probs Fish 0.184299727 0.500835571 2.7375 <2.3 trafford

## 13 5 probs Fish 0.657439424 0.251626520 2.7375 >2.3 george

## 14 6 probs Fish 0.570196832 0.288825624 2.7375 >2.3 hancock

## 15 7 probs Fish 0.458436758 0.314296880 2.7375 >2.3 oklawaha

## 16 8 probs Fish 0.295752571 0.220261657 2.7375 >2.3 trafford

## 17 1 probs Invertebrate 0.412856987 0.028347945 2.7375 <2.3 george

## 18 2 probs Invertebrate 0.093098852 0.011544508 2.7375 <2.3 hancock

## 19 3 probs Invertebrate 0.601895180 0.072899406 2.7375 <2.3 oklawaha

## 20 4 probs Invertebrate 0.516837696 0.031040421 2.7375 <2.3 trafford

## 21 5 probs Invertebrate 0.139678770 0.266063328 2.7375 >2.3 george

## 22 6 probs Invertebrate 0.023071788 0.253432814 2.7375 >2.3 hancock

## 23 7 probs Invertebrate 0.248644081 0.340363100 2.7375 >2.3 oklawaha

## 24 8 probs Invertebrate 0.192961478 0.293280651 2.7375 >2.3 trafford

## 25 1 probs Other 0.093801903 0.030785528 2.7375 <2.3 george

## 26 2 probs Other 0.253741634 0.025649228 2.7375 <2.3 hancock

## 27 3 probs Other 0.053872406 0.048740534 2.7375 <2.3 oklawaha

## 28 4 probs Other 0.174203303 0.287237268 2.7375 <2.3 trafford

## 29 5 probs Other 0.097911930 0.005970406 2.7375 >2.3 george

## 30 6 probs Other 0.194008990 0.008762261 2.7375 >2.3 hancock

## 31 7 probs Other 0.068662061 0.007119469 2.7375 >2.3 oklawaha

## 32 8 probs Other 0.200662408 0.106641288 2.7375 >2.3 trafford

## 33 1 probs Reptile 0.011566408 0.137747240 2.7375 <2.3 george

## 34 2 probs Reptile 0.047455313 0.011939732 2.7375 <2.3 hancock

## 35 3 probs Reptile 0.077226908 0.055750872 2.7375 <2.3 oklawaha

## 36 4 probs Reptile 0.088766727 0.321998572 2.7375 <2.3 trafford

## 37 5 probs Reptile 0.023898795 0.025910578 2.7375 >2.3 george

## 38 6 probs Reptile 0.071823819 0.003294197 2.7375 >2.3 hancock

## 39 7 probs Reptile 0.194837540 0.005228529 2.7375 >2.3 oklawaha

## 40 8 probs Reptile 0.202401335 0.119901577 2.7375 >2.3 trafford

This is, you’ll remember, long, with one row for each diet (in group), so we need to select the columns of interest to us, and then pivot wider:

predictions(gators.2, newdata = new, type = "probs") %>%

select(rowid, group, predicted, Size, Lake) %>%

pivot_wider(names_from = group, values_from = predicted)

## # A tibble: 8 × 8

## rowid Size Lake Bird Fish Invertebrate Other Reptile

## <int> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 <2.3 george 0.0297 0.452 0.413 0.0938 0.0116

## 2 2 <2.3 hancock 0.0704 0.535 0.0931 0.254 0.0475

## 3 3 <2.3 oklawaha 0.00882 0.258 0.602 0.0539 0.0772

## 4 4 <2.3 trafford 0.0359 0.184 0.517 0.174 0.0888

## 5 5 >2.3 george 0.0811 0.657 0.140 0.0979 0.0239

## 6 6 >2.3 hancock 0.141 0.570 0.0231 0.194 0.0718

## 7 7 >2.3 oklawaha 0.0294 0.458 0.249 0.0687 0.195

## 8 8 >2.3 trafford 0.108 0.296 0.193 0.201 0.202

Either of these two ways is good to get to this point, or some other way that also gets you here.

If you thought that the better model was the one with Gender in it, or you otherwise forgot that you didn’t need Gender, then you needed to do something like this as well:

Genders <- gators %>% distinct(Gender) %>% pull(Gender)

new <- datagrid(model = gators.2, Lake = Lakes, Size = Sizes, Gender = Genders)

## Warning: Some of the variable names are missing from the model data: Gender

new

## Frequency Lake Size Gender

## 1: 2.7375 george <2.3 f

## 2: 2.7375 george <2.3 m

## 3: 2.7375 george >2.3 f

## 4: 2.7375 george >2.3 m

## 5: 2.7375 hancock <2.3 f

## 6: 2.7375 hancock <2.3 m

## 7: 2.7375 hancock >2.3 f

## 8: 2.7375 hancock >2.3 m

## 9: 2.7375 oklawaha <2.3 f

## 10: 2.7375 oklawaha <2.3 m

## 11: 2.7375 oklawaha >2.3 f

## 12: 2.7375 oklawaha >2.3 m

## 13: 2.7375 trafford <2.3 f

## 14: 2.7375 trafford <2.3 m

## 15: 2.7375 trafford >2.3 f

## 16: 2.7375 trafford >2.3 m

If you predict from this using the model without Gender, you’ll get the following:

predictions(gators.2, newdata = new, type = "probs") %>%

select(rowid, group, predicted, Lake, Size, Gender) %>%

pivot_wider(names_from = group, values_from = predicted)

## # A tibble: 16 × 9

## rowid Lake Size Gender Bird Fish Invertebrate Other Reptile

## <int> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 george <2.3 f 0.0297 0.452 0.413 0.0938 0.0116

## 2 2 george <2.3 m 0.0297 0.452 0.413 0.0938 0.0116

## 3 3 george >2.3 f 0.0811 0.657 0.140 0.0979 0.0239

## 4 4 george >2.3 m 0.0811 0.657 0.140 0.0979 0.0239

## 5 5 hancock <2.3 f 0.0704 0.535 0.0931 0.254 0.0475

## 6 6 hancock <2.3 m 0.0704 0.535 0.0931 0.254 0.0475

## 7 7 hancock >2.3 f 0.141 0.570 0.0231 0.194 0.0718

## 8 8 hancock >2.3 m 0.141 0.570 0.0231 0.194 0.0718

## 9 9 oklawaha <2.3 f 0.00882 0.258 0.602 0.0539 0.0772

## 10 10 oklawaha <2.3 m 0.00882 0.258 0.602 0.0539 0.0772

## 11 11 oklawaha >2.3 f 0.0294 0.458 0.249 0.0687 0.195

## 12 12 oklawaha >2.3 m 0.0294 0.458 0.249 0.0687 0.195

## 13 13 trafford <2.3 f 0.0359 0.184 0.517 0.174 0.0888

## 14 14 trafford <2.3 m 0.0359 0.184 0.517 0.174 0.0888

## 15 15 trafford >2.3 f 0.108 0.296 0.193 0.201 0.202

## 16 16 trafford >2.3 m 0.108 0.296 0.193 0.201 0.202

Here, the predictions for each gender are exactly the same, because leaving Gender out of the model means that we take its effect to be exactly zero.

Alternatively, if you really thought the model with Gender was the better one, then you’d do this:

predictions(gators.1, newdata = new, type = "probs") %>%

select(rowid, group, predicted, Lake, Size, Gender) %>%

pivot_wider(names_from = group, values_from = predicted)

## # A tibble: 16 × 9

## rowid Lake Size Gender Bird Fish Invertebrate Other Reptile

## <int> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 george <2.3 f 0.0345 0.393 0.465 0.0940 0.0129

## 2 2 george <2.3 m 0.0240 0.501 0.373 0.0930 0.00879

## 3 3 george >2.3 f 0.105 0.578 0.180 0.103 0.0332

## 4 4 george >2.3 m 0.0679 0.683 0.134 0.0948 0.0209

## 5 5 hancock <2.3 f 0.0792 0.507 0.101 0.261 0.0515

## 6 6 hancock <2.3 m 0.0511 0.601 0.0755 0.240 0.0326

## 7 7 hancock >2.3 f 0.167 0.516 0.0271 0.199 0.0914

## 8 8 hancock >2.3 m 0.110 0.624 0.0206 0.186 0.0591

## 9 9 oklawaha <2.3 f 0.0109 0.215 0.633 0.0527 0.0885

## 10 10 oklawaha <2.3 m 0.00837 0.303 0.564 0.0579 0.0668

## 11 11 oklawaha >2.3 f 0.0378 0.359 0.279 0.0659 0.258

## 12 12 oklawaha >2.3 m 0.0276 0.483 0.236 0.0688 0.185

## 13 13 trafford <2.3 f 0.0438 0.145 0.545 0.165 0.102

## 14 14 trafford <2.3 m 0.0344 0.209 0.494 0.184 0.0782

## 15 15 trafford >2.3 f 0.134 0.213 0.211 0.181 0.261

## 16 16 trafford >2.3 m 0.105 0.305 0.190 0.201 0.199

and this time there is an effect of gender, but it is smallish, as befits an effect that is not significant.45

\(\blacksquare\)

- What do you think is the most important way in which the lakes differ? (Hint: look at where the biggest predicted probabilities are.)

Solution

Here are the predictions again:

preds1

## # A tibble: 8 × 7

## Size Lake Bird Fish Invertebrate Other Reptile

## <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 <2.3 george 0.0297 0.452 0.413 0.0938 0.0116

## 2 <2.3 hancock 0.0704 0.535 0.0931 0.254 0.0475

## 3 <2.3 oklawaha 0.00882 0.258 0.602 0.0539 0.0772

## 4 <2.3 trafford 0.0359 0.184 0.517 0.174 0.0888

## 5 >2.3 george 0.0811 0.657 0.140 0.0979 0.0239

## 6 >2.3 hancock 0.141 0.570 0.0231 0.194 0.0718

## 7 >2.3 oklawaha 0.0294 0.458 0.249 0.0687 0.195

## 8 >2.3 trafford 0.108 0.296 0.193 0.201 0.202

Following my own hint: the preferred diet in George and Hancock lakes is fish, but the preferred diet in Oklawaha and Trafford lakes is (at least sometimes) invertebrates. That is to say, the diet in those last two lakes is less likely to be fish, and more likely to be invertebrates, than it is in the first two (comparing alligators of the same size). This is true for both large and small alligators, as it should be, since there is no interaction in the model. That will do, though you can also note that reptiles are more commonly found in the last two lakes, and birds sometimes appear in the diet in Hancock and Trafford but rarely in the other two lakes.

Another way to think about this is to hold size constant and compare lakes (and then check that it applies to the other size too). In this case, you’d find the biggest predictions among the first four rows, and then check that the pattern persists in the second four rows. (It does.)

I think looking at predicted probabilities like this is the easiest way to see what the model is telling you.
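If you want to follow the hint more mechanically, here is one way, sketched on the rounded numbers re-typed from preds1 above (with the real preds1 you would start the pipeline from preds1 instead; preds and top_food are just illustrative names). It pivots the predictions longer and keeps the food type with the largest predicted probability for each size and lake.

```r
library(tibble)
library(dplyr)
library(tidyr)

# Rounded predicted probabilities, re-typed from the preds1 output above
preds <- tribble(
  ~Size,  ~Lake,      ~Bird,   ~Fish, ~Invertebrate, ~Other, ~Reptile,
  "<2.3", "george",   0.0297,  0.452, 0.413,         0.0938, 0.0116,
  "<2.3", "hancock",  0.0704,  0.535, 0.0931,        0.254,  0.0475,
  "<2.3", "oklawaha", 0.00882, 0.258, 0.602,         0.0539, 0.0772,
  "<2.3", "trafford", 0.0359,  0.184, 0.517,         0.174,  0.0888,
  ">2.3", "george",   0.0811,  0.657, 0.140,         0.0979, 0.0239,
  ">2.3", "hancock",  0.141,   0.570, 0.0231,        0.194,  0.0718,
  ">2.3", "oklawaha", 0.0294,  0.458, 0.249,         0.0687, 0.195,
  ">2.3", "trafford", 0.108,   0.296, 0.193,         0.201,  0.202
)

# Long format, then keep the most probable food type for each Size/Lake
top_food <- preds %>%
  pivot_longer(Bird:Reptile, names_to = "Food", values_to = "prob") %>%
  group_by(Size, Lake) %>%
  slice_max(prob, n = 1) %>%
  ungroup()
top_food
```

The most likely food type comes out as Invertebrate only for small alligators in Oklawaha and Trafford, and Fish everywhere else, in line with the discussion of the lakes above.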

\(\blacksquare\)

- How would you describe the major difference between the diets of the small and large alligators?

Solution

Same idea: hold lake constant, and compare small and large, then check that your conclusion holds for the other lakes, as it should. For example, in George Lake, the large alligators are more likely to eat fish, and less likely to eat invertebrates, compared to the small ones. The other food types are not that much different, though you might also note that birds appear more in the diets of large alligators than small ones. Does that hold in the other lakes? I think so, though there is less difference for fish in Hancock Lake than in the others (in Hancock, invertebrates are rare for both sizes). Birds don’t commonly appear in any alligator’s diet, but where they do, they are commoner for large alligators than small ones.

\(\blacksquare\)

28.10 Crimes in San Francisco

The data in link is a subset of a huge dataset of crimes committed in San Francisco between 2003 and 2015. The variables are:

- Dates: the date and time of the crime.
- Category: the category of crime, e.g. “larceny” or “vandalism” (response).
- Descript: detailed description of the crime.
- DayOfWeek: the day of the week of the crime.
- PdDistrict: the name of the San Francisco Police Department district in which the crime took place.
- Resolution: how the crime was resolved.
- Address: approximate street address of the crime.
- X: longitude.
- Y: latitude.

Our aim is to see whether the category of crime depends on the day of the week and the district in which it occurred. However, there are a lot of crime categories, so we will focus on the top four “interesting” ones, which are the ones included in this data file.

Some of the model-fitting takes a while (you’ll see why below). If you’re using R Markdown, you can wait for the models to fit each time you re-run your document, or insert cache=T in the top line of your code chunk (the one with r in curly brackets in it, above the actual code). Put a comma and the cache=T inside the curly brackets. What that does is to re-run that code chunk only if it changes; if it hasn’t changed, it will use the saved results from the last time it was run. That can save you a lot of waiting around.

- Read in the data and display the dataset (or, at least, part of it).

Solution

The usual:

my_url <- "http://utsc.utoronto.ca/~butler/d29/sfcrime1.csv"

sfcrime <- read_csv(my_url)

## Rows: 359528 Columns: 3

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: ","

## chr (3): Category, DayOfWeek, PdDistrict

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

sfcrime

## # A tibble: 359,528 × 3

## Category DayOfWeek PdDistrict

## <chr> <chr> <chr>

## 1 LARCENY/THEFT Wednesday NORTHERN

## 2 LARCENY/THEFT Wednesday PARK

## 3 LARCENY/THEFT Wednesday INGLESIDE

## 4 VEHICLE THEFT Wednesday INGLESIDE

## 5 VEHICLE THEFT Wednesday BAYVIEW

## 6 LARCENY/THEFT Wednesday RICHMOND

## 7 LARCENY/THEFT Wednesday CENTRAL

## 8 LARCENY/THEFT Wednesday CENTRAL

## 9 LARCENY/THEFT Wednesday NORTHERN

## 10 ASSAULT Wednesday INGLESIDE

## # … with 359,518 more rows

This is a tidied-up version of the data, with only the variables we’ll look at, and only the observations from one of the “big four” crimes, a mere 360,000 or so of them. This is the data set we created earlier.

\(\blacksquare\)

- Fit a multinomial logistic regression that predicts crime category from day of week and district. (You don’t need to look at it.) The model-fitting produces some output. (If you’re using R Markdown, that will come with it.)

Solution

The modelling part is easy enough, as long as you can get the uppercase letters in the right places:

sfcrime.1 <- multinom(Category ~ DayOfWeek + PdDistrict, data = sfcrime)

## # weights: 68 (48 variable)

## initial value 498411.639069

## iter 10 value 430758.073422

## iter 20 value 430314.270403

## iter 30 value 423303.587698

## iter 40 value 420883.528523

## iter 50 value 418355.242764

## final value 418149.979622

## converged

\(\blacksquare\)

- Fit a model that predicts Category from only the district. Hand in the output from the fitting process as well.

Solution

Same idea. Write it out, or use update:

sfcrime.2 <- update(sfcrime.1, . ~ . - DayOfWeek)

## # weights: 44 (30 variable)

## initial value 498411.639069

## iter 10 value 426003.543845

## iter 20 value 425542.806828

## iter 30 value 421715.787609

## final value 418858.235297

## converged

\(\blacksquare\)

- Use anova to compare the two models you just obtained. What does the anova tell you?

Solution

This:

anova(sfcrime.2, sfcrime.1)

## Likelihood ratio tests of Multinomial Models

##

## Response: Category

## Model Resid. df Resid. Dev Test Df LR stat. Pr(Chi)

## 1 PdDistrict 1078554 837716.5

## 2 DayOfWeek + PdDistrict 1078536 836300.0 1 vs 2 18 1416.511 0

This P-value is so small that it displays as 0. The null hypothesis is that the smaller model fits as well as the bigger one, and this is clearly rejected. We need the bigger model: that is, we need to keep DayOfWeek in there, because the pattern of crimes (in each district) differs over the days of the week.

One reason the P-value came out so small is that we have a ton of data, so that even a very small difference between days of the week could come out very strongly significant. The Machine Learning people (this is a machine learning dataset) don’t worry so much about tests for that reason: they are more concerned with predicting things well, so they just throw everything into the model and see what comes out.
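The numbers in the two fitting logs and in the anova table hang together, and the arithmetic is short enough to check. The sketch below only re-types values printed above (it does not refit anything). It assumes 7 days and 10 police districts, which is what the parameter counts of 48 and 30 imply with four crime categories.

```r
# Values re-typed from the multinom fitting logs and the anova output above
n <- 359528       # number of crimes (rows of sfcrime)
K <- 4            # crime categories
n_days <- 7       # assumed: implied by the 48-parameter count
n_districts <- 10 # assumed: implied by the 30-parameter count

# Free parameters: (K - 1) logits, each with an intercept plus dummy variables
p_full    <- (K - 1) * (1 + (n_days - 1) + (n_districts - 1))  # DayOfWeek + PdDistrict
p_reduced <- (K - 1) * (1 + (n_districts - 1))                  # PdDistrict only

# Residual deviance is twice the "final value" printed by multinom
dev_full    <- 2 * 418149.979622
dev_reduced <- 2 * 418858.235297

# Likelihood ratio test: difference in deviances on difference in parameters
lr_stat <- dev_reduced - dev_full
lr_df   <- p_full - p_reduced
p_value <- pchisq(lr_stat, lr_df, lower.tail = FALSE)

c(p_full = p_full, p_reduced = p_reduced, df = lr_df, lr_stat = lr_stat)
p_value

# The "initial value" in both logs is consistent with every category
# starting at probability 1/K: n * log(K)
n * log(K)
```

The P-value is not literally zero; it is just far smaller than anything the anova table bothers to print.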

\(\blacksquare\)

- Using your preferred model, obtain predicted probabilities that a crime will be of each of these four categories for each day of the week in the TENDERLOIN district (the name is ALL CAPS). This will mean constructing a data frame to predict from, obtaining the predictions and then displaying them suitably.

Solution

There is an easier way, which takes a bit of adapting (and takes a bit of time to run):

p <- predictions(sfcrime.1, variables = c("DayOfWeek", "PdDistrict"), type = "probs")

p

## rowid type group predicted std.error Category DayOfWeek

## 1 1 probs ASSAULT 0.16740047 0.004249022 LARCENY/THEFT Wednesday

## 2 2 probs ASSAULT 0.17495015 0.007700747 LARCENY/THEFT Wednesday

## 3 3 probs ASSAULT 0.27563912 0.004316150 LARCENY/THEFT Wednesday

## 4 4 probs ASSAULT 0.29800440 0.004808902 LARCENY/THEFT Wednesday

## 5 5 probs ASSAULT 0.17099635 0.007372097 LARCENY/THEFT Wednesday

## 6 6 probs ASSAULT 0.17783225 0.004947000 LARCENY/THEFT Wednesday

## 7 7 probs ASSAULT 0.21299344 0.005677917 LARCENY/THEFT Wednesday

## 8 8 probs ASSAULT 0.17038113 0.006150633 LARCENY/THEFT Wednesday

## 9 9 probs ASSAULT 0.18748667 0.007646783 LARCENY/THEFT Wednesday

## 10 10 probs ASSAULT 0.23214690 0.003291730 LARCENY/THEFT Wednesday

## 11 11 probs ASSAULT 0.16766532 0.002257512 LARCENY/THEFT Tuesday

## 12 12 probs ASSAULT 0.17593330 0.003469069 LARCENY/THEFT Tuesday

## 13 13 probs ASSAULT 0.27614457 0.001866336 LARCENY/THEFT Tuesday

## 14 14 probs ASSAULT 0.30028506 0.001571976 LARCENY/THEFT Tuesday

## 15 15 probs ASSAULT 0.17069994 0.003170870 LARCENY/THEFT Tuesday

## 16 16 probs ASSAULT 0.17733529 0.002258191 LARCENY/THEFT Tuesday

## 17 17 probs ASSAULT 0.21285423 0.002724312 LARCENY/THEFT Tuesday

## 18 18 probs ASSAULT 0.17126617 0.003098840 LARCENY/THEFT Tuesday

## 19 19 probs ASSAULT 0.19424470 0.004026416 LARCENY/THEFT Tuesday

## 20 20 probs ASSAULT 0.23480816 0.001286873 LARCENY/THEFT Tuesday

## 21 21 probs ASSAULT 0.17389840 0.005326866 LARCENY/THEFT Monday

## 22 22 probs ASSAULT 0.18249497 0.008362492 LARCENY/THEFT Monday

## 23 23 probs ASSAULT 0.28336267 0.004541134 LARCENY/THEFT Monday

## 24 24 probs ASSAULT 0.30985102 0.006048981 LARCENY/THEFT Monday

## 25 25 probs ASSAULT 0.17601746 0.008187888 LARCENY/THEFT Monday

## 26 26 probs ASSAULT 0.18337060 0.005174147 LARCENY/THEFT Monday

## 27 27 probs ASSAULT 0.21906616 0.006007794 LARCENY/THEFT Monday

## 28 28 probs ASSAULT 0.17845671 0.006830243 LARCENY/THEFT Monday

## 29 29 probs ASSAULT 0.20724299 0.008414602 LARCENY/THEFT Monday

## 30 30 probs ASSAULT 0.24433533 0.003468537 LARCENY/THEFT Monday

## 31 31 probs ASSAULT 0.19696492 0.003559191 LARCENY/THEFT Sunday

## 32 32 probs ASSAULT 0.20800510 0.005728635 LARCENY/THEFT Sunday

## 33 33 probs ASSAULT 0.31443475 0.003774785 LARCENY/THEFT Sunday

## 34 34 probs ASSAULT 0.34749104 0.003963707 LARCENY/THEFT Sunday

## 35 35 probs ASSAULT 0.19736750 0.005492126 LARCENY/THEFT Sunday

## 36 36 probs ASSAULT 0.20540858 0.004071987 LARCENY/THEFT Sunday

## 37 37 probs ASSAULT 0.24446582 0.005088369 LARCENY/THEFT Sunday

## 38 38 probs ASSAULT 0.20397995 0.005591464 LARCENY/THEFT Sunday

## 39 39 probs ASSAULT 0.25485968 0.007256869 LARCENY/THEFT Sunday

## 40 40 probs ASSAULT 0.27984344 0.002676152 LARCENY/THEFT Sunday

## 41 41 probs ASSAULT 0.18092763 0.004311834 LARCENY/THEFT Saturday

## 42 42 probs ASSAULT 0.19195798 0.006726984 LARCENY/THEFT Saturday

## 43 43 probs ASSAULT 0.29267736 0.005522881 LARCENY/THEFT Saturday

## 44 44 probs ASSAULT 0.32591927 0.004402369 LARCENY/THEFT Saturday

## 45 45 probs ASSAULT 0.18093521 0.006584115 LARCENY/THEFT Saturday

## 46 46 probs ASSAULT 0.18820496 0.005898019 LARCENY/THEFT Saturday

## 47 47 probs ASSAULT 0.22541501 0.006543871 LARCENY/THEFT Saturday

## 48 48 probs ASSAULT 0.18797843 0.007245661 LARCENY/THEFT Saturday

## 49 49 probs ASSAULT 0.24259978 0.008750468 LARCENY/THEFT Saturday

## 50 50 probs ASSAULT 0.26134330 0.003953550 LARCENY/THEFT Saturday

## 51 51 probs ASSAULT 0.16632335 0.003055645 LARCENY/THEFT Friday

## 52 52 probs ASSAULT 0.17517886 0.005516369 LARCENY/THEFT Friday

## 53 53 probs ASSAULT 0.27029845 0.003146449 LARCENY/THEFT Friday

## 54 54 probs ASSAULT 0.29998131 0.002873313 LARCENY/THEFT Friday

## 55 55 probs ASSAULT 0.16663037 0.005263419 LARCENY/THEFT Friday

## 56 56 probs ASSAULT 0.17433914 0.003450540 LARCENY/THEFT Friday

## 57 57 probs ASSAULT 0.20798507 0.004412468 LARCENY/THEFT Friday

## 58 58 probs ASSAULT 0.17233336 0.004688380 LARCENY/THEFT Friday

## 59 59 probs ASSAULT 0.21259967 0.006090589 LARCENY/THEFT Friday

## 60 60 probs ASSAULT 0.23824733 0.002408765 LARCENY/THEFT Friday

## 61 61 probs ASSAULT 0.16586163 0.005495554 LARCENY/THEFT Thursday

## 62 62 probs ASSAULT 0.17387271 0.007175742 LARCENY/THEFT Thursday

## 63 63 probs ASSAULT 0.27237141 0.004860729 LARCENY/THEFT Thursday

## 64 64 probs ASSAULT 0.29706897 0.005153000 LARCENY/THEFT Thursday

## 65 65 probs ASSAULT 0.16838408 0.006982086 LARCENY/THEFT Thursday

## 66 66 probs ASSAULT 0.17539267 0.005469551 LARCENY/THEFT Thursday

## 67 67 probs ASSAULT 0.20998973 0.006113640 LARCENY/THEFT Thursday

## 68 68 probs ASSAULT 0.16979145 0.006498304 LARCENY/THEFT Thursday

## 69 69 probs ASSAULT 0.19387537 0.007413903 LARCENY/THEFT Thursday

## 70 70 probs ASSAULT 0.23270297 0.003658268 LARCENY/THEFT Thursday

## 71 1 probs DRUG/NARCOTIC 0.11430177 0.006284541 LARCENY/THEFT Wednesday

## 72 2 probs DRUG/NARCOTIC 0.16067021 0.003709099 LARCENY/THEFT Wednesday

## 73 3 probs DRUG/NARCOTIC 0.09574189 0.002921544 LARCENY/THEFT Wednesday

## 74 4 probs DRUG/NARCOTIC 0.17019228 0.003543725 LARCENY/THEFT Wednesday

## 75 5 probs DRUG/NARCOTIC 0.06670490 0.003813318 LARCENY/THEFT Wednesday

## 76 6 probs DRUG/NARCOTIC 0.05827926 0.002740684 LARCENY/THEFT Wednesday

## 77 7 probs DRUG/NARCOTIC 0.07436918 0.003443478 LARCENY/THEFT Wednesday

## 78 8 probs DRUG/NARCOTIC 0.16282479 0.003402016 LARCENY/THEFT Wednesday

## 79 9 probs DRUG/NARCOTIC 0.54002868 0.002666268 LARCENY/THEFT Wednesday

## 80 10 probs DRUG/NARCOTIC 0.22943594 0.004915437 LARCENY/THEFT Wednesday

## 81 11 probs DRUG/NARCOTIC 0.10657874 0.008527641 LARCENY/THEFT Tuesday

## 82 12 probs DRUG/NARCOTIC 0.15041812 0.007622952 LARCENY/THEFT Tuesday

## 83 13 probs DRUG/NARCOTIC 0.08929533 0.008338873 LARCENY/THEFT Tuesday

## 84 14 probs DRUG/NARCOTIC 0.15965480 0.007334967 LARCENY/THEFT Tuesday

## 85 15 probs DRUG/NARCOTIC 0.06199196 0.008108777 LARCENY/THEFT Tuesday

## 86 16 probs DRUG/NARCOTIC 0.05410405 0.007388529 LARCENY/THEFT Tuesday

## 87 17 probs DRUG/NARCOTIC 0.06918948 0.008831074 LARCENY/THEFT Tuesday

## 88 18 probs DRUG/NARCOTIC 0.15237078 0.008574787 LARCENY/THEFT Tuesday

## 89 19 probs DRUG/NARCOTIC 0.52086682 0.007446236 LARCENY/THEFT Tuesday

## 90 20 probs DRUG/NARCOTIC 0.21604430 0.010113856 LARCENY/THEFT Tuesday

## 91 21 probs DRUG/NARCOTIC 0.09948621 0.005230659 LARCENY/THEFT Monday

## 92 22 probs DRUG/NARCOTIC 0.14042453 0.002905915 LARCENY/THEFT Monday

## 93 23 probs DRUG/NARCOTIC 0.08246598 0.002204010 LARCENY/THEFT Monday

## 94 24 probs DRUG/NARCOTIC 0.14826586 0.002799410 LARCENY/THEFT Monday

## 95 25 probs DRUG/NARCOTIC 0.05753044 0.002999987 LARCENY/THEFT Monday

## 96 26 probs DRUG/NARCOTIC 0.05035056 0.002009011 LARCENY/THEFT Monday

## 97 27 probs DRUG/NARCOTIC 0.06408746 0.002602336 LARCENY/THEFT Monday

## 98 28 probs DRUG/NARCOTIC 0.14289037 0.002553548 LARCENY/THEFT Monday

## 99 29 probs DRUG/NARCOTIC 0.50014661 0.001959300 LARCENY/THEFT Monday

## 100 30 probs DRUG/NARCOTIC 0.20232795 0.003720120 LARCENY/THEFT Monday

## 101 31 probs DRUG/NARCOTIC 0.07854536 0.008107965 LARCENY/THEFT Sunday

## 102 32 probs DRUG/NARCOTIC 0.11156563 0.005288932 LARCENY/THEFT Sunday

## 103 33 probs DRUG/NARCOTIC 0.06378625 0.004092791 LARCENY/THEFT Sunday

## 104 34 probs DRUG/NARCOTIC 0.11590340 0.005041854 LARCENY/THEFT Sunday

## 105 35 probs DRUG/NARCOTIC 0.04496576 0.005449351 LARCENY/THEFT Sunday

## 106 36 probs DRUG/NARCOTIC 0.03931493 0.003862875 LARCENY/THEFT Sunday

## 107 37 probs DRUG/NARCOTIC 0.04985173 0.004826396 LARCENY/THEFT Sunday

## 108 38 probs DRUG/NARCOTIC 0.11384708 0.004732057 LARCENY/THEFT Sunday

## 109 39 probs DRUG/NARCOTIC 0.42872910 0.003948120 LARCENY/THEFT Sunday

## 110 40 probs DRUG/NARCOTIC 0.16152847 0.006018277 LARCENY/THEFT Sunday

## 111 41 probs DRUG/NARCOTIC 0.07426621 0.008310284 LARCENY/THEFT Saturday

## 112 42 probs DRUG/NARCOTIC 0.10597839 0.005931533 LARCENY/THEFT Saturday

## 113 43 probs DRUG/NARCOTIC 0.06111395 0.005196966 LARCENY/THEFT Saturday

## 114 44 probs DRUG/NARCOTIC 0.11189671 0.005614768 LARCENY/THEFT Saturday

## 115 45 probs DRUG/NARCOTIC 0.04243108 0.006053404 LARCENY/THEFT Saturday

## 116 46 probs DRUG/NARCOTIC 0.03707872 0.005344226 LARCENY/THEFT Saturday

## 117 47 probs DRUG/NARCOTIC 0.04731509 0.005799895 LARCENY/THEFT Saturday

## 118 48 probs DRUG/NARCOTIC 0.10799338 0.005347127 LARCENY/THEFT Saturday

## 119 49 probs DRUG/NARCOTIC 0.42007509 0.004293186 LARCENY/THEFT Saturday

## 120 50 probs DRUG/NARCOTIC 0.15527446 0.008273414 LARCENY/THEFT Saturday

## 121 51 probs DRUG/NARCOTIC 0.08638758 0.006242735 LARCENY/THEFT Friday

## 122 52 probs DRUG/NARCOTIC 0.12237835 0.003771573 LARCENY/THEFT Friday

## 123 53 probs DRUG/NARCOTIC 0.07141782 0.002931476 LARCENY/THEFT Friday

## 124 54 probs DRUG/NARCOTIC 0.13032065 0.003578776 LARCENY/THEFT Friday

## 125 55 probs DRUG/NARCOTIC 0.04944552 0.003915027 LARCENY/THEFT Friday

## 126 56 probs DRUG/NARCOTIC 0.04346106 0.002744315 LARCENY/THEFT Friday

## 127 57 probs DRUG/NARCOTIC 0.05524090 0.003446647 LARCENY/THEFT Friday

## 128 58 probs DRUG/NARCOTIC 0.12527668 0.003379966 LARCENY/THEFT Friday

## 129 59 probs DRUG/NARCOTIC 0.46581221 0.002597267 LARCENY/THEFT Friday

## 130 60 probs DRUG/NARCOTIC 0.17911356 0.004828833 LARCENY/THEFT Friday

## 131 61 probs DRUG/NARCOTIC 0.10504150 0.006327675 LARCENY/THEFT Thursday

## 132 62 probs DRUG/NARCOTIC 0.14810551 0.003638651 LARCENY/THEFT Thursday

## 133 63 probs DRUG/NARCOTIC 0.08774885 0.002902641 LARCENY/THEFT Thursday

## 134 64 probs DRUG/NARCOTIC 0.15735959 0.003600833 LARCENY/THEFT Thursday

## 135 65 probs DRUG/NARCOTIC 0.06092432 0.003776777 LARCENY/THEFT Thursday

## 136 66 probs DRUG/NARCOTIC 0.05331308 0.002908602 LARCENY/THEFT Thursday

## 137 67 probs DRUG/NARCOTIC 0.06800542 0.003052086 LARCENY/THEFT Thursday

## 138 68 probs DRUG/NARCOTIC 0.15049900 0.002863440 LARCENY/THEFT Thursday

## 139 69 probs DRUG/NARCOTIC 0.51795000 0.001949593 LARCENY/THEFT Thursday

## 140 70 probs DRUG/NARCOTIC 0.21331394 0.004079474 LARCENY/THEFT Thursday

## 141 1 probs LARCENY/THEFT 0.59175834 0.007984061 LARCENY/THEFT Wednesday

## 142 2 probs LARCENY/THEFT 0.46733060 0.006390188 LARCENY/THEFT Wednesday

## 143 3 probs LARCENY/THEFT 0.33934630 0.005653598 LARCENY/THEFT Wednesday

## 144 4 probs LARCENY/THEFT 0.31385481 0.005484272 LARCENY/THEFT Wednesday

## 145 5 probs LARCENY/THEFT 0.54243172 0.006886299 LARCENY/THEFT Wednesday

## 146 6 probs LARCENY/THEFT 0.65657190 0.004909500 LARCENY/THEFT Wednesday

## 147 7 probs LARCENY/THEFT 0.47347628 0.008217094 LARCENY/THEFT Wednesday

## 148 8 probs LARCENY/THEFT 0.60079333 0.007680014 LARCENY/THEFT Wednesday

## 149 9 probs LARCENY/THEFT 0.24794975 0.009270139 LARCENY/THEFT Wednesday

## 150 10 probs LARCENY/THEFT 0.38966273 0.007904725 LARCENY/THEFT Wednesday

## 151 11 probs LARCENY/THEFT 0.59833785 0.005173013 LARCENY/THEFT Tuesday

## 152 12 probs LARCENY/THEFT 0.47443144 0.005660640 LARCENY/THEFT Tuesday

## 153 13 probs LARCENY/THEFT 0.34320556 0.005313942 LARCENY/THEFT Tuesday

## 154 14 probs LARCENY/THEFT 0.31926799 0.005081944 LARCENY/THEFT Tuesday

## 155 15 probs LARCENY/THEFT 0.54664723 0.005920510 LARCENY/THEFT Tuesday

## 156 16 probs LARCENY/THEFT 0.66097109 0.004468250 LARCENY/THEFT Tuesday

## 157 17 probs LARCENY/THEFT 0.47767204 0.007550911 LARCENY/THEFT Tuesday

## 158 18 probs LARCENY/THEFT 0.60966429 0.007305707 LARCENY/THEFT Tuesday

## 159 19 probs LARCENY/THEFT 0.25933313 0.008657594 LARCENY/THEFT Tuesday

## 160 20 probs LARCENY/THEFT 0.39788238 0.007471666 LARCENY/THEFT Tuesday

## 161 21 probs LARCENY/THEFT 0.59573012 0.009559346 LARCENY/THEFT Monday

## 162 22 probs LARCENY/THEFT 0.47241866 0.008177660 LARCENY/THEFT Monday

## 163 23 probs LARCENY/THEFT 0.33807352 0.006940633 LARCENY/THEFT Monday

## 164 24 probs LARCENY/THEFT 0.31624620 0.006927657 LARCENY/THEFT Monday

## 165 25 probs LARCENY/THEFT 0.54110340 0.008026769 LARCENY/THEFT Monday

## 166 26 probs LARCENY/THEFT 0.65609650 0.006192411 LARCENY/THEFT Monday

## 167 27 probs LARCENY/THEFT 0.47192561 0.009510114 LARCENY/THEFT Monday

## 168 28 probs LARCENY/THEFT 0.60982156 0.008758318 LARCENY/THEFT Monday

## 169 29 probs LARCENY/THEFT 0.26560692 0.009942019 LARCENY/THEFT Monday

## 170 30 probs LARCENY/THEFT 0.39744635 0.008844026 LARCENY/THEFT Monday

## 171 31 probs LARCENY/THEFT 0.59260407 0.009033232 LARCENY/THEFT Sunday

## 172 32 probs LARCENY/THEFT 0.47290283 0.007531301 LARCENY/THEFT Sunday

## 173 33 probs LARCENY/THEFT 0.32947377 0.006474767 LARCENY/THEFT Sunday

## 174 34 probs LARCENY/THEFT 0.31148545 0.006792907 LARCENY/THEFT Sunday

## 175 35 probs LARCENY/THEFT 0.53287082 0.007784299 LARCENY/THEFT Sunday

## 176 36 probs LARCENY/THEFT 0.64547345 0.005651106 LARCENY/THEFT Sunday

## 177 37 probs LARCENY/THEFT 0.46252816 0.008448728 LARCENY/THEFT Sunday

## 178 38 probs LARCENY/THEFT 0.61218000 0.009038393 LARCENY/THEFT Sunday

## 179 39 probs LARCENY/THEFT 0.28686823 0.010263234 LARCENY/THEFT Sunday

## 180 40 probs LARCENY/THEFT 0.39978745 0.009370157 LARCENY/THEFT Sunday

## 181 41 probs LARCENY/THEFT 0.61012247 0.008609439 LARCENY/THEFT Saturday

## 182 42 probs LARCENY/THEFT 0.48914822 0.007464901 LARCENY/THEFT Saturday

## 183 43 probs LARCENY/THEFT 0.34372871 0.007305465 LARCENY/THEFT Saturday

## 184 44 probs LARCENY/THEFT 0.32744667 0.006962282 LARCENY/THEFT Saturday

## 185 45 probs LARCENY/THEFT 0.54752727 0.007613625 LARCENY/THEFT Saturday

## 186 46 probs LARCENY/THEFT 0.66286830 0.005204388 LARCENY/THEFT Saturday

## 187 47 probs LARCENY/THEFT 0.47801249 0.009458660 LARCENY/THEFT Saturday

## 188 48 probs LARCENY/THEFT 0.63231874 0.009280756 LARCENY/THEFT Saturday

## 189 49 probs LARCENY/THEFT 0.30606108 0.011494559 LARCENY/THEFT Saturday

## 190 50 probs LARCENY/THEFT 0.41846755 0.009767265 LARCENY/THEFT Saturday

## 191 51 probs LARCENY/THEFT 0.60806299 0.007546729 LARCENY/THEFT Friday

## 192 52 probs LARCENY/THEFT 0.48394860 0.005984849 LARCENY/THEFT Friday

## 193 53 probs LARCENY/THEFT 0.34415452 0.005240767 LARCENY/THEFT Friday

## 194 54 probs LARCENY/THEFT 0.32674427 0.004696771 LARCENY/THEFT Friday

## 195 55 probs LARCENY/THEFT 0.54666349 0.006314808 LARCENY/THEFT Friday

## 196 56 probs LARCENY/THEFT 0.66569350 0.004751163 LARCENY/THEFT Friday

## 197 57 probs LARCENY/THEFT 0.47815843 0.007875598 LARCENY/THEFT Friday

## 198 58 probs LARCENY/THEFT 0.62846433 0.006871688 LARCENY/THEFT Friday

## 199 59 probs LARCENY/THEFT 0.29077933 0.007962724 LARCENY/THEFT Friday

## 200 60 probs LARCENY/THEFT 0.41358206 0.007362817 LARCENY/THEFT Friday

## 201 61 probs LARCENY/THEFT 0.59859147 0.010281887 LARCENY/THEFT Thursday

## 202 62 probs LARCENY/THEFT 0.47417453 0.008878386 LARCENY/THEFT Thursday

## 203 63 probs LARCENY/THEFT 0.34234239 0.008578470 LARCENY/THEFT Thursday

## 204 64 probs LARCENY/THEFT 0.31941867 0.007577982 LARCENY/THEFT Thursday

## 205 65 probs LARCENY/THEFT 0.54532596 0.009163512 LARCENY/THEFT Thursday

## 206 66 probs LARCENY/THEFT 0.66111973 0.008568597 LARCENY/THEFT Thursday

## 207 67 probs LARCENY/THEFT 0.47657026 0.009999979 LARCENY/THEFT Thursday

## 208 68 probs LARCENY/THEFT 0.61124644 0.008665315 LARCENY/THEFT Thursday

## 209 69 probs LARCENY/THEFT 0.26176575 0.008555238 LARCENY/THEFT Thursday

## 210 70 probs LARCENY/THEFT 0.39877214 0.008349837 LARCENY/THEFT Thursday

## 211 1 probs VEHICLE THEFT 0.12653942 0.004778899 LARCENY/THEFT Wednesday

## 212 2 probs VEHICLE THEFT 0.19704904 0.005426455 LARCENY/THEFT Wednesday

## 213 3 probs VEHICLE THEFT 0.28927268 0.007319912 LARCENY/THEFT Wednesday

## 214 4 probs VEHICLE THEFT 0.21794851 0.004875745 LARCENY/THEFT Wednesday

## 215 5 probs VEHICLE THEFT 0.21986703 0.005971657 LARCENY/THEFT Wednesday

## 216 6 probs VEHICLE THEFT 0.10731659 0.007864579 LARCENY/THEFT Wednesday

## 217 7 probs VEHICLE THEFT 0.23916110 0.008140716 LARCENY/THEFT Wednesday

## 218 8 probs VEHICLE THEFT 0.06600076 0.005079759 LARCENY/THEFT Wednesday

## 219 9 probs VEHICLE THEFT 0.02453490 0.002094417 LARCENY/THEFT Wednesday

## 220 10 probs VEHICLE THEFT 0.14875443 0.006893103 LARCENY/THEFT Wednesday

## 221 11 probs VEHICLE THEFT 0.12741809 0.003022617 LARCENY/THEFT Tuesday

## 222 12 probs VEHICLE THEFT 0.19921715 0.004393776 LARCENY/THEFT Tuesday

## 223 13 probs VEHICLE THEFT 0.29135453 0.008232705 LARCENY/THEFT Tuesday

## 224 14 probs VEHICLE THEFT 0.22079215 0.004383307 LARCENY/THEFT Tuesday

## 225 15 probs VEHICLE THEFT 0.22066087 0.004907536 LARCENY/THEFT Tuesday

## 226 16 probs VEHICLE THEFT 0.10758957 0.008930829 LARCENY/THEFT Tuesday

## 227 17 probs VEHICLE THEFT 0.24028425 0.007038654 LARCENY/THEFT Tuesday

## 228 18 probs VEHICLE THEFT 0.06669875 0.004251317 LARCENY/THEFT Tuesday

## 229 19 probs VEHICLE THEFT 0.02555535 0.001469659 LARCENY/THEFT Tuesday

## 230 20 probs VEHICLE THEFT 0.15126516 0.006274676 LARCENY/THEFT Tuesday

## 231 21 probs VEHICLE THEFT 0.13088527 0.005619880 LARCENY/THEFT Monday

## 232 22 probs VEHICLE THEFT 0.20466185 0.006133126 LARCENY/THEFT Monday

## 233 23 probs VEHICLE THEFT 0.29609782 0.008063400 LARCENY/THEFT Monday

## 234 24 probs VEHICLE THEFT 0.22563692 0.005675618 LARCENY/THEFT Monday

## 235 25 probs VEHICLE THEFT 0.22534869 0.006536327 LARCENY/THEFT Monday

## 236 26 probs VEHICLE THEFT 0.11018235 0.008545907 LARCENY/THEFT Monday

## 237 27 probs VEHICLE THEFT 0.24492077 0.008849760 LARCENY/THEFT Monday

## 238 28 probs VEHICLE THEFT 0.06883136 0.005615097 LARCENY/THEFT Monday

## 239 29 probs VEHICLE THEFT 0.02700348 0.002201869 LARCENY/THEFT Monday

## 240 30 probs VEHICLE THEFT 0.15589038 0.007687735 LARCENY/THEFT Monday

## 241 31 probs VEHICLE THEFT 0.13188565 0.006641383 LARCENY/THEFT Sunday

## 242 32 probs VEHICLE THEFT 0.20752645 0.007785445 LARCENY/THEFT Sunday

## 243 33 probs VEHICLE THEFT 0.29230523 0.009429464 LARCENY/THEFT Sunday

## 244 34 probs VEHICLE THEFT 0.22512011 0.007509519 LARCENY/THEFT Sunday

## 245 35 probs VEHICLE THEFT 0.22479591 0.008327058 LARCENY/THEFT Sunday

## 246 36 probs VEHICLE THEFT 0.10980304 0.010221023 LARCENY/THEFT Sunday

## 247 37 probs VEHICLE THEFT 0.24315429 0.009800940 LARCENY/THEFT Sunday

## 248 38 probs VEHICLE THEFT 0.06999296 0.007301930 LARCENY/THEFT Sunday

## 249 39 probs VEHICLE THEFT 0.02954299 0.002952967 LARCENY/THEFT Sunday

## 250 40 probs VEHICLE THEFT 0.15884064 0.008979404 LARCENY/THEFT Sunday

## 251 41 probs VEHICLE THEFT 0.13468369 0.004122828 LARCENY/THEFT Saturday

## 252 42 probs VEHICLE THEFT 0.21291541 0.005337961 LARCENY/THEFT Saturday

## 253 43 probs VEHICLE THEFT 0.30247998 0.009106063 LARCENY/THEFT Saturday

## 254 44 probs VEHICLE THEFT 0.23473735 0.005329537 LARCENY/THEFT Saturday

## 255 45 probs VEHICLE THEFT 0.22910644 0.005736426 LARCENY/THEFT Saturday

## 256 46 probs VEHICLE THEFT 0.11184803 0.010481614 LARCENY/THEFT Saturday

## 257 47 probs VEHICLE THEFT 0.24925741 0.008383304 LARCENY/THEFT Saturday

## 258 48 probs VEHICLE THEFT 0.07170945 0.005065664 LARCENY/THEFT Saturday

## 259 49 probs VEHICLE THEFT 0.03126405 0.001761442 LARCENY/THEFT Saturday

## 260 50 probs VEHICLE THEFT 0.16491469 0.007913243 LARCENY/THEFT Saturday

## 261 51 probs VEHICLE THEFT 0.13922608 0.004256068 LARCENY/THEFT Friday

## 262 52 probs VEHICLE THEFT 0.21849420 0.004514973 LARCENY/THEFT Friday

## 263 53 probs VEHICLE THEFT 0.31412922 0.006302301 LARCENY/THEFT Friday

## 264 54 probs VEHICLE THEFT 0.24295377 0.003783108 LARCENY/THEFT Friday

## 265 55 probs VEHICLE THEFT 0.23726062 0.005015415 LARCENY/THEFT Friday

## 266 56 probs VEHICLE THEFT 0.11650631 0.007255296 LARCENY/THEFT Friday

## 267 57 probs VEHICLE THEFT 0.25861559 0.007256625 LARCENY/THEFT Friday

## 268 58 probs VEHICLE THEFT 0.07392563 0.004209260 LARCENY/THEFT Friday

## 269 59 probs VEHICLE THEFT 0.03080879 0.001798800 LARCENY/THEFT Friday