Chapter 18 Simple regression

library(tidyverse)18.1 Rainfall in California

The data in link are rainfall and other measurements for 30 weather stations in California. Our aim is to understand how well the annual rainfall at these stations (measured in inches) can be predicted from the other measurements, which are the altitude (in feet above sea level), the latitude (degrees north of the equator) and the distance from the coast (in miles).

Read the data into R. You’ll have to be careful here, since the values are space-delimited, but sometimes by more than one space, to make the columns line up.

read_table2, with filename or url, will read it in. One of the variables is calledrainfall, so as long as you do not call the data frame that, you should be safe.Make a boxplot of the rainfall figures, and explain why the values are reasonable. (A rainfall cannot be negative, and it is unusual for a annual rainfall to exceed 60 inches.) A

ggplotboxplot needs something on the \(x\)-axis: the number 1 will do.Plot

rainfallagainst each of the other quantitative variables (that is, notstation).Look at the relationship of each other variable with

rainfall. Justify the assertion thatlatitudeseems most strongly related withrainfall. Is that relationship positive or negative? linear? Explain briefly.Fit a regression with

rainfallas the response variable, andlatitudeas your explanatory variable. What are the intercept, slope and R-squared values? Is there a significant relationship betweenrainfalland your explanatory variable? What does that mean?Fit a multiple regression predicting

rainfallfrom all three of the other (quantitative) variables. Display the results. Comment is coming up later.What is the R-squared for the regression of the last part? How does that compare with the R-squared of your regression in part (e)?

What do you conclude about the importance of the variables that you did not include in your model in (e)? Explain briefly.

Make a suitable hypothesis test that the variables

altitudeandfromcoastsignificantly improve the prediction ofrainfallover the use oflatitudealone. What do you conclude?

18.2 Carbon monoxide in cigarettes

The (US) Federal Trade Commission assesses cigarettes according to their tar, nicotine and carbon monoxide contents. In a particular year, 25 brands were assessed. For each brand, the tar, nicotine and carbon monoxide (all in milligrams) were measured, along with the weight in grams. Our aim is to predict carbon monoxide from any or all of the other variables. The data are in link. These are aligned by column (except for the variable names), with more than one space between each column of data.

Read the data into R, and check that you have 25 observations and 4 variables.

Run a regression to predict carbon monoxide from the other variables, and obtain a summary of the output.

Which one of your explanatory variables would you remove from this regression? Explain (very) briefly. Go ahead and fit the regression without it, and describe how the change in R-squared from the regression in (b) was entirely predictable.

Fit a regression predicting carbon monoxide from

nicotineonly, and display the summary.nicotinewas far from being significant in the model of (c), and yet in the model of (d), it was strongly significant, and the R-squared value of (d) was almost as high as that of (c). What does this say about the importance ofnicotineas an explanatory variable? Explain, as briefly as you can manage.Make a “pairs plot”: that is, scatter plots between all pairs of variables. This can be done by feeding the whole data frame into

plot.22 Do you see any strong relationships that do not includeco? Does that shed any light on the last part? Explain briefly (or “at length” if that’s how it comes out).

18.3 Maximal oxygen uptake in young boys

A physiologist wanted to understand the relationship between physical characteristics of pre-adolescent boys and their maximal oxygen uptake (millilitres of oxygen per kilogram of body weight). The data are in link for a random sample of 10 pre-adolescent boys. The variables are (with units):

uptake: Oxygen uptake (millitres of oxygen per kilogram of body weight)age: boy’s age (years)height: boy’s height (cm)weight: boy’s weight (kg)chest: chest depth (cm).

Read the data into R and confirm that you do indeed have 10 observations.

Fit a regression predicting oxygen uptake from all the other variables, and display the results.

(A one-mark question.) Would you say, on the evidence so far, that the regression fits well or badly? Explain (very) briefly.

It seems reasonable that an older boy should have a greater oxygen uptake, all else being equal. Is this supported by your output? Explain briefly.

It seems reasonable that a boy with larger weight should have larger lungs and thus a statistically significantly larger oxygen uptake. Is that what happens here? Explain briefly.

Fit a model that contains only the significant explanatory variables from your first regression. How do the R-squared values from the two regressions compare? (The last sentence asks for more or less the same thing as the next part. Answer it either here or there. Either place is good.)

How has R-squared changed between your two regressions? Describe what you see in a few words.

Carry out a test comparing the fit of your two regression models. What do you conclude, and therefore what recommendation would you make about the regression that would be preferred?

Obtain a table of correlations between all the variables in the data frame. Do this by feeding the whole data frame into

cor. We found that a regression predicting oxygen uptake from justheightwas acceptably good. What does your table of correlations say about why that is? (Hint: look for all the correlations that are large.)

18.4 Facebook friends and grey matter

Is there a relationship between the number of Facebook friends a person has, and the density of grey matter in the areas of the brain associated with social perception and associative memory? To find out, a 2012 study measured both of these variables for a sample of 40 students at City University in London (England). The data are at link. The grey matter density is on a \(z\)-score standardized scale. The values are separated by tabs.

The aim of this question is to produce an R Markdown report that contains your answers to the questions below.

You should aim to make your report flow smoothly, so that it would be pleasant for a grader to read, and can stand on its own as an analysis (rather than just being the answer to a question that I set you). Some suggestions: give your report a title and arrange it into sections with an Introduction; add a small amount of additional text here and there explaining what you are doing and why. I don’t expect you to spend a large amount of time on this, but I do hope you will make some effort. (My report came out to 4 Word pages.)

Read in the data and make a scatterplot for predicting the number of Facebook friends from the grey matter density. On your scatterplot, add a smooth trend.

Describe what you see on your scatterplot: is there a trend, and if so, what kind of trend is it? (Don’t get too taken in by the exact shape of your smooth trend.) Think “form, direction, strength”.

Fit a regression predicting the number of Facebook friends from the grey matter density, and display the output.

Is the slope of your regression line significantly different from zero? What does that mean, in the context of the data?

Are you surprised by the results of parts (b) and (d)? Explain briefly.

Obtain a scatterplot with the regression line on it.

Obtain a plot of the residuals from the regression against the fitted values, and comment briefly on it.

18.5 Endogenous nitrogen excretion in carp

A paper in Fisheries Science reported on variables that affect “endogenous nitrogen excretion” or ENE in carp raised in Japan. A number of carp were divided into groups based on body weight, and each group was placed in a different tank. The mean body weight of the carp placed in each tank was recorded. The carp were then fed a protein-free diet three times daily for a period of 20 days. At the end of the experiment, the amount of ENE in each tank was measured, in milligrams of total fish body weight per day. (Thus it should not matter that some of the tanks had more fish than others, because the scaling is done properly.)

For this question, write a report in R Markdown that answers the questions below and contains some narrative that describes your analysis. Create an HTML document from your R Markdown.

Read the data in from link. There are 10 tanks.

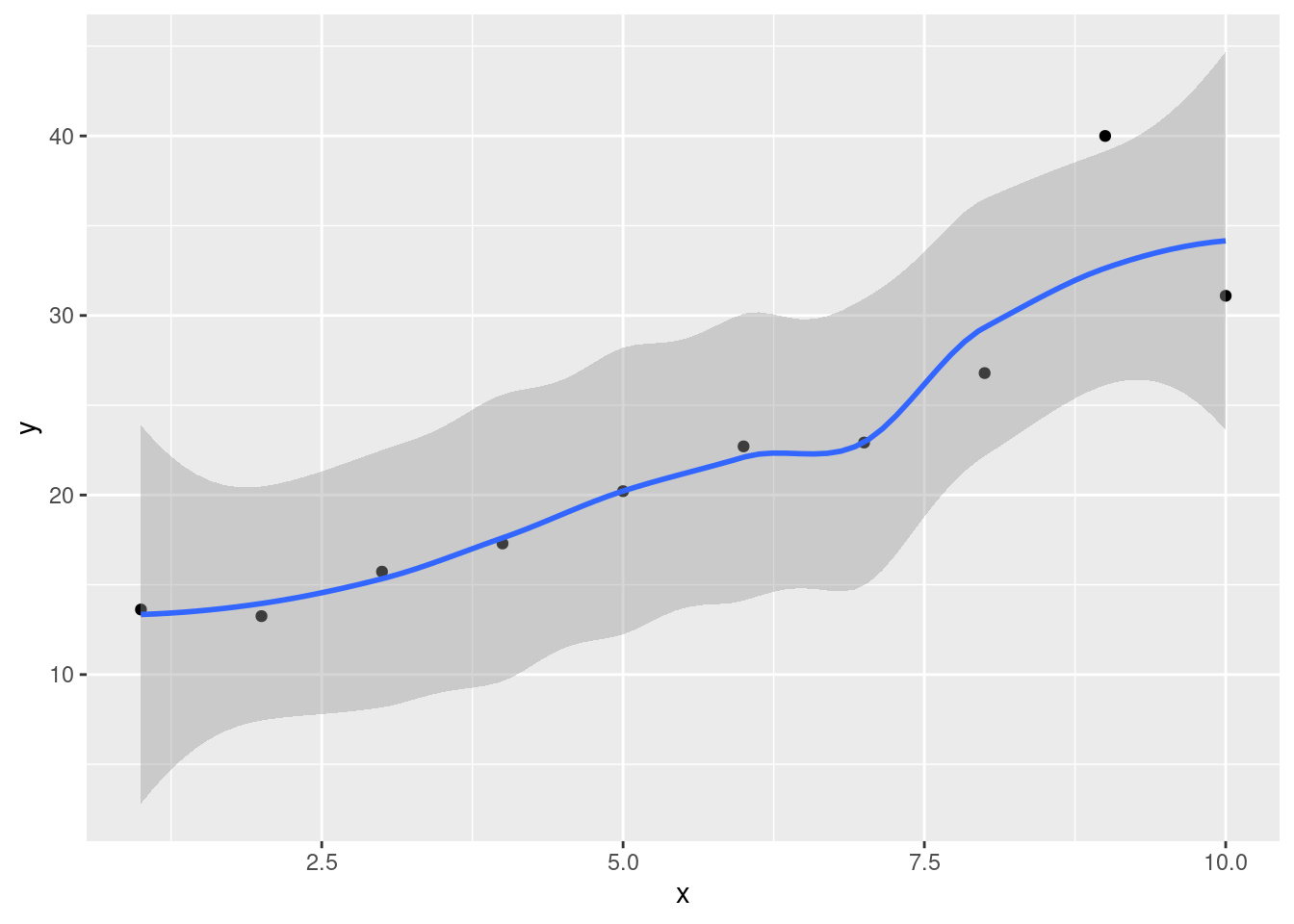

Create a scatterplot of ENE (response) against bodyweight (explanatory). Add a smooth trend to your plot.

Is there an upward or downward trend (or neither)? Is the relationship a line or a curve? Explain briefly.

Fit a straight line to the data, and obtain the R-squared for the regression.

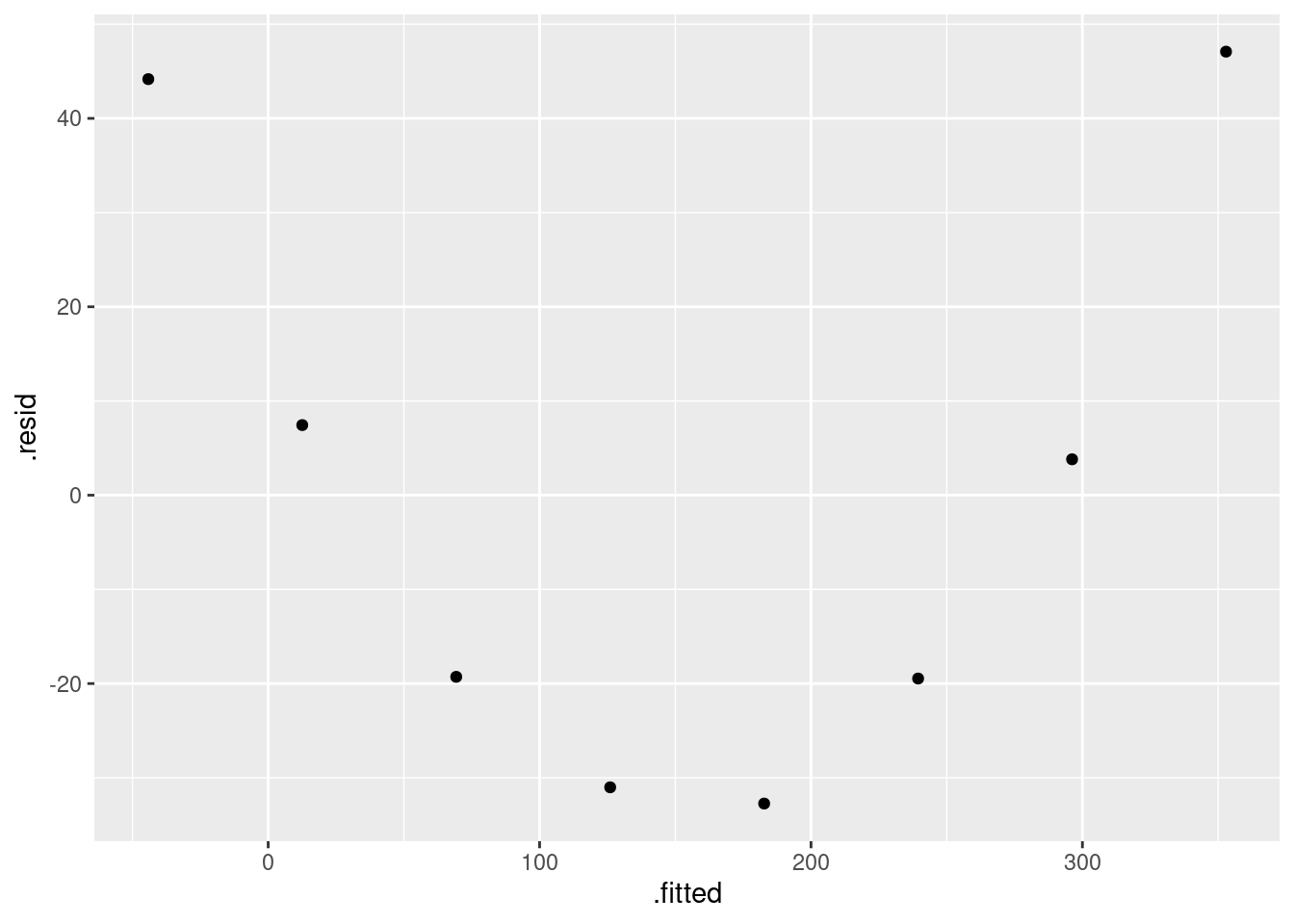

Obtain a residual plot (residuals against fitted values) for this regression. Do you see any problems? If so, what does that tell you about the relationship in the data?

Fit a parabola to the data (that is, including an \(x\)-squared term). Compare the R-squared values for the models in this part and part (d). Does that suggest that the parabola model is an improvement here over the linear model?

Is the test for the slope coefficient for the squared term significant? What does this mean?

Make the scatterplot of part (b), but add the fitted curve. Describe any way in which the curve fails to fit well.

Obtain a residual plot for the parabola model. Do you see any problems with it? (If you do, I’m not asking you to do anything about them in this question, but I will.)

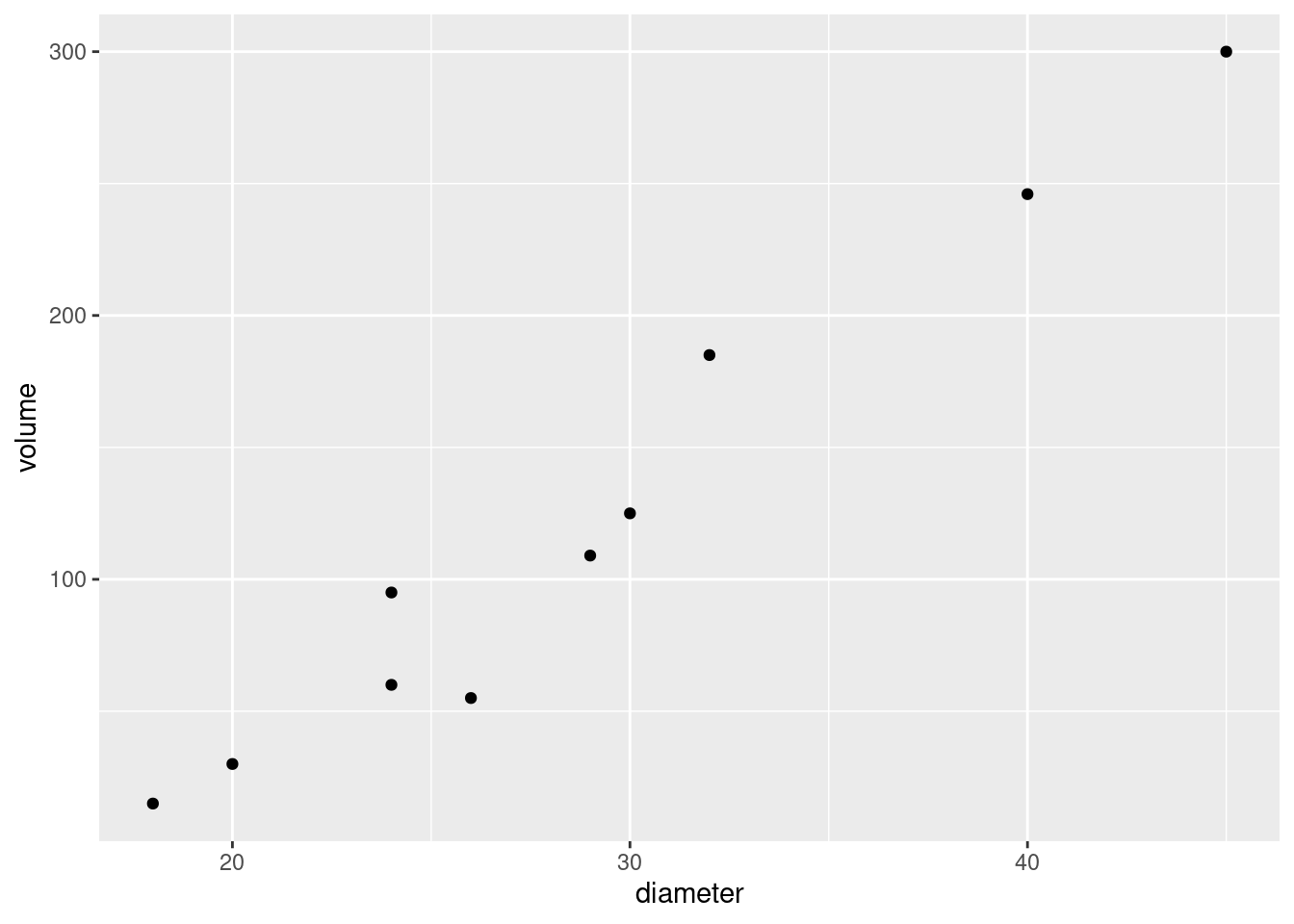

18.7 Predicting volume of wood in pine trees

In forestry, the financial value of a tree is the volume of wood that it contains. This is difficult to estimate while the tree is still standing, but the diameter is easy to measure with a tape measure (to measure the circumference) and a calculation involving \(\pi\), assuming that the cross-section of the tree is at least approximately circular. The standard measurement is “diameter at breast height” (that is, at the height of a human breast or chest), defined as being 4.5 feet above the ground.

Several pine trees had their diameter measured shortly before being cut down, and for each tree, the volume of wood was recorded. The data are in link. The diameter is in inches and the volume is in cubic inches. Is it possible to predict the volume of wood from the diameter?

Read the data into R and display the values (there are not very many).

Make a suitable plot.

Describe what you learn from your plot about the relationship between diameter and volume, if anything.

Fit a (linear) regression, predicting volume from diameter, and obtain the

summary. How would you describe the R-squared?Draw a graph that will help you decide whether you trust the linearity of this regression. What do you conclude? Explain briefly.

What would you guess would be the volume of a tree of diameter zero? Is that what the regression predicts? Explain briefly.

A simple way of modelling a tree’s shape is to pretend it is a cone, like this, but probably taller and skinnier:

with its base on the ground. What is the relationship between the diameter (at the base) and volume of a cone? (If you don’t remember, look it up. You’ll probably get a formula in terms of the radius, which you’ll have to convert. Cite the website you used.)

- Fit a regression model that predicts volume from diameter

according to the formula you obtained in the previous part. You can

assume that the trees in this data set are of similar heights, so

that the height can be treated as a constant.

Display the results.

18.8 Tortoise shells and eggs

A biologist measured the length of the carapace (shell) of female tortoises, and then x-rayed the tortoises to count how many eggs they were carrying. The length is measured in millimetres. The data are in link. The biologist is wondering what kind of relationship, if any, there is between the carapace length (as an explanatory variable) and the number of eggs (as a response variable).

Read in the data, and check that your values look reasonable.

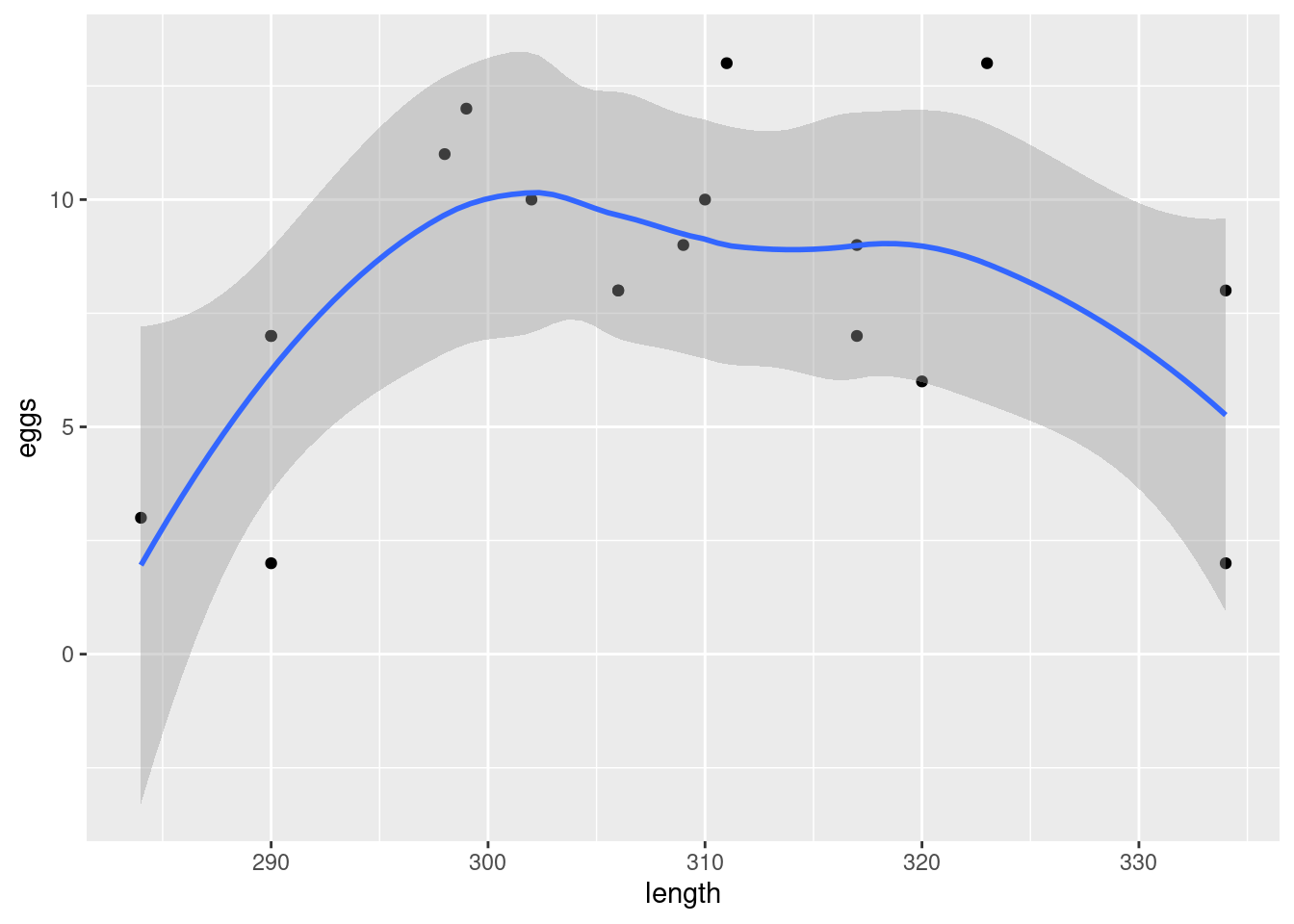

Obtain a scatterplot, with a smooth trend, of the data.

The biologist expected that a larger tortoise would be able to carry more eggs. Is that what the scatterplot is suggesting? Explain briefly why or why not.

Fit a straight-line relationship and display the summary.

Add a squared term to your regression, fit that and display the summary.

Is a curve better than a line for these data? Justify your answer in two ways: by comparing a measure of fit, and by doing a suitable test of significance.

Make a residual plot for the straight line model: that is, plot the residuals against the fitted values. Does this echo your conclusions of the previous part? In what way? Explain briefly.

18.9 Roller coasters

A poll on the Discovery Channel asked people to nominate the best roller-coasters in the United States. We will examine the 10 roller-coasters that received the most votes. Two features of a roller-coaster that are of interest are the distance it drops from start to finish, measured here in feet23 and the duration of the ride, measured in seconds. Is it true that roller-coasters with a bigger drop also tend to have a longer ride? The data are at link.24

Read the data into R and verify that you have a sensible number of rows and columns.

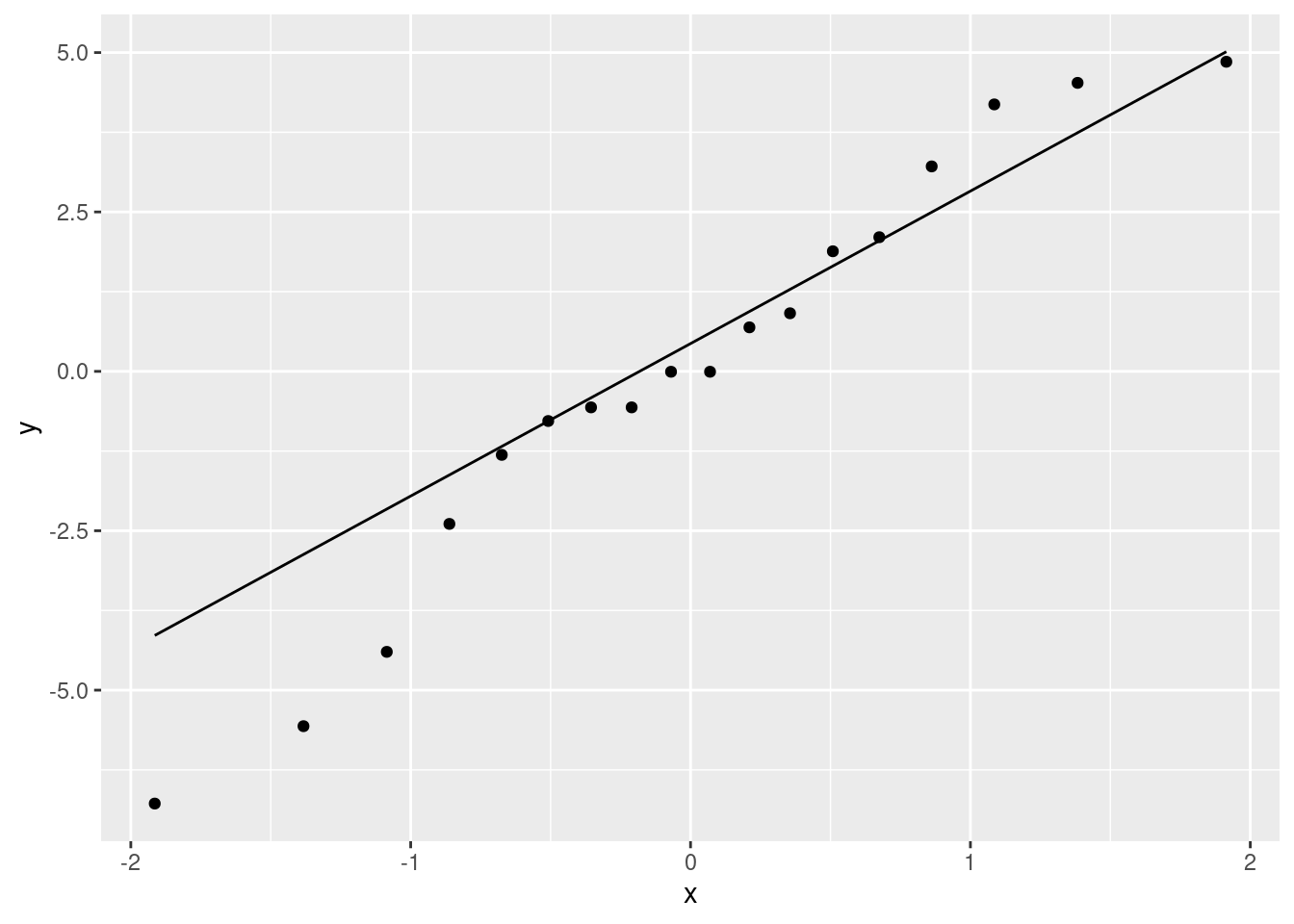

Make a scatterplot of duration (response) against drop (explanatory), labelling each roller-coaster with its name in such a way that the labels do not overlap. Add a regression line to your plot.

Would you say that roller-coasters with a larger drop tend to have a longer ride? Explain briefly.

Find a roller-coaster that is unusual compared to the others. What about its combination of

dropanddurationis unusual?

18.10 Running and blood sugar

A diabetic wants to know how aerobic exercise affects his blood sugar. When his blood sugar reaches 170 (mg/dl), he goes out for a run at a pace of 10 minutes per mile. He runs different distances on different days. Each time he runs, he measures his blood sugar after the run. (The preferred blood sugar level is between 80 and 120 on this scale.) The data are in the file link. Our aim is to predict blood sugar from distance.

Read in the data and display the data frame that you read in.

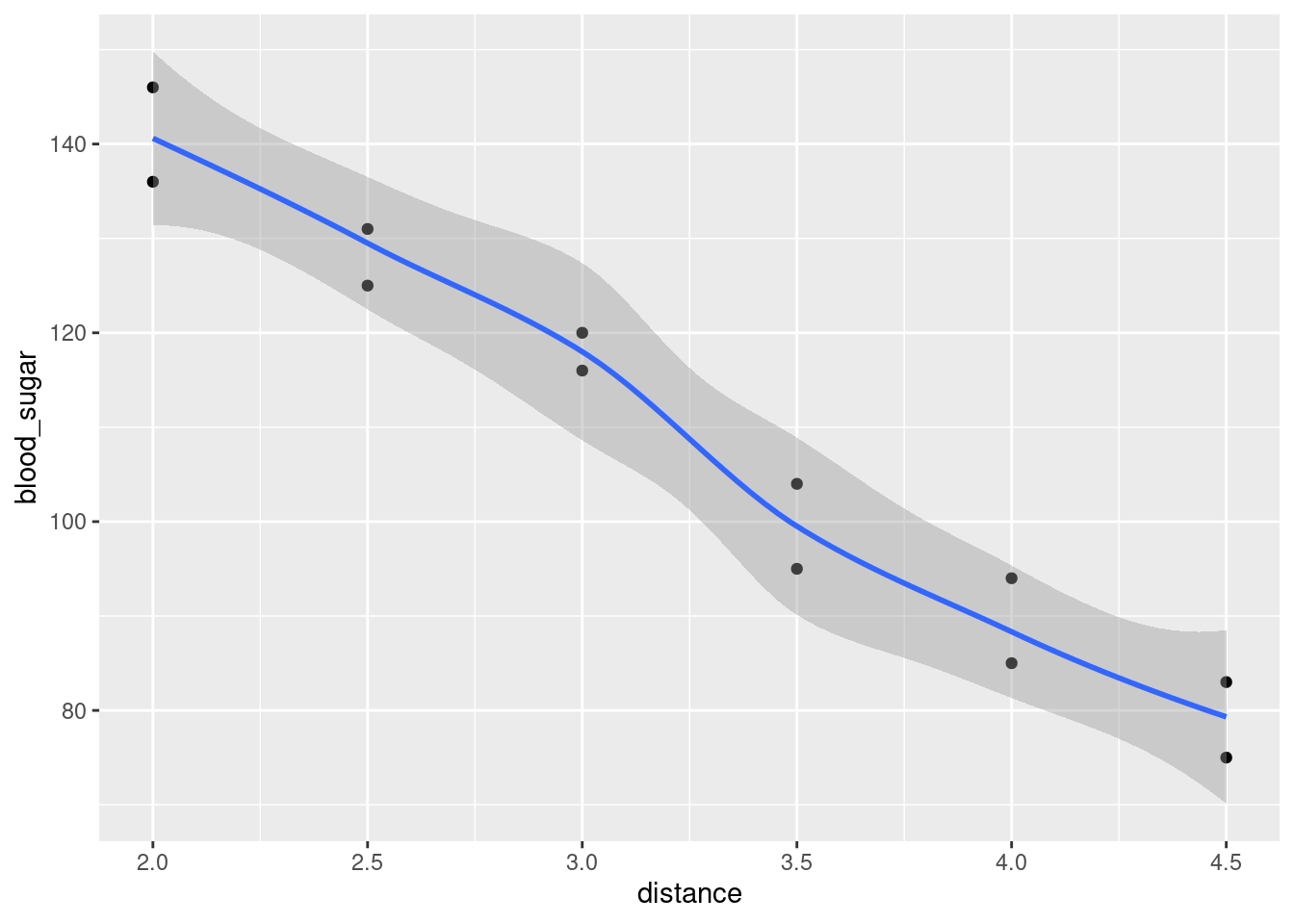

Make a scatterplot and add a smooth trend to it.

Would you say that the relationship between blood sugar and running distance is approximately linear, or not? It is therefore reasonable to use a regression of blood sugar on distance? Explain briefly.

Fit a suitable regression, and obtain the regression output.

How would you interpret the slope? That is, what is the slope, and what does that mean about blood sugar and running distance?

Is there a (statistically) significant relationship between running distance and blood sugar? How do you know? Do you find this surprising, given what you have seen so far? Explain briefly.

This diabetic is planning to go for a 3-mile run tomorrow and a 5-mile run the day after. Obtain suitable 95% intervals that say what his blood sugar might be after each of these runs.

Which of your two intervals is longer? Does this make sense? Explain briefly.

18.11 Calories and fat in pizza

The file at link came from a spreadsheet of information about 24 brands of pizza: specifically, per 5-ounce serving, the number of calories, the grams of fat, and the cost (in US dollars). The names of the pizza brands are quite long. This file may open in a spreadsheet when you browse to the link, depending on your computer’s setup.

Read in the data and display at least some of the data frame. Are the variables of the right types? (In particular, why is the number of calories labelled one way and the cost labelled a different way?)

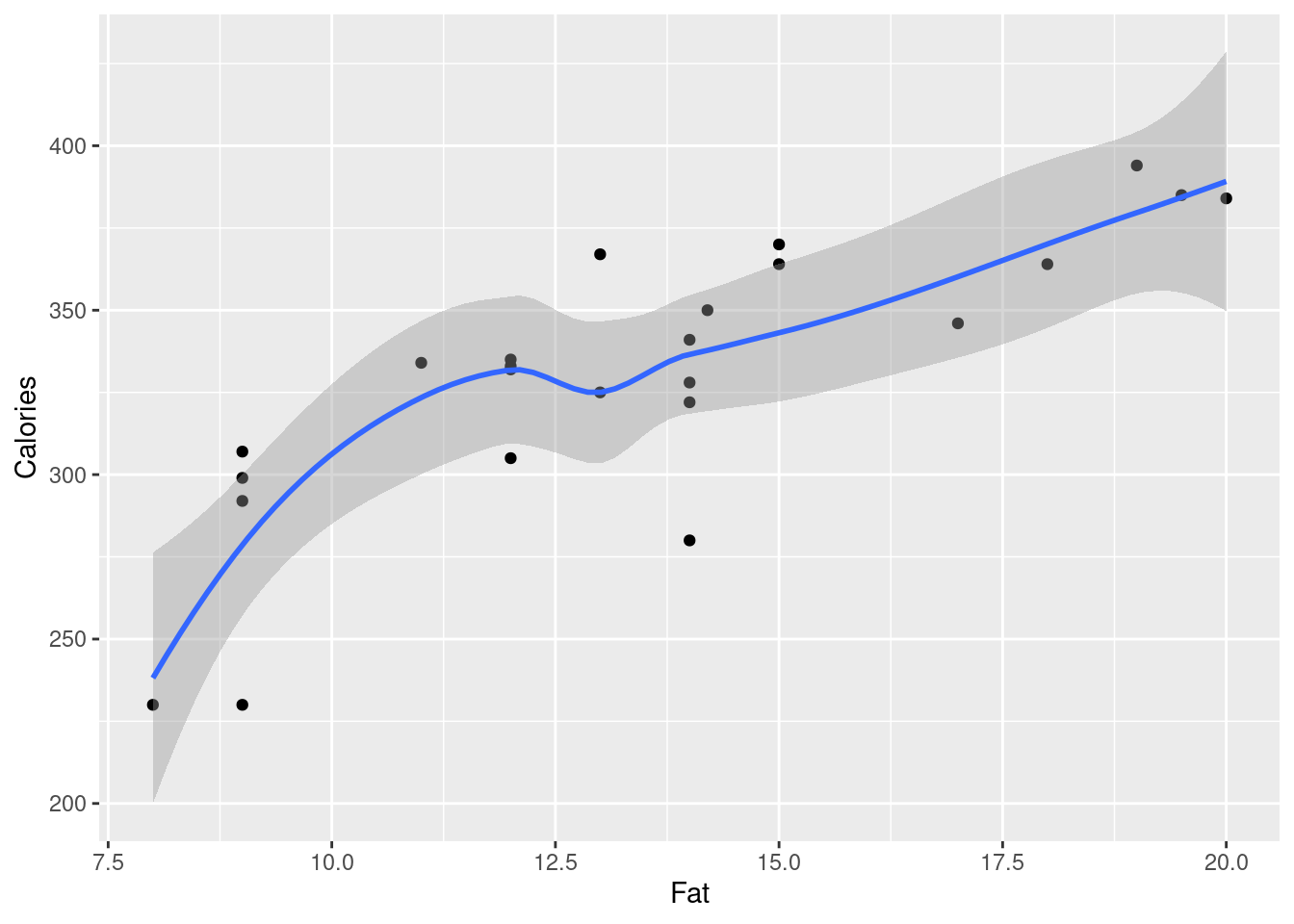

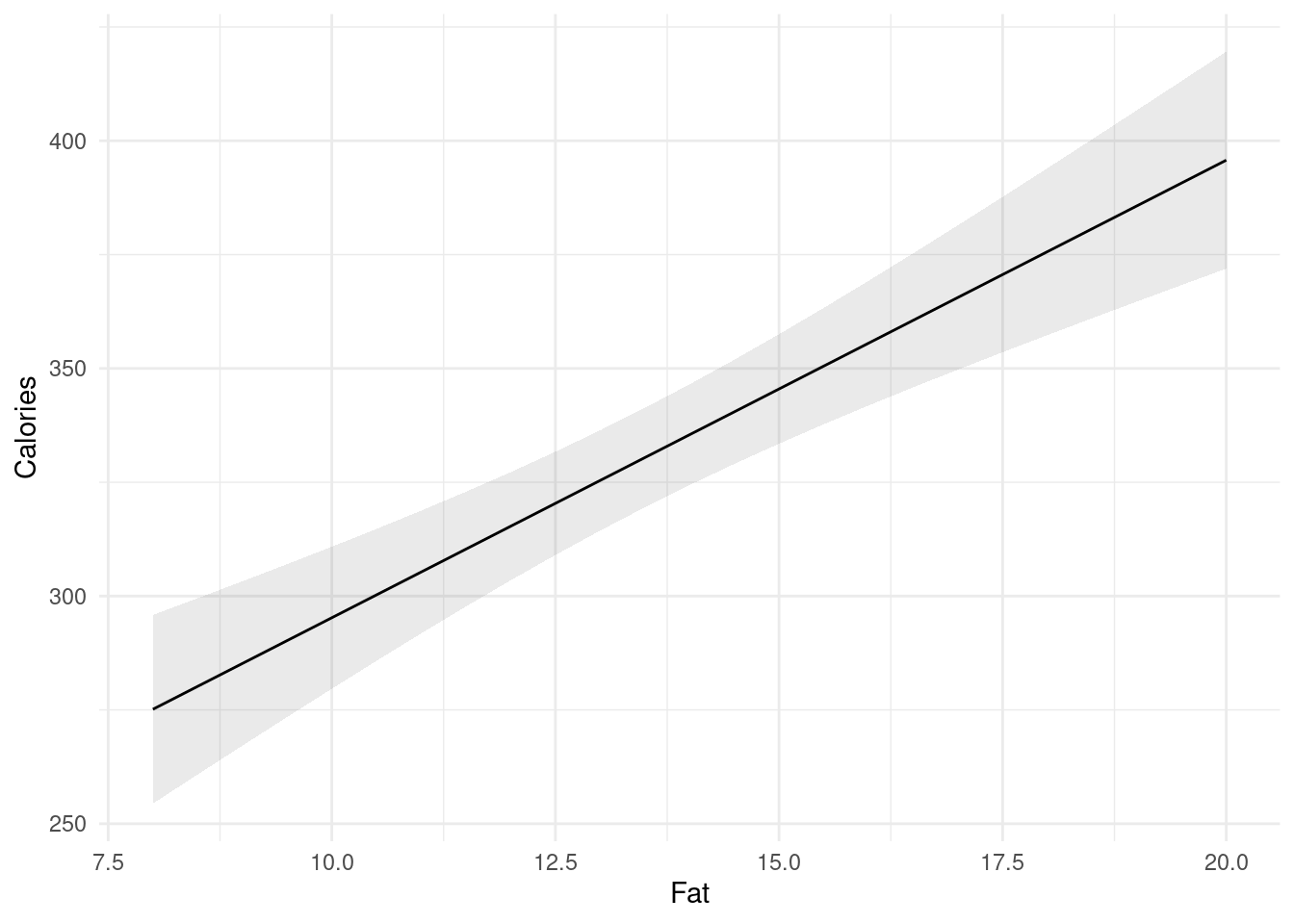

Make a scatterplot for predicting calories from the number of grams of fat. Add a smooth trend. What kind of relationship do you see, if any?

Fit a straight-line relationship, and display the intercept, slope, R-squared, etc. Is there a real relationship between the two variables, or is any apparent trend just chance?

Obtain a plot of the residuals against the fitted values for this regression. Does this indicate that there are any problems with this regression, or not? Explain briefly.

The research assistant in this study returns with two new brands of pizza (ones that were not in the original data). The fat content of a 5-ounce serving was 12 grams for the first brand and 20 grams for the second brand. For each of these brands of pizza, obtain a suitable 95% interval for the number of calories contained in a 5-ounce serving.

18.12 Where should the fire stations be?

In city planning, one major issue is where to locate fire stations. If a city has too many fire stations, it will spend too much on running them, but if it has too few, there may be unnecessary fire damage because the fire trucks take too long to get to the fire.

The first part of a study of this kind of issue is to understand the relationship between the distance from the fire station (measured in miles in our data set) and the amount of fire damage caused (measured in thousands of dollars). A city recorded the fire damage and distance from fire station for 15 residential fires (which you can take as a sample of “all possible residential fires in that city”). The data are in link.

Read in and display the data, verifying that you have the right number of rows and the right columns.

* Obtain a 95% confidence interval for the mean fire damage. (There is nothing here from STAD29, and your answer should have nothing to do with distance.)

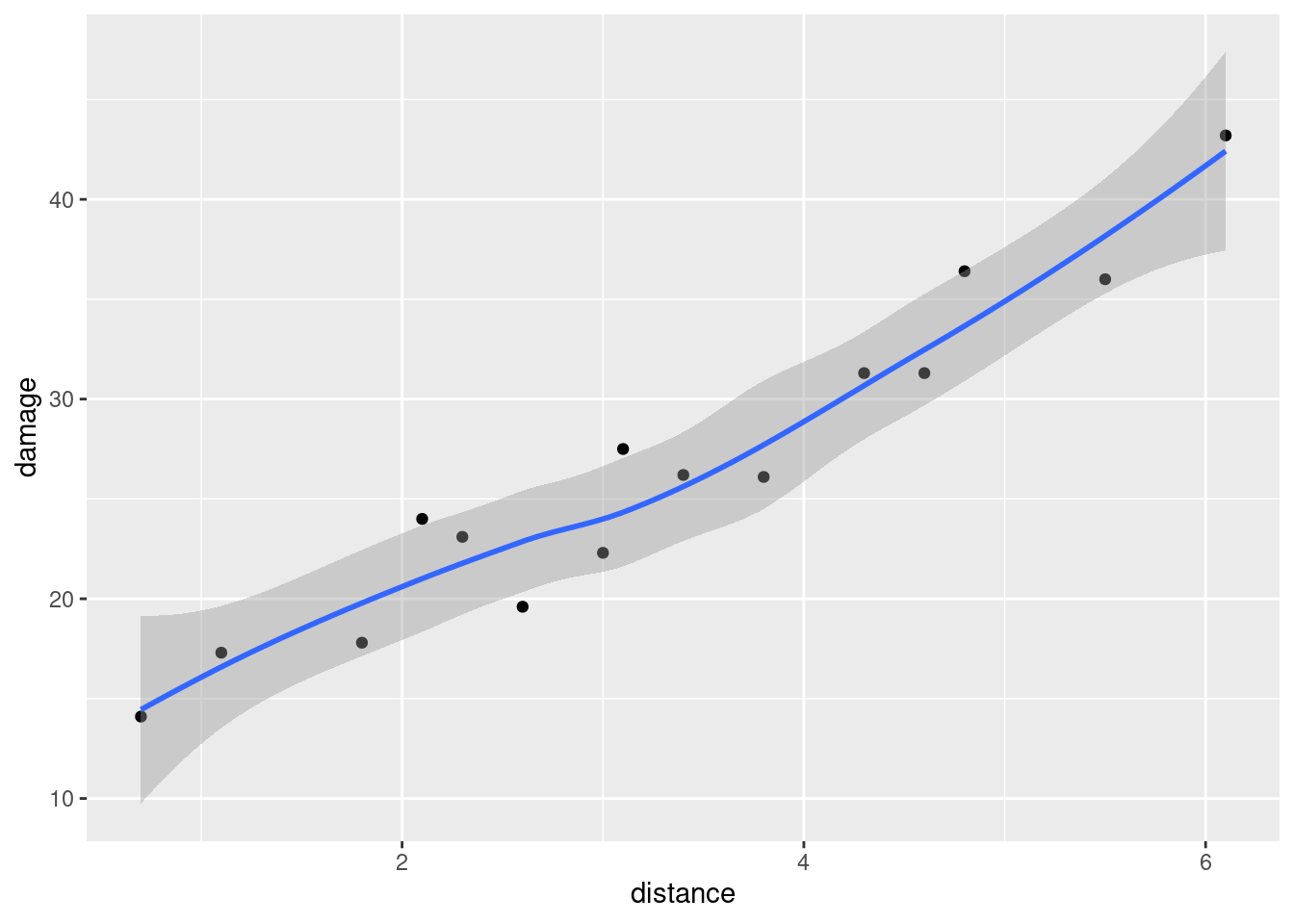

Draw a scatterplot for predicting the amount of fire damage from the distance from the fire station. Add a smooth trend to your plot.

* Is there a relationship between distance from fire station and fire damage? Is it linear or definitely curved? How strong is it? Explain briefly.

Fit a regression predicting fire damage from distance. How is the R-squared consistent (or inconsistent) with your answer from part~(here)?

Obtain a 95% confidence interval for the mean fire damage for a residence that is 4 miles from the nearest fire station*. (Note the contrast with part~(here).)

Compare the confidence intervals of parts (here) and (here). Specifically, compare their centres and their lengths, and explain briefly why the results make sense.

18.13 Making it stop

If you are driving, and you hit the brakes, how far do you travel before coming to a complete stop? Presumably this depends on how fast you are going. Knowing this relationship is important in setting speed limits on roads. For example, on a very bendy road, the speed limit needs to be low, because you cannot see very far ahead, and there could be something just out of sight that you need to stop for.

Data were collected for a typical car and driver, as shown in http://ritsokiguess.site/datafiles/stopping.csv. These are American data, so the speeds are miles per hour and the stopping distances are in feet.

Read in and display (probably all of) the data.

Make a suitable plot of the data.

Describe any trend you see in your graph.

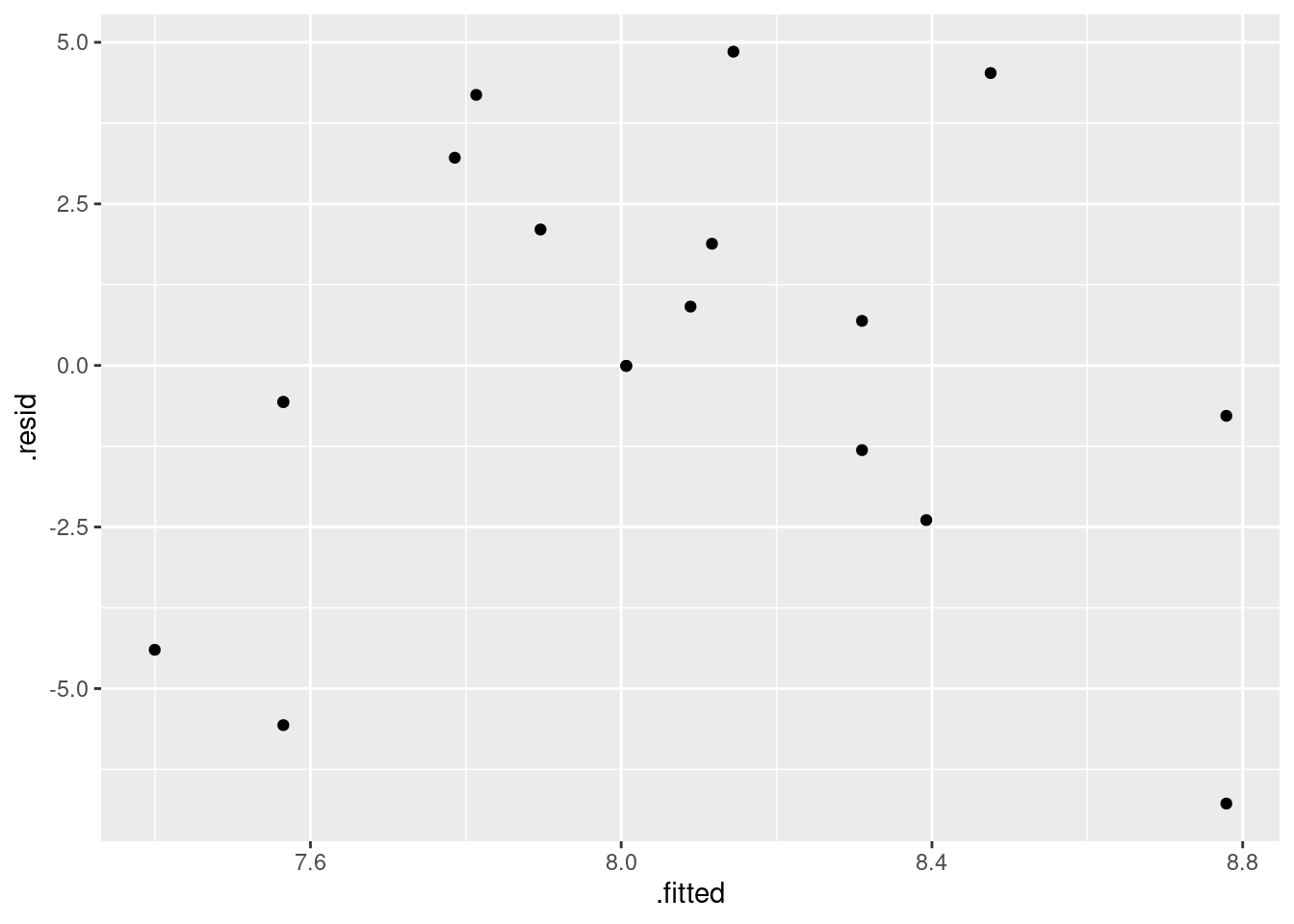

Fit a linear regression predicting stopping distance from speed. (You might have some misgivings about doing this, but do it anyway.)

Plot the residuals against the fitted values for this regression.

What do you learn from the residual plot? Does that surprise you? Explain briefly.

What is the actual relationship between stopping distance and speed, according to the physics? See if you can find out. Cite any books or websites that you use: that is, include a link to a website, or give enough information about a book that the grader could find it.

Fit the relationship that your research indicated (in the previous part) and display the results. Comment briefly on the R-squared value.

Somebody says to you “if you have a regression with a high R-squared, like 95%, there is no need to look for a better model.” How do you respond to this? Explain briefly.

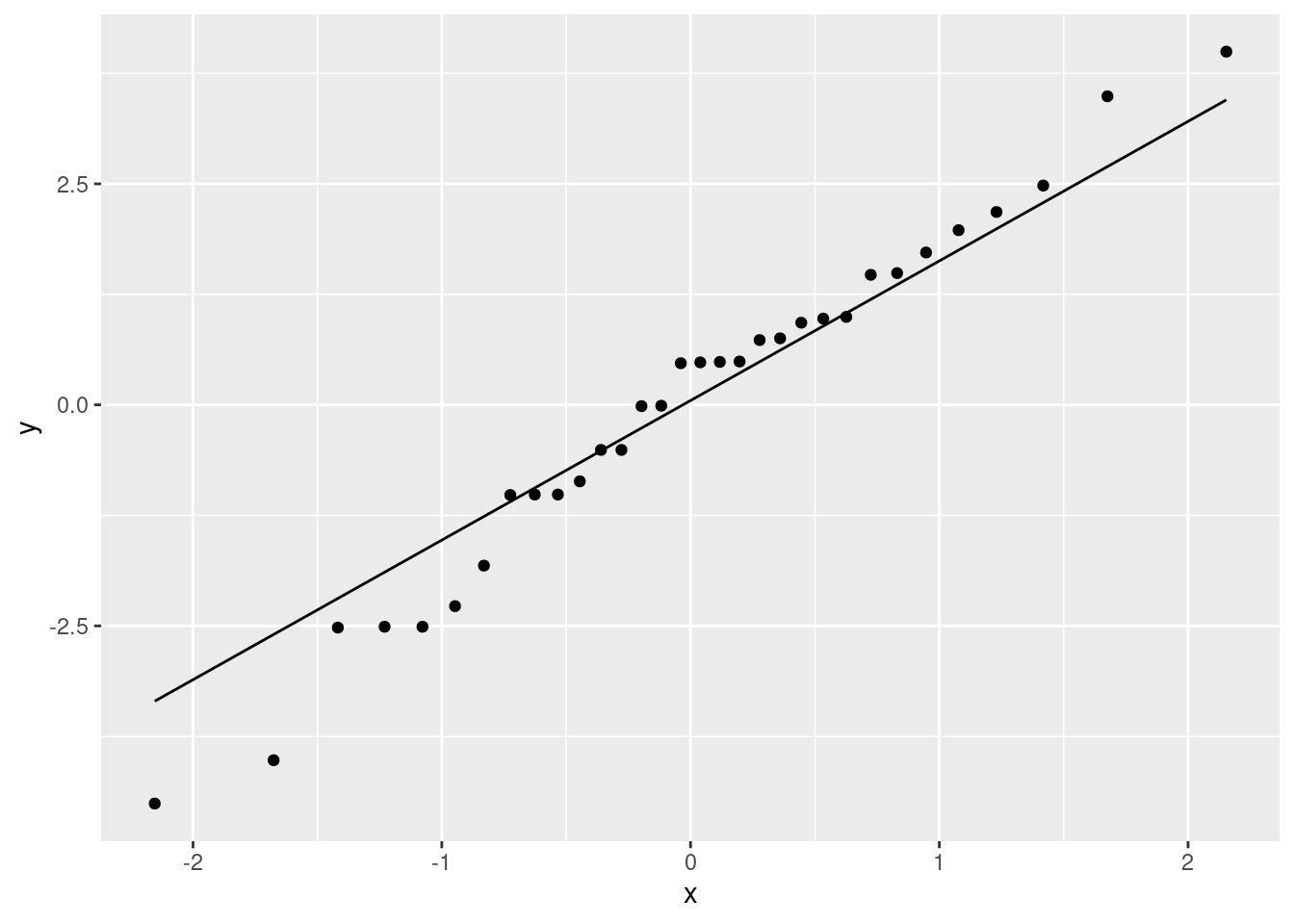

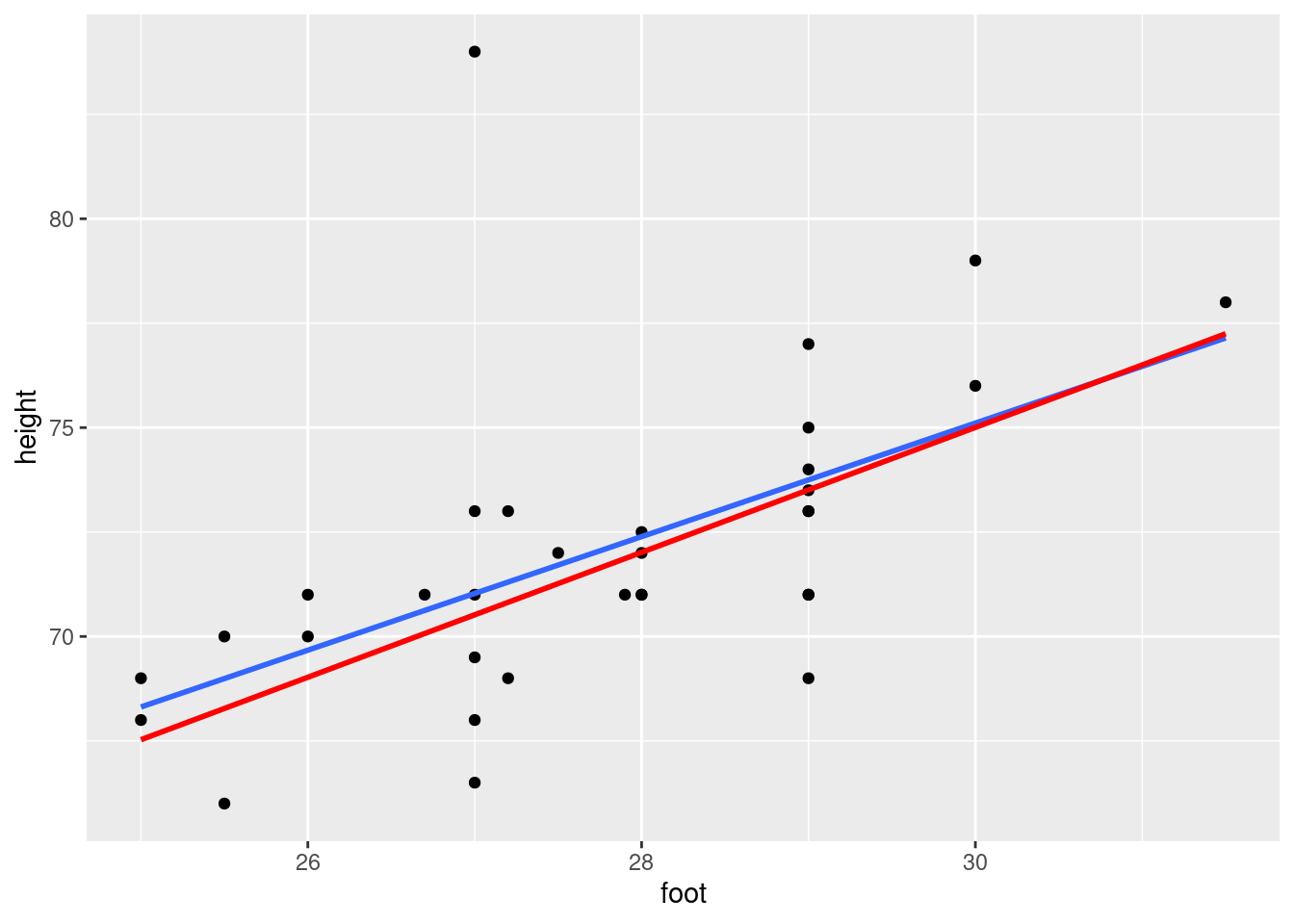

18.14 Predicting height from foot length

Is it possible to estimate the height of a person from the length of their foot? To find out, 33 (male) students had their height and foot length measured. The data are in http://ritsokiguess.site/datafiles/heightfoot.csv.

Read in and display (some of) the data. (If you are having trouble, make sure you have exactly the right URL. The correct URL has no spaces or other strange characters in it.)

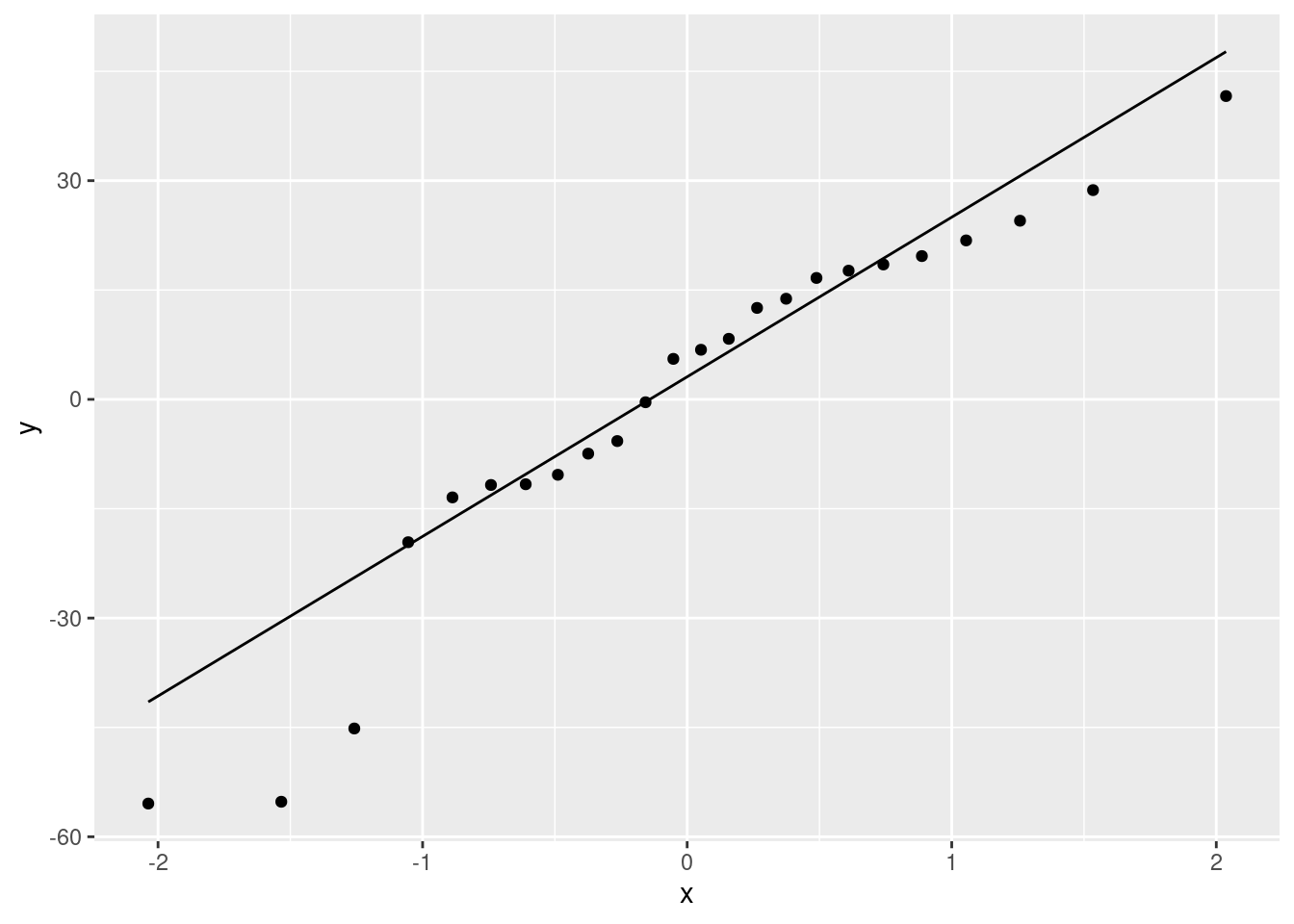

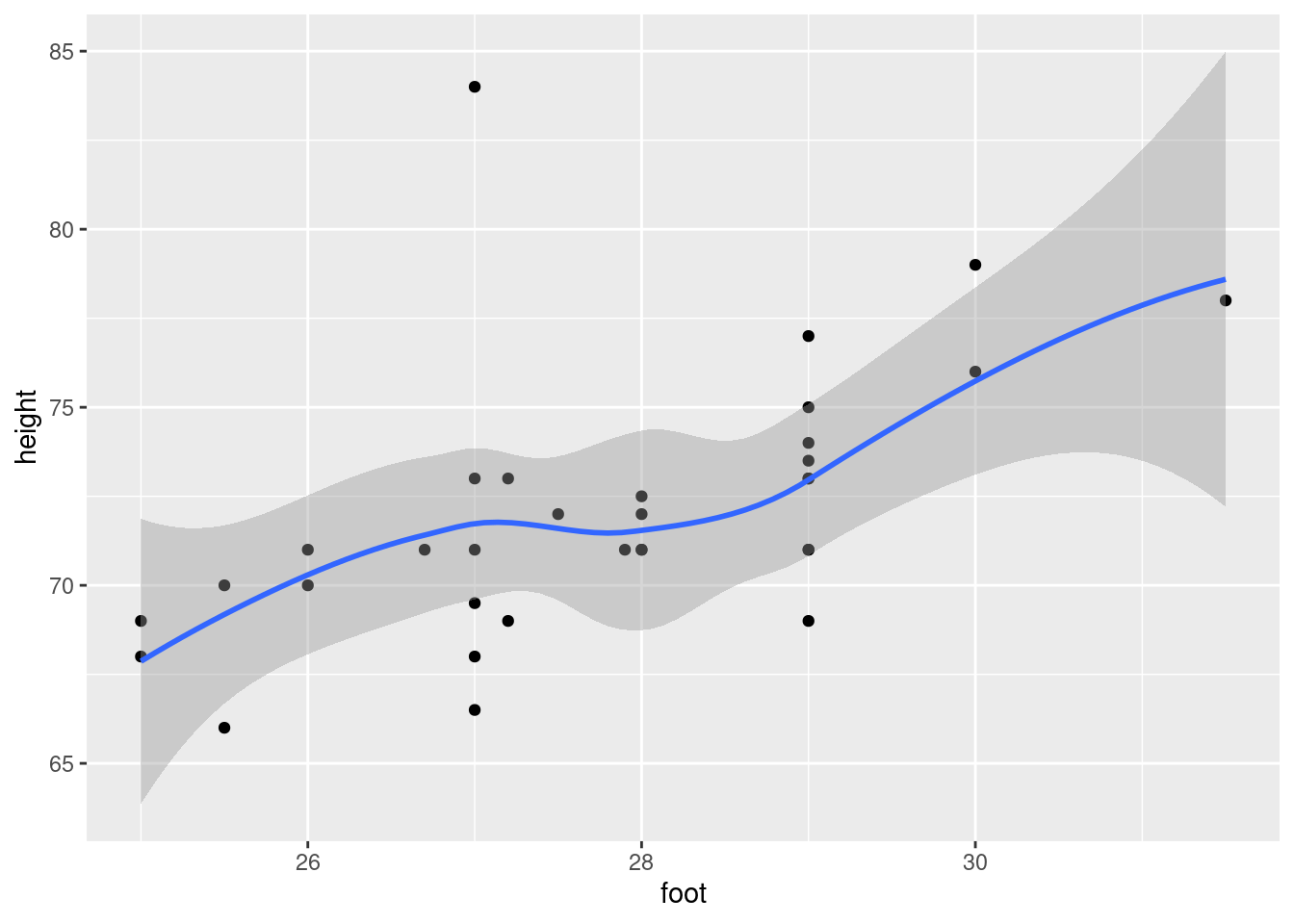

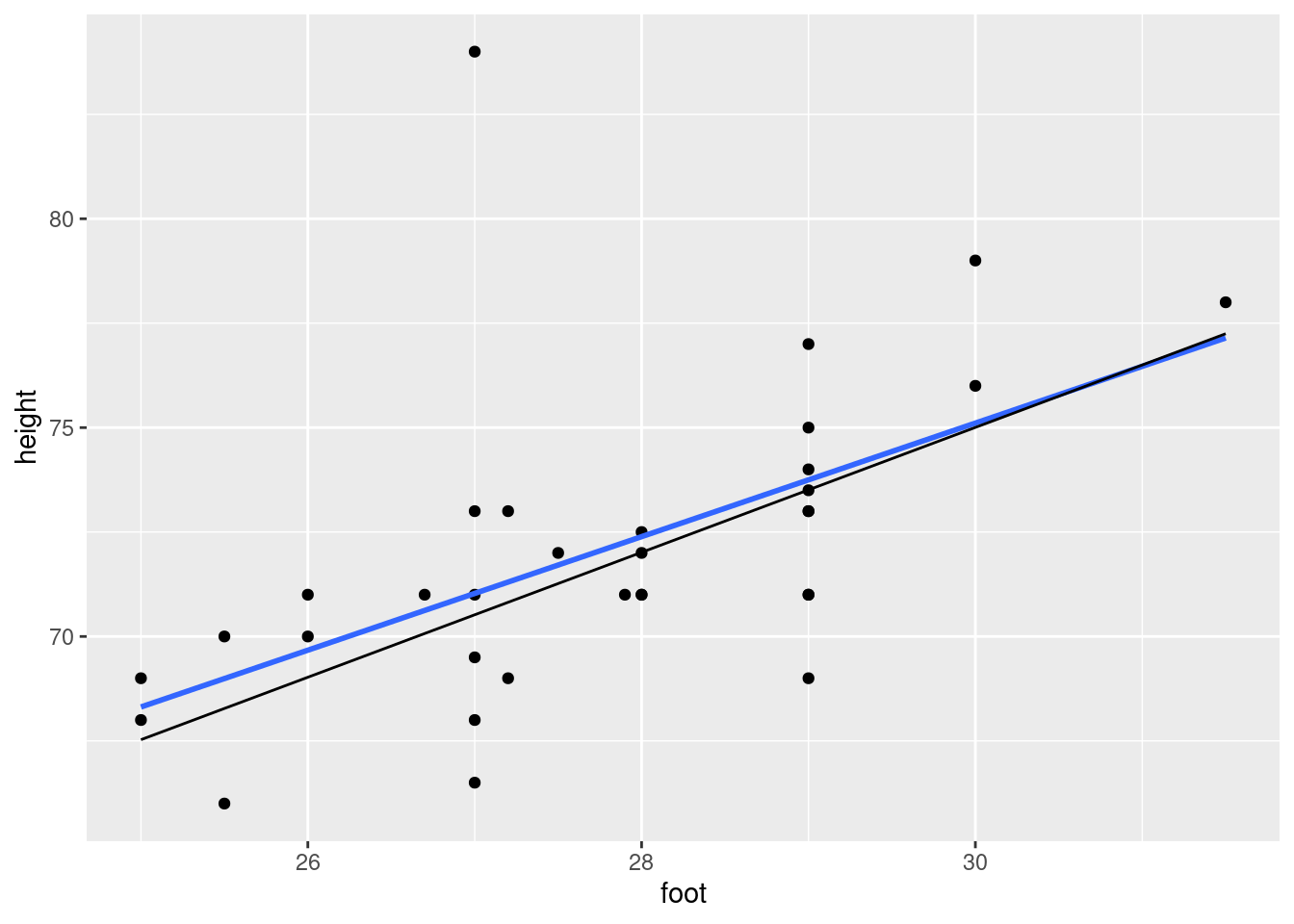

Make a suitable plot of the two variables in the data frame.

Are there any observations not on the trend of the other points? What is unusual about those observations?

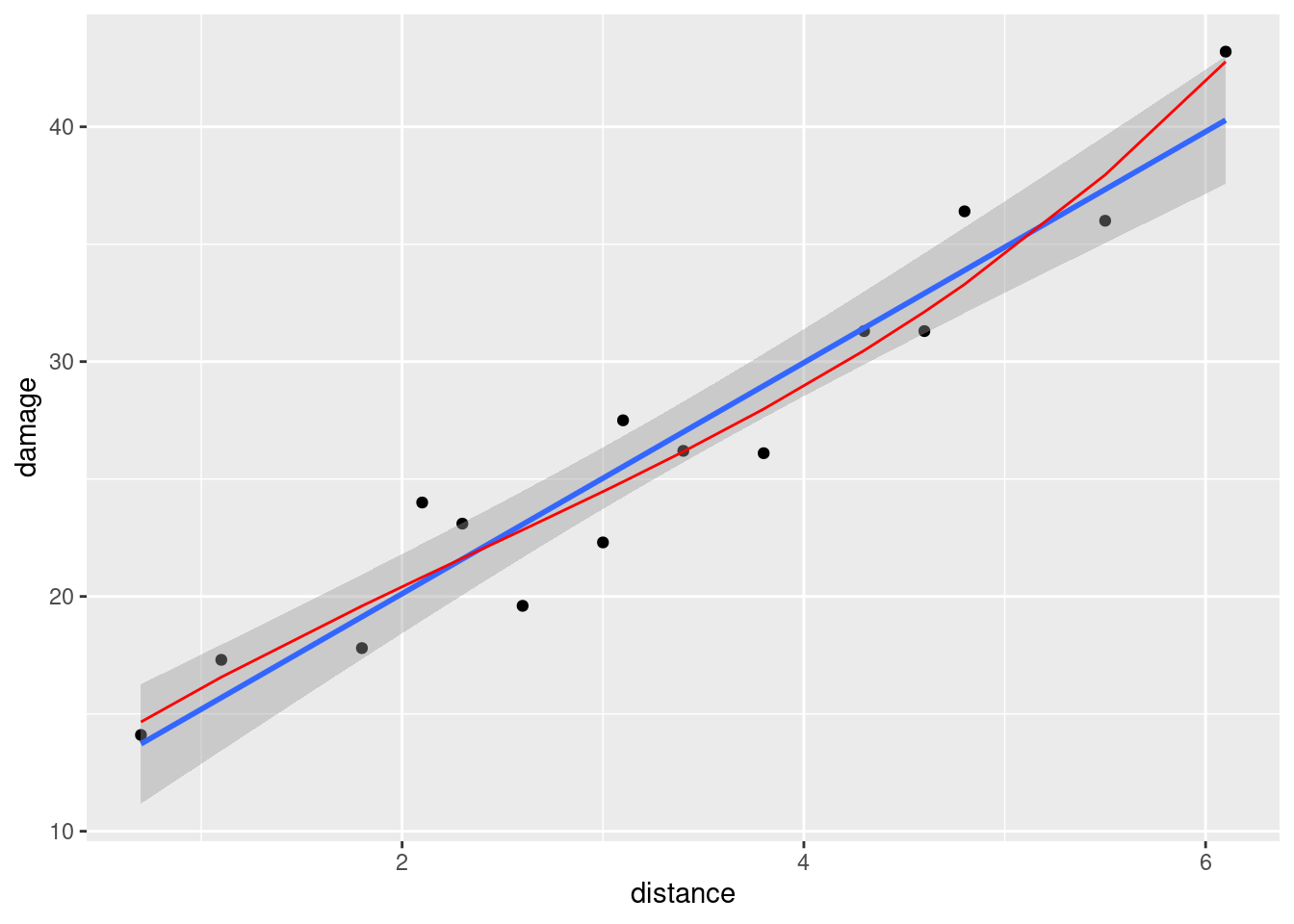

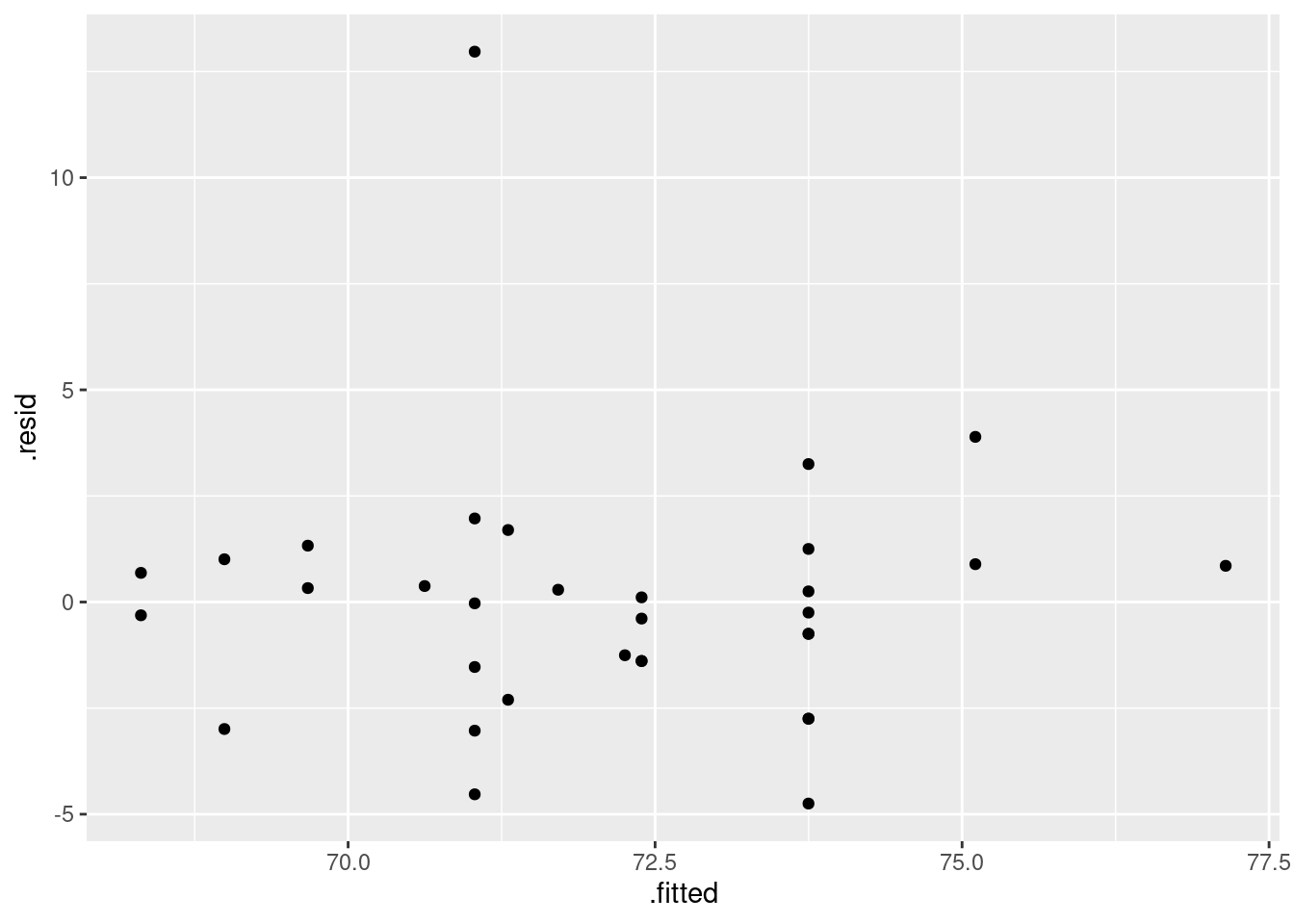

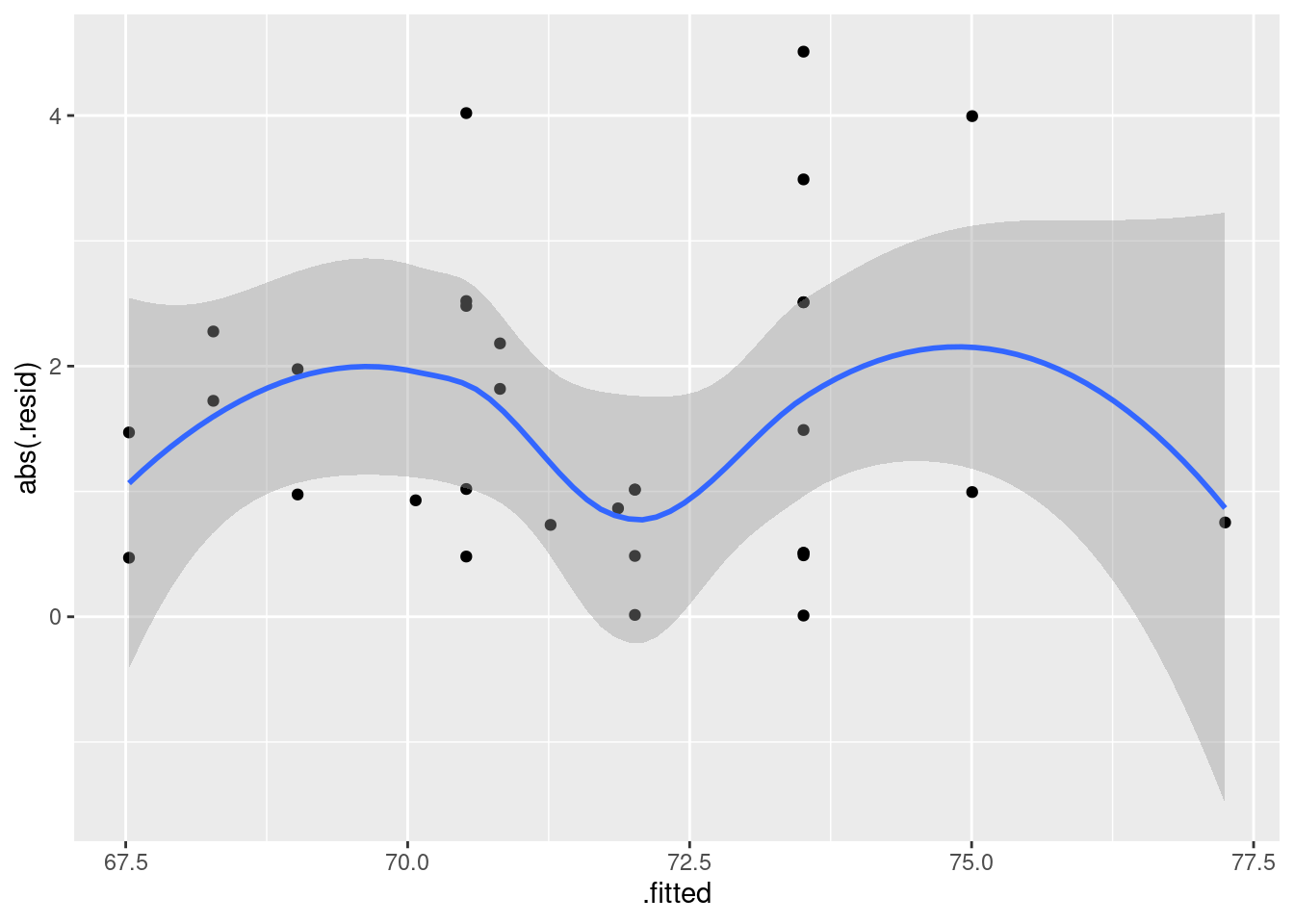

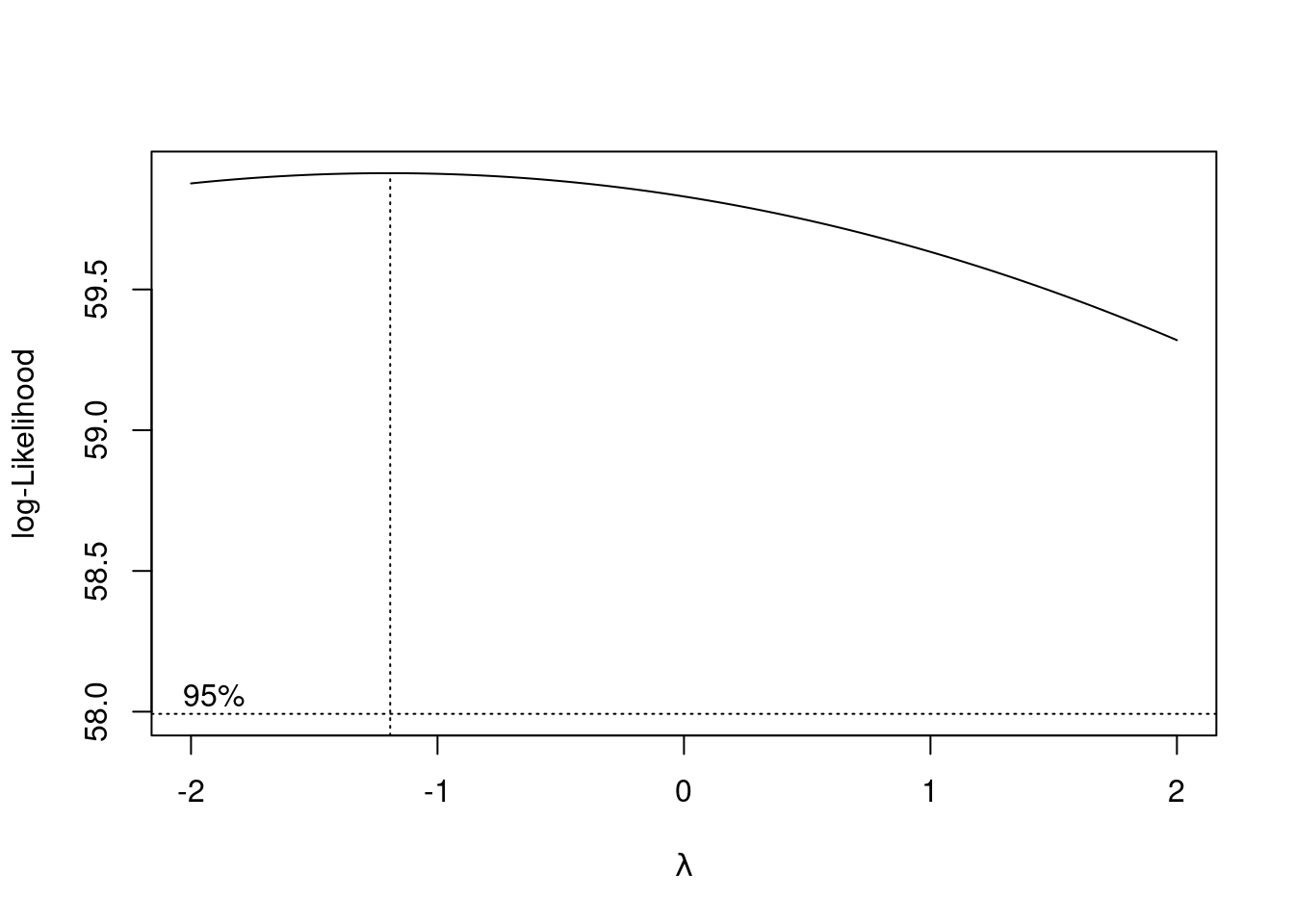

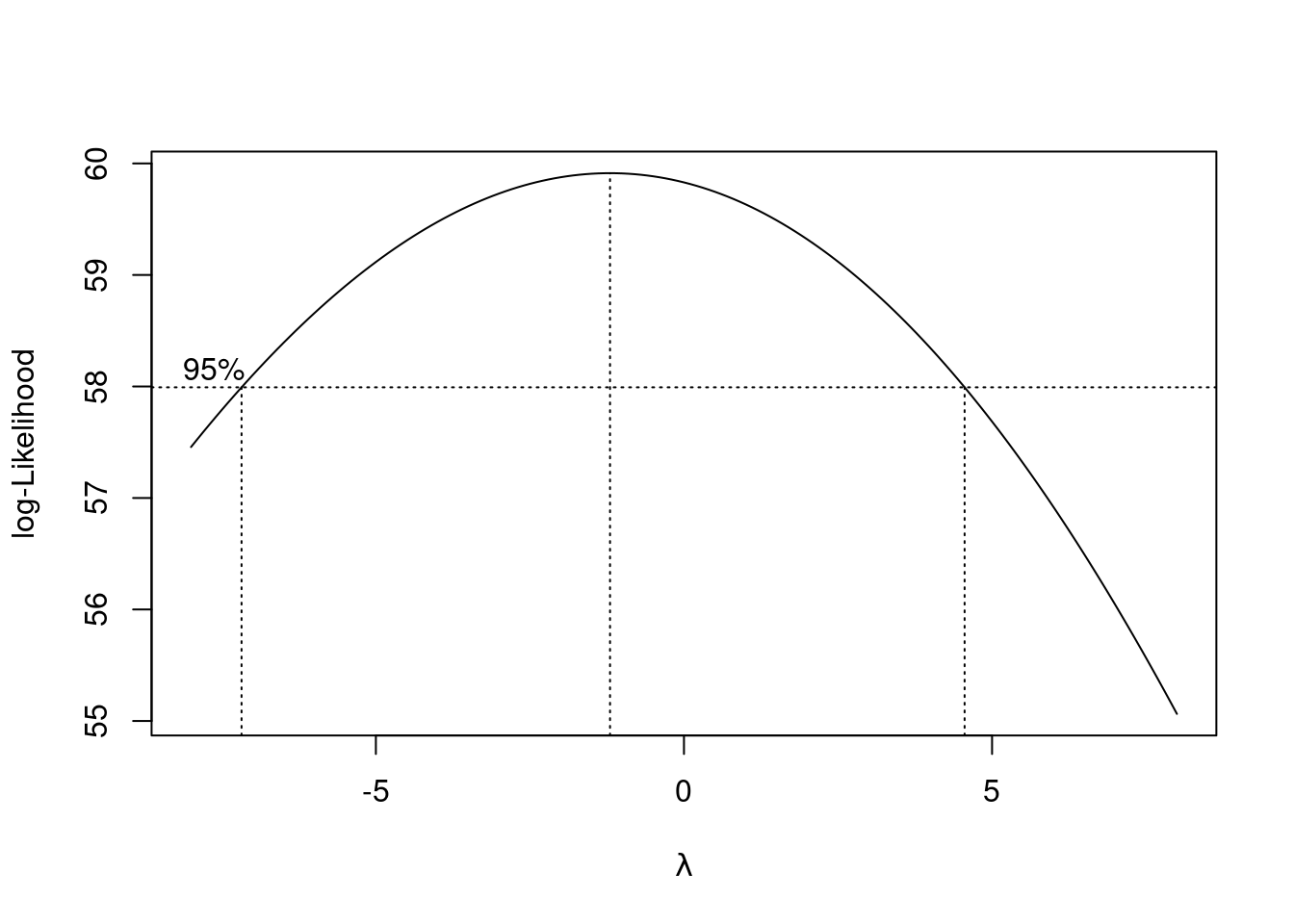

Fit a regression predicting height from foot length, including any observations that you identified in the previous part. For that regression, plot the residuals against the fitted values and make a normal quantile plot of the residuals.

Earlier, you identified one or more observations that were off the trend. How does this point or points show up on each of the plots you drew in the previous part?

Any data points that concerned you earlier were actually errors. Create and save a new data frame that does not contain any of those data points.

Run a regression predicting height from foot length for your data set without errors. Obtain a plot of the residuals against fitted values and a normal quantile plot of the residuals for this regression.

Do you see any problems on the plots you drew in the previous part? Explain briefly.

Find a way to plot the data and both regression lines on the same plot, in such a way that you can see which regression line is which. If you get help from anything outside the course materials, cite your source(s).

Discuss briefly how removing the observation(s) that were errors has changed where the regression line goes, and whether that is what you expected.

My solutions follow:

18.15 Rainfall in California

The data in link are rainfall and other measurements for 30 weather stations in California. Our aim is to understand how well the annual rainfall at these stations (measured in inches) can be predicted from the other measurements, which are the altitude (in feet above sea level), the latitude (degrees north of the equator) and the distance from the coast (in miles).

- Read the data into R. You’ll have to be careful here, since the

values are space-delimited, but sometimes by more than one space, to

make the columns line up.

read_table2, with filename or url, will read it in. One of the variables is calledrainfall, so as long as you do not call the data frame that, you should be safe.

Solution

I used rains as the name of my data frame:

my_url="http://ritsokiguess.site/datafiles/calirain.txt"

rains=read_table2(my_url)## Warning: `read_table2()` was deprecated in readr 2.0.0.

## ℹ Please use `read_table()` instead.##

## ── Column specification ────────────────────────────────────────────────────────

## cols(

## station = col_character(),

## rainfall = col_double(),

## altitude = col_double(),

## latitude = col_double(),

## fromcoast = col_double()

## )I have the right number of rows and columns.

There is also read_table, but that requires all the

columns, including the header row, to be lined up. You can try that

here and see how it fails.

I don’t need you to investigate the data yet (that happens in the next part), but this is interesting (to me):

rains## # A tibble: 30 × 5

## station rainfall altitude latitude fromcoast

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 Eureka 39.6 43 40.8 1

## 2 RedBluff 23.3 341 40.2 97

## 3 Thermal 18.2 4152 33.8 70

## 4 FortBragg 37.5 74 39.4 1

## 5 SodaSprings 49.3 6752 39.3 150

## 6 SanFrancisco 21.8 52 37.8 5

## 7 Sacramento 18.1 25 38.5 80

## 8 SanJose 14.2 95 37.4 28

## 9 GiantForest 42.6 6360 36.6 145

## 10 Salinas 13.8 74 36.7 12

## # … with 20 more rowsSome of the station names are two words, but they have been smooshed

into one word, so that read_table2 will recognize them as a

single thing. Someone had already done that for us, so I didn’t even

have to do it myself.

If the station names had been two genuine words, a .csv would

probably have been the best choice (the actual data values being

separated by commas then, and not spaces).

\(\blacksquare\)

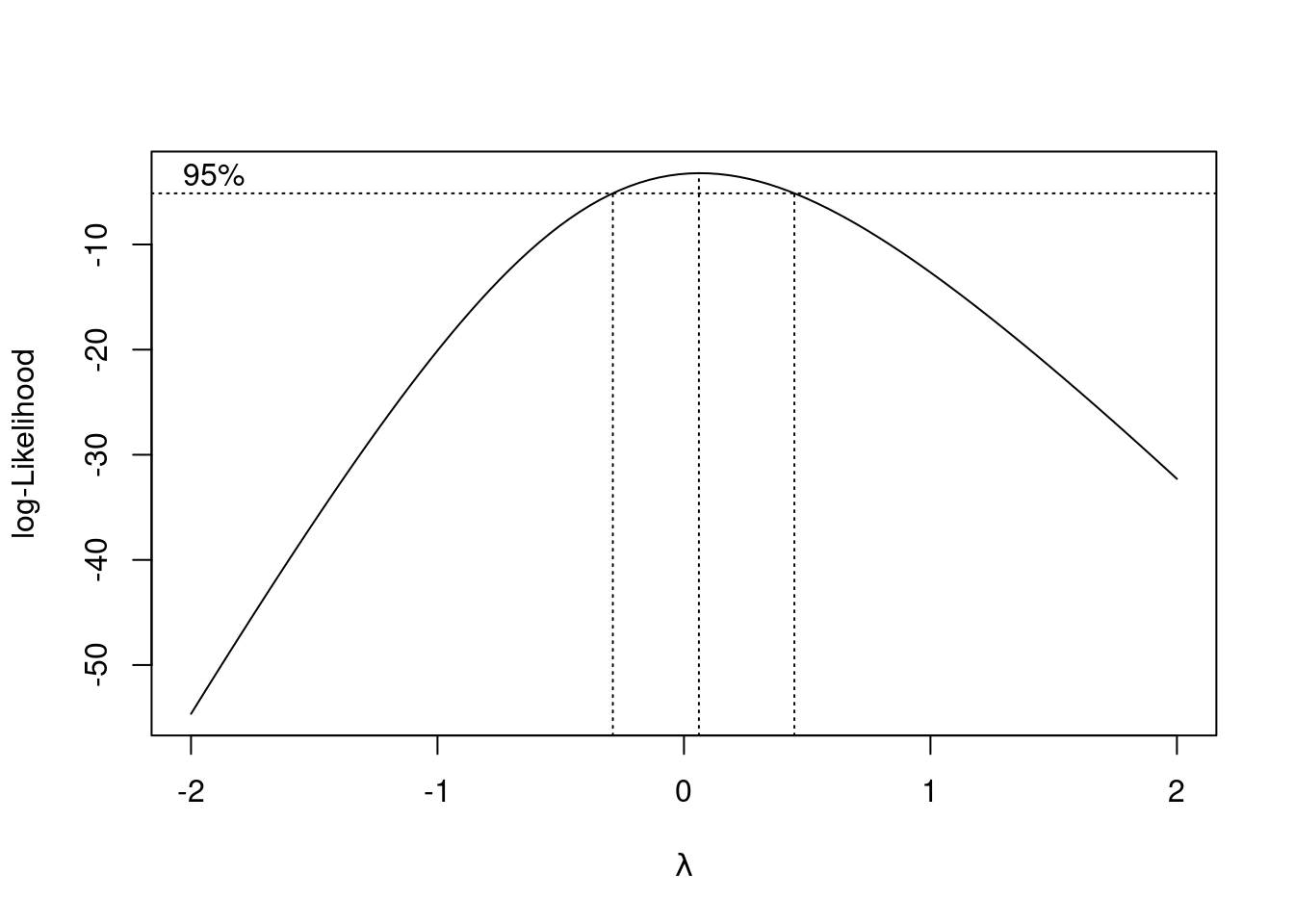

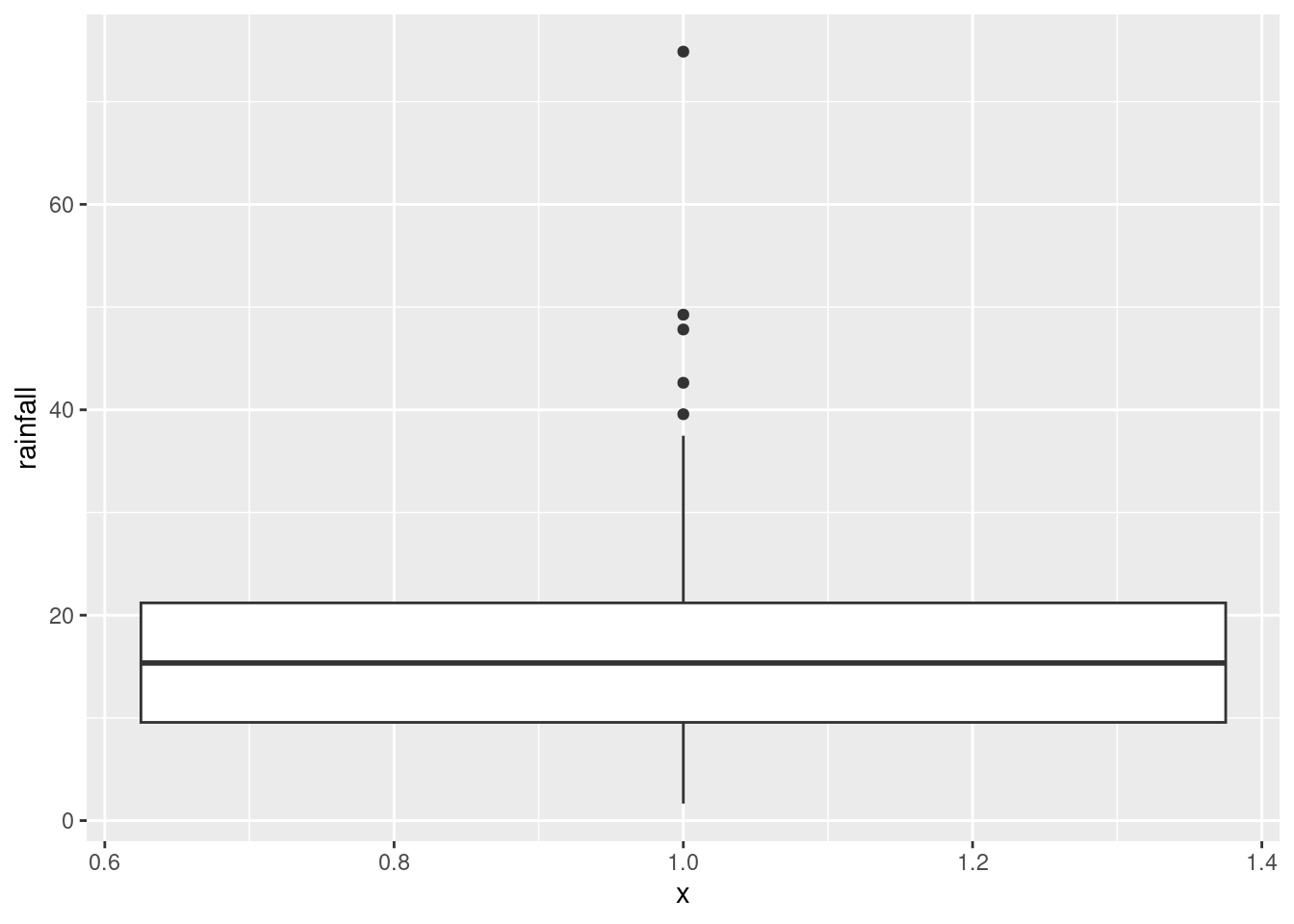

- Make a boxplot of the rainfall figures, and explain why the

values are reasonable. (A rainfall cannot be negative, and it is

unusual for a annual rainfall to exceed 60 inches.) A

ggplotboxplot needs something on the \(x\)-axis: the number 1 will do.

Solution

ggplot(rains,aes(y=rainfall,x=1))+geom_boxplot()

There is only one rainfall over 60 inches, and the smallest one is close to zero but positive, so that is good.

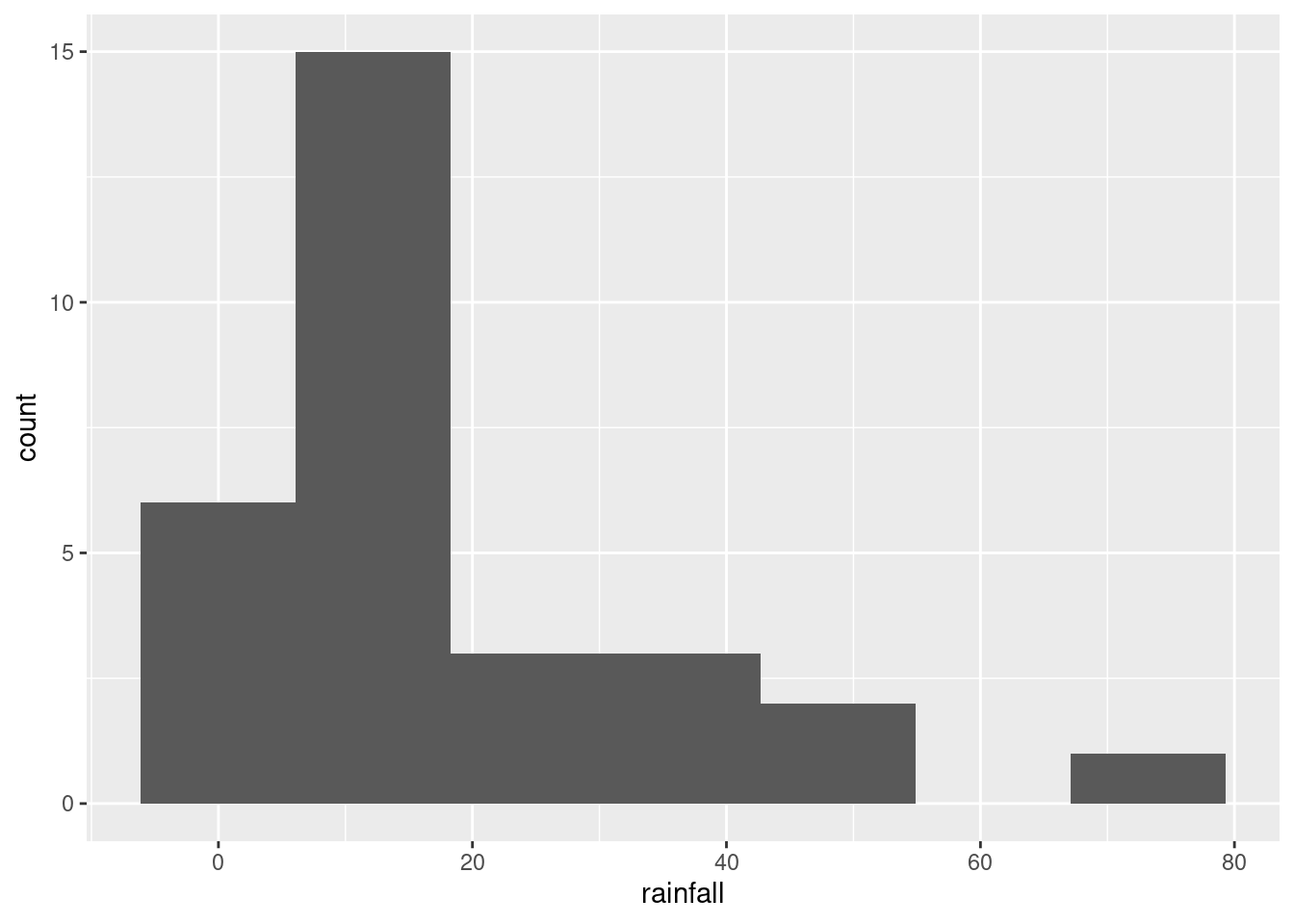

Another possible plot here is a histogram, since there is only one quantitative variable:

ggplot(rains, aes(x=rainfall))+geom_histogram(bins=7)

This clearly shows the rainfall value above 60 inches, but some other things are less clear: are those two rainfall values around 50 inches above or below 50, and are those six rainfall values near zero actually above zero? Extra: What stations have those extreme values? Should you wish to find out:

rains %>% filter(rainfall>60)## # A tibble: 1 × 5

## station rainfall altitude latitude fromcoast

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 CrescentCity 74.9 35 41.7 1This is a place right on the Pacific coast, almost up into Oregon (it’s almost the northernmost of all the stations). So it makes sense that it would have a high rainfall, if anywhere does. (If you know anything about rainy places, you’ll probably think of Vancouver and Seattle, in the Pacific Northwest.) Here it is: link. Which station has less than 2 inches of annual rainfall?

rains %>% filter(rainfall<2) ## # A tibble: 1 × 5

## station rainfall altitude latitude fromcoast

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 DeathValley 1.66 -178 36.5 194The name of the station is a clue: this one is in the desert. So you’d expect very little rain. Its altitude is negative, so it’s actually below sea level. This is correct. Here is where it is: link.

\(\blacksquare\)

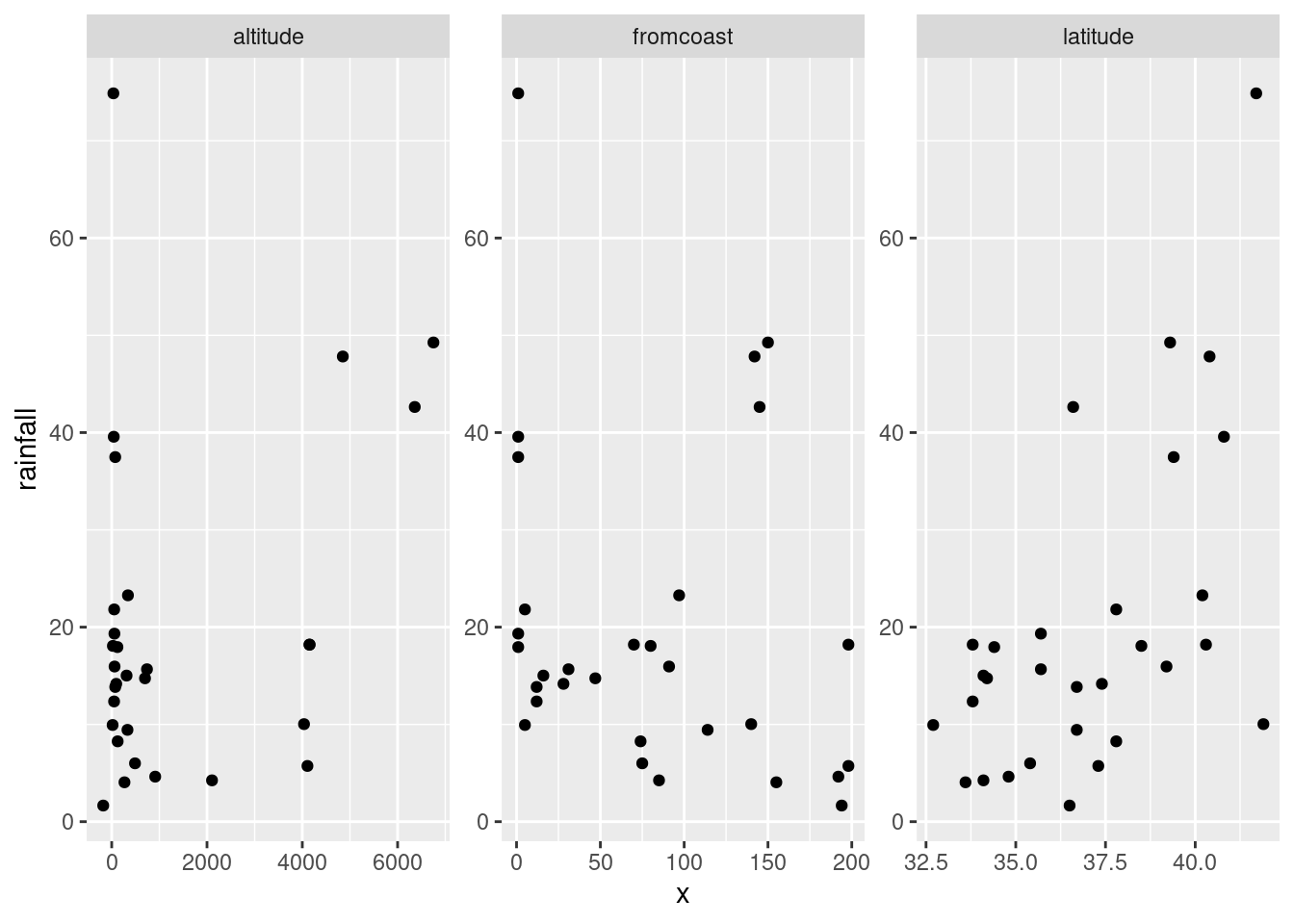

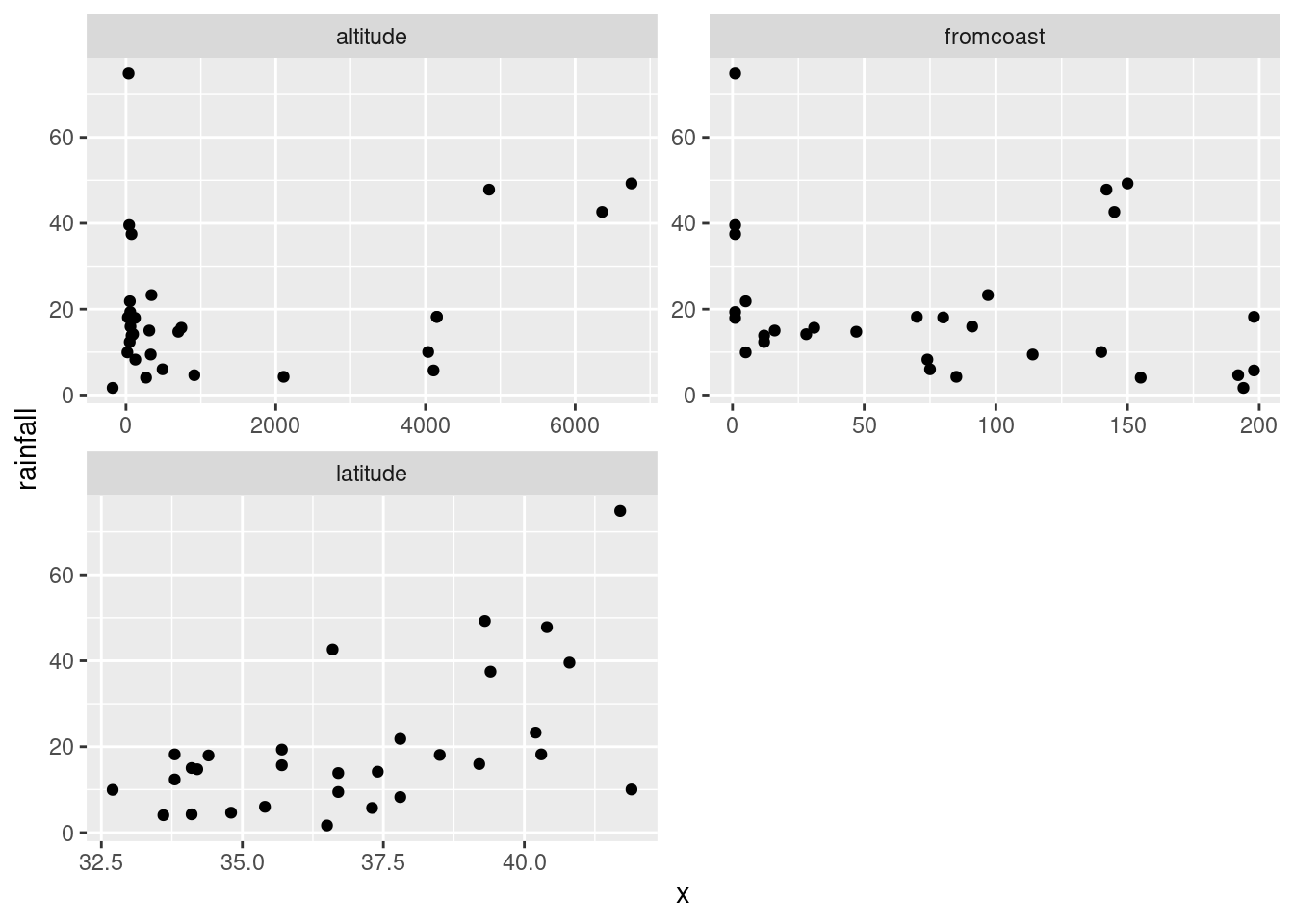

- Plot

rainfallagainst each of the other quantitative variables (that is, notstation).

Solution

That is, altitude, latitude and

fromcoast. The obvious way to do this (perfectly

acceptable) is one plot at a time:

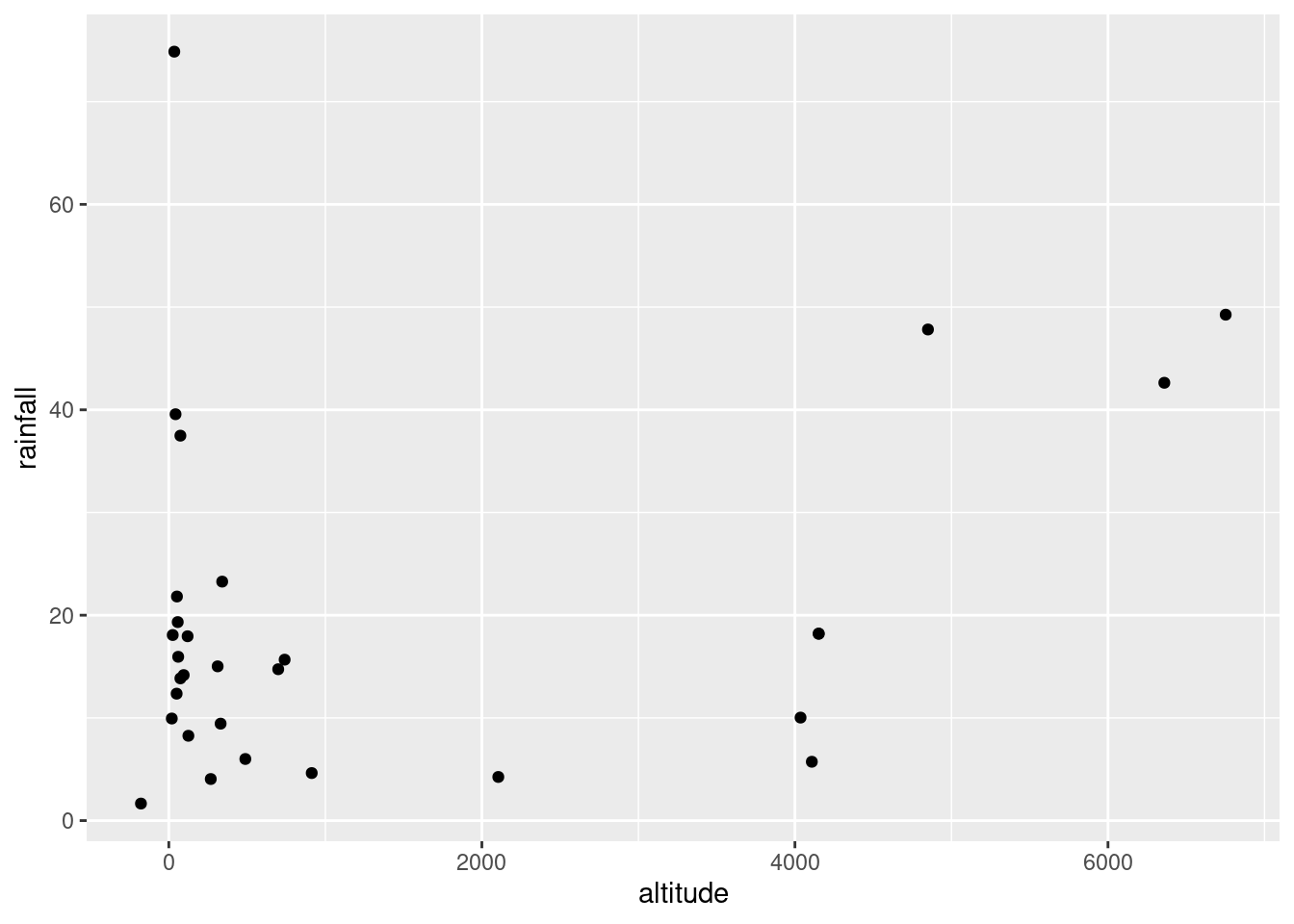

ggplot(rains,aes(y=rainfall,x=altitude))+geom_point()

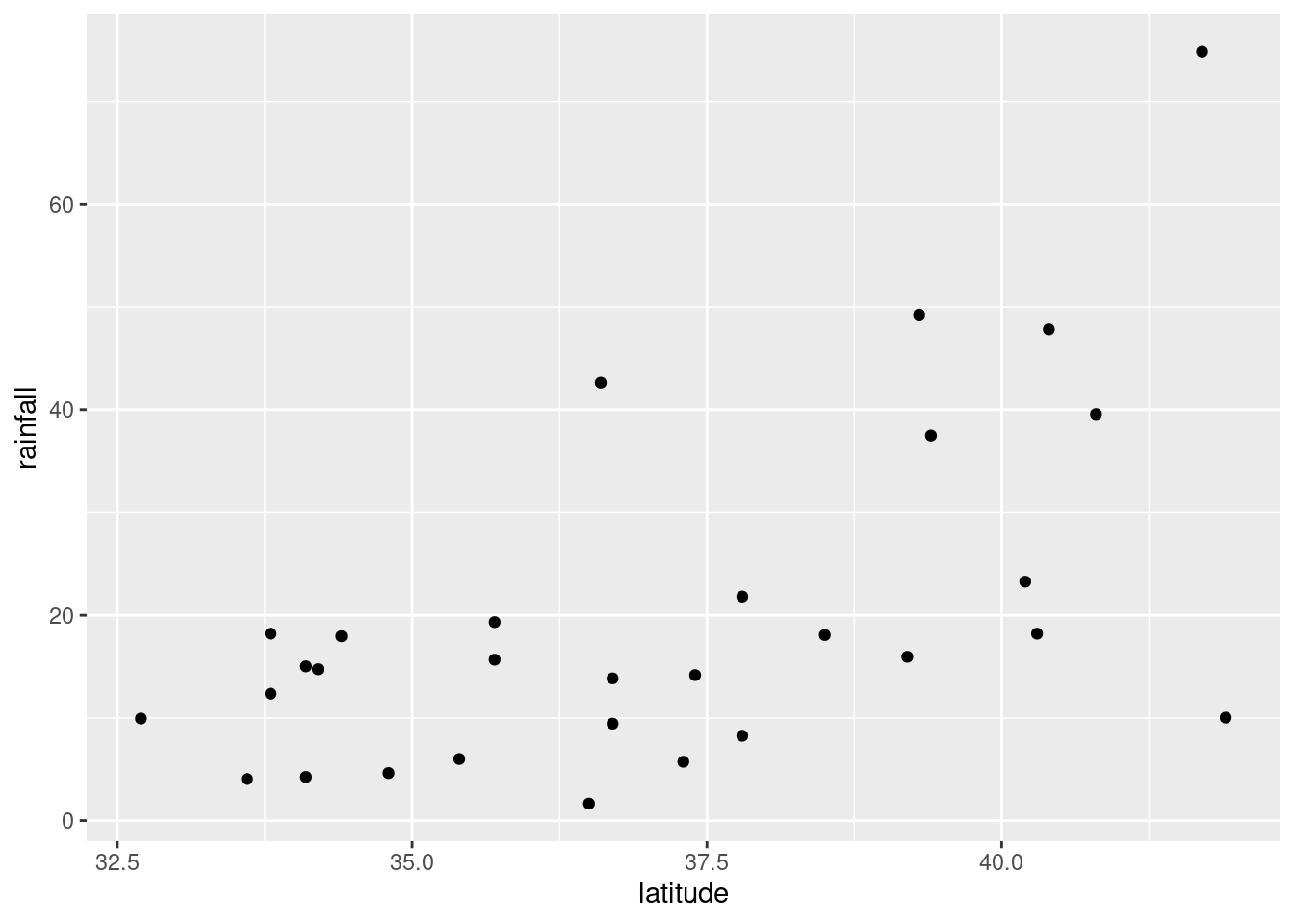

ggplot(rains,aes(y=rainfall,x=latitude))+geom_point()

and finally

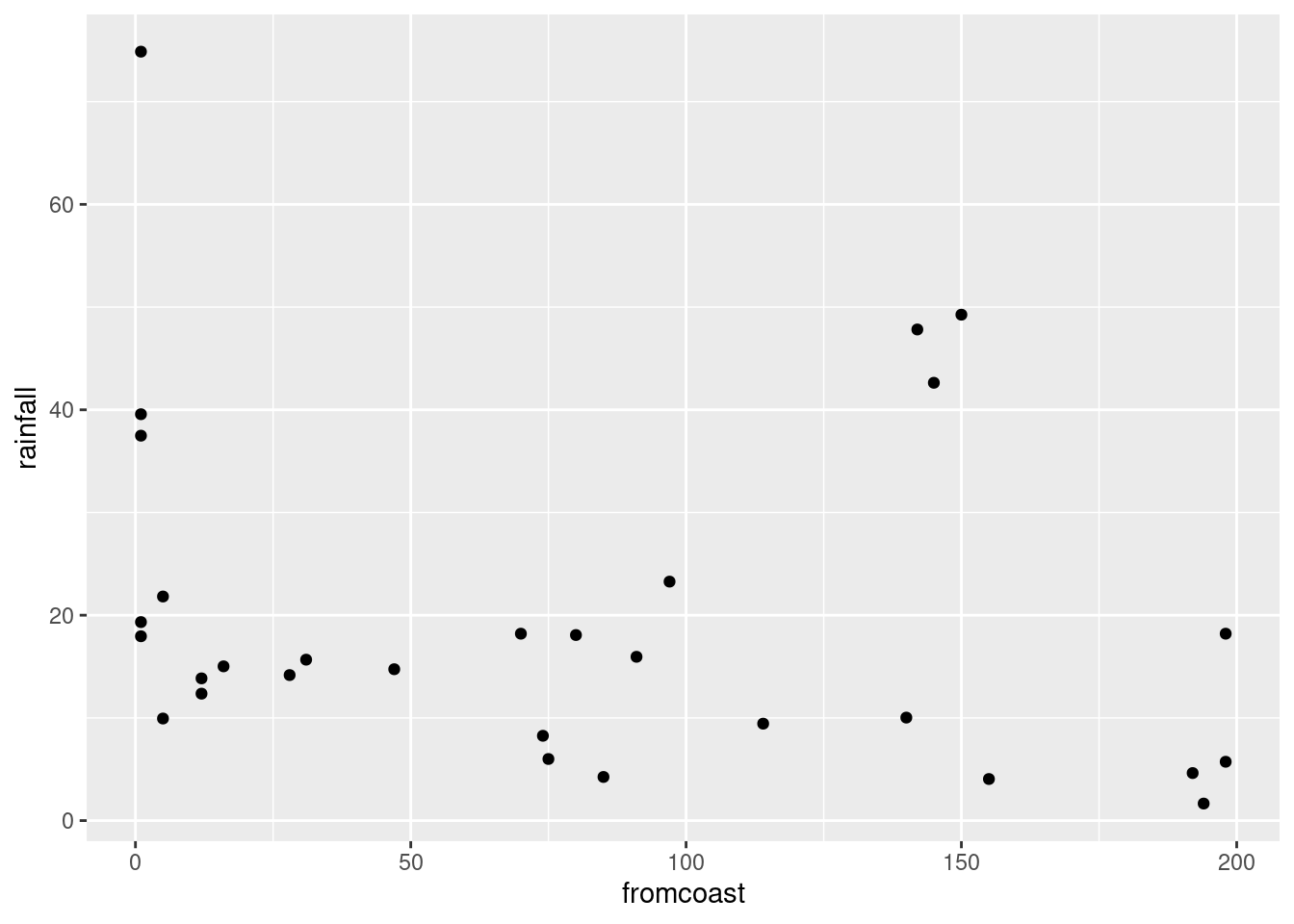

ggplot(rains,aes(y=rainfall,x=fromcoast))+geom_point()

You can add a smooth trend to these if you want. Up to you. Just the points is fine with me.

Here is a funky way to get all three plots in one shot:

rains %>%

pivot_longer(altitude:fromcoast, names_to="xname",values_to="x") %>%

ggplot(aes(x=x,y=rainfall))+geom_point()+

facet_wrap(~xname,scales="free")

This always seems extraordinarily strange if you haven’t run into it before. The strategy is to put all the \(x\)-variables you want to plot into one column and then plot your \(y\) against the \(x\)-column. Thus: make a column of all the \(x\)’s glued together, labelled by which \(x\) they are, then plot \(y\) against \(x\) but make a different sub-plot or “facet” for each different \(x\)-name. The last thing is that each \(x\) is measured on a different scale, and unless we take steps, all the sub-plots will have the same scale on each axis, which we don’t want.

I’m not sure I like how it came out, with three very tall

plots. facet_wrap can also take an nrow or an

ncol, which tells it how many rows or columns to use for the

display. Here, for example, two columns because I thought three was

too many:

rains %>%

pivot_longer(altitude:fromcoast, names_to="xname",values_to="x") %>%

ggplot(aes(x=x,y=rainfall))+geom_point()+

facet_wrap(~xname,scales="free",ncol=2)

Now, the three plots have come out about square, or at least “landscape”, which I like a lot better.

\(\blacksquare\)

- Look at the relationship of each other variable with

rainfall. Justify the assertion thatlatitudeseems most strongly related withrainfall. Is that relationship positive or negative? linear? Explain briefly.

Solution

Let’s look at the three variables in turn:

altitude: not much of anything. The stations near sea level have rainfall all over the place, though the three highest-altitude stations have the three highest rainfalls apart from Crescent City.latitude: there is a definite upward trend here, in that stations further north (higher latitude) are likely to have a higher rainfall. I’d call this trend linear (or, not obviously curved), though the two most northerly stations have one higher and one much lower rainfall than you’d expect.fromcoast: this is a weak downward trend, though the trend is spoiled by those three stations about 150 miles from the coast that have more than 40 inches of rainfall.

Out of those, only latitude seems to have any meaningful

relationship with rainfall.

\(\blacksquare\)

- Fit a regression with

rainfallas the response variable, andlatitudeas your explanatory variable. What are the intercept, slope and R-squared values? Is there a significant relationship betweenrainfalland your explanatory variable? What does that mean?

Solution

Save your lm into a

variable, since it will get used again later:

rainfall.1=lm(rainfall~latitude,data=rains)

summary(rainfall.1)##

## Call:

## lm(formula = rainfall ~ latitude, data = rains)

##

## Residuals:

## Min 1Q Median 3Q Max

## -27.297 -7.956 -2.103 6.082 38.262

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -113.3028 35.7210 -3.172 0.00366 **

## latitude 3.5950 0.9623 3.736 0.00085 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 13.82 on 28 degrees of freedom

## Multiple R-squared: 0.3326, Adjusted R-squared: 0.3088

## F-statistic: 13.96 on 1 and 28 DF, p-value: 0.0008495My intercept is \(-113.3\), slope is \(3.6\) and R-squared is \(0.33\) or 33%. (I want you to pull these numbers out of the output and round them off to something sensible.) The slope is significantly nonzero, its P-value being 0.00085: rainfall really does depend on latitude, although not strongly so.

Extra: Of course, I can easily do the others as well, though you don’t have to:

rainfall.2=lm(rainfall~fromcoast,data=rains)

summary(rainfall.2)##

## Call:

## lm(formula = rainfall ~ fromcoast, data = rains)

##

## Residuals:

## Min 1Q Median 3Q Max

## -15.240 -9.431 -6.603 2.871 51.147

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 23.77306 4.61296 5.154 1.82e-05 ***

## fromcoast -0.05039 0.04431 -1.137 0.265

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 16.54 on 28 degrees of freedom

## Multiple R-squared: 0.04414, Adjusted R-squared: 0.01

## F-statistic: 1.293 on 1 and 28 DF, p-value: 0.2651Here, the intercept is 23.8, the slope is \(-0.05\) and R-squared is a dismal 0.04 (4%). This is a way of seeing that this relationship is really weak, and it doesn’t even have a curve to the trend or anything that would compensate for this. I looked at the scatterplot again and saw that if it were not for the point bottom right which is furthest from the coast and has almost no rainfall, there would be almost no trend at all. The slope here is not significantly different from zero, with a P-value of 0.265.

Finally:

rainfall.3=lm(rainfall~altitude,data=rains)

summary(rainfall.3)##

## Call:

## lm(formula = rainfall ~ altitude, data = rains)

##

## Residuals:

## Min 1Q Median 3Q Max

## -20.620 -8.479 -2.729 4.555 58.271

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 16.514799 3.539141 4.666 6.9e-05 ***

## altitude 0.002394 0.001428 1.676 0.105

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 16.13 on 28 degrees of freedom

## Multiple R-squared: 0.09121, Adjusted R-squared: 0.05875

## F-statistic: 2.81 on 1 and 28 DF, p-value: 0.1048The intercept is 16.5, the slope is 0.002 and the R-squared is 0.09 or 9%, also terrible. The P-value is 0.105, which is not small enough to be significant.

So it looks as if it’s only latitude that

has any impact at all. This is the only explanatory variable with a

significantly nonzero slope. On its own, at least.

\(\blacksquare\)

- Fit a multiple regression predicting

rainfallfrom all three of the other (quantitative) variables. Display the results. Comment is coming up later.

Solution

This, then:

rainfall.4=lm(rainfall~latitude+altitude+fromcoast,data=rains)

summary(rainfall.4)##

## Call:

## lm(formula = rainfall ~ latitude + altitude + fromcoast, data = rains)

##

## Residuals:

## Min 1Q Median 3Q Max

## -28.722 -5.603 -0.531 3.510 33.317

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -1.024e+02 2.921e+01 -3.505 0.001676 **

## latitude 3.451e+00 7.949e-01 4.342 0.000191 ***

## altitude 4.091e-03 1.218e-03 3.358 0.002431 **

## fromcoast -1.429e-01 3.634e-02 -3.931 0.000559 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 11.1 on 26 degrees of freedom

## Multiple R-squared: 0.6003, Adjusted R-squared: 0.5542

## F-statistic: 13.02 on 3 and 26 DF, p-value: 2.205e-05\(\blacksquare\)

- What is the R-squared for the regression of the last part? How does that compare with the R-squared of your regression in part (e)?

Solution

The R-squared is 0.60 (60%), which is quite a bit bigger than the R-squared of 0.33 (33%) we got back in (e).

\(\blacksquare\)

- What do you conclude about the importance of the variables that you did not include in your model in (e)? Explain briefly.

Solution

Both variables altitude and fromcoast are

significant in this regression, so they have something to add over and above latitude when it comes to

predicting rainfall, even though (and this seems odd) they have no

apparent relationship with rainfall on their own.

Another way to say this is that the three variables work together

as a team to predict rainfall, and together they do much better

than any one of them can do by themselves.

This also goes to show that the scatterplots we began

with don’t get to the heart of multi-variable relationships,

because they are only looking at the variables two at a time.

\(\blacksquare\)

- Make a suitable hypothesis test that the variables

altitudeandfromcoastsignificantly improve the prediction ofrainfallover the use oflatitudealone. What do you conclude?

Solution

This calls for anova. Feed this two fitted models,

smaller (fewer explanatory variables) first. The null hypothesis

is that the two models are equally good (so we should go with the

smaller); the alternative is that the larger model is better, so

that the extra complication is worth it:

anova(rainfall.1,rainfall.4) ## Analysis of Variance Table

##

## Model 1: rainfall ~ latitude

## Model 2: rainfall ~ latitude + altitude + fromcoast

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 28 5346.8

## 2 26 3202.3 2 2144.5 8.7057 0.001276 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The P-value is small, so we reject the null in favour of the

alternative: the regression with all three explanatory variables fits

better than the one with just latitude, so the bigger model

is the one we should go with.

If you have studied these things: this one is a “multiple-partial \(F\)-test”, for testing the combined significance of more than one \(x\) but less than all the \(x\)’s.25

\(\blacksquare\)

18.16 Carbon monoxide in cigarettes

The (US) Federal Trade Commission assesses cigarettes according to their tar, nicotine and carbon monoxide contents. In a particular year, 25 brands were assessed. For each brand, the tar, nicotine and carbon monoxide (all in milligrams) were measured, along with the weight in grams. Our aim is to predict carbon monoxide from any or all of the other variables. The data are in link. These are aligned by column (except for the variable names), with more than one space between each column of data.

- Read the data into R, and check that you have 25 observations and 4 variables.

Solution

This specification calls for read_table2:

my_url <- "http://ritsokiguess.site/datafiles/ftccigar.txt"

cigs <- read_table2(my_url)##

## ── Column specification ────────────────────────────────────────────────────────

## cols(

## tar = col_double(),

## nicotine = col_double(),

## weight = col_double(),

## co = col_double()

## )cigs## # A tibble: 25 × 4

## tar nicotine weight co

## <dbl> <dbl> <dbl> <dbl>

## 1 14.1 0.86 0.985 13.6

## 2 16 1.06 1.09 16.6

## 3 29.8 2.03 1.16 23.5

## 4 8 0.67 0.928 10.2

## 5 4.1 0.4 0.946 5.4

## 6 15 1.04 0.888 15

## 7 8.8 0.76 1.03 9

## 8 12.4 0.95 0.922 12.3

## 9 16.6 1.12 0.937 16.3

## 10 14.9 1.02 0.886 15.4

## # … with 15 more rowsYes, I have 25 observations on 4 variables indeed.

read_delim won’t work (try it and see what happens), because

that would require the values to be separated by exactly one space.

\(\blacksquare\)

- Run a regression to predict carbon monoxide from the other variables, and obtain a summary of the output.

Solution

The word “summary” is meant to be a big clue that

summary is what you need:

cigs.1 <- lm(co ~ tar + nicotine + weight, data = cigs)

summary(cigs.1)##

## Call:

## lm(formula = co ~ tar + nicotine + weight, data = cigs)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.89261 -0.78269 0.00428 0.92891 2.45082

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.2022 3.4618 0.925 0.365464

## tar 0.9626 0.2422 3.974 0.000692 ***

## nicotine -2.6317 3.9006 -0.675 0.507234

## weight -0.1305 3.8853 -0.034 0.973527

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.446 on 21 degrees of freedom

## Multiple R-squared: 0.9186, Adjusted R-squared: 0.907

## F-statistic: 78.98 on 3 and 21 DF, p-value: 1.329e-11\(\blacksquare\)

- Which one of your explanatory variables would you remove from this regression? Explain (very) briefly. Go ahead and fit the regression without it, and describe how the change in R-squared from the regression in (b) was entirely predictable.

Solution

First, the \(x\)-variable to remove. The obvious candidate is

weight, since it has easily the highest, and clearly

non-significant, P-value. So, out it comes:

cigs.2 <- lm(co ~ tar + nicotine, data = cigs)

summary(cigs.2)##

## Call:

## lm(formula = co ~ tar + nicotine, data = cigs)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.89941 -0.78470 -0.00144 0.91585 2.43064

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.0896 0.8438 3.662 0.001371 **

## tar 0.9625 0.2367 4.067 0.000512 ***

## nicotine -2.6463 3.7872 -0.699 0.492035

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.413 on 22 degrees of freedom

## Multiple R-squared: 0.9186, Adjusted R-squared: 0.9112

## F-statistic: 124.1 on 2 and 22 DF, p-value: 1.042e-12R-squared has dropped from 0.9186 to 0.9186! That is, taking

out weight has not just had a minimal effect on R-squared;

it’s not changed R-squared at all. This is because weight was

so far from being significant: it literally had nothing to add.

Another way of achieving the same thing is via the function

update, which takes a fitted model object and describes the

change that you want to make:

cigs.2a <- update(cigs.1, . ~ . - weight)

summary(cigs.2a)##

## Call:

## lm(formula = co ~ tar + nicotine, data = cigs)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.89941 -0.78470 -0.00144 0.91585 2.43064

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.0896 0.8438 3.662 0.001371 **

## tar 0.9625 0.2367 4.067 0.000512 ***

## nicotine -2.6463 3.7872 -0.699 0.492035

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.413 on 22 degrees of freedom

## Multiple R-squared: 0.9186, Adjusted R-squared: 0.9112

## F-statistic: 124.1 on 2 and 22 DF, p-value: 1.042e-12This can be shorter than describing the whole model again, as you do

with the cigs.2 version of lm. The syntax is that

you first specify a “base” fitted model object that you’re going to

update. Because the model cigs.1 contains all the information

about the kind of model it is, and which data frame the data come

from, R already knows that this is a linear

multiple regression and which \(x\)’s it contains. The second thing to describe is the change from

the “base”. In this case, we want to use the same response variable

and all the same explanatory variables that we had before, except for

weight. This is specified by a special kind of model formula

where . means “whatever was there before”: in English,

“same response and same explanatories except take out weight”.

\(\blacksquare\)

- Fit a regression predicting carbon monoxide from

nicotineonly, and display the summary.

Solution

As you would guess:

cigs.3 <- lm(co ~ nicotine, data = cigs)

summary(cigs.3)##

## Call:

## lm(formula = co ~ nicotine, data = cigs)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.3273 -1.2228 0.2304 1.2700 3.9357

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1.6647 0.9936 1.675 0.107

## nicotine 12.3954 1.0542 11.759 3.31e-11 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.828 on 23 degrees of freedom

## Multiple R-squared: 0.8574, Adjusted R-squared: 0.8512

## F-statistic: 138.3 on 1 and 23 DF, p-value: 3.312e-11\(\blacksquare\)

nicotinewas far from being significant in the model of (c), and yet in the model of (d), it was strongly significant, and the R-squared value of (d) was almost as high as that of (c). What does this say about the importance ofnicotineas an explanatory variable? Explain, as briefly as you can manage.

Solution

What this says is that you cannot say anything about the

“importance” of nicotine without also describing the

context that you’re talking about. By itself,

nicotine is important, but when you have

tar in the model, nicotine is not

important: precisely, it now has nothing to add over and above

the predictive value that tar has. You might guess that

this is because tar and nicotine are

“saying the same thing” in some fashion.

We’ll explore that in a moment.

\(\blacksquare\)

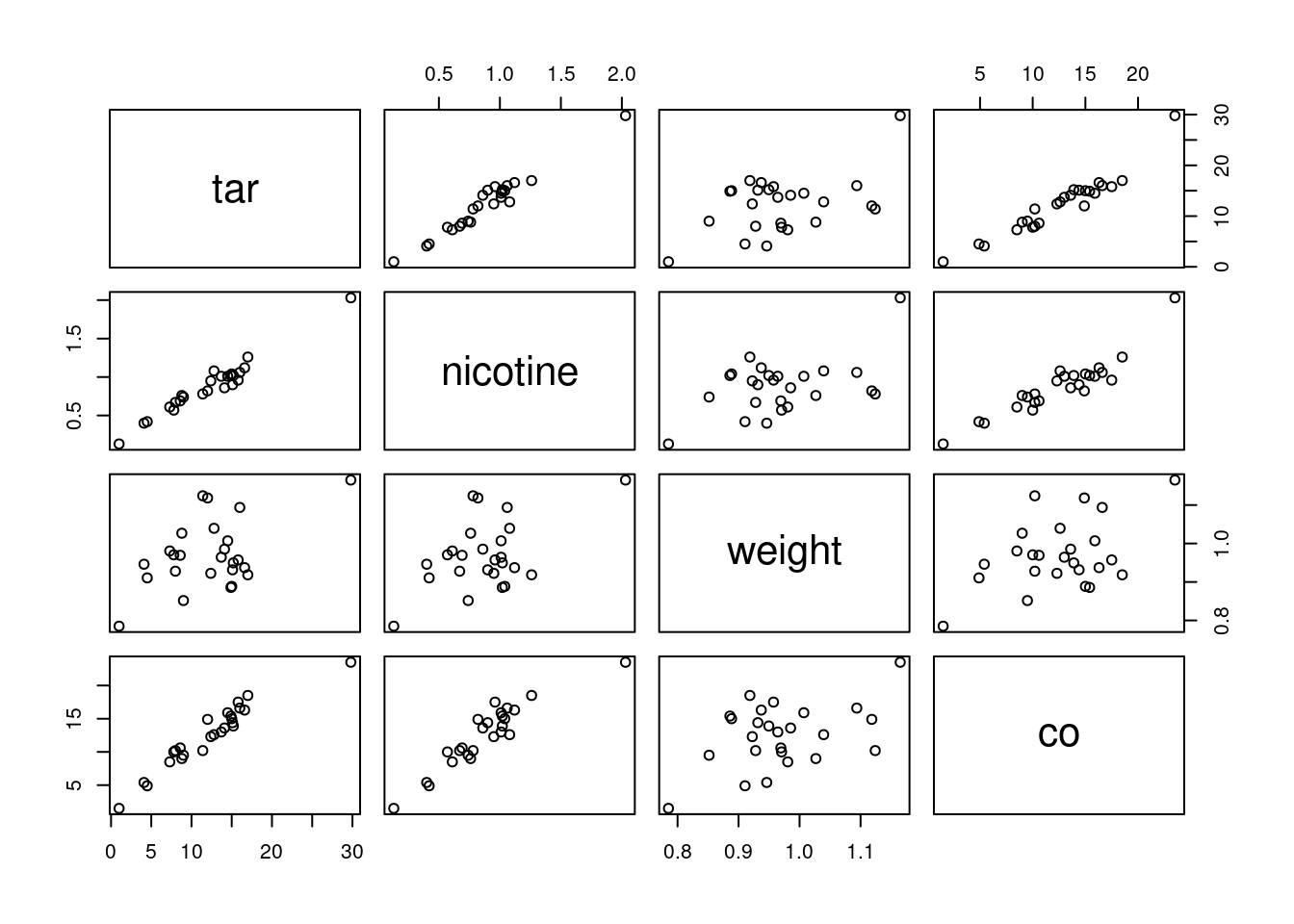

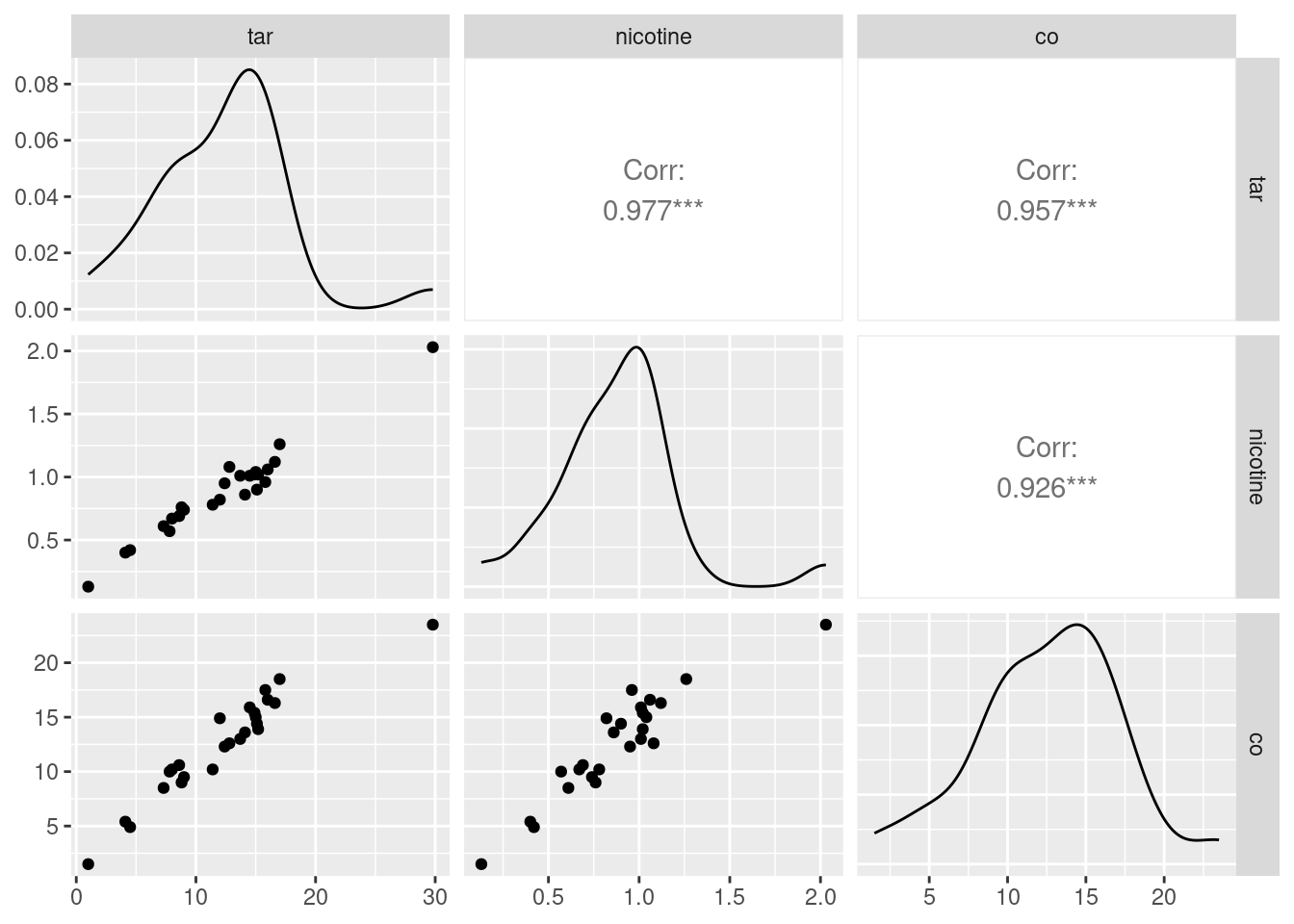

- Make a “pairs plot”: that is, scatter plots between all

pairs of variables. This can be done by feeding the whole data frame

into

plot.26 Do you see any strong relationships that do not includeco? Does that shed any light on the last part? Explain briefly (or “at length” if that’s how it comes out).

Solution

Plot the entire data frame:

plot(cigs)

We’re supposed to ignore co, but I comment that strong

relationships between co and both of tar and

nicotine show up here, along with weight being

at most weakly related to anything else.

That leaves the relationship of tar and nicotine

with each other. That also looks like a strong linear trend. When you

have correlations between explanatory variables, it is called

“multicollinearity”.

Having correlated \(x\)’s is

trouble. Here is where we find out why. The problem is that when

co is large, nicotine is large, and a large value of

tar will come along with it. So we don’t know whether a large

value of co is caused by a large value of tar or a

large value of nicotine: there is no way to separate out

their effects because in effect they are “glued together”.

You might know of this effect (in an experimental design context) as

“confounding”: the effect of tar on co is

confounded with the effect of nicotine on co, and

you can’t tell which one deserves the credit for predicting co.

If you were able to design an experiment here, you could (in

principle) manufacture a bunch of cigarettes with high tar; some of

them would have high nicotine and some would have low. Likewise for

low tar. Then the

correlation between nicotine and tar would go away,

their effects on co would no longer be confounded, and you

could see unambiguously which one of the variables deserves credit for

predicting co. Or maybe it depends on both, genuinely, but at

least then you’d know.

We, however, have an observational study, so we have to make do with the data we have. Confounding is one of the risks we take when we work with observational data.

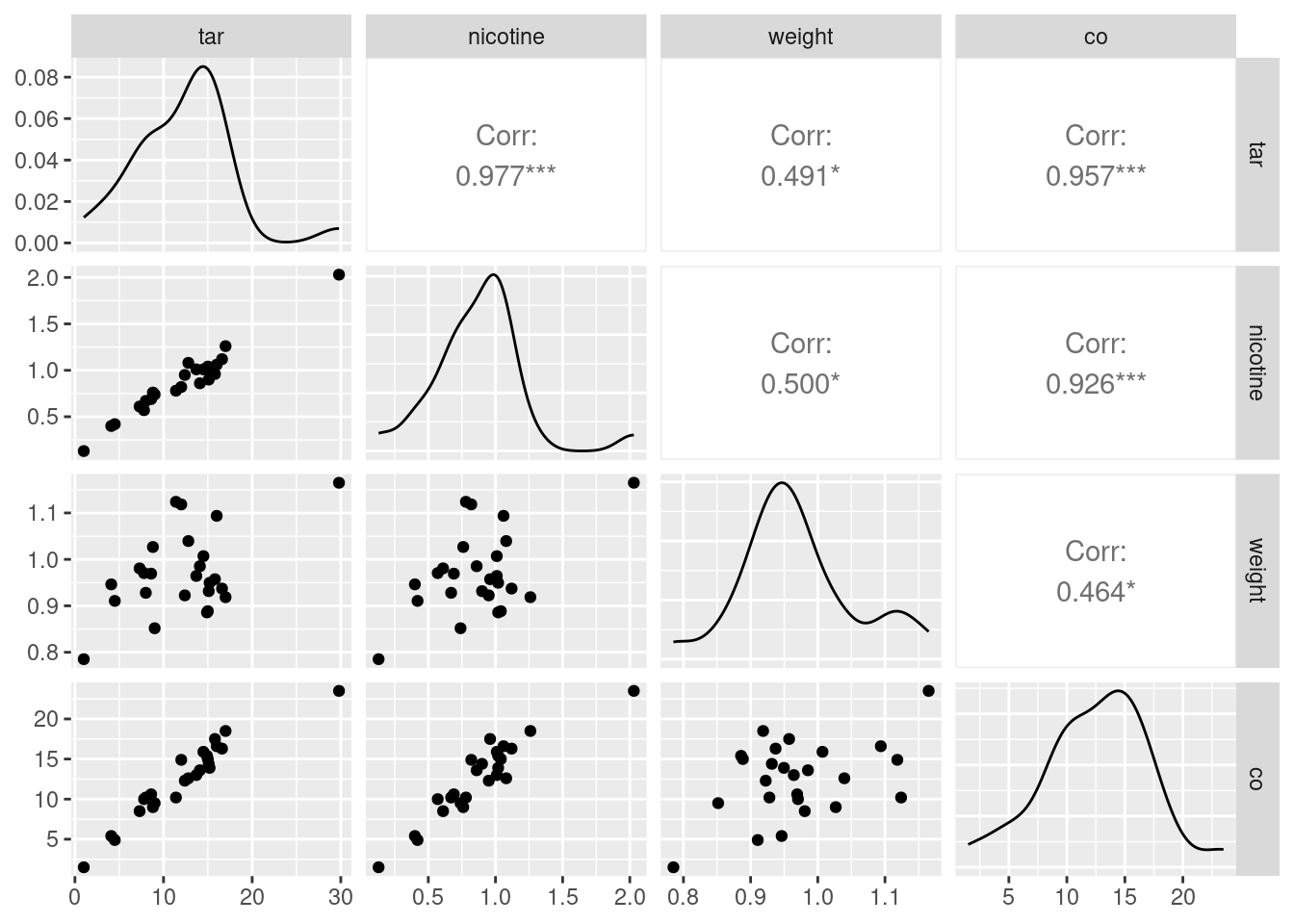

This was a “base graphics” plot. There is a way of doing a

ggplot-style “pairs plot”, as this is called, thus:

library(GGally)## Registered S3 method overwritten by 'GGally':

## method from

## +.gg ggplot2cigs %>% ggpairs(progress = FALSE)

As ever, install.packages first, in the likely event that you

don’t have this package installed yet. Once you do, though, I think

this is a nicer way to get a pairs plot.

This plot is a bit more sophisticated: instead of just having the

scatterplots of the pairs of variables in the row and column, it uses

the diagonal to show a “kernel density” (a smoothed-out histogram),

and upper-right it shows the correlation between each pair of

variables. The three correlations between co, tar

and nicotine are clearly the highest.

If you want only some of the columns to appear in your pairs plot,

select them first, and then pass that data frame into

ggpairs. Here, we found that weight was not

correlated with anything much, so we can take it out and then make a

pairs plot of the other variables:

cigs %>% select(-weight) %>% ggpairs(progress = FALSE)

The three correlations that remain are all very high, which is entirely consistent with the strong linear relationships that you see bottom left.

\(\blacksquare\)

18.17 Maximal oxygen uptake in young boys

A physiologist wanted to understand the relationship between physical characteristics of pre-adolescent boys and their maximal oxygen uptake (millilitres of oxygen per kilogram of body weight). The data are in link for a random sample of 10 pre-adolescent boys. The variables are (with units):

uptake: Oxygen uptake (millitres of oxygen per kilogram of body weight)age: boy’s age (years)height: boy’s height (cm)weight: boy’s weight (kg)chest: chest depth (cm).

- Read the data into R and confirm that you do indeed have 10 observations.

Solution

my_url <- "http://ritsokiguess.site/datafiles/youngboys.txt"

boys <- read_delim(my_url, " ")## Rows: 10 Columns: 5

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: " "

## dbl (5): uptake, age, height, weight, chest

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.boys## # A tibble: 10 × 5

## uptake age height weight chest

## <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1.54 8.4 132 29.1 14.4

## 2 1.74 8.7 136. 29.7 14.5

## 3 1.32 8.9 128. 28.4 14

## 4 1.5 9.9 131. 28.8 14.2

## 5 1.46 9 130 25.9 13.6

## 6 1.35 7.7 128. 27.6 13.9

## 7 1.53 7.3 130. 29 14

## 8 1.71 9.9 138. 33.6 14.6

## 9 1.27 9.3 127. 27.7 13.9

## 10 1.5 8.1 132. 30.8 14.510 boys (rows) indeed.

\(\blacksquare\)

- Fit a regression predicting oxygen uptake from all the other variables, and display the results.

Solution

Fitting four explanatory variables with only ten observations is likely to be pretty shaky, but we press ahead regardless:

boys.1 <- lm(uptake ~ age + height + weight + chest, data = boys)

summary(boys.1)##

## Call:

## lm(formula = uptake ~ age + height + weight + chest, data = boys)

##

## Residuals:

## 1 2 3 4 5 6 7 8

## -0.020697 0.019741 -0.003649 0.038470 -0.023639 -0.026026 0.050459 -0.014380

## 9 10

## 0.004294 -0.024573

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -4.774739 0.862818 -5.534 0.002643 **

## age -0.035214 0.015386 -2.289 0.070769 .

## height 0.051637 0.006215 8.308 0.000413 ***

## weight -0.023417 0.013428 -1.744 0.141640

## chest 0.034489 0.085239 0.405 0.702490

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.03721 on 5 degrees of freedom

## Multiple R-squared: 0.9675, Adjusted R-squared: 0.9415

## F-statistic: 37.2 on 4 and 5 DF, p-value: 0.0006513\(\blacksquare\)

- (A one-mark question.) Would you say, on the evidence so far, that the regression fits well or badly? Explain (very) briefly.

Solution

R-squared of 0.97 (97%) is very high, so I’d say this regression fits very well. That’s all. I said “on the evidence so far” to dissuade you from overthinking this, or thinking that you needed to produce some more evidence. That, plus the fact that this was only one mark.

\(\blacksquare\)

- It seems reasonable that an older boy should have a greater oxygen uptake, all else being equal. Is this supported by your output? Explain briefly.

Solution

If an older boy has greater oxygen uptake (the “all else equal” was a hint),

the slope of age should be

positive. It is not: it is \(-0.035\), so it is suggesting

(all else equal) that a greater age goes with a

smaller oxygen uptake.

The reason why this happens (which you didn’t need, but

you can include it if you like) is that age has a

non-small P-value of 0.07, so that the age slope

is not significantly different from zero. With all the

other variables, age has nothing to add

over and above them, and we could therefore remove it.

\(\blacksquare\)

- It seems reasonable that a boy with larger weight should have larger lungs and thus a statistically significantly larger oxygen uptake. Is that what happens here? Explain briefly.

Solution

Look at the P-value for weight. This is 0.14,

not small, and so a boy with larger weight does not have

a significantly larger oxygen uptake, all else

equal. (The slope for weight is not

significantly different from zero either.)

I emphasized “statistically significant” to remind you

that this means to do a test and get a P-value.

\(\blacksquare\)

- Fit a model that contains only the significant explanatory variables from your first regression. How do the R-squared values from the two regressions compare? (The last sentence asks for more or less the same thing as the next part. Answer it either here or there. Either place is good.)

Solution

Only height is significant, so that’s the

only explanatory variable we need to keep. I would

just do the regression straight rather than using

update here:

boys.2 <- lm(uptake ~ height, data = boys)

summary(boys.2)##

## Call:

## lm(formula = uptake ~ height, data = boys)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.069879 -0.033144 0.001407 0.009581 0.084012

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.843326 0.609198 -6.309 0.000231 ***

## height 0.040718 0.004648 8.761 2.26e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.05013 on 8 degrees of freedom

## Multiple R-squared: 0.9056, Adjusted R-squared: 0.8938

## F-statistic: 76.75 on 1 and 8 DF, p-value: 2.258e-05If you want, you can use update here, which looks like this:

boys.2a <- update(boys.1, . ~ . - age - weight - chest)

summary(boys.2a)##

## Call:

## lm(formula = uptake ~ height, data = boys)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.069879 -0.033144 0.001407 0.009581 0.084012

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.843326 0.609198 -6.309 0.000231 ***

## height 0.040718 0.004648 8.761 2.26e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.05013 on 8 degrees of freedom

## Multiple R-squared: 0.9056, Adjusted R-squared: 0.8938

## F-statistic: 76.75 on 1 and 8 DF, p-value: 2.258e-05This doesn’t go quite so smoothly here because there are three variables being removed, and it’s a bit of work to type them all.

\(\blacksquare\)

- How has R-squared changed between your two regressions? Describe what you see in a few words.

Solution

R-squared has dropped by a bit, from 97% to 91%. (Make your own call: pull out the two R-squared numbers, and say a word or two about how they compare. I don’t much mind what you say: “R-squared has decreased (noticeably)”, “R-squared has hardly changed”. But say something.)

\(\blacksquare\)

- Carry out a test comparing the fit of your two regression models. What do you conclude, and therefore what recommendation would you make about the regression that would be preferred?

Solution

The word “test” again implies something that produces a P-value with a

null hypothesis that you might reject. In this case, the test that

compares two models differing by more than one \(x\) uses

anova, testing the null hypothesis that the two regressions

are equally good, against the alternative that the bigger (first) one

is better. Feed anova two fitted model objects, smaller first:

anova(boys.2, boys.1)## Analysis of Variance Table

##

## Model 1: uptake ~ height

## Model 2: uptake ~ age + height + weight + chest

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 8 0.0201016

## 2 5 0.0069226 3 0.013179 3.1729 0.123This P-value of 0.123 is not small, so we do not reject the null

hypothesis. There is not a significant difference in fit between the

two models. Therefore, we should go with the smaller model

boys.2 because it is simpler.

That drop in R-squared from 97% to 91% was, it turns out, not significant: the three extra variables could have produced a change in R-squared like that, even if they were worthless.27

If you have learned about “adjusted R-squared”, you might recall

that this is supposed to go down only if the variables you took

out should not have been taken out. But adjusted R-squared goes down

here as well, from 94% to 89% (not quite as much, therefore). What

happens is that adjusted R-squared is rather more relaxed about

keeping variables than the anova \(F\)-test is; if we had used

an \(\alpha\) of something like 0.10, the decision between the two

models would have been a lot closer, and this is reflected in the

adjusted R-squared values.

\(\blacksquare\)

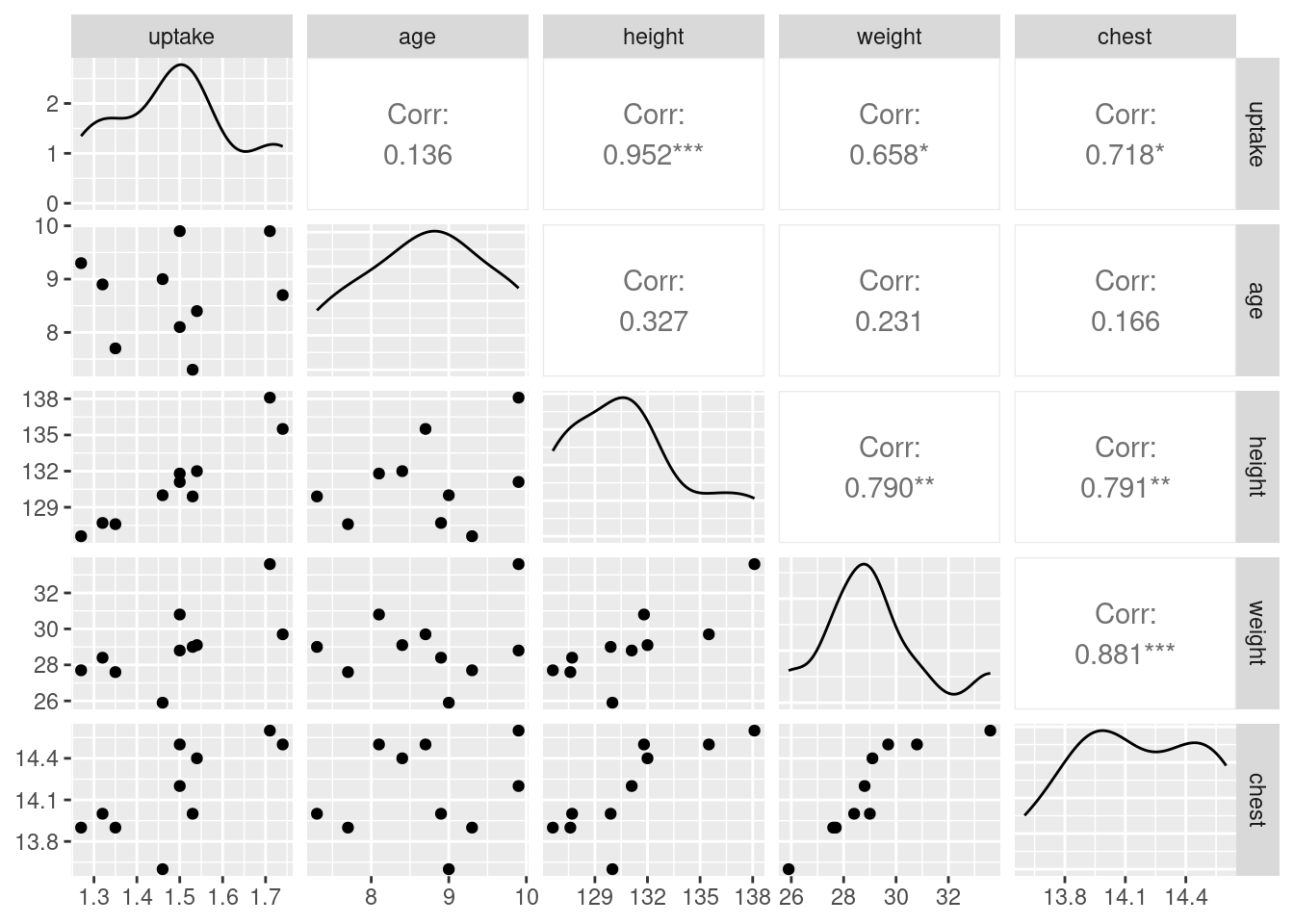

- Obtain a table of correlations between all

the variables in the data frame. Do this by feeding

the whole data frame into

cor. We found that a regression predicting oxygen uptake from justheightwas acceptably good. What does your table of correlations say about why that is? (Hint: look for all the correlations that are large.)

Solution

Correlations first:

cor(boys)## uptake age height weight chest

## uptake 1.0000000 0.1361907 0.9516347 0.6576883 0.7182659

## age 0.1361907 1.0000000 0.3274830 0.2307403 0.1657523

## height 0.9516347 0.3274830 1.0000000 0.7898252 0.7909452

## weight 0.6576883 0.2307403 0.7898252 1.0000000 0.8809605

## chest 0.7182659 0.1657523 0.7909452 0.8809605 1.0000000The correlations with age are all on the low side, but all

the other correlations are high, not just between uptake and the

other variables, but between the explanatory variables as well.

Why is this helpful in understanding what’s going on? Well, imagine a

boy with large height (a tall one). The regression boys.2

says that this alone is enough to predict that such a boy’s oxygen

uptake is likely to be large, since the slope is positive. But the

correlations tell you more: a boy with large height is also (somewhat)

likely to be older (have large age), heavier (large weight) and to have

larger chest cavity. So oxygen uptake does depend on those other

variables as well, but once you know height you can make a

good guess at their values; you don’t need to know them.

Further remarks: age has a low correlation with

uptake, so its non-significance earlier appears to be

“real”: it really does have nothing extra to say, because the other

variables have a stronger link with uptake than

age. Height, however, seems to be the best way of relating

oxygen uptake to any of the other variables. I think the suppositions

from earlier about relating oxygen uptake to “bigness”28 in some sense

are actually sound, but age and weight and chest capture

“bigness” worse than height does. Later, when you learn about

Principal Components, you will see that the first principal component,

the one that best captures how the variables vary together, is often

“bigness” in some sense.

Another way to think about these things is via pairwise

scatterplots. The nicest way to produce these is via ggpairs

from package GGally:

boys %>% ggpairs(progress = FALSE)

A final remark: with five variables, we really ought to have more than ten observations (something like 50 would be better). But with more observations and the same correlation structure, the same issues would come up again, so the question would not be materially changed.

\(\blacksquare\)

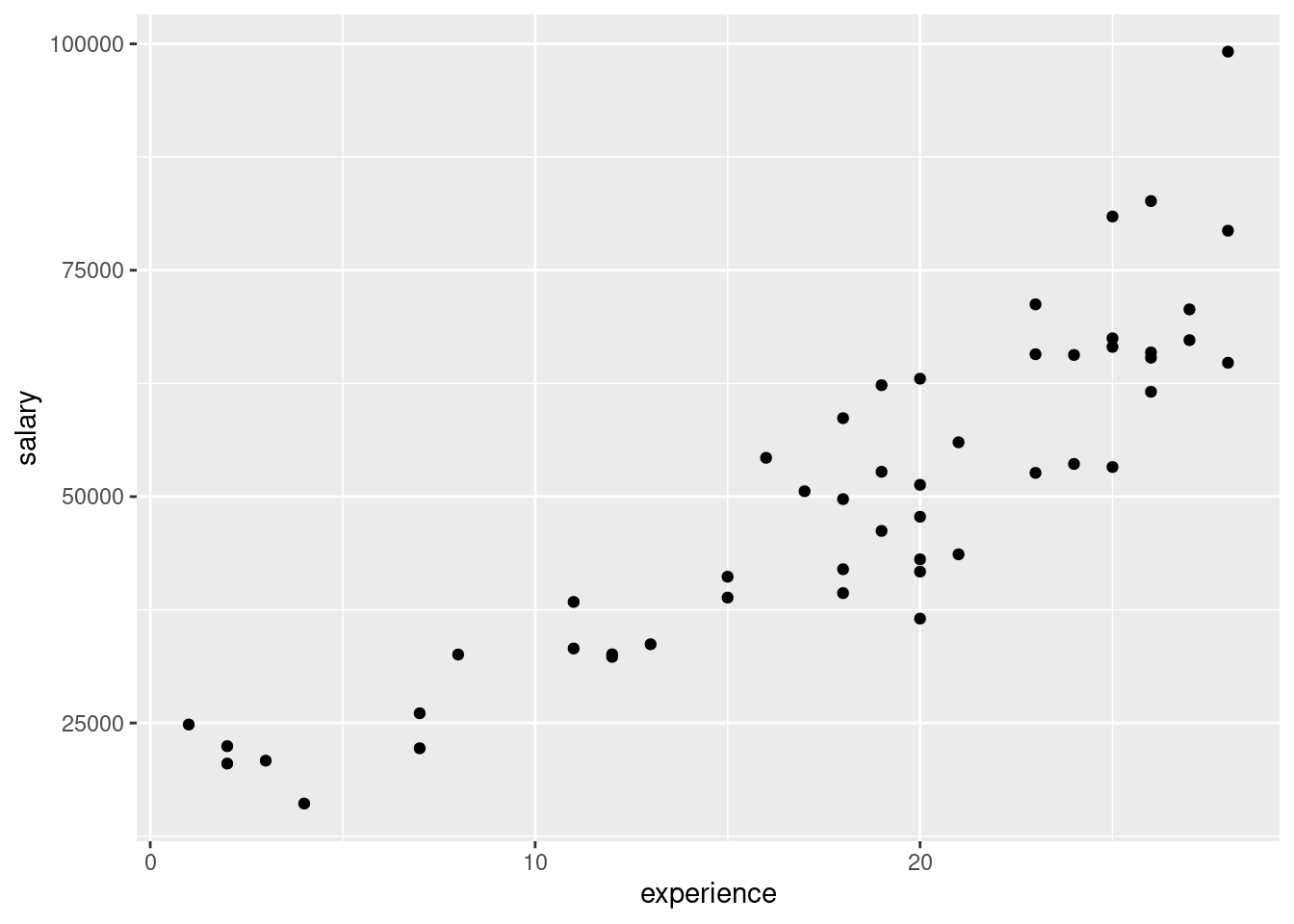

18.18 Facebook friends and grey matter

Is there a relationship between the number of Facebook friends a person has, and the density of grey matter in the areas of the brain associated with social perception and associative memory? To find out, a 2012 study measured both of these variables for a sample of 40 students at City University in London (England). The data are at link. The grey matter density is on a \(z\)-score standardized scale. The values are separated by tabs.

The aim of this question is to produce an R Markdown report that contains your answers to the questions below.

You should aim to make your report flow smoothly, so that it would be pleasant for a grader to read, and can stand on its own as an analysis (rather than just being the answer to a question that I set you). Some suggestions: give your report a title and arrange it into sections with an Introduction; add a small amount of additional text here and there explaining what you are doing and why. I don’t expect you to spend a large amount of time on this, but I do hope you will make some effort. (My report came out to 4 Word pages.)

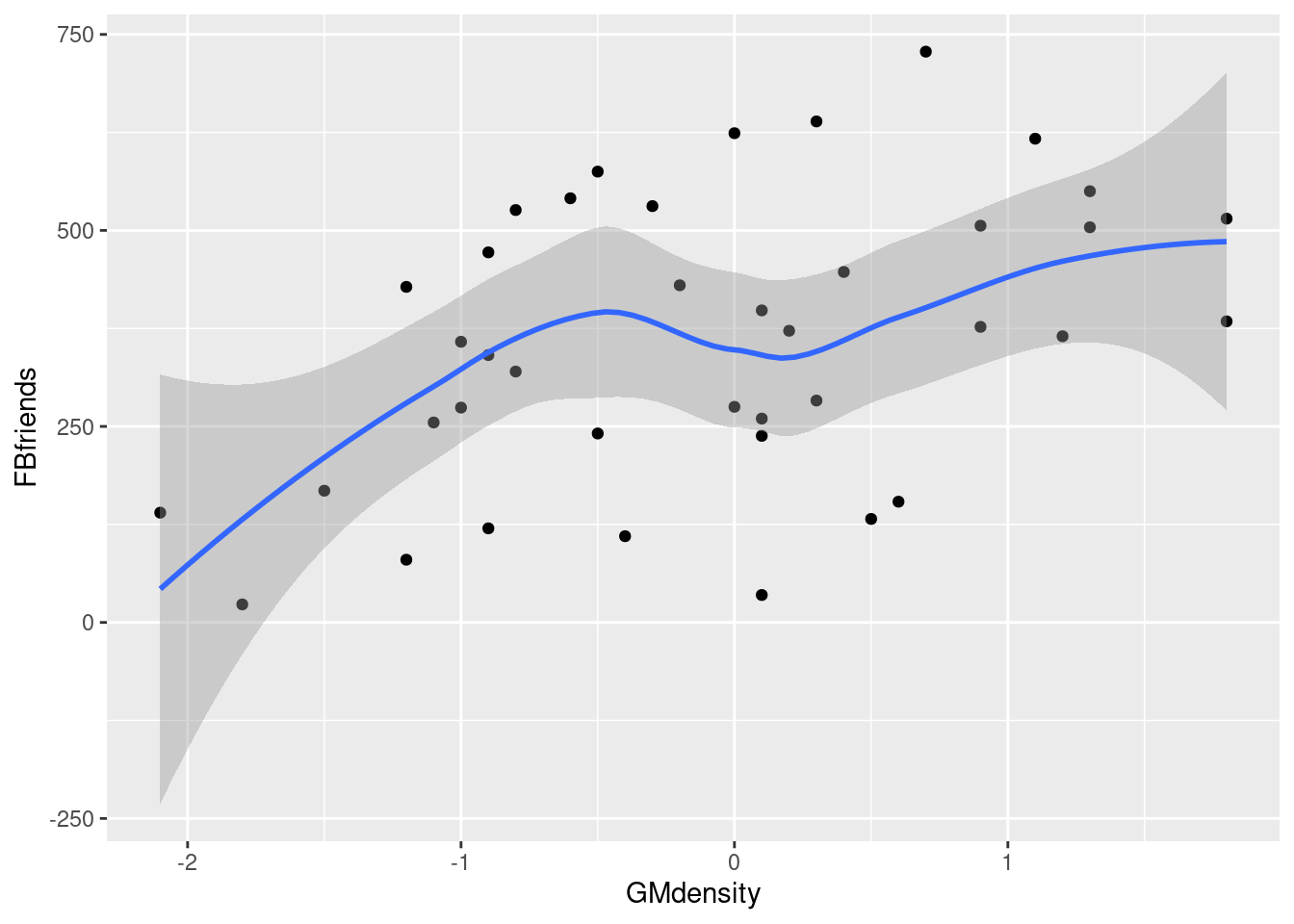

- Read in the data and make a scatterplot for predicting the number of Facebook friends from the grey matter density. On your scatterplot, add a smooth trend.

Solution

Begin your document with a code chunk containing

library(tidyverse). The data values are

separated by tabs, which you will need to take into account:

my_url <- "http://ritsokiguess.site/datafiles/facebook.txt"

fb <- read_tsv(my_url)## Rows: 40 Columns: 2

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: "\t"

## dbl (2): GMdensity, FBfriends

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.fb## # A tibble: 40 × 2

## GMdensity FBfriends

## <dbl> <dbl>

## 1 -1.8 23

## 2 0.1 35

## 3 -1.2 80

## 4 -0.4 110

## 5 -0.9 120

## 6 -2.1 140

## 7 -1.5 168

## 8 0.5 132

## 9 0.6 154

## 10 -0.5 241

## # … with 30 more rowsggplot(fb, aes(x = GMdensity, y = FBfriends)) + geom_point() + geom_smooth()## `geom_smooth()` using method = 'loess' and formula = 'y ~ x'

\(\blacksquare\)

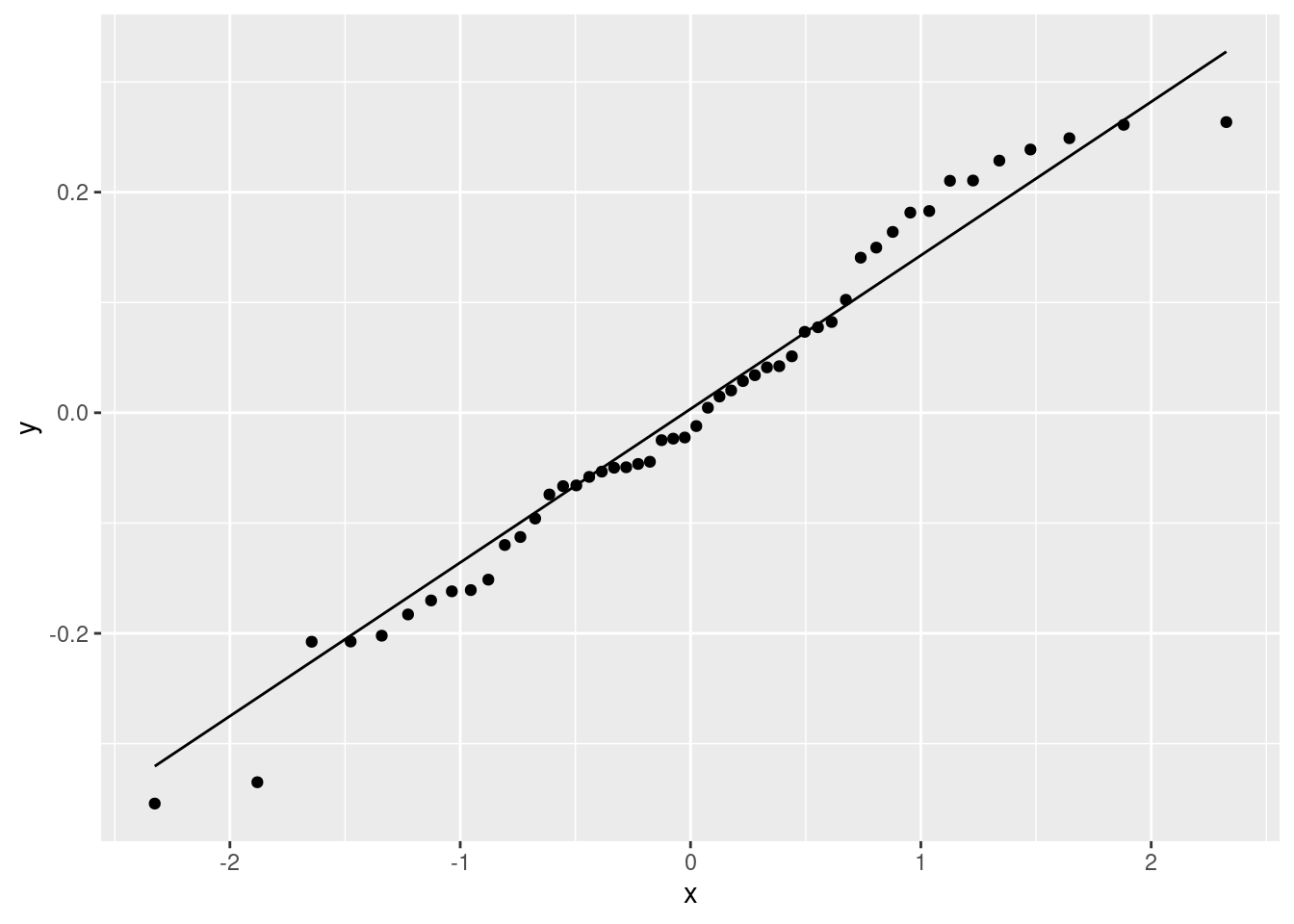

- Describe what you see on your scatterplot: is there a trend, and if so, what kind of trend is it? (Don’t get too taken in by the exact shape of your smooth trend.) Think “form, direction, strength”.

Solution

I’d say there seems to be a weak, upward, apparently linear trend. The points are not especially close to the trend, so I don’t think there’s any justification for calling this other than “weak”. (If you think the trend is, let’s say, “moderate”, you ought to say what makes you think that: for example, that the people with a lot of Facebook friends also tend to have a higher grey matter density. I can live with a reasonably-justified “moderate”.) The reason I said not to get taken in by the shape of the smooth trend is that this has a “wiggle” in it: it goes down again briefly in the middle. But this is likely a quirk of the data, and the trend, if there is any, seems to be an upward one.

\(\blacksquare\)

- Fit a regression predicting the number of Facebook friends from the grey matter density, and display the output.

Solution

That looks like this. You can call the “fitted model object” whatever you like, but you’ll need to get the capitalization of the variable names correct:

fb.1 <- lm(FBfriends ~ GMdensity, data = fb)

summary(fb.1)##

## Call:

## lm(formula = FBfriends ~ GMdensity, data = fb)

##

## Residuals:

## Min 1Q Median 3Q Max

## -339.89 -110.01 -5.12 99.80 303.64

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 366.64 26.35 13.916 < 2e-16 ***

## GMdensity 82.45 27.58 2.989 0.00488 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 165.7 on 38 degrees of freedom

## Multiple R-squared: 0.1904, Adjusted R-squared: 0.1691

## F-statistic: 8.936 on 1 and 38 DF, p-value: 0.004882I observe, though I didn’t ask you to, that the R-squared is pretty awful, going with a correlation of

sqrt(0.1904)## [1] 0.4363485which would look like as weak of a trend as we saw.29

\(\blacksquare\)

- Is the slope of your regression line significantly different from zero? What does that mean, in the context of the data?

Solution

The P-value of the slope is 0.005, which is less than 0.05. Therefore the slope is significantly different from zero. That means that the number of Facebook friends really does depend on the grey matter density, for the whole population of interest and not just the 40 students observed here (that were a sample from that population). I don’t mind so much what you think the population is, but it needs to be clear that the relationship applies to a population. Another way to approach this is to say that you would expect this relationship to show up again in another similar experiment. That also works, because it gets at the idea of reproducibility.

\(\blacksquare\)

- Are you surprised by the results of parts (b) and (d)? Explain briefly.

Solution

I am surprised, because I thought the trend on the scatterplot was so weak that there would not be a significant slope. I guess there was enough of an upward trend to be significant, and with \(n=40\) observations we were able to get a significant slope out of that scatterplot. With this many observations, even a weak correlation can be significantly nonzero. You can be surprised or not, but you need to have some kind of consideration of the strength of the trend on the scatterplot as against the significance of the slope. For example, if you decided that the trend was “moderate” in strength, you would be justified in being less surprised than I was. Here, there is the usual issue that we have proved that the slope is not zero (that the relationship is not flat), but we may not have a very clear idea of what the slope actually is. There are a couple of ways to get a confidence interval. The obvious one is to use R as a calculator and go up and down twice its standard error (to get a rough idea):

82.45 + 2 * 27.58 * c(-1, 1)## [1] 27.29 137.61The c() thing is to get both confidence limits at once. The

smoother way is this:

confint(fb.1)## 2.5 % 97.5 %

## (Intercept) 313.30872 419.9810

## GMdensity 26.61391 138.2836Feed confint a “fitted model object” and it’ll give you

confidence intervals (by default 95%) for all the parameters in it.

The confidence interval for the slope goes from about 27 to about 138. That is to say, a one-unit increase in grey matter density goes with an increase in Facebook friends of this much. This is not especially insightful: it’s bigger than zero (the test was significant), but other than that, it could be almost anything. This is where the weakness of the trend comes back to bite us. With this much scatter in our data, we need a much larger sample size to estimate accurately how big an effect grey matter density has.

\(\blacksquare\)

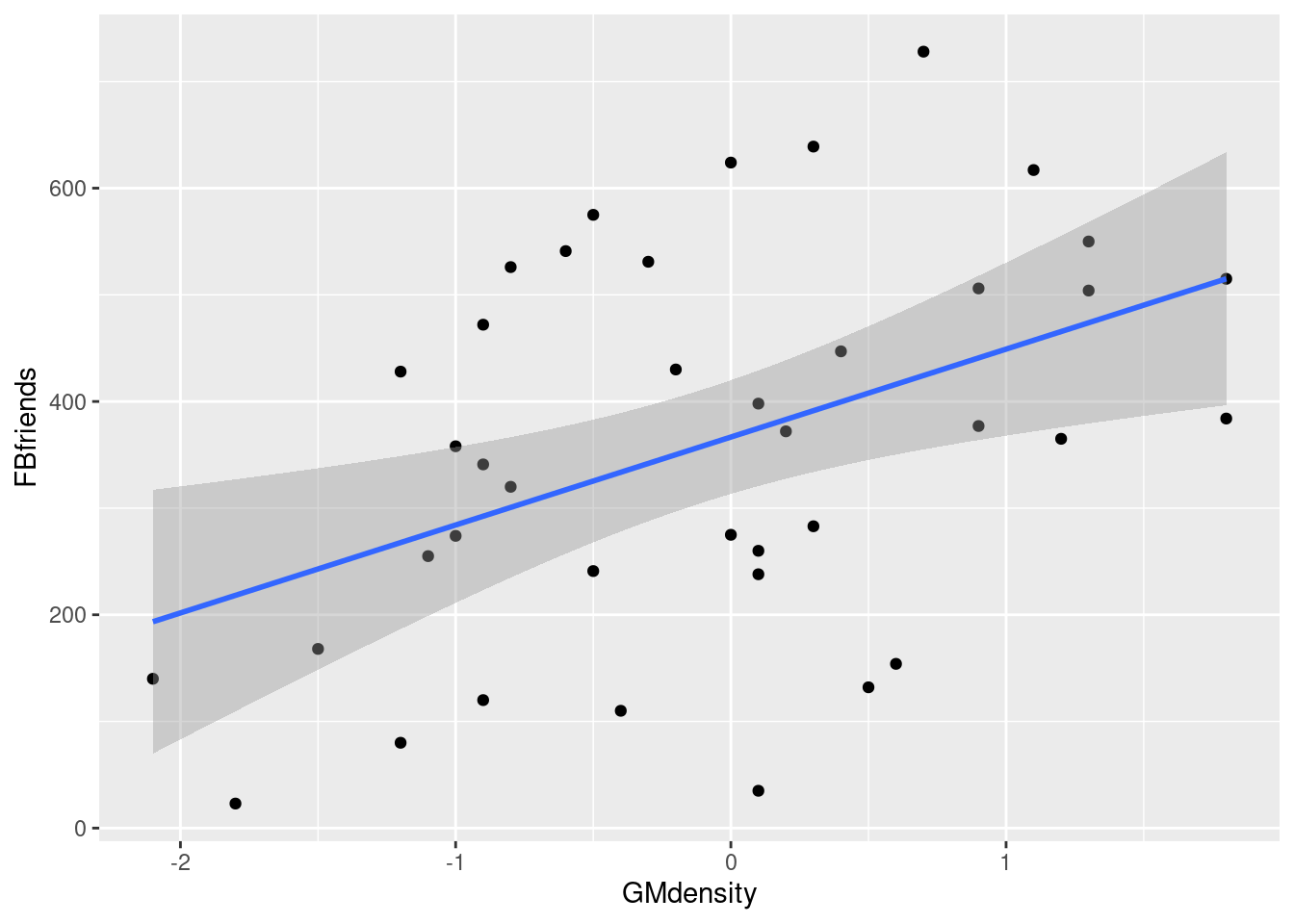

- Obtain a scatterplot with the regression line on it.

Solution

Just a modification of (a):

ggplot(fb, aes(x = GMdensity, y = FBfriends)) + geom_point() +

geom_smooth(method = "lm")## `geom_smooth()` using formula = 'y ~ x'

\(\blacksquare\)

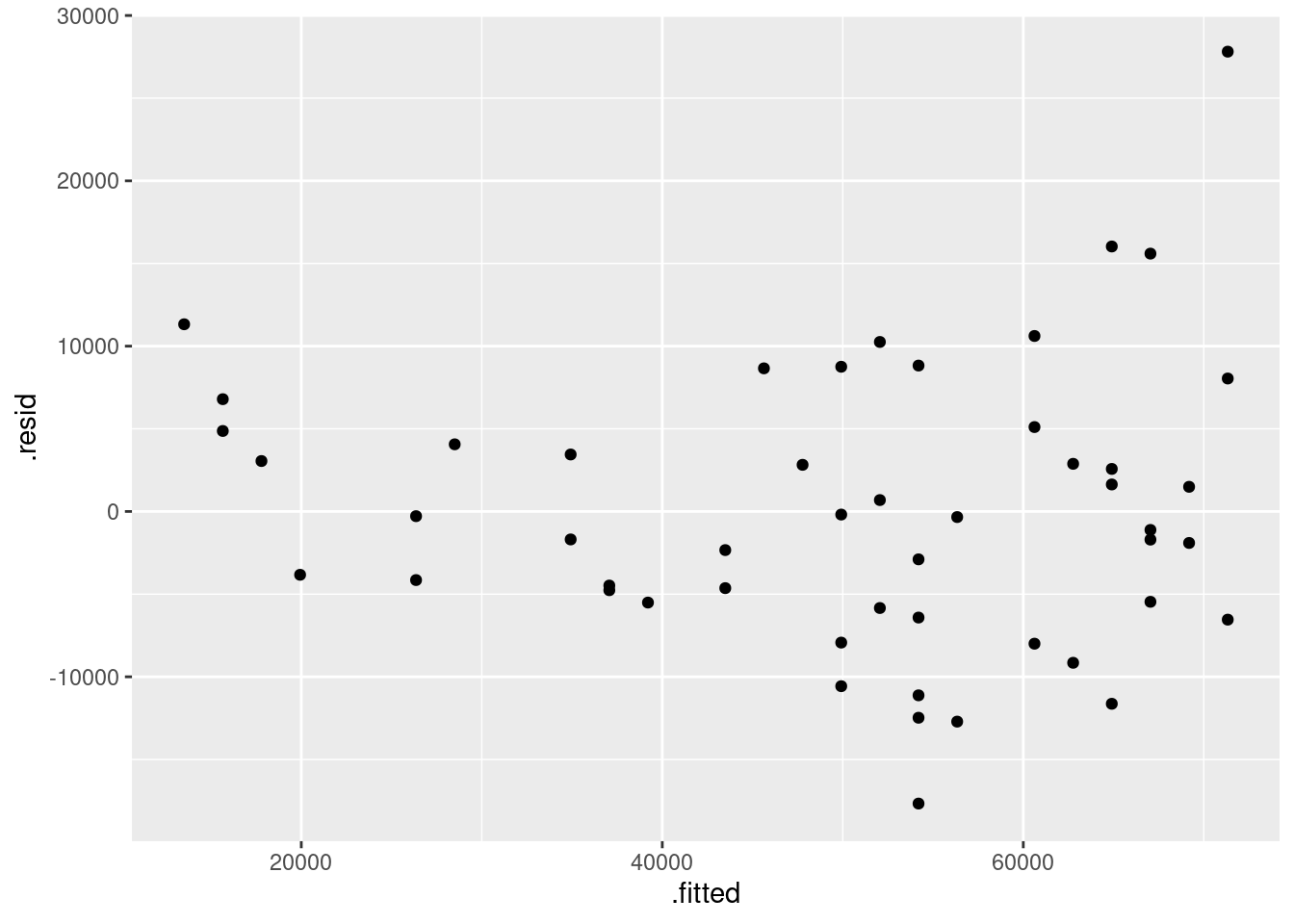

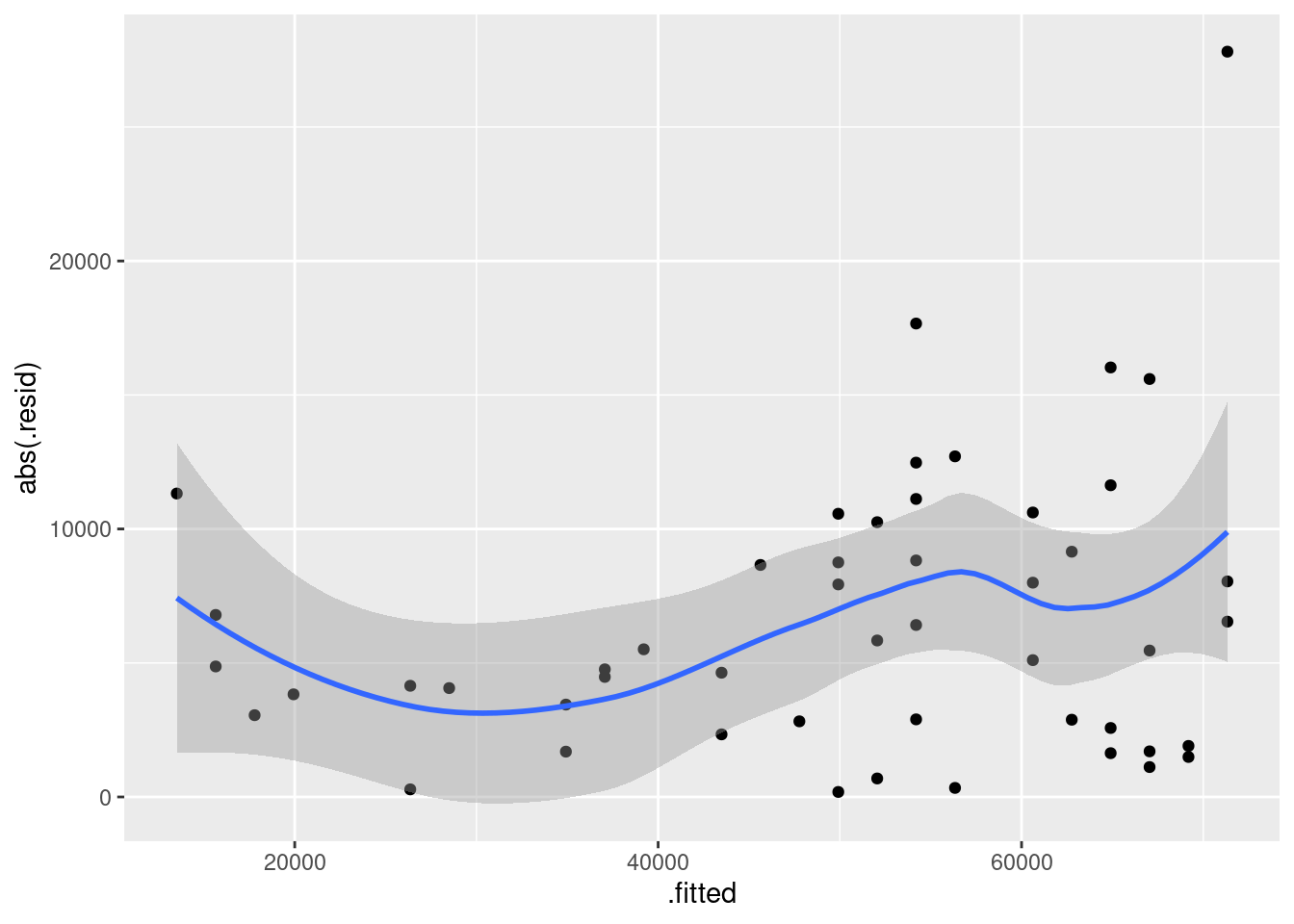

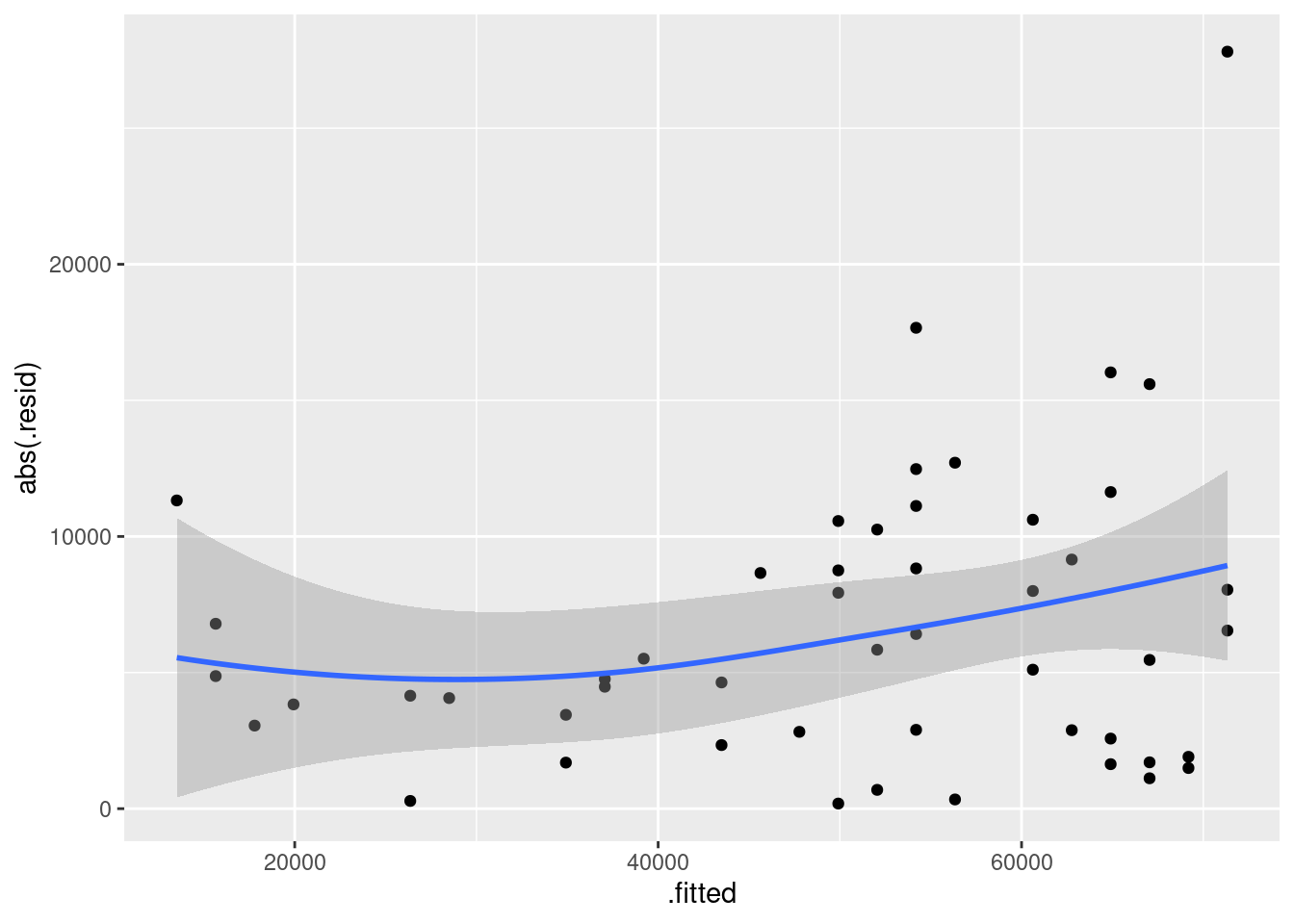

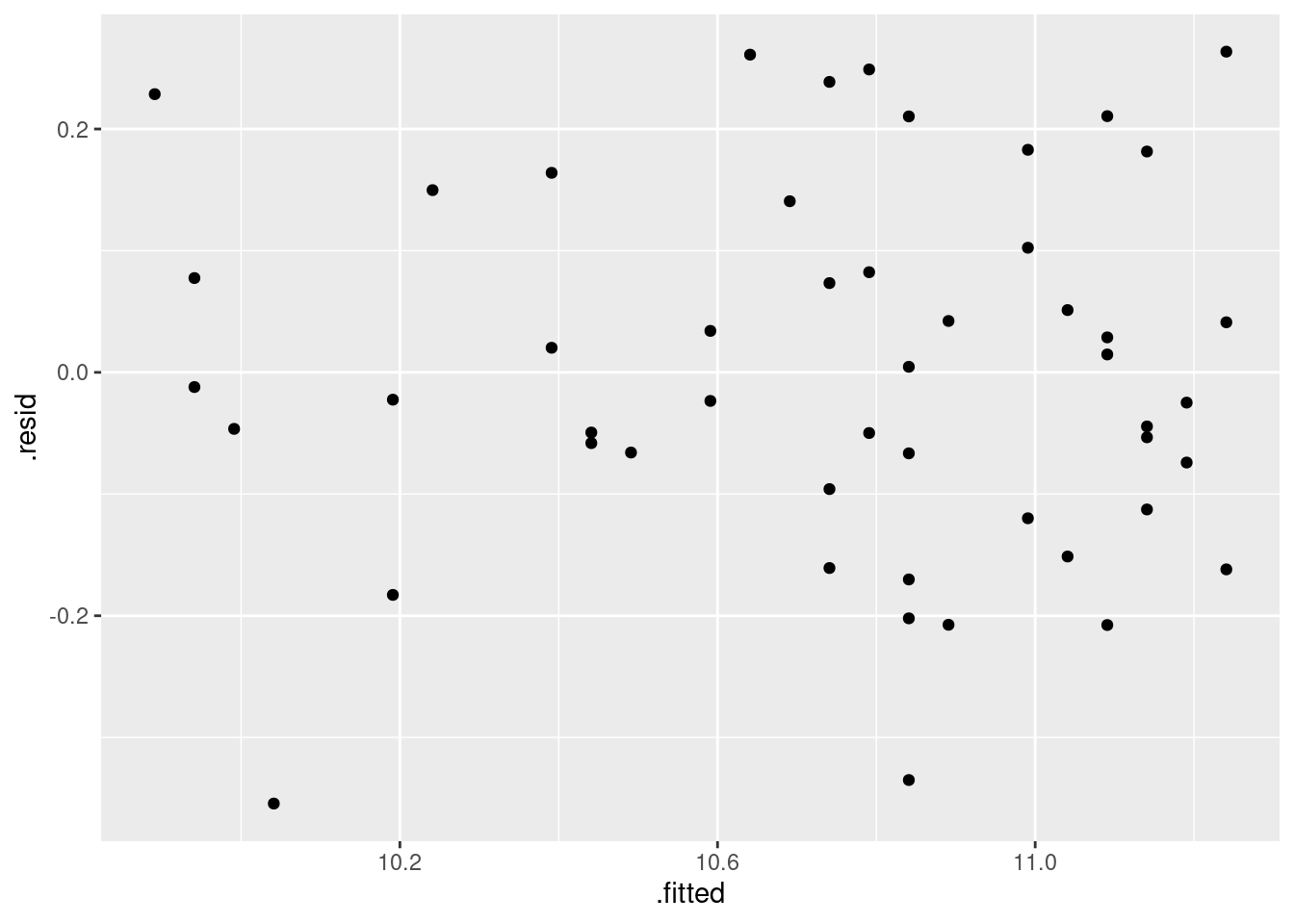

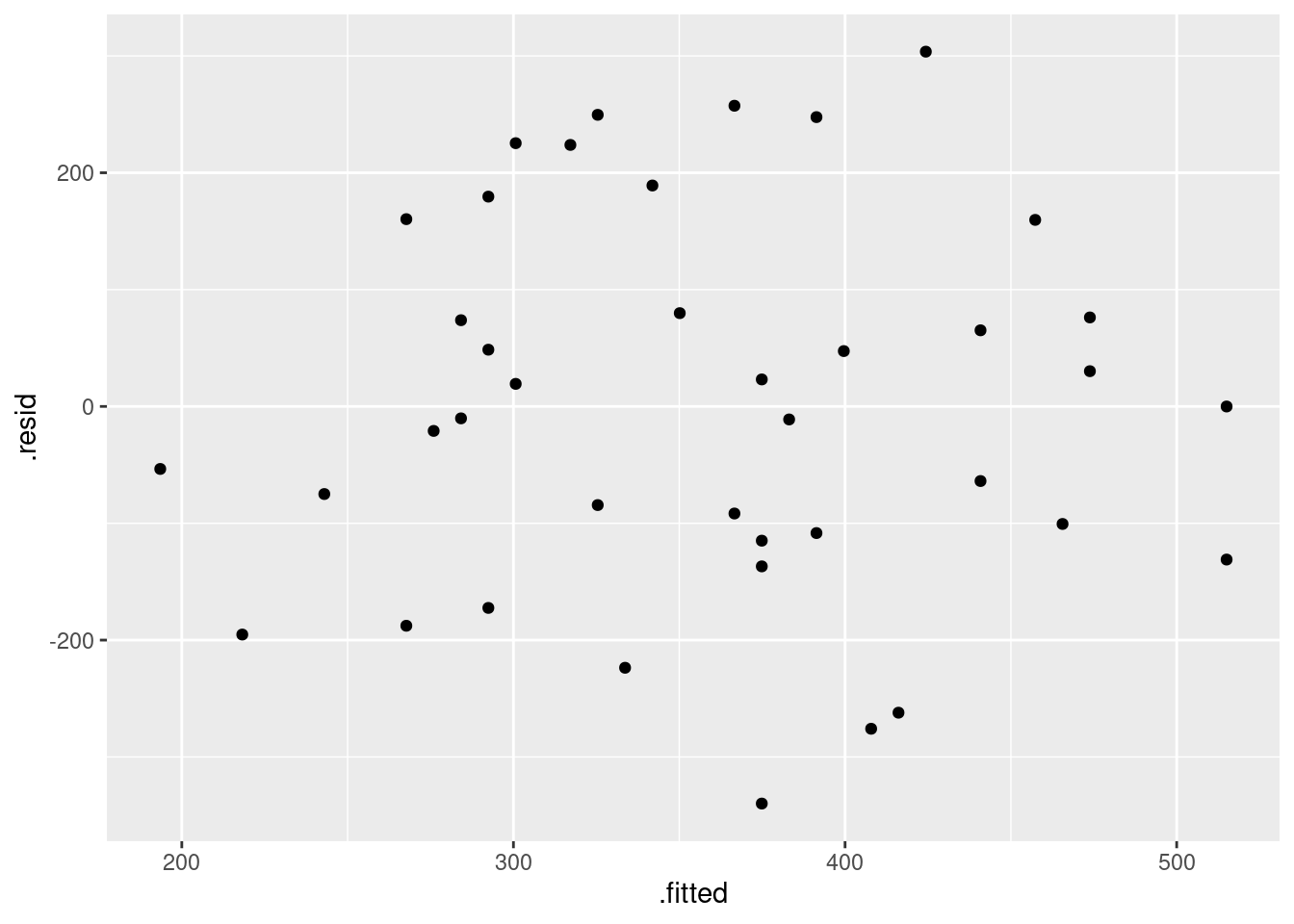

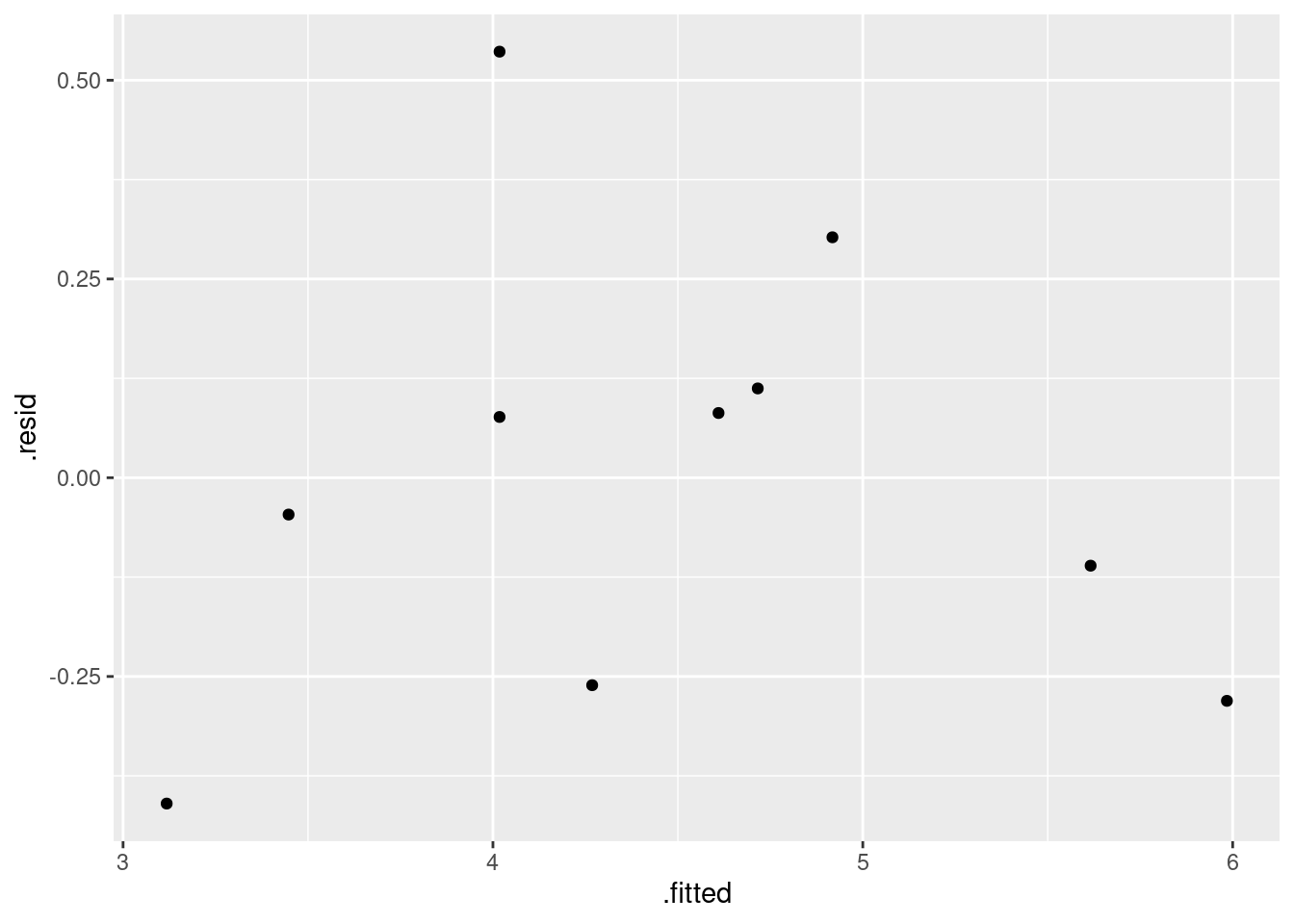

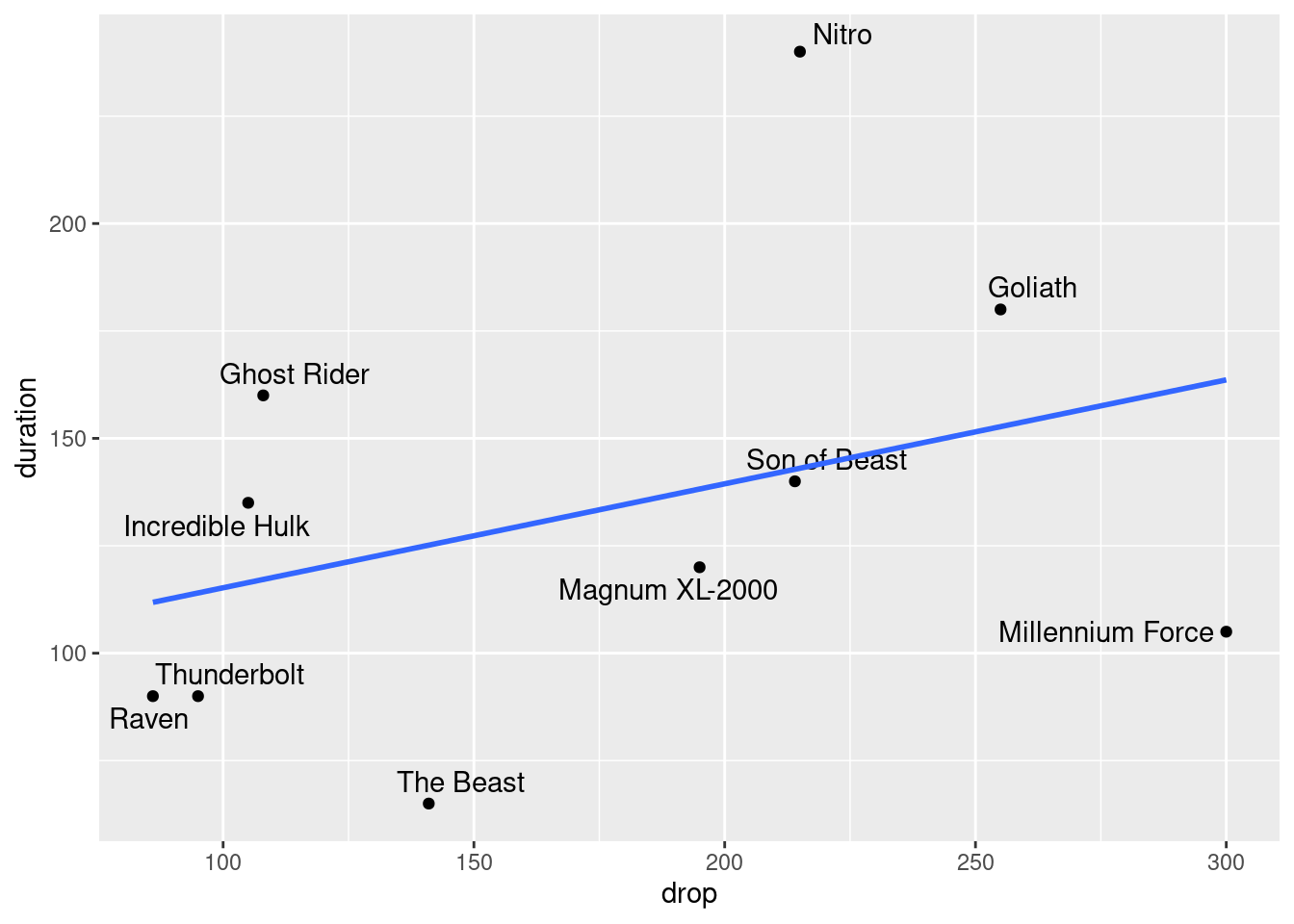

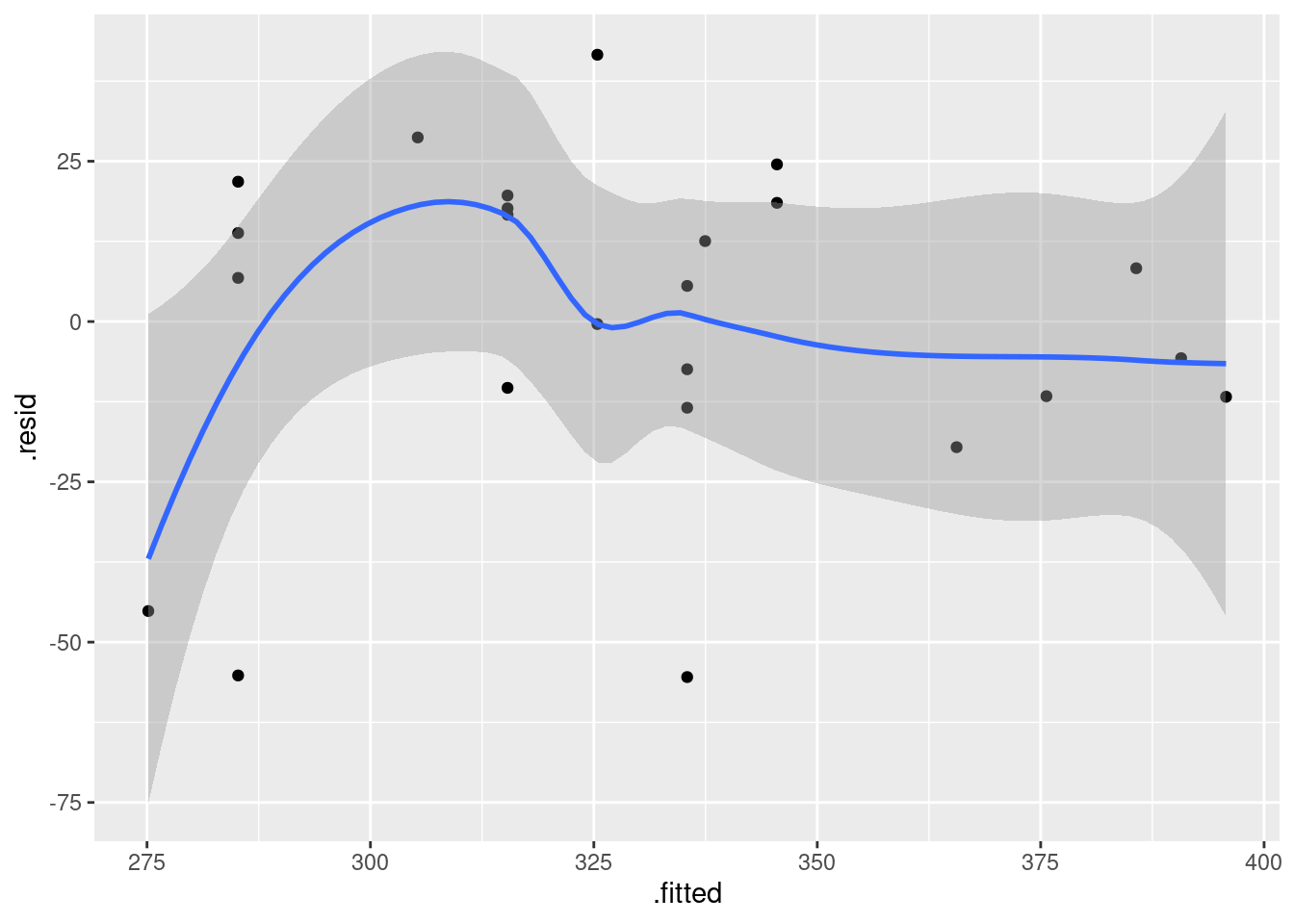

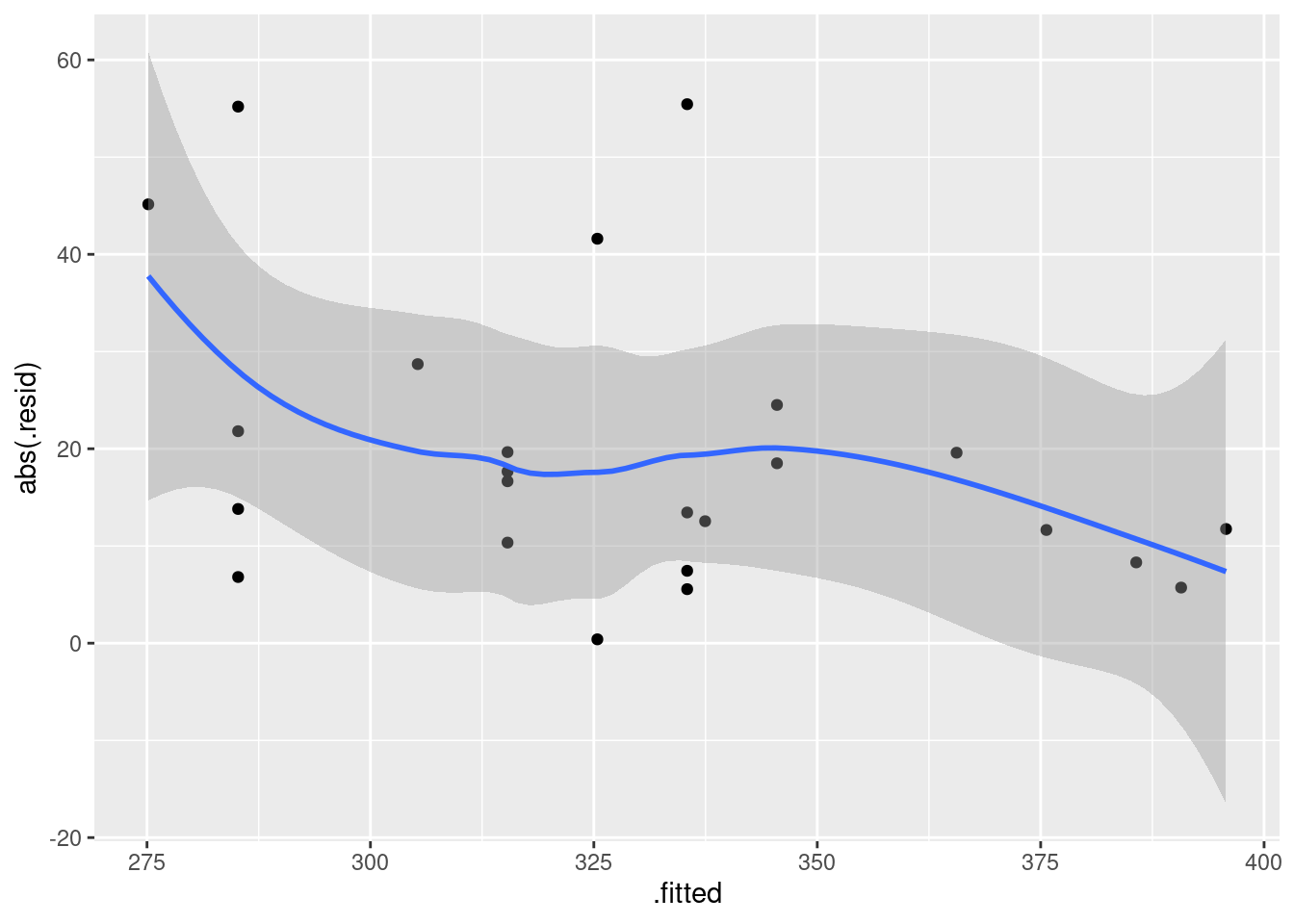

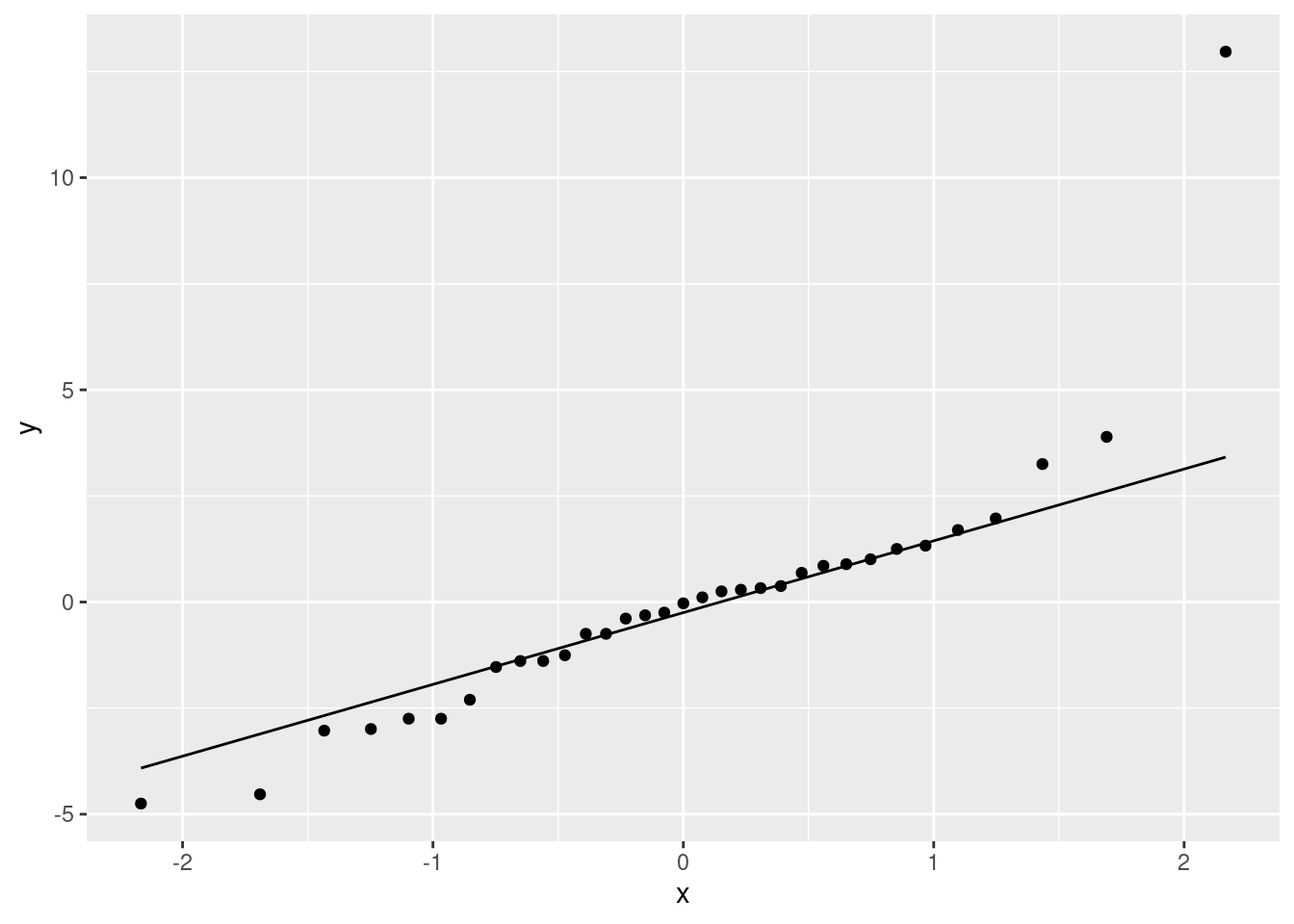

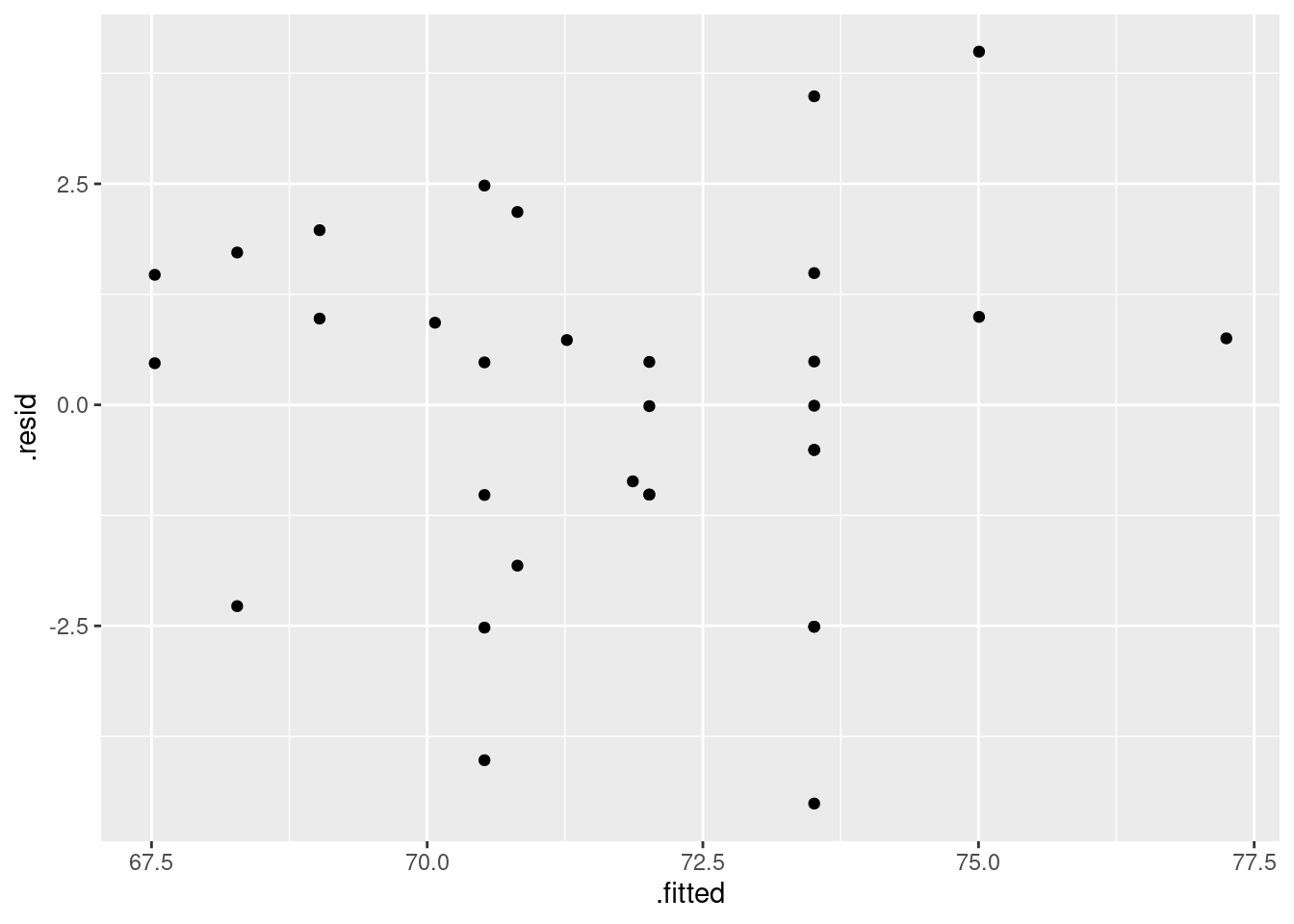

- Obtain a plot of the residuals from the regression against the fitted values, and comment briefly on it.

Solution

This is, to my mind, the easiest way:

ggplot(fb.1, aes(x = .fitted, y = .resid)) + geom_point()

There is some “magic” here, since the fitted model object is not actually a data frame, but it works this way. That looks to me like a completely random scatter of points. Thus, I am completely happy with the straight-line regression that we fitted, and I see no need to improve it.

(You should make two points here: one, describe what you see, and two, what it implies about whether or not your regression is satisfactory.)

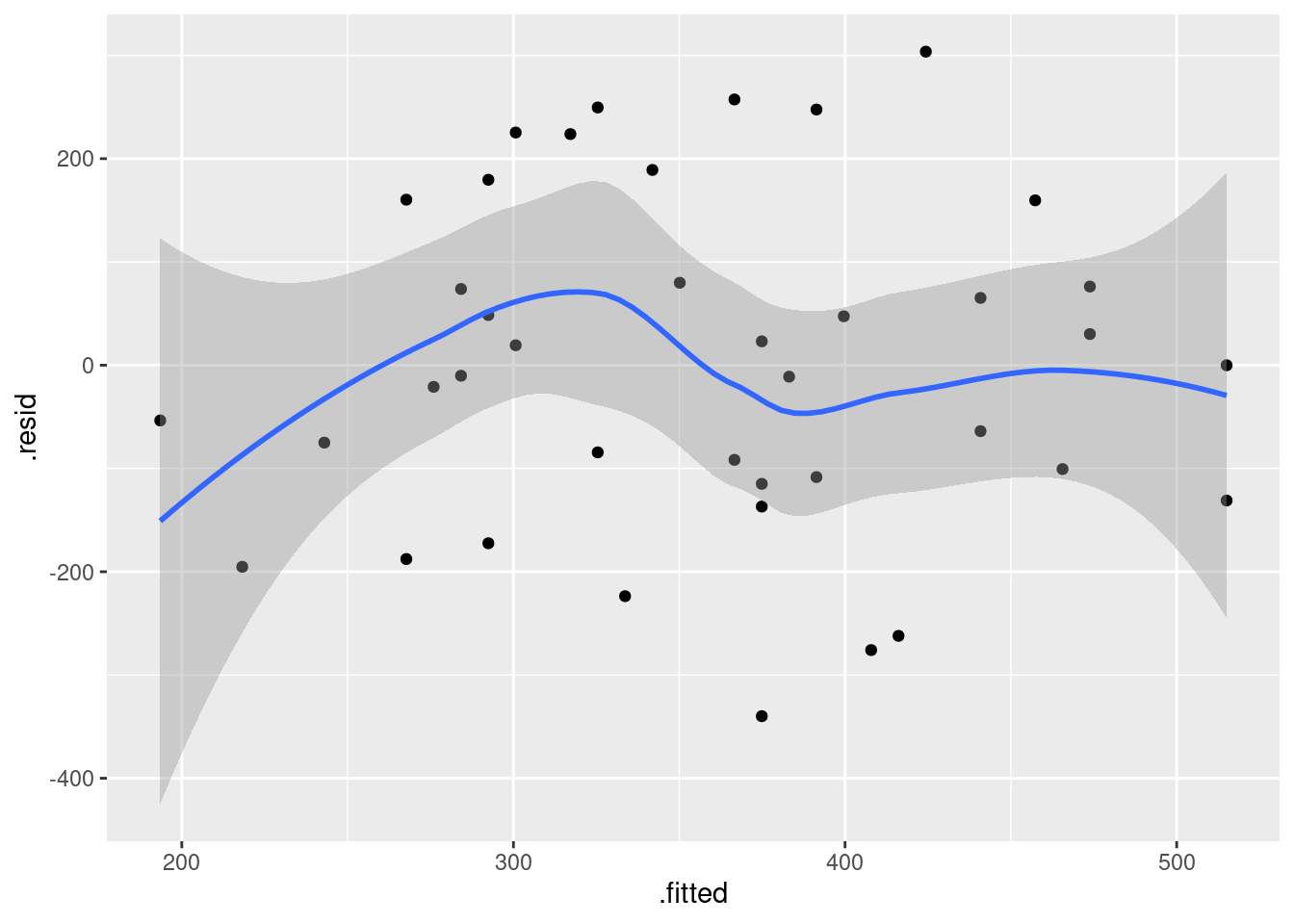

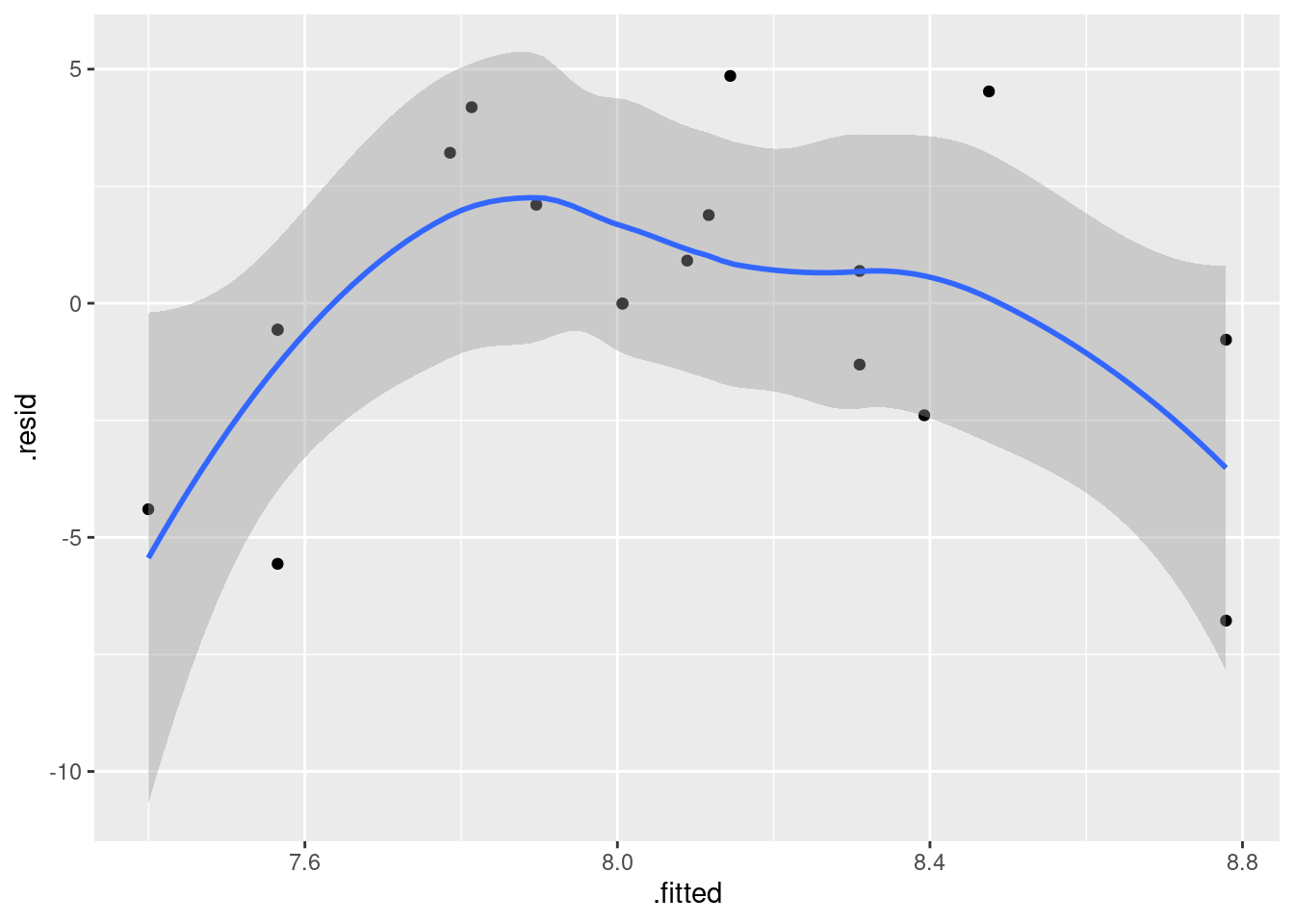

Compare that residual plot with this one:

ggplot(fb.1, aes(x = .fitted, y = .resid)) +

geom_point() + geom_smooth()## `geom_smooth()` using method = 'loess' and formula = 'y ~ x'

Now, why did I try adding a smooth trend, and why is it not necessarily a good idea? The idea of a residual plot is that there should be no trend, and so the smooth trend curve ought to go straight across. The problem is that it will tend to wiggle, just by chance, as here: it looks as if it goes up and down before flattening out. But if you look at the points, they are all over the place, not close to the smooth trend at all. So the smooth trend is rather deceiving. Or, to put it another way, to indicate a real problem, the smooth trend would have to be a lot farther from flat than this one is. I’d call this one basically flat.

\(\blacksquare\)

18.19 Endogenous nitrogen excretion in carp

A paper in Fisheries Science reported on variables that affect “endogenous nitrogen excretion” or ENE in carp raised in Japan. A number of carp were divided into groups based on body weight, and each group was placed in a different tank. The mean body weight of the carp placed in each tank was recorded. The carp were then fed a protein-free diet three times daily for a period of 20 days. At the end of the experiment, the amount of ENE in each tank was measured, in milligrams of total fish body weight per day. (Thus it should not matter that some of the tanks had more fish than others, because the scaling is done properly.)

For this question, write a report in R Markdown that answers the questions below and contains some narrative that describes your analysis. Create an HTML document from your R Markdown.

- Read the data in from link. There are 10 tanks.

Solution

Just this. Listing the data is up to you, but doing so and commenting that the values appear to be correct will improve your report.

my_url <- "http://ritsokiguess.site/datafiles/carp.txt"

carp <- read_delim(my_url, " ")## Rows: 10 Columns: 3

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: " "

## dbl (3): tank, bodyweight, ENE

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.carp## # A tibble: 10 × 3

## tank bodyweight ENE

## <dbl> <dbl> <dbl>

## 1 1 11.7 15.3

## 2 2 25.3 9.3

## 3 3 90.2 6.5

## 4 4 213 6

## 5 5 10.2 15.7

## 6 6 17.6 10

## 7 7 32.6 8.6

## 8 8 81.3 6.4

## 9 9 142. 5.6

## 10 10 286. 6\(\blacksquare\)

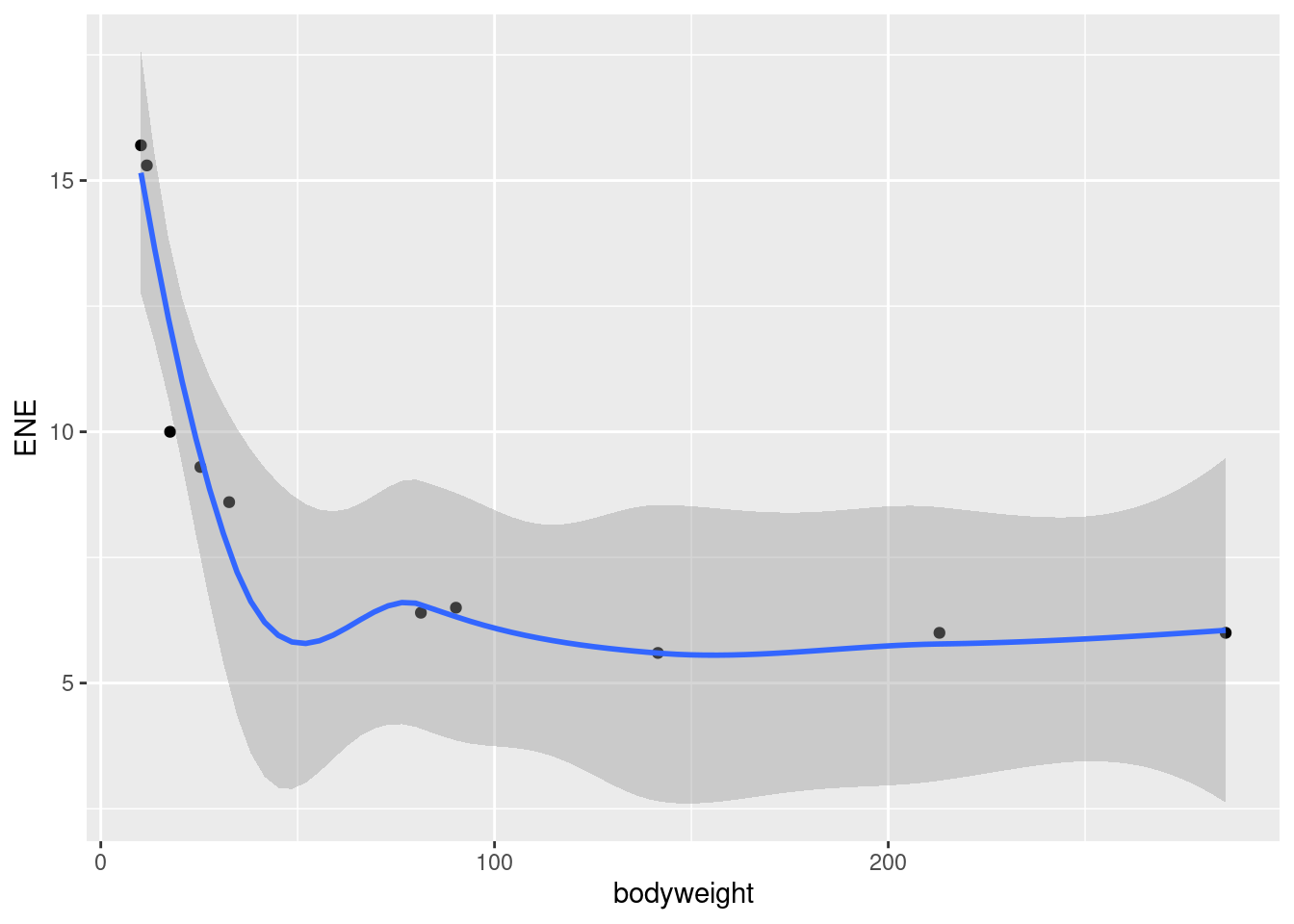

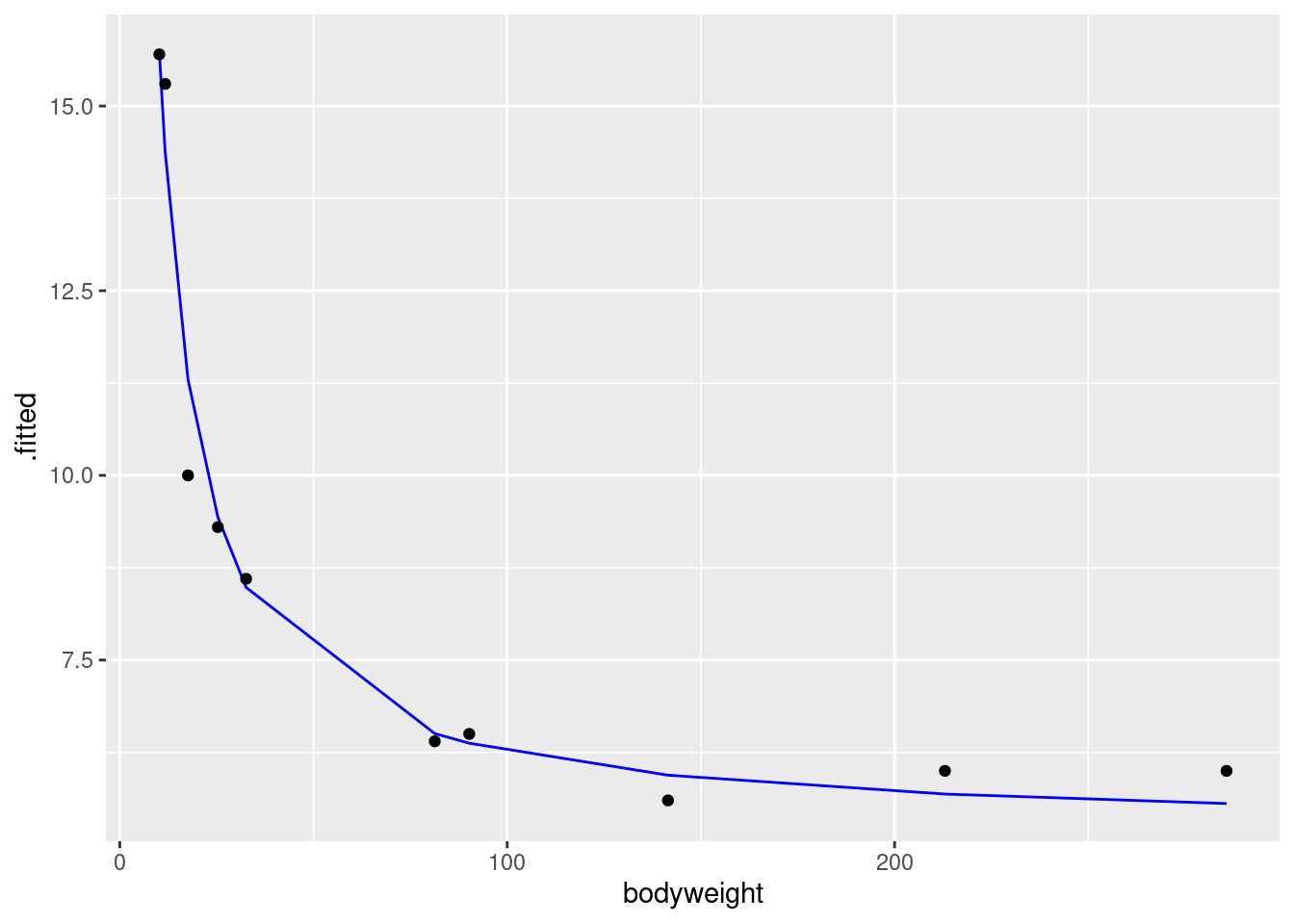

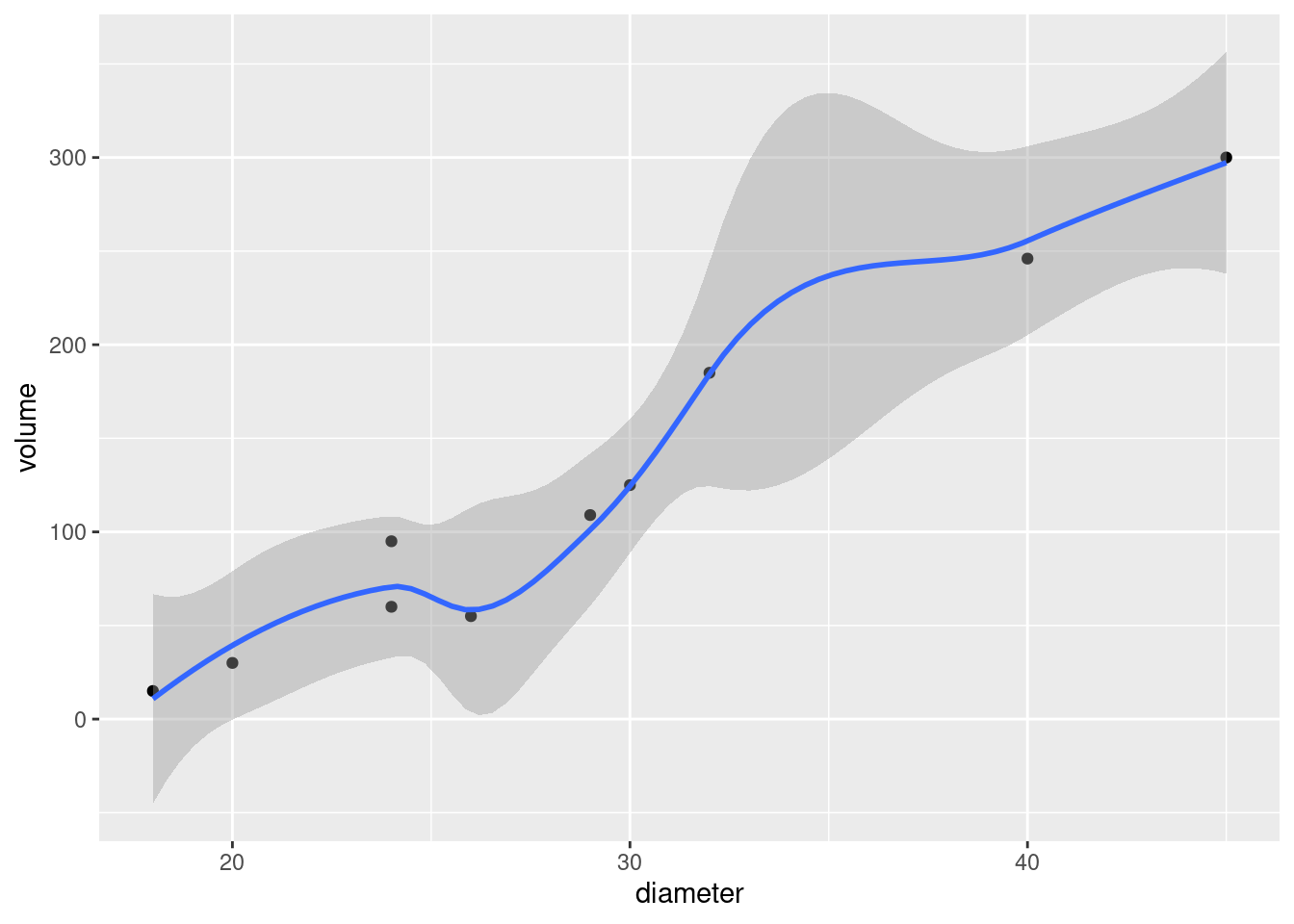

- Create a scatterplot of ENE (response) against bodyweight (explanatory). Add a smooth trend to your plot.

Solution

ggplot(carp, aes(x = bodyweight, y = ENE)) + geom_point() +

geom_smooth()## `geom_smooth()` using method = 'loess' and formula = 'y ~ x'

This part is just about getting the plot. Comments are coming in a

minute. Note that ENE is capital letters, so that

ene will not work.

\(\blacksquare\)

- Is there an upward or downward trend (or neither)? Is the relationship a line or a curve? Explain briefly.

Solution

The trend is downward: as bodyweight increases, ENE decreases. However, the decrease is rapid at first and then levels off, so the relationship is nonlinear. I want some kind of support for an assertion of non-linearity: anything that says that the slope or rate of decrease is not constant is good.

\(\blacksquare\)

- Fit a straight line to the data, and obtain the R-squared for the regression.

Solution

lm. The first stage is to fit the straight line, saving

the result in a variable, and the second stage is to look at the

“fitted model object”, here via summary:

carp.1 <- lm(ENE ~ bodyweight, data = carp)

summary(carp.1)##

## Call:

## lm(formula = ENE ~ bodyweight, data = carp)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.800 -1.957 -1.173 1.847 4.572

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 11.40393 1.31464 8.675 2.43e-05 ***

## bodyweight -0.02710 0.01027 -2.640 0.0297 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2.928 on 8 degrees of freedom

## Multiple R-squared: 0.4656, Adjusted R-squared: 0.3988

## F-statistic: 6.971 on 1 and 8 DF, p-value: 0.0297Finally, you need to give me a (suitably rounded) value for R-squared: 46.6% or 47% or the equivalents as a decimal. I just need the value at this point. This kind of R-squared is actually pretty good for natural data, but the issue is whether we can improve it by fitting a non-linear model.30

\(\blacksquare\)

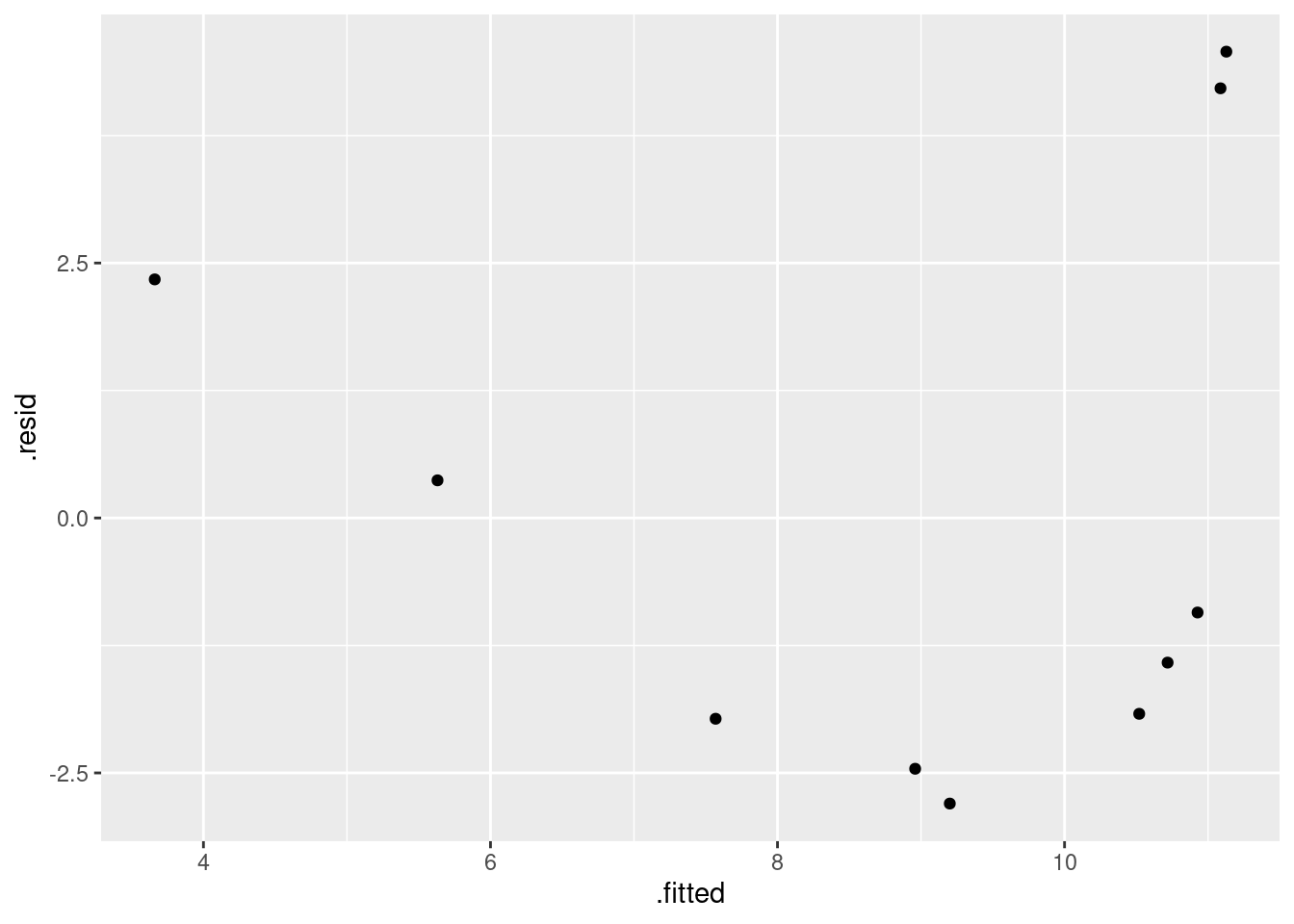

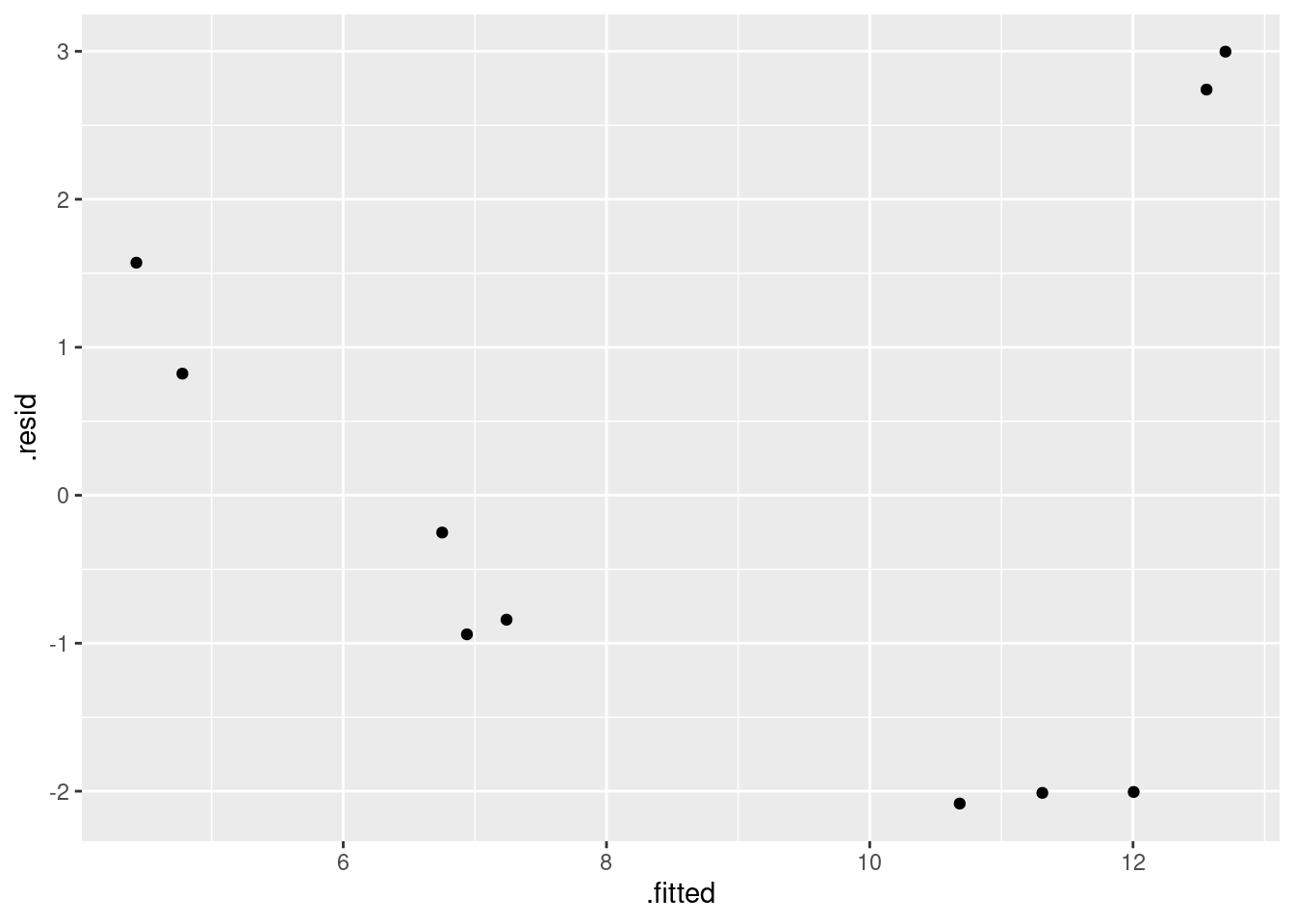

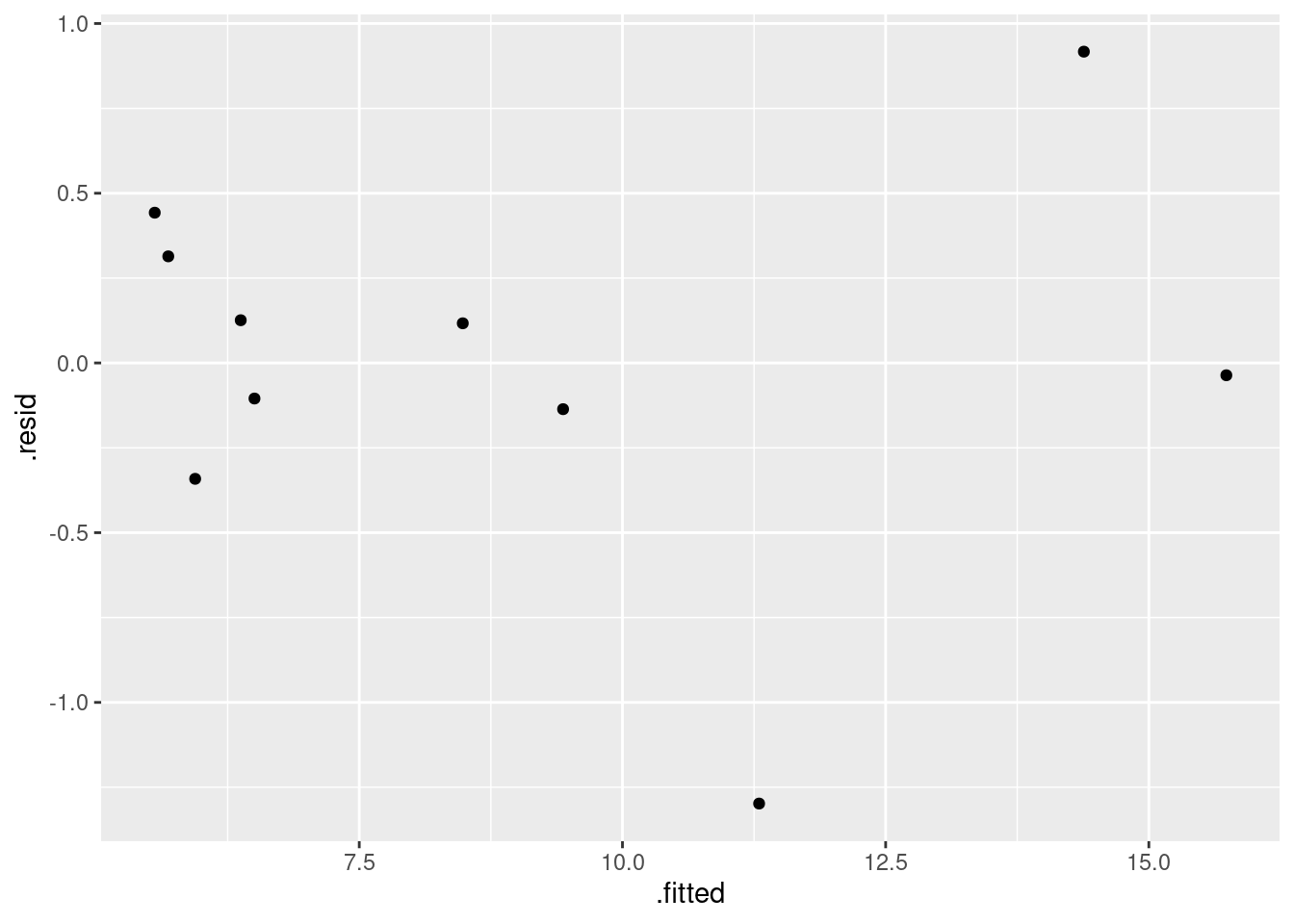

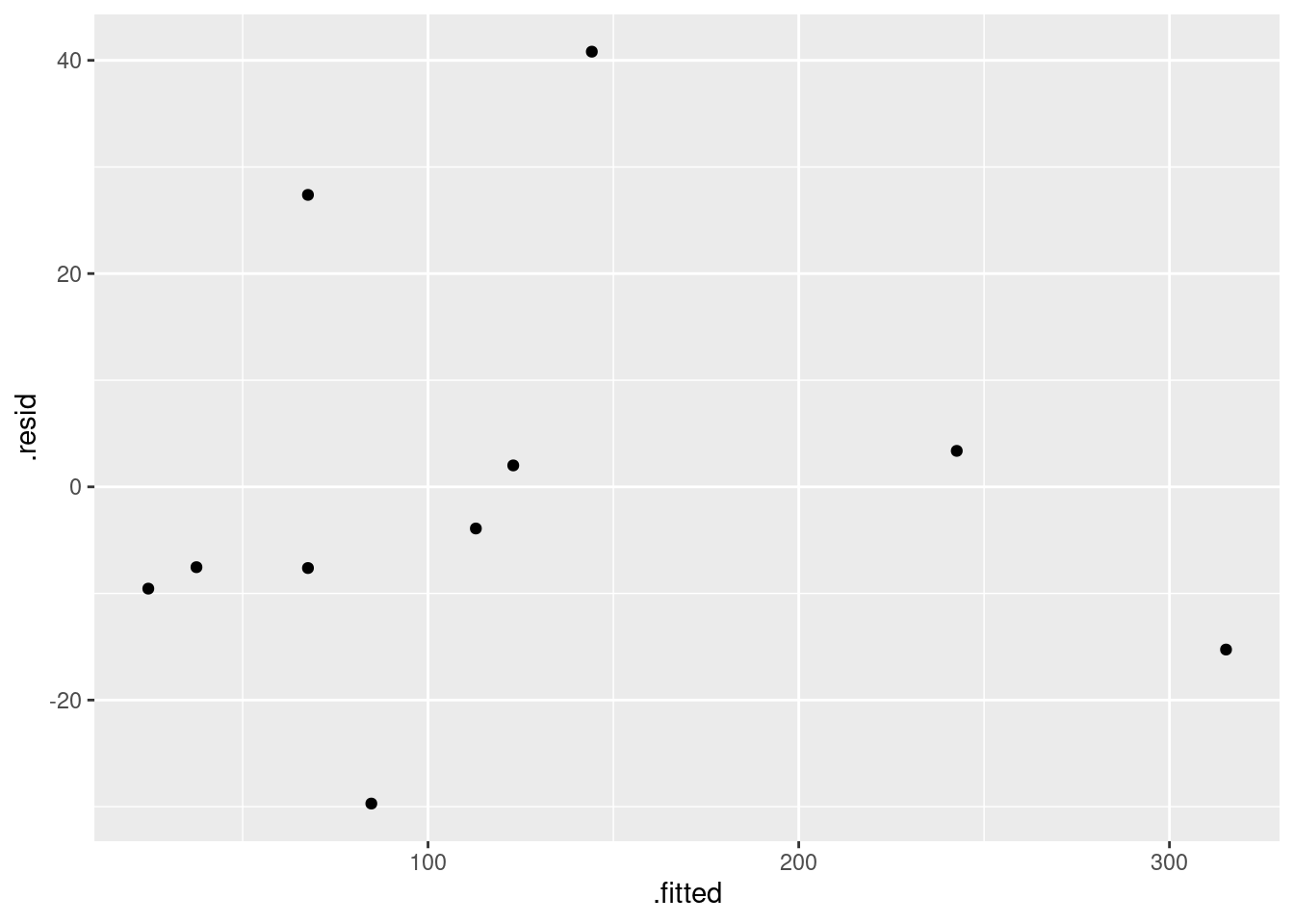

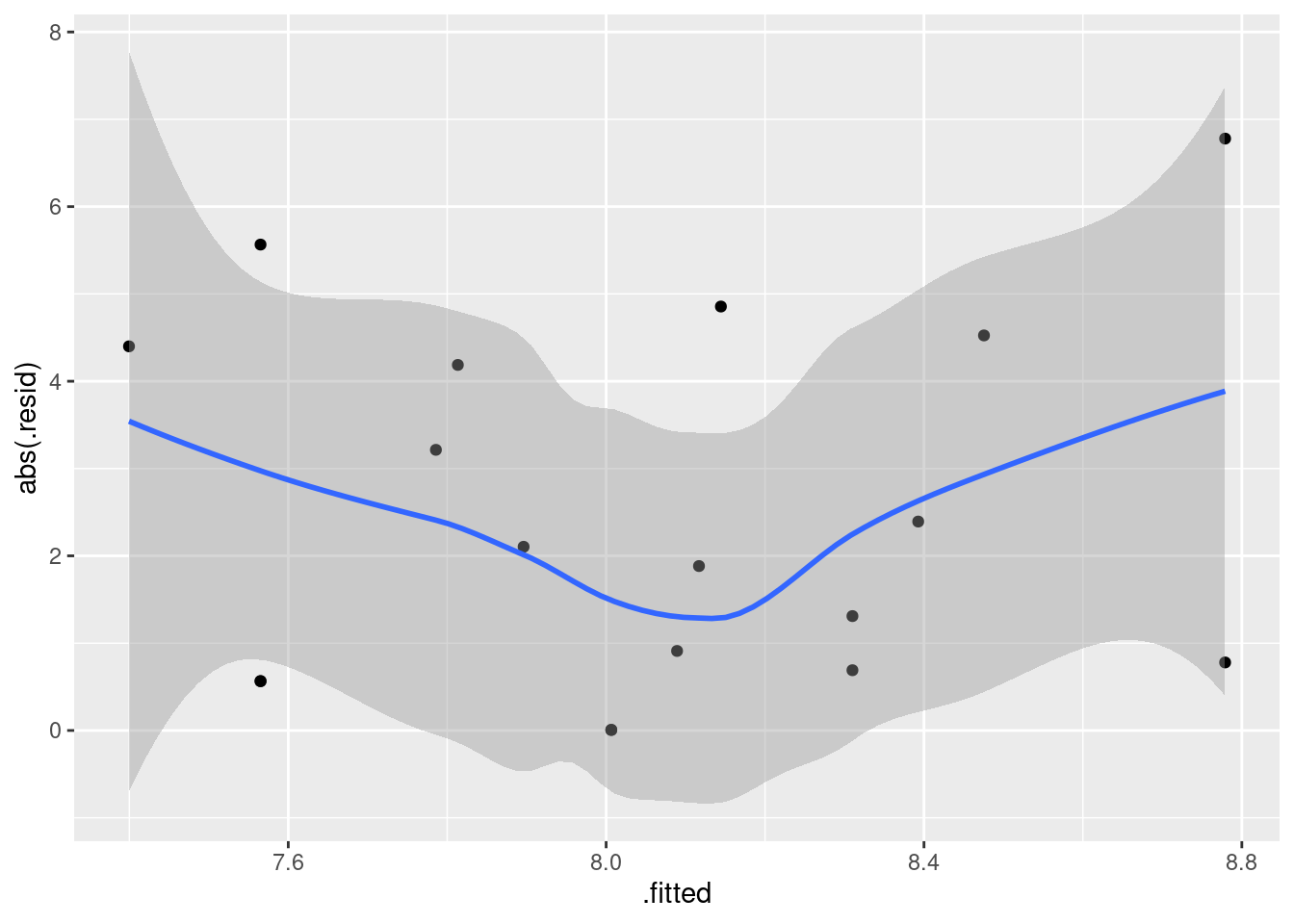

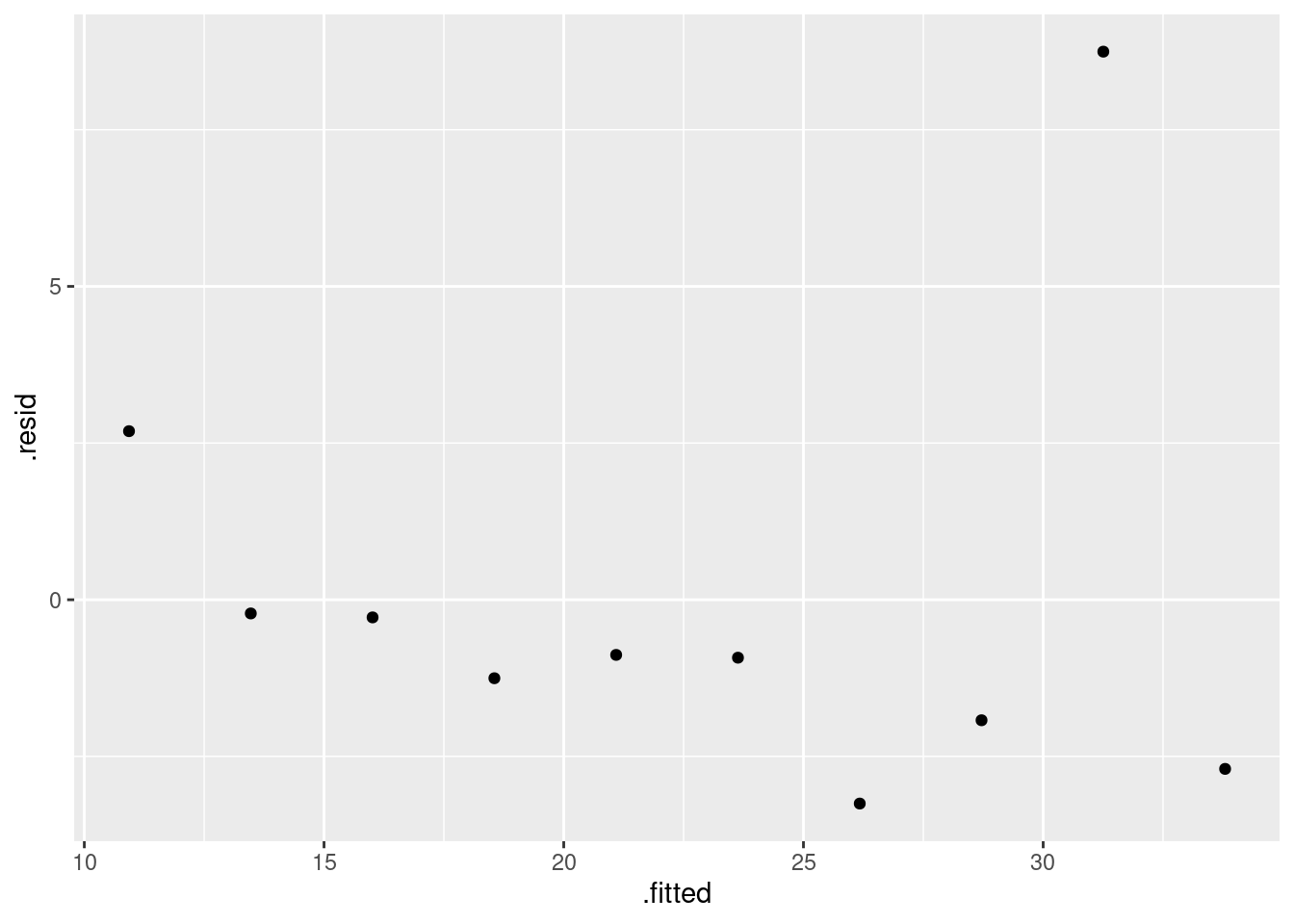

- Obtain a residual plot (residuals against fitted values) for this regression. Do you see any problems? If so, what does that tell you about the relationship in the data?

Solution

This is the easiest way: feed the output of the regression

straight into ggplot:

ggplot(carp.1, aes(x = .fitted, y = .resid)) + geom_point()

\(\blacksquare\)

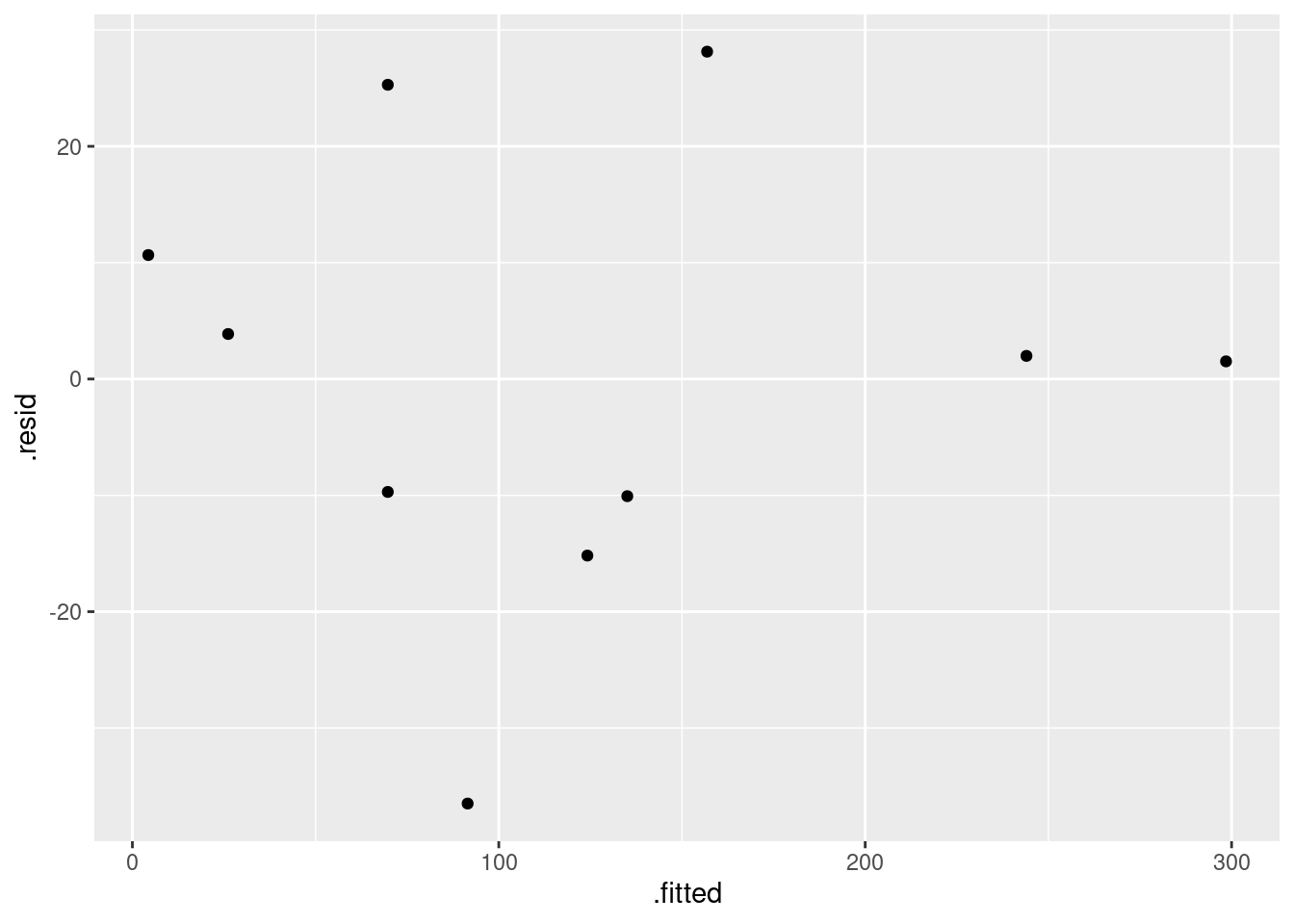

- Fit a parabola to the data (that is, including an \(x\)-squared term). Compare the R-squared values for the models in this part and part (d). Does that suggest that the parabola model is an improvement here over the linear model?

Solution

Add bodyweight-squared to

the regression. Don’t forget the I():

carp.2 <- lm(ENE ~ bodyweight + I(bodyweight^2), data = carp)

summary(carp.2)##

## Call:

## lm(formula = ENE ~ bodyweight + I(bodyweight^2), data = carp)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.0834 -1.7388 -0.5464 1.3841 2.9976

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 13.7127373 1.3062494 10.498 1.55e-05 ***

## bodyweight -0.1018390 0.0288109 -3.535 0.00954 **

## I(bodyweight^2) 0.0002735 0.0001016 2.692 0.03101 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2.194 on 7 degrees of freedom

## Multiple R-squared: 0.7374, Adjusted R-squared: 0.6624

## F-statistic: 9.829 on 2 and 7 DF, p-value: 0.009277R-squared has gone up from 47% to 74%, a substantial improvement. This suggests to me that the parabola model is a substantial improvement.31

I try to avoid using the word “significant” in this context, since we haven’t actually done a test of significance.

The reason for the I() is that the up-arrow has a special

meaning in lm, relating to interactions between factors (as

in ANOVA), that we don’t want here. Putting I() around it

means “use as is”, that is, raise bodyweight to power 2, rather than

using the special meaning of the up-arrow in lm.

Because it’s the up-arrow that is the problem, this applies whenever you’re raising an explanatory variable to a power (or taking a reciprocal or a square root, say).

\(\blacksquare\)

- Is the test for the slope coefficient for the squared term significant? What does this mean?

Solution

Look along the bodyweight-squared line to get a P-value

of 0.031. This is less than the default 0.05, so it is

significant.

This means, in short, that the quadratic model is a significant

improvement over the linear one.32

Said longer: the null hypothesis being tested is that the slope

coefficient of the squared term is zero (that is, that the squared

term has nothing to add over the linear model). This is rejected,

so the squared term has something to add in terms of

quality of prediction.

\(\blacksquare\)

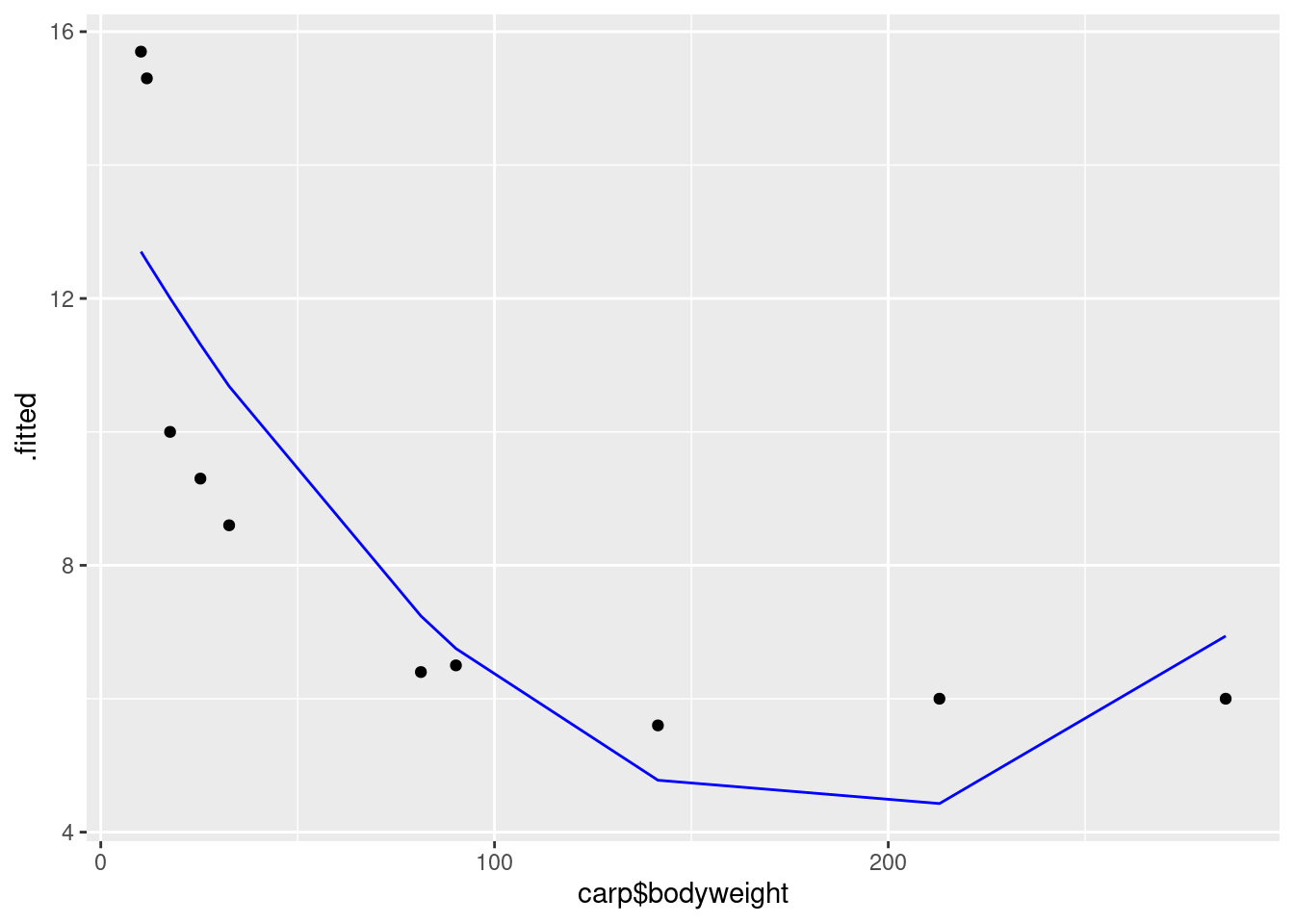

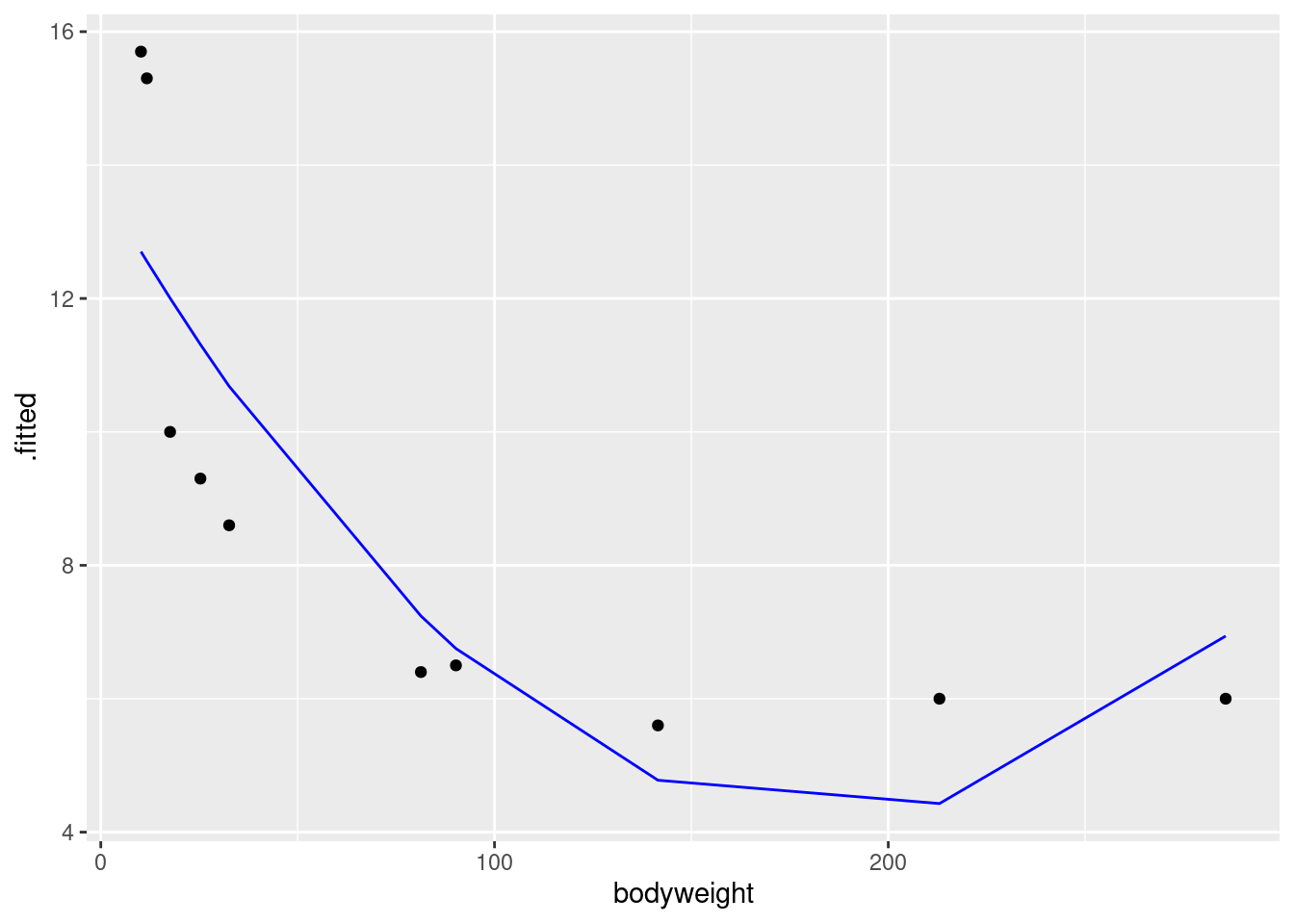

- Make the scatterplot of part (b), but add the fitted curve. Describe any way in which the curve fails to fit well.

Solution

This is a bit slippery, because the points to plot and the

fitted curve are from different data frames. What you do in this

case is to put a data= in one of the geoms,

which says “don’t use the data frame that was in the ggplot, but use this one instead”.

I would think about

starting with the regression object carp.2 as my base

data frame, since we want (or I want) to do two things with

that: plot the fitted values and join them with lines. Then I

want to add the original data, just the points:

ggplot(carp.2, aes(x = carp$bodyweight, y = .fitted), colour = "blue") +

geom_line(colour = "blue") +

geom_point(data = carp, aes(x = bodyweight, y = ENE))

This works, but is not very aesthetic, because the bodyweight that is plotted against the fitted values is in the wrong data frame, and so we have to use the dollar-sign thing to get it from the right one.

A better way around this is “augment” the data with output from the regression object.

This is done using augment from

package broom:

library(broom)

carp.2a <- augment(carp.2, carp)

carp.2a## # A tibble: 10 × 9

## tank bodyweight ENE .fitted .resid .hat .sigma .cooksd .std.resid

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 11.7 15.3 12.6 2.74 0.239 1.99 0.215 1.43

## 2 2 25.3 9.3 11.3 -2.01 0.163 2.19 0.0651 -1.00

## 3 3 90.2 6.5 6.75 -0.252 0.240 2.37 0.00182 -0.132

## 4 4 213 6 4.43 1.57 0.325 2.24 0.122 0.871

## 5 5 10.2 15.7 12.7 3.00 0.251 1.90 0.279 1.58

## 6 6 17.6 10 12.0 -2.01 0.199 2.19 0.0866 -1.02

## 7 7 32.6 8.6 10.7 -2.08 0.143 2.18 0.0583 -1.03

## 8 8 81.3 6.4 7.24 -0.841 0.211 2.34 0.0166 -0.431

## 9 9 142. 5.6 4.78 0.822 0.355 2.33 0.0398 0.466

## 10 10 286. 6 6.94 -0.940 0.875 2.11 3.40 -1.21so now you see what carp.2a has in it, and then:

g <- ggplot(carp.2a, aes(x = bodyweight, y = .fitted)) +

geom_line(colour = "blue") +

geom_point(aes(y = ENE))This is easier coding: there are only two non-standard things. The

first is that the fitted-value lines should be a distinct colour like

blue so that you can tell them from the data points. The second thing

is that for the second geom_point, the one that plots the data,

the \(x\) coordinate bodyweight is correct so that we don’t