library(car)

library(lme4)

library(tidyverse)34 Repeated measures

Packages for this chapter:

34.1 Effect of drug on rat weight

Box (1950) gives data on the weights of three groups of rats. One group was given thyroxin in their drinking water, one group thiouracil, and the third group was a control. (This description comes from Christensen (2001).)1 Weights are measured in grams at weekly intervals (over a 4-week period, so that each rat is measured 5 times). The data are in link as a .csv file.

Read in the data and check that you have a column of

drugand five columns of rat weights at different times.Why would it be wrong to use something like

pivot_longerto create one column of weights, and separate columns of drug and time, and then to run a two-way ANOVA? Explain briefly.Create a suitable response variable and fit a suitable

lmas the first step of the repeated-measures analysis.Load the package

carand run a suitableManova. To do this, you will need to set up the right thing foridataandidesign.Take a look at the output from the MANOVA. Is there a significant interaction? What does its significance (or lack thereof) mean?

We are going to draw an interaction plot in a moment. To set that up, use

pivot_longeras in the lecture notes to create one column of weights and a second column of times. (You don’t need to do theseparatething that I did in class, though if you want to try it, go ahead.)Obtain an interaction plot. Putting

timeas thexwill put time along the horizontal axis, which is the way we’re used to seeing such things. Begin by calculating the meanweightfor eachtime-drugcombination.How does this plot show why the interaction was significant? Explain briefly.

34.3 Children’s stress levels and airports

If you did STAC32, you might remember this question, which we can now do properly. Some of this question is a repeat from there.

The data in link are based on a 1998 study of stress levels in children as a result of the building of a new airport in Munich, Germany. A total of 200 children had their epinephrine levels (a stress indicator) measured at each of four different times: before the airport was built, and 6, 18 and 36 months after it was built. The four measurements are labelled epi_1 through epi_4. Out of the children, 100 were living near the new airport (location 1 in the data set), and could be expected to suffer stress because of the new airport. The other 100 children lived in the same city, but outside the noise impact zone. These children thus serve as a control group. The children are identified with numbers 1 through 200.

If we were testing for the effect of time, explain briefly what it is about the structure of the data that would make an analysis of variance inappropriate.

Read the data into R and demonstrate that you have the right number of observations and variables.

Create and save a “longer” data frame with all the epinephrine values collected together into one column.

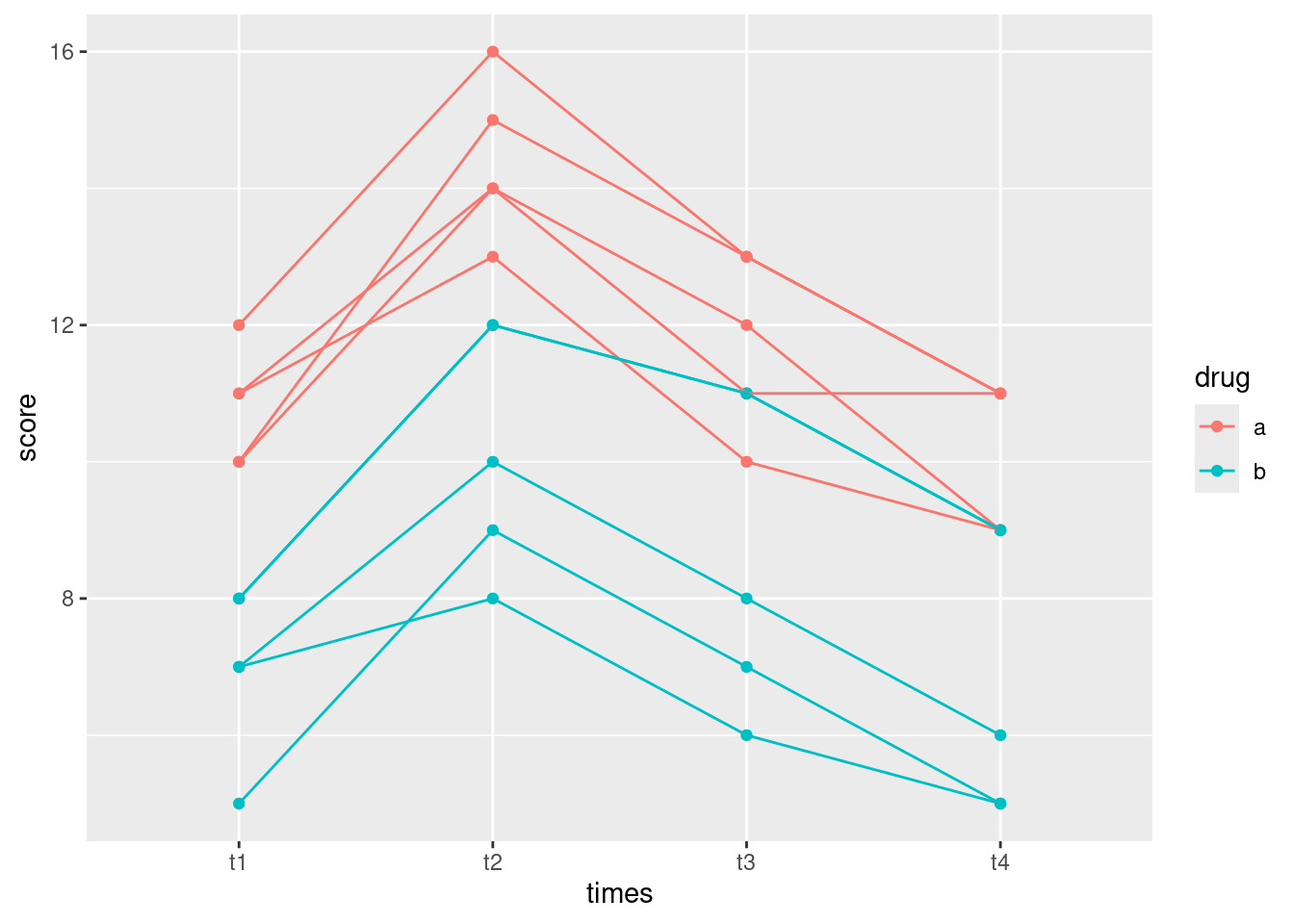

Make a “spaghetti plot” of these data: that is, a plot of epinephrine levels against time, with the locations identified by colour, and the points for the same child joined by lines. To do this: (i) from the long data frame, create a new column containing only the numeric values of time (1 through 4), (ii) plot epinephrine level against time with the points grouped by child and coloured by location (which you may have to turn from a number into a factor.)

What do you see on your spaghetti plot? We are looking ahead to possible effects of time, location and their interaction.

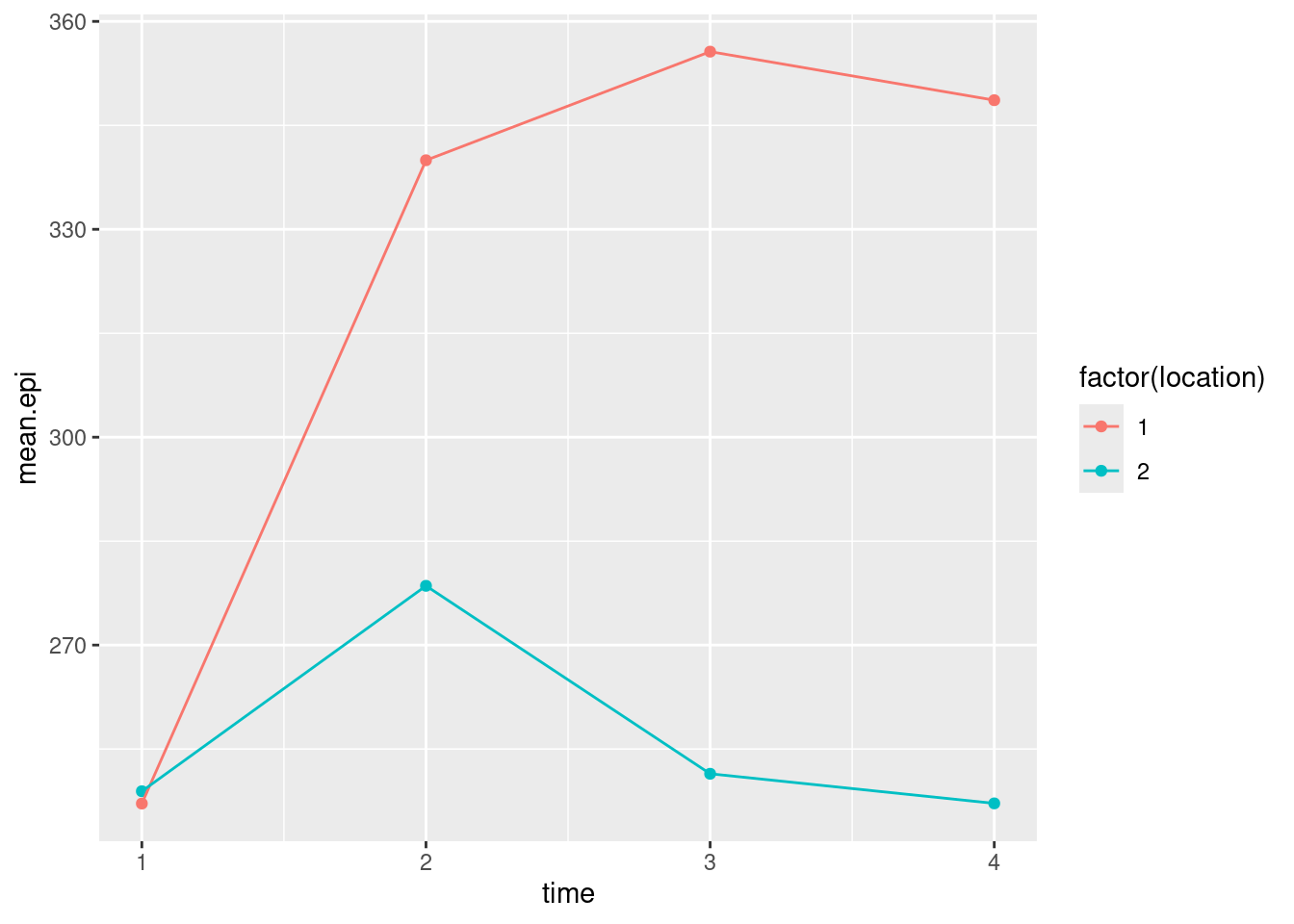

The spaghetti plot was hard to interpret because there are so many children. Calculate the mean epinephrine levels for each location-time combination, and make an interaction plot with time on the \(x\)-axis and location as the second factor.

What do you conclude from your interaction plot? Is your conclusion clearer than from the spaghetti plot?

Run a repeated-measures analysis of variance and display the results. Go back to your original data frame, the one you read in from the file, for this. You’ll need to make sure your numeric

locationgets treated as afactor.What do you conclude from the MANOVA? Is that consistent with your graphs? Explain briefly.

34.4 Body fat as repeated measures

This one is also stolen from STAC32. Athletes are concerned with measuring their body fat percentage. Two different methods are available: one using ultrasound, and the other using X-ray technology. We are interested in whether there is a difference in the mean body fat percentage as measured by these two methods, and if so, how big that difference is. Data on 16 athletes are at link.

Read in the data and check that you have a sensible number of rows and columns.

Carry out a suitable (matched-pairs) \(t\)-test to determine whether the means are the same or different.

What do you conclude from the test?

Run a repeated-measures analysis of variance, treating the two methods of measuring body fat as the repeated measures (ie., playing the role of “time” that you have seen in most of the other repeated measures analyses). There is no “treatment” here, so there is nothing to go on the right side of the squiggle. Insert a

1there to mean “just an intercept”. Display the results.Compare your repeated-measures analysis to your matched-pairs one. Do you draw the same conclusions?

34.5 Investigating motor activity in rats

A researcher named King was investigating the effect of the drug midazolam on motor activity in rats. Typically, the first time the drug is injected, a rat’s motor activity decreases substantially, but rats typically develop a “tolerance”, so that further injections of the drug have less impact on the rat’s motor activity.

The data shown in link were all taken in one day, called the “experiment day” below. 24 different rats were used. Each rat, on the experiment day, was injected with a fixed amount of midazolam, and at each of six five-minute intervals after being injected, the rat’s motor activity was measured (these are labelled i1 through i6 in the data). The rats differed in how they had been treated before the experiment day. The control group of rats had previously been injected repeatedly with a saline solution (no active ingredient), so the experiment day was the first time this group of rats had received midazolam. The other two groups of rats had both received midazolam repeatedly before the experiment day: the “same” group was injected on experiment day in the same environment that the previous injections had taken place (this is known in psychology as a “conditioned tolerance”), but the “different” group had the previous injections in a different environment than on experiment day.

The column id identifies the rat from which each sequence of values was obtained.

Explain briefly why we need to use a repeated measures analysis for these data.

Read in the data and note that you have what was promised in the question.

We are going to do a repeated-measures analysis using the “profile” method shown in class. Create a suitable response variable for this method.

Set up the “within-subjects” part of the analysis. That means getting hold of the names of the columns that hold the different times, saving them, and also making a data frame out of them:

Fit the repeated-measures ANOVA. This will involve fitting an

lmfirst, if you have not already done so.What do you conclude from your repeated-measures ANOVA? Explain briefly, in the context of the data.

To understand the results of the previous part, we are going to make a spaghetti plot. In preparation for that, we need to save the data in “long format” with one observation on one time point in each row. Arrange that, and show by displaying (some of) the data that you have done so.

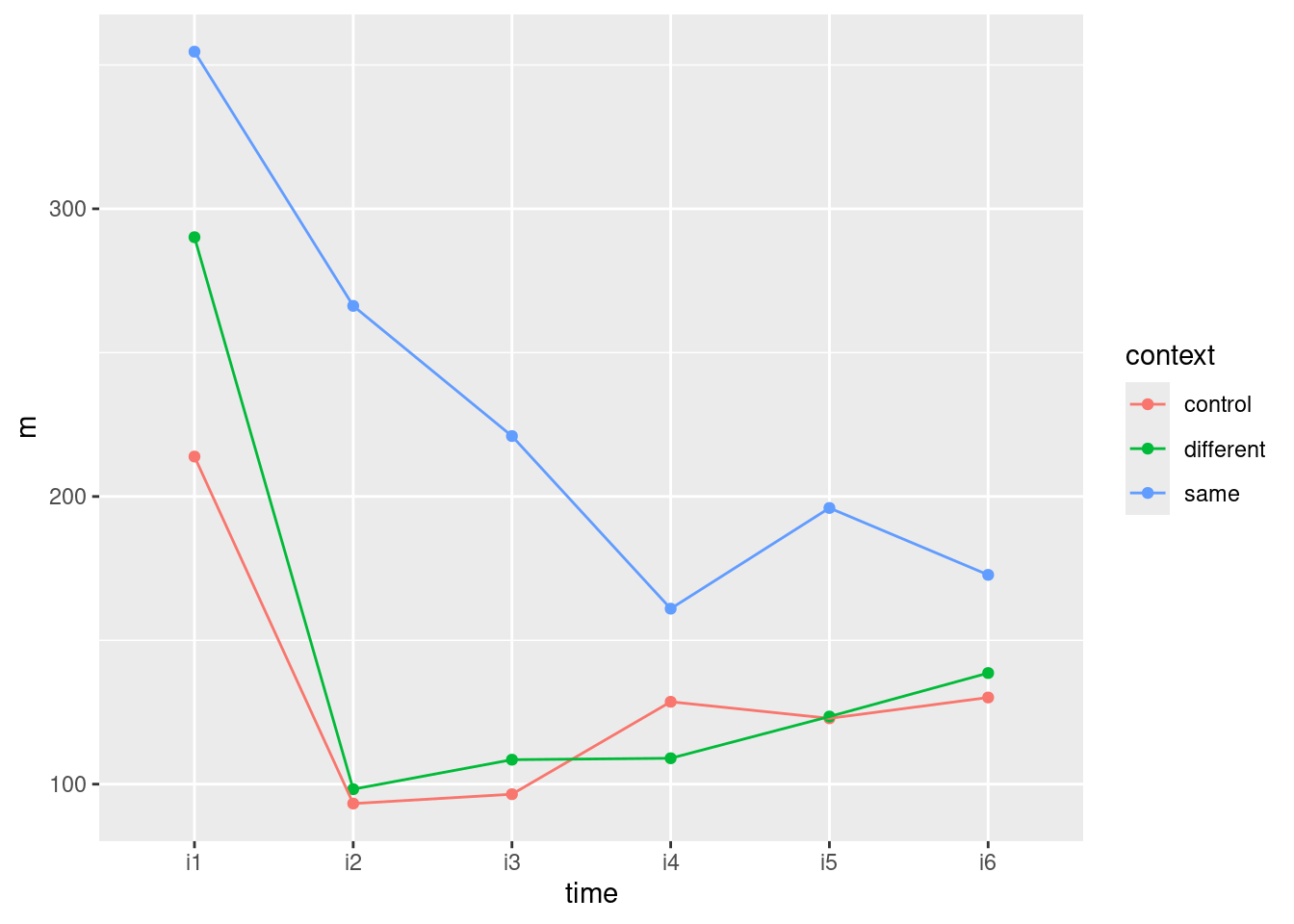

Make a spaghetti plot: that is, plot motor activity against the time points, joining the points for each rat by lines, and colouring the points and lines according to the context.

Looking at your spaghetti plot, why do you think your repeated-measures ANOVA came out as it did? Explain briefly.

34.6 Repeated measures with no background

Nine people are randomly chosen to receive one of three treatments, labelled A, B and C. Each person has their response y to the treatment measured at three times, labelled T1, T2 and T3. The main aim of the study is to properly assess the effects of the treatments. A higher value of y is better.

The data are in link.

There are \(9 \times 3=27\) observations of

yin this study. Why would it be wrong to treat these as 27 independent observations? Explain briefly.Read in the data values. Are they tidy or untidy? Explain briefly. (The data values are separated by tabs, like the Australian athlete data.)

Make a spaghetti plot: that is, a plot of

yagainst time, with the observations for the same individual joined by lines which are coloured according to the treatment that individual received.On your spaghetti plot, how do the values of

yfor the treatments compare over time?Explain briefly how the data are in the wrong format for a repeated-measures ANOVA (done using MANOVA, as in class), and use

pivot_widerto get the data set into the right format.Run a repeated-measures ANOVA the

Manovaway. What do you conclude from it?How is your conclusion from the previous part consistent with your spaghetti plot? Explain briefly.

My solutions follow:

34.7 Effect of drug on rat weight

Box (1950) gives data on the weights of three groups of rats.2 One group was given thyroxin in their drinking water, one group thiouracil, and the third group was a control. (This description comes from Christensen (2001).)3 Weights are measured in grams at weekly intervals (over a 4-week period, so that each rat is measured 5 times). The data are in link as a .csv file.

- Read in the data and check that you have a column of

drugand five columns of rat weights at different times.

Solution

A .csv file, so read_csv. (I typed the data from Christensen (2001) into a spreadsheet.)

my_url <- "http://ritsokiguess.site/datafiles/ratweight.csv"

weights <- read_csv(my_url)Rows: 27 Columns: 7

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

chr (1): drug

dbl (6): rat, Time0, Time1, Time2, Time3, Time4

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.weightsThere are 27 rats altogether, each measured five times (labelled time 0 through 4). The rats are also labelled rat (the first column), which will be useful later.

\(\blacksquare\)

- Why would it be wrong to use something like

pivot_longerto create one column of weights, and separate columns of drug and time, and then to run a two-way ANOVA? Explain briefly.

Solution

Such a solution would assume that we have measurements on different rats, one for each drug-time combination. But we have sets of five measurements all on the same rat: that is to say, we have repeated measures, and the proper analysis will take that into account.

\(\blacksquare\)

- Create a suitable response variable and fit a suitable

lmas the first step of the repeated-measures analysis.

Solution

The response variable is the same idea as for any MANOVA: just glue the columns together:

response <- with(weights, cbind(Time0, Time1, Time2, Time3, Time4))

weights.1 <- lm(response ~ drug, data = weights)weights %>% select(starts_with("Time")) %>%

as.matrix() -> yNow, we don’t look at weights.1, but we do use it as input to Manova in a moment.

\(\blacksquare\)

- Load the package

carand run a suitableManova. To do this, you will need to set up the right thing foridataandidesign.

Solution

Something like this:

times <- colnames(response)

times[1] "Time0" "Time1" "Time2" "Time3" "Time4"times.df <- data.frame(times=factor(times))

times.dfweights.2 <- Manova(weights.1, idata = times.df, idesign = ~times)The thought process is that the columns of the response (Time.0 through Time.4) are all times. This is the “within-subject design” part of it: within a rat, the different response values are at different times. That’s the only part of it that is within subjects. The different drugs are a “between-subjects” factor: each rat only gets one of the drugs.4

\(\blacksquare\)

- Take a look at all the output from the MANOVA. Is there a significant interaction? What does its significance (or lack thereof) mean?

Solution

Look at the summary, which is rather long:

summary(weights.2)

Type II Repeated Measures MANOVA Tests:

------------------------------------------

Term: (Intercept)

Response transformation matrix:

(Intercept)

Time0 1

Time1 1

Time2 1

Time3 1

Time4 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 6875579

Multivariate Tests: (Intercept)

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.99257 3204.089 1 24 < 2.22e-16 ***

Wilks 1 0.00743 3204.089 1 24 < 2.22e-16 ***

Hotelling-Lawley 1 133.50372 3204.089 1 24 < 2.22e-16 ***

Roy 1 133.50372 3204.089 1 24 < 2.22e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: drug

Response transformation matrix:

(Intercept)

Time0 1

Time1 1

Time2 1

Time3 1

Time4 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 33193.27

Multivariate Tests: drug

Df test stat approx F num Df den Df Pr(>F)

Pillai 2 0.3919186 7.734199 2 24 0.0025559 **

Wilks 2 0.6080814 7.734199 2 24 0.0025559 **

Hotelling-Lawley 2 0.6445166 7.734199 2 24 0.0025559 **

Roy 2 0.6445166 7.734199 2 24 0.0025559 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: times

Response transformation matrix:

times1 times2 times3 times4

Time0 1 0 0 0

Time1 0 1 0 0

Time2 0 0 1 0

Time3 0 0 0 1

Time4 -1 -1 -1 -1

Sum of squares and products for the hypothesis:

times1 times2 times3 times4

times1 235200.00 178920 116106.67 62906.67

times2 178920.00 136107 88324.00 47854.00

times3 116106.67 88324 57316.15 31053.93

times4 62906.67 47854 31053.93 16825.04

Multivariate Tests: times

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.98265 297.3643 4 21 < 2.22e-16 ***

Wilks 1 0.01735 297.3643 4 21 < 2.22e-16 ***

Hotelling-Lawley 1 56.64082 297.3643 4 21 < 2.22e-16 ***

Roy 1 56.64082 297.3643 4 21 < 2.22e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: drug:times

Response transformation matrix:

times1 times2 times3 times4

Time0 1 0 0 0

Time1 0 1 0 0

Time2 0 0 1 0

Time3 0 0 0 1

Time4 -1 -1 -1 -1

Sum of squares and products for the hypothesis:

times1 times2 times3 times4

times1 9192.071 8948.843 6864.676 3494.448

times2 8948.843 8787.286 6740.286 3381.529

times3 6864.676 6740.286 5170.138 2594.103

times4 3494.448 3381.529 2594.103 1334.006

Multivariate Tests: drug:times

Df test stat approx F num Df den Df Pr(>F)

Pillai 2 0.8779119 4.303151 8 44 0.00069308 ***

Wilks 2 0.2654858 4.939166 8 42 0.00023947 ***

Hotelling-Lawley 2 2.2265461 5.566365 8 40 9.3465e-05 ***

Roy 2 1.9494810 10.722146 4 22 5.6277e-05 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Univariate Type II Repeated-Measures ANOVA Assuming Sphericity

Sum Sq num Df Error SS den Df F value Pr(>F)

(Intercept) 1375116 1 10300.2 24 3204.0892 < 2.2e-16 ***

drug 6639 2 10300.2 24 7.7342 0.002556 **

times 146292 4 4940.7 96 710.6306 < 2.2e-16 ***

drug:times 6777 8 4940.7 96 16.4606 4.185e-15 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Mauchly Tests for Sphericity

Test statistic p-value

times 0.0072565 1.781e-19

drug:times 0.0072565 1.781e-19

Greenhouse-Geisser and Huynh-Feldt Corrections

for Departure from Sphericity

GG eps Pr(>F[GG])

times 0.33165 < 2.2e-16 ***

drug:times 0.33165 2.539e-06 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

HF eps Pr(>F[HF])

times 0.3436277 9.831975e-26

drug:times 0.3436277 1.757377e-06Start near the bottom with Mauchly’s test. This is strongly significant (for the interaction, which is our focus here) and means that sphericity fails and the P-value for the interaction in the univariate test is not to be trusted (it is much too small). Look instead at the Huynh-Feldt adjusted P-value at the very bottom, \(1.76 \times 10^{-6}\). This is strongly significant still, but it is a billion times bigger than the one in the univariate table! For comparison, the test for interaction in the multivariate analysis has a P-value of 0.0007 or less, depending on which of the four tests you look at (this time, they are not all the same). As usual, the multivariate tests have bigger P-values than the appropriately adjusted univariate tests, but the P-values are all pointing in the same direction.

The significant interaction means that the effect of time on growth is different for the different drugs: that is, the effect of drug is over the whole time profile, not just something like “a rat on Thyroxin is on average 10 grams heavier than a control rat, over all times”.

Since the interaction is significant, that’s where we stop, as far as interpretation is concerned.

\(\blacksquare\)

- We are going to draw an interaction plot in a moment. To set that up, use

pivot_longeras in the lecture notes to create one column of weights and a second column of times. (You don’t need to do theseparatething that I did in class, though if you want to try it, go ahead.)

Solution

Like this:

weights %>%

pivot_longer(starts_with("Time"), names_to="time", values_to="weight") -> weights.long

weights.longMy data frame was called weights, so I was OK with having a variable called weight. Watch out for that if you call the data frame weight, though.

Since the piece of the time we want is the number, parse_number (from readr, part of the tidyverse) should also work:

weights %>%

pivot_longer(starts_with("Time"), names_to="timex", values_to="weight") %>%

mutate(time = parse_number(timex)) -> weights2.long

weights2.long %>% sample_n(20)I decided to show you a random collection of rows, so that you can see that parse_number worked for various different times.

\(\blacksquare\)

- Obtain an interaction plot. Putting

timeas thexwill put time along the horizontal axis, which is the way we’re used to seeing such things. Begin by calculating the meanweightfor eachtime-drugcombination.

Solution

group_by, summarize and ggplot, the latter using the data frame that came out of the summarize. The second factor drug goes as the colour and group both, since time has grabbed the x spot:

weights.long %>%

group_by(time, drug) %>%

summarize(mean.weight = mean(weight)) %>%

ggplot(aes(x = time, y = mean.weight, colour = drug, group = drug)) +

geom_point() + geom_line()`summarise()` has grouped output by 'time'. You can override using the

`.groups` argument.

\(\blacksquare\)

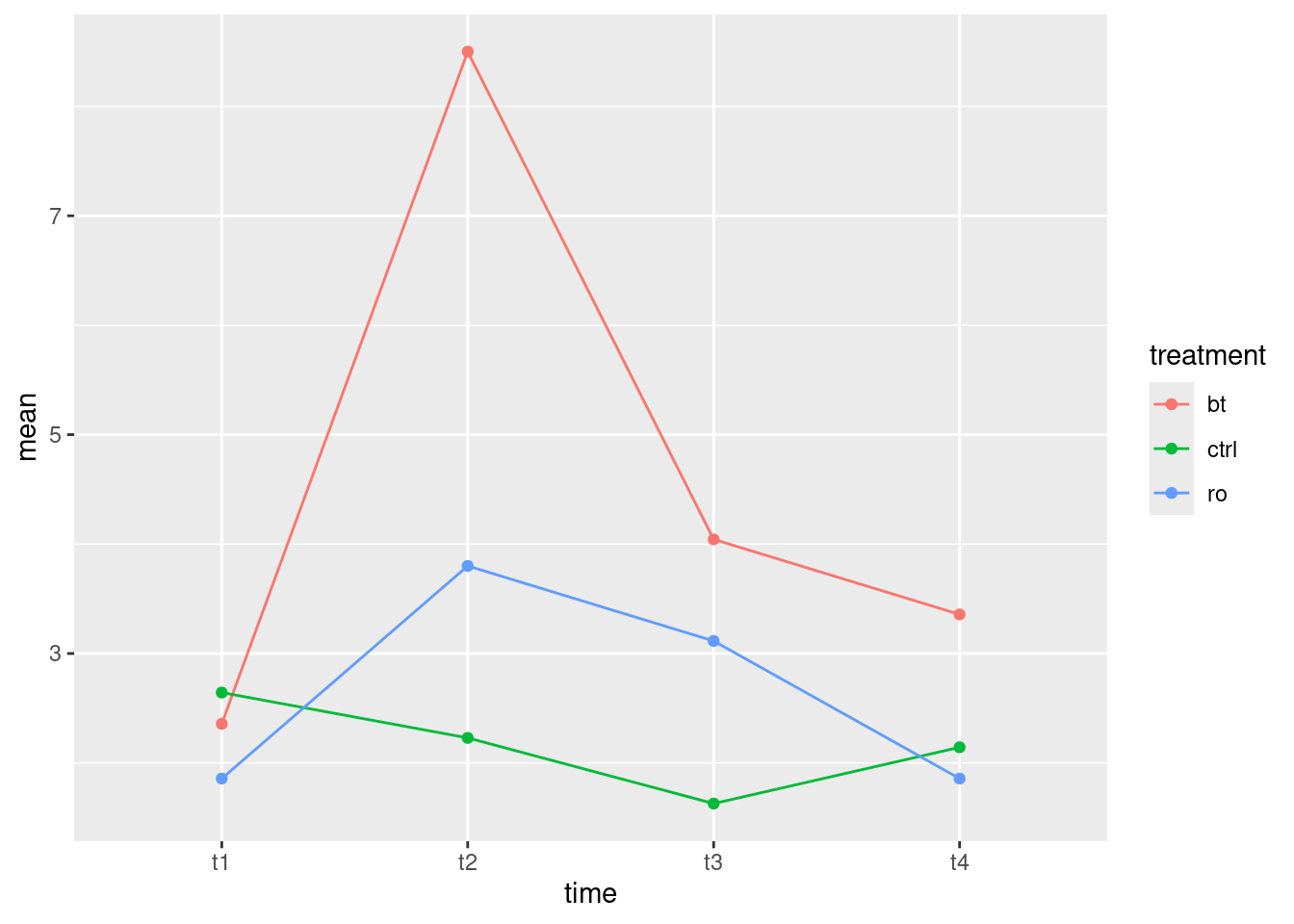

- How does this plot show why the interaction was significant? Explain briefly.

Solution

At the beginning, all the rats have the same average growth, but from time 2 (or maybe even 1) or so, the rats on thiouracil grew more slowly. The idea is not just that thiouracil has a constant effect over all times, but that the pattern of growth is different for the different drugs: whether or not thiouracil inhibits growth, and, if so, by how much, depends on what time point you are looking at.

Rats on thyroxin or the control drug grew at pretty much the same rate over all times, so I wouldn’t concern myself with any differences there.

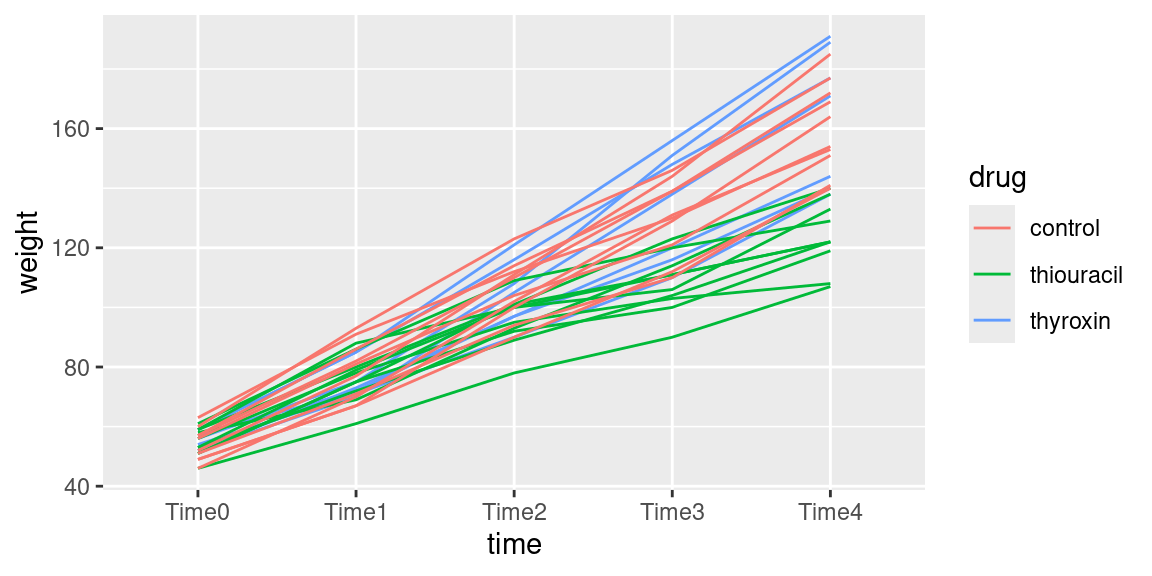

What I thought would be interesting is to plot the growth curves for all the rats individually, colour-coded by which drug the rat was on. This is the repeated-measures version of the ANOVA interaction plot with the data on it, a so-called spaghetti plot. (We don’t use the lines for the means, here, instead using them for joining the measurements belonging to the same subject.)

When I first used this data set, it didn’t have a column identifying which rat was which, which made this plot awkward, but now it does (the column rat). So we can start directly from the dataframe I created above called weights.long:

weights.longEach rat is identified by rat``, which repeats 5 times, once for each value oftime`:

weights.long %>% count(rat)In the data frame weights.long, we plot time (\(x\)) against weight (\(y\)), grouping the points according to rat and colouring them according to drug.

library(ggplot2)

ggplot(weights.long, aes(time, weight, group = rat, colour = drug)) + geom_line()

As you see, “spaghetti plot” is a rather apt name for this kind of thing.

I like this plot because, unlike the interaction plot, which shows only means, this gives a sense of variability as well. The blue and red lines (thyroxin and control) are all intermingled and they go straight up. So there is nothing to choose between these. The green lines, though, start off mixed up with the red and blue ones but finish up at the bottom: the pattern of growth of the thiouracil rats is different from the others, which is why we had a significant interaction between drug and time.

drug is categorical, so ggplot uses a set of distinguishable colours to mark the levels. If our colour had been a numerical variable, ggplot would have used a range of colours like light blue to dark blue, with lighter being higher, for example.

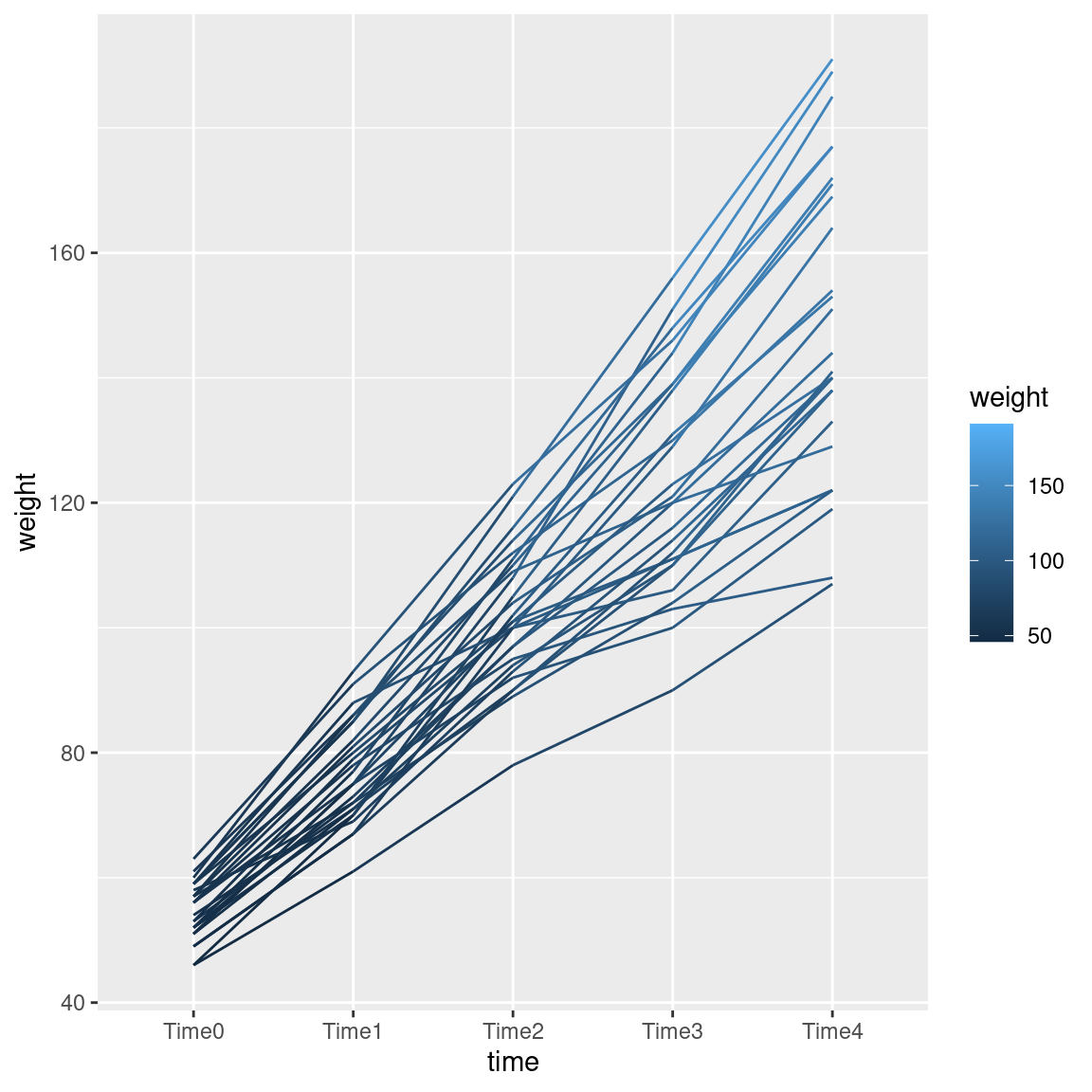

What, you want to see that? All right. This one is kind of silly, but you see the point:

ggplot(weights.long, aes(time, weight, group = rat, colour = weight)) + geom_line()

The line segments get lighter as you go up the page.

Since we went to the trouble of making the “long” data frame, we can also run a repeated measures analysis using the mixed-model idea (described more fully in the problem of the children near the new airport):

wt.1 <- lmer(weight ~ drug * time + (1 | rat), data = weights.long)

drop1(wt.1, test = "Chisq")The drug-by-time interaction is even more strongly significant than in the profile analysis. (The output from drop1 reminds us that the only thing we should be thinking about now is that interaction.)

\(\blacksquare\)

34.9 Children’s stress levels and airports

If you did STAC32, you might remember this question, which we can now do properly. Some of this question is a repeat from there.

The data in link are based on a 1998 study of stress levels in children as a result of the building of a new airport in Munich, Germany. A total of 200 children had their epinephrine levels (a stress indicator) measured at each of four different times: before the airport was built, and 6, 18 and 36 months after it was built. The four measurements are labelled epi_1 through epi_4. Out of the children, 100 were living near the new airport (location 1 in the data set), and could be expected to suffer stress because of the new airport. The other 100 children lived in the same city, but outside the noise impact zone. These children thus serve as a control group. The children are identified with numbers 1 through 200.

- If we were testing for the effect of time, explain briefly what it is about the structure of the data that would make an analysis of variance inappropriate.

Solution

It is the fact that each child was measured four times, rather than each measurement being on a different child (with thus \(4\times 200=800\) observations altogether). It’s the same distinction as between matched pairs and a two-sample \(t\) test.

\(\blacksquare\)

- Read the data into R and demonstrate that you have the right number of observations and variables.

Solution

The usual, data values separated by one space:

my_url <- "http://ritsokiguess.site/datafiles/airport.txt"

airport <- read_delim(my_url, " ")Rows: 200 Columns: 6

── Column specification ────────────────────────────────────────────────────────

Delimiter: " "

dbl (6): epi_1, epi_2, epi_3, epi_4, location, child

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.airportThere are 200 rows (children), with four epi measurements, a location and a child identifier, so that looks good.

(I am mildly concerned about the negative epi measurements, but I don’t know what the scale is, so presumably they are all right. Possibly epinephrine is measured on a log scale, so that a negative value here is less than 1 on the original scale that we don’t see.)

\(\blacksquare\)

- Create and save a “longer” data frame with all the epinephrine values collected together into one column.

Solution

pivot_longer:

airport %>% pivot_longer(starts_with("epi"), names_to="when", values_to="epinephrine") -> airport.long

airport.longSuccess. I’m saving the name time for later, so I’ve called the time points when for now. There were 4 measurements on each of 200 children, so the long data frame should (and does) have \(200\times 4 = 800\) rows.

\(\blacksquare\)

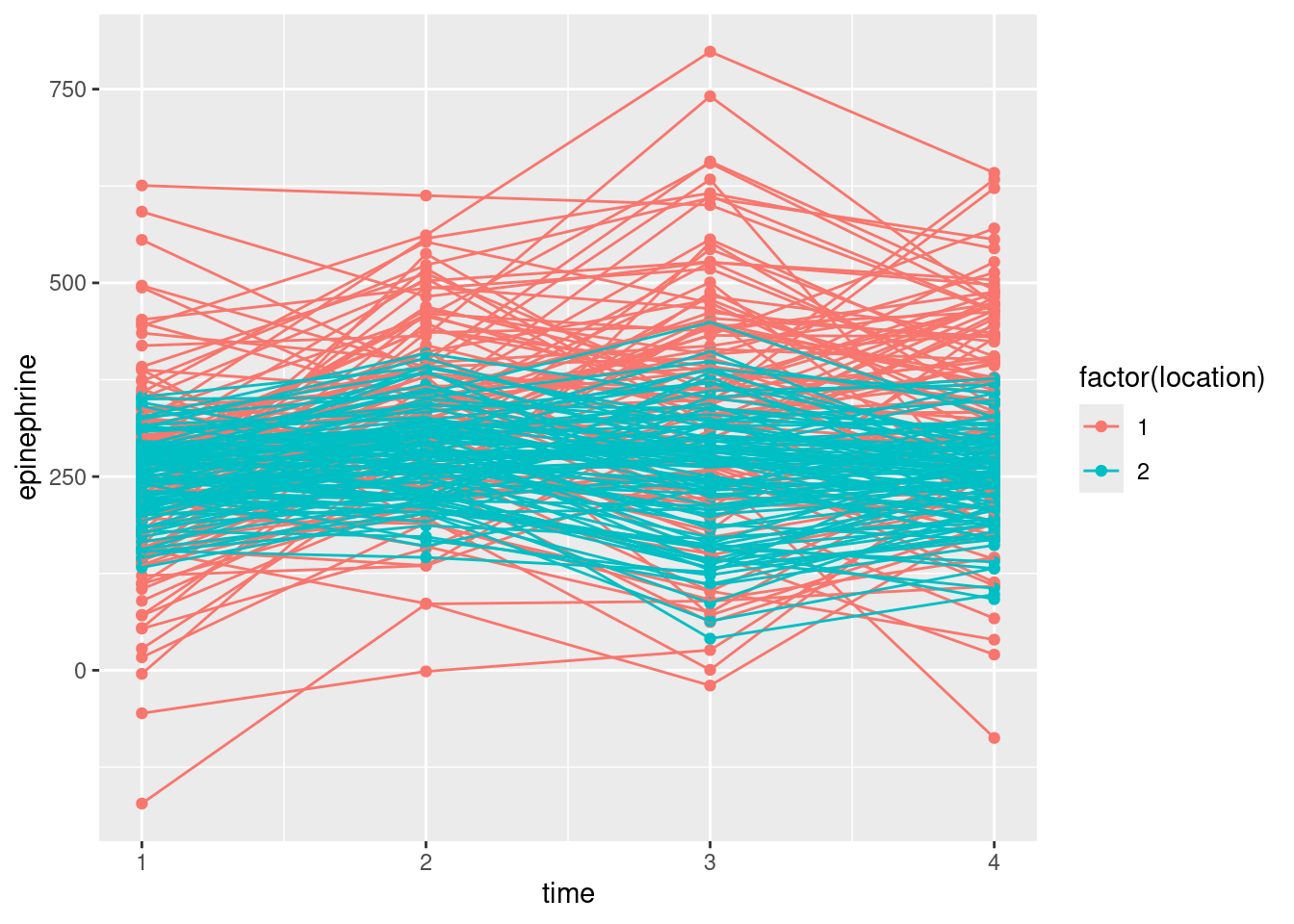

- Make a “spaghetti plot” of these data: that is, a plot of epinephrine levels against time, with the locations identified by colour, and the points for the same child joined by lines. To do this: (i) from the long data frame, create a new column containing only the numeric values of time (1 through 4), (ii) plot epinephrine level against time with the points grouped by child and coloured by location (which you may have to turn from a number into a factor.)

Solution

Note the use of the different things for colour and group, as usual for a spaghetti plot. Also, note that the locations are identified by number, but the number is only a label, and we want to use different colours for the different locations, so we need to turn location into a factor for this.

airport.long %>%

mutate(time = parse_number(when)) %>%

ggplot(aes(x = time, y = epinephrine, colour = factor(location), group = child)) +

geom_point() + geom_line()

This13 is different from the plot we had in C32, where I had you use a different colour for each child, and we ended up with a huge legend of all the children (which we then removed).

If you forget to turn location into a factor, ggplot will assume that you want location to be on a continuous scale, and you’ll get two shades of blue.

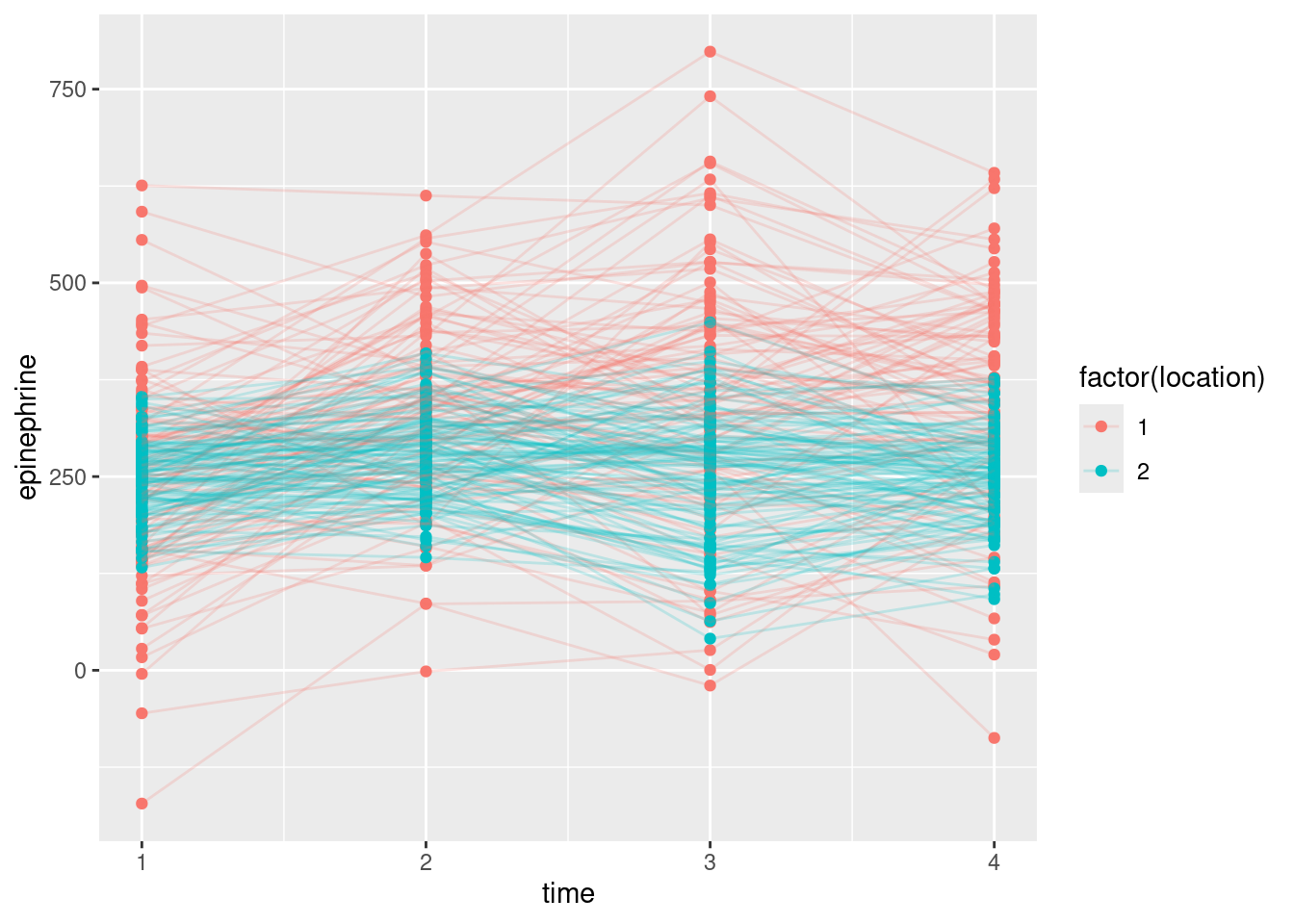

Another problem with this plot is that there are so many children, you can’t see the ones underneath because the ones on top are overwriting them. The solution to that is to make the lines (partly) transparent, which is controlled by a parameter alpha:14

airport.long %>%

mutate(time = parse_number(when)) %>%

ggplot(aes(x = time, y = epinephrine, colour = factor(location), group = child)) +

geom_point() + geom_line(alpha = 0.2)

It seems to make the lines skinnier, so they look more like threads. Even given the lesser thickness, they seem to be a little bit see-through as well. You can experiment with adding transparency to the points in addition.

\(\blacksquare\)

- What do you see on your spaghetti plot? We are looking ahead to possible effects of time, location and their interaction.

Solution

This is not clear, so it’s very much your call. I see the red spaghetti strands as going up further (especially) and maybe down further than the blue ones. The epinephrine levels of the children near the new airport are definitely more spread out, and maybe have a higher mean, than those of the control group of children not near the airport. The red spaghetti strands show something of an increase over time, at least up to time 3, after which they seem to drop again. The blue strands, however, don’t show any kind of trend over time. Since the time trend is different for the two locations, I would expect to see a significant interaction.

\(\blacksquare\)

- The spaghetti plot was hard to interpret because there are so many children. Calculate the mean epinephrine levels for each location-time combination, and make an interaction plot with time on the \(x\)-axis and location as the second factor.

Solution

We’ve done this before:

airport.long %>%

mutate(time = parse_number(when)) %>%

mutate(floc = factor(location)) %>%

group_by(floc, time) %>%

summarize(mean.epi = mean(epinephrine)) %>%

ggplot(aes(x = time, y = mean.epi, group = floc, colour = floc)) +

geom_point() + geom_line()`summarise()` has grouped output by 'floc'. You can override using the

`.groups` argument.

I wanted the actual numerical times, so I made them again. Also, it seemed to be easier to create a factor version of the numeric location up front, and then use it several times later. I’m actually not sure that you need it here, since group_by works with the distinct values of a variable, whatever they are, and group in a boxplot may or may not insist on something other than a number. I should try it:

airport.long %>%

mutate(time = parse_number(when)) %>%

group_by(location, time) %>%

summarize(mean.epi = mean(epinephrine)) %>%

ggplot(aes(x = time, y = mean.epi, group = location, colour = location)) +

geom_point() + geom_line()`summarise()` has grouped output by 'location'. You can override using the

`.groups` argument.

It seems that colour requires a non-number:

airport.long %>%

mutate(time = parse_number(when)) %>%

group_by(location, time) %>%

summarize(mean.epi = mean(epinephrine)) %>%

ggplot(aes(x = time, y = mean.epi, group = location, colour = factor(location))) +

geom_point() + geom_line()`summarise()` has grouped output by 'location'. You can override using the

`.groups` argument.

With a long pipeline like this, none of us get it right the first time (I certainly didn’t), so be prepared to debug it one line at a time. The way I like to do this is to take the pipe symbol and move it down to the next line (moving the cursor to just before it and hitting Enter). This ends the pipe at the end of this line and displays what it produces so far. When you are happy with that, go to the start of the next line (that currently has a pipe symbol by itself) and hit Backspace to move the pipe symbol back where it was. Then go to the end of the next line (where the next pipe symbol is), move that to a line by itself, and so on. Keep going until each line produces what you want, and when you are finished, the whole pipeline will do what you want.

\(\blacksquare\)

- What do you conclude from your interaction plot? Is your conclusion clearer than from the spaghetti plot?

Solution

The two “traces” are not parallel, so I would expect to see an interaction between location and time. The big difference seems to be between times 1 and 2; the traces are the same at time 1, and more or less parallel after time 2. Between times 1 and 2, the mean epinephrine level of the children near the new airport increases sharply, whereas for the children in the control group it increases much less. This, to my mind, is very much easier to interpret than the spaghetti plot, even the second version with the thinner strands, because there is a lot of variability there that obscures the overall pattern. The interaction plot is plain as day, but it might be an oversimplification because it doesn’t show variability.

\(\blacksquare\)

- Run a repeated-measures analysis of variance and display the results. Go back to your original data frame, the one you read in from the file, for this. You’ll need to make sure your numeric

locationgets treated as afactor.

Solution

The usual process. I’ll try the other way I used of making the response:

airport %>%

select(epi_1:epi_4) %>%

as.matrix() -> response

airport.1 <- lm(response ~ factor(location), data = airport)

times <- colnames(response)

times.df <- data.frame(times=factor(times))

airport.2 <- Manova(airport.1, idata = times.df, idesign = ~times)

summary(airport.2)

Type II Repeated Measures MANOVA Tests:

------------------------------------------

Term: (Intercept)

Response transformation matrix:

(Intercept)

epi_1 1

epi_2 1

epi_3 1

epi_4 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 268516272

Multivariate Tests: (Intercept)

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.920129 2281 1 198 < 2.22e-16 ***

Wilks 1 0.079871 2281 1 198 < 2.22e-16 ***

Hotelling-Lawley 1 11.520204 2281 1 198 < 2.22e-16 ***

Roy 1 11.520204 2281 1 198 < 2.22e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: factor(location)

Response transformation matrix:

(Intercept)

epi_1 1

epi_2 1

epi_3 1

epi_4 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 3519790

Multivariate Tests: factor(location)

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.1311980 29.90002 1 198 1.3611e-07 ***

Wilks 1 0.8688020 29.90002 1 198 1.3611e-07 ***

Hotelling-Lawley 1 0.1510102 29.90002 1 198 1.3611e-07 ***

Roy 1 0.1510102 29.90002 1 198 1.3611e-07 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: times

Response transformation matrix:

times1 times2 times3

epi_1 1 0 0

epi_2 0 1 0

epi_3 0 0 1

epi_4 -1 -1 -1

Sum of squares and products for the hypothesis:

times1 times2 times3

times1 497500.84 -113360.08 -56261.667

times2 -113360.08 25830.12 12819.731

times3 -56261.67 12819.73 6362.552

Multivariate Tests: times

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.3274131 31.80405 3 196 < 2.22e-16 ***

Wilks 1 0.6725869 31.80405 3 196 < 2.22e-16 ***

Hotelling-Lawley 1 0.4867966 31.80405 3 196 < 2.22e-16 ***

Roy 1 0.4867966 31.80405 3 196 < 2.22e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: factor(location):times

Response transformation matrix:

times1 times2 times3

epi_1 1 0 0

epi_2 0 1 0

epi_3 0 0 1

epi_4 -1 -1 -1

Sum of squares and products for the hypothesis:

times1 times2 times3

times1 533081.68 206841.01 -14089.6126

times2 206841.01 80256.38 -5466.9104

times3 -14089.61 -5466.91 372.3954

Multivariate Tests: factor(location):times

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.2373704 20.33516 3 196 1.6258e-11 ***

Wilks 1 0.7626296 20.33516 3 196 1.6258e-11 ***

Hotelling-Lawley 1 0.3112525 20.33516 3 196 1.6258e-11 ***

Roy 1 0.3112525 20.33516 3 196 1.6258e-11 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Univariate Type II Repeated-Measures ANOVA Assuming Sphericity

Sum Sq num Df Error SS den Df F value Pr(>F)

(Intercept) 67129068 1 5827073 198 2281.000 < 2.2e-16 ***

factor(location) 879947 1 5827073 198 29.900 1.361e-07 ***

times 475671 3 3341041 594 28.190 < 2.2e-16 ***

factor(location):times 366641 3 3341041 594 21.728 2.306e-13 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Mauchly Tests for Sphericity

Test statistic p-value

times 0.9488 0.066194

factor(location):times 0.9488 0.066194

Greenhouse-Geisser and Huynh-Feldt Corrections

for Departure from Sphericity

GG eps Pr(>F[GG])

times 0.96685 < 2.2e-16 ***

factor(location):times 0.96685 5.35e-13 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

HF eps Pr(>F[HF])

times 0.9827628 8.418534e-17

factor(location):times 0.9827628 3.571900e-13\(\blacksquare\)

- What do you conclude from the MANOVA? Is that consistent with your graphs? Explain briefly.

Solution

Start with Mauchly’s test near the bottom. This is not quite significant (for the interaction), so we are entitled to look at the univariate test for interaction, which is \(2.3 \times 10^{-13}\), extremely significant. If you want a comparison, look at the Huynh-Feldt adjustment for the interaction, which is almost exactly the same (\(3.57 \times 10^{-13}\)), or the multivariate tests for the interaction (almost the same again).

So, we start and end with the significant interaction: there is an effect of location, but the nature of that effect depends on time. This is the same as we saw in the interaction plot: from time 2 on, the mean epinephrine levels for the children near the new airport were clearly higher.

If you stare at the spaghetti plot, you might come to the same conclusion. Or you might not! I suppose those red dots at time 2 are mostly at the top, and generally so afterwards, whereas at time 1 they are all mixed up with the blue ones.

Interactions of this sort in this kind of analysis are very common. There is an “intervention” or “treatment”, and the time points are chosen so that the first one is before the treatment happens, and the other time points are after. Then, the results are very similar for the first time point, and very different after that, rather than being (say) always higher for the treatment group by about the same amount for all times (in which case there would be no interaction).

So, you have some choices in practice as to how you might go. You might do the MANOVA, get a significant interaction, and draw an interaction plot to see why. You might stop there, or you might do something like what we did in class: having seen that the first time point is different from the others for reasons that you can explain, do the analysis again, but omitting the first time point. For the MANOVA, that means tweaking your definition of your response to omit the first time point. The rest of it stays the same, though you might want to change your model numbers rather than re-using the old ones as I did:

airport %>%

select(epi_2:epi_4) %>%

as.matrix() -> response

airport.1 <- lm(response ~ factor(location), data = airport)

times <- colnames(response)

times.df <- data.frame(times=factor(times))

airport.2 <- Manova(airport.1, idata = times.df, idesign = ~times)

summary(airport.2)Warning in summary.Anova.mlm(airport.2): HF eps > 1 treated as 1

Type II Repeated Measures MANOVA Tests:

------------------------------------------

Term: (Intercept)

Response transformation matrix:

(Intercept)

epi_2 1

epi_3 1

epi_4 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 165867956

Multivariate Tests: (Intercept)

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.918531 2232.383 1 198 < 2.22e-16 ***

Wilks 1 0.081469 2232.383 1 198 < 2.22e-16 ***

Hotelling-Lawley 1 11.274662 2232.383 1 198 < 2.22e-16 ***

Roy 1 11.274662 2232.383 1 198 < 2.22e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: factor(location)

Response transformation matrix:

(Intercept)

epi_2 1

epi_3 1

epi_4 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 3567099

Multivariate Tests: factor(location)

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.1951509 48.00886 1 198 5.8219e-11 ***

Wilks 1 0.8048491 48.00886 1 198 5.8219e-11 ***

Hotelling-Lawley 1 0.2424690 48.00886 1 198 5.8219e-11 ***

Roy 1 0.2424690 48.00886 1 198 5.8219e-11 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: times

Response transformation matrix:

times1 times2

epi_2 1 0

epi_3 0 1

epi_4 -1 -1

Sum of squares and products for the hypothesis:

times1 times2

times1 25830.12 12819.731

times2 12819.73 6362.552

Multivariate Tests: times

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.0123563 1.232325 2 197 0.29385

Wilks 1 0.9876437 1.232325 2 197 0.29385

Hotelling-Lawley 1 0.0125109 1.232325 2 197 0.29385

Roy 1 0.0125109 1.232325 2 197 0.29385

------------------------------------------

Term: factor(location):times

Response transformation matrix:

times1 times2

epi_2 1 0

epi_3 0 1

epi_4 -1 -1

Sum of squares and products for the hypothesis:

times1 times2

times1 80256.38 -5466.9104

times2 -5466.91 372.3954

Multivariate Tests: factor(location):times

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.0508561 5.277736 2 197 0.0058507 **

Wilks 1 0.9491439 5.277736 2 197 0.0058507 **

Hotelling-Lawley 1 0.0535811 5.277736 2 197 0.0058507 **

Roy 1 0.0535811 5.277736 2 197 0.0058507 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Univariate Type II Repeated-Measures ANOVA Assuming Sphericity

Sum Sq num Df Error SS den Df F value Pr(>F)

(Intercept) 55289319 1 4903856 198 2232.3830 < 2.2e-16 ***

factor(location) 1189033 1 4903856 198 48.0089 5.822e-11 ***

times 12915 2 2281728 396 1.1207 0.327070

factor(location):times 57397 2 2281728 396 4.9807 0.007306 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Mauchly Tests for Sphericity

Test statistic p-value

times 0.99068 0.39746

factor(location):times 0.99068 0.39746

Greenhouse-Geisser and Huynh-Feldt Corrections

for Departure from Sphericity

GG eps Pr(>F[GG])

times 0.99076 0.326702

factor(location):times 0.99076 0.007483 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

HF eps Pr(>F[HF])

times 1.000731 0.327069634

factor(location):times 1.000731 0.007305714The interaction is still significant. The sphericity test is not significant, so you can use the 0.0073 in the univariate tests as your P-value (note that the Huynh-Feldt adjustment is actually not adjusted at all).

So there is still not a consistent effect of being near the new airport on epinephrine levels: that is to say, the effect of the new airport still varies over time. That might be because (looking at the interaction plot) for the children near the new airport, the mean epinephrine level went up again between times 2 and 3, whereas for the control children it (for some reason) went dramatically down over the same time period.

We have lots of data here (200 children), so the significant interaction effect also might not be very big.

Experimental designs like this are kind of awkward, because you expect there to be some kind of pattern over time for the treatment group, that will vary over time, whereas for the control group, you expect there to be no pattern over time. So a significant difference shows up as an interaction, which is messier to interpret than you would like.

Extra: the other way to analyze repeated measures data 15 is to treat them as “mixed models”, which requires a different kind of analysis using the lme4 package. I always forget how these go, and I have to look them up when I need them, but the idea is this: the treatments you observe, and the time points at which you observe them, are typically the only ones you care about (a so-called “fixed effect”), but the individuals (children, here) which you happen to observe are something like a random sample of all the children you might have observed (a so-called “random effect”). Models with random effects in them are called “mixed models” (or, I suppose, models with both fixed and random effects). This matters because you have repeated observations on the same child. Some people like to think of this in terms of “sources of variability”: the epinephrine levels vary because of the location and time at which they were observed, but also because of the particular child they happen to have been observed for: each child has a “random effect” that they carry with them through all the time points at which they are observed.

Let’s see if we can make it fly for this example. We need the data in “long” format, the way we arranged it for graphing: the data frame airport.long. I’d like to convert things to factors first:

airport.long %>% mutate(

fchild = factor(child),

flocation = factor(location)

) -> fairport

airport.3 <- lmer(epinephrine ~ flocation * when + (1 | fchild), data = fairport)

anova(airport.3)drop1(airport.3, test = "Chisq")The anova doesn’t actually give us any tests, but what you see in the ANOVA table are the fixed effects. These are testable. The easiest way to see what you can get rid of is drop1; the chi-squared test appears to be the right one (more on that below). This says that the interaction is strongly significant, and we should not consider removing it, the same conclusion as our “profile analysis” before. The other choice for testing is to fit a model without what you are testing and use anova to compare the two models:

airport.4 <- update(airport.3, . ~ . - flocation:when)

anova(airport.4, airport.3)refitting model(s) with ML (instead of REML)There are technical considerations involved in comparing the fit of two models (which is the reason for the “refitting models with…”): there is one method for estimating and a different method for testing. The test is based on “likelihood ratio”, which means that the right test for the drop1 above is Chisq.

If you omit the test in drop1, it just gives you AIC values, which you can use for an informal assessment. In this case, <none> has a much smaller AIC than the interaction (smaller by over 50), so there’s no way we should entertain taking out the interaction. However, if it had not been significant, we would just take it out by fitting a model like airport4: there is no distinction here between “within-subject” and “between-subject” factors that prevented us from taking the interaction out in profile analysis.

As ever when an interaction is significant, we might think about simple effects: that is, look at the two locations separately. That makes sense here because of the kind of experimental design it is: we expect a different kind of relationship with time for the “treatment” children (the ones living near the new airport) as compared to the control children, the ones who live farther away. That approach would work with either the profile-analysis way using Manova or the mixed-modelling way using lmer. In either case, we’d expect to see a time effect at location 1 but not at location 2. (Having separated out locations, only the time effect would be left for testing.) I guess I have to show you that, but I have to get ready for class first.

Later

The nice thing about Wednesday evenings is that I am so tired from class that I have energy for almost nothing except playing with these things. So let’s have a crack at it.

Let’s start with location 1, at which we expect there to be something happening. This is really a simple effect of time at location 1, but in repeated measures guise. The awkwardness is that the profile analysis needs the wide-format data, while the mixed-model analysis needs long format, so we’ll have to repeat our process, once for each format of data set:

loc1 <- airport %>% filter(location == 1)

response <- loc1 %>% select(epi_1:epi_4) %>% as.matrix()

loc1.1 <- lm(response ~ 1, data = loc1)

times <- colnames(response)

times.df <- data.frame(times=factor(times))

loc1.2 <- Manova(loc1.1, idata = times.df, idesign = ~times)Note: model has only an intercept; equivalent type-III tests substituted.summary(loc1.2)Warning in summary.Anova.mlm(loc1.2): HF eps > 1 treated as 1

Type III Repeated Measures MANOVA Tests:

------------------------------------------

Term: (Intercept)

Response transformation matrix:

(Intercept)

epi_1 1

epi_2 1

epi_3 1

epi_4 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 166760848

Multivariate Tests: (Intercept)

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.897288 864.8584 1 99 < 2.22e-16 ***

Wilks 1 0.102712 864.8584 1 99 < 2.22e-16 ***

Hotelling-Lawley 1 8.735944 864.8584 1 99 < 2.22e-16 ***

Roy 1 8.735944 864.8584 1 99 < 2.22e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: times

Response transformation matrix:

times1 times2 times3

epi_1 1 0 0

epi_2 0 1 0

epi_3 0 0 1

epi_4 -1 -1 -1

Sum of squares and products for the hypothesis:

times1 times2 times3

times1 1030275.32 87978.051 -71100.691

times2 87978.05 7512.688 -6071.484

times3 -71100.69 -6071.484 4906.755

Multivariate Tests: times

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.4542272 26.90988 3 97 9.4963e-13 ***

Wilks 1 0.5457728 26.90988 3 97 9.4963e-13 ***

Hotelling-Lawley 1 0.8322643 26.90988 3 97 9.4963e-13 ***

Roy 1 0.8322643 26.90988 3 97 9.4963e-13 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Univariate Type III Repeated-Measures ANOVA Assuming Sphericity

Sum Sq num Df Error SS den Df F value Pr(>F)

(Intercept) 41690212 1 4772262 99 864.858 < 2.2e-16 ***

times 776618 3 2774062 297 27.716 7.869e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Mauchly Tests for Sphericity

Test statistic p-value

times 0.95865 0.53129

Greenhouse-Geisser and Huynh-Feldt Corrections

for Departure from Sphericity

GG eps Pr(>F[GG])

times 0.97423 1.748e-15 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

HF eps Pr(>F[HF])

times 1.007064 7.869406e-16This is one of those times where a pipe doesn’t quite do it. We need to grab the data for location 1, but then we need to do two things with it: one, create a response variable, and two, feed the response variable and the location 1 data into lm. Pipes are for linear sequences of things to do, and this one diverges. So, save what you need to save, and then do it without a pipe.

This is one of those explanatory-variable-less repeated measures where you only have things varying over time. Here, the treatment was location, and we’re only looking at one of those locations. We still have a within-subject design (the four times), but there is no between-subject design left.

The conclusion is that there is very strong evidence of a time effect for location 1, as we would have guessed.

Now, let’s see whether the mixed-model analysis comes to the same conclusion. This time, we have to start from the long data set (the one I’m using is the one called fairport with things as factors) and pull out the rows for location 1. The only remaining fixed effect is time:

fairport %>%

filter(location == 1) %>%

lmer(epinephrine ~ when + (1 | fchild), data = .) %>%

drop1(test = "Chisq")The effect of time is, once again, substantial. The P-value is not the same, because the test is not the same, but it is very similar and points to exactly the same conclusion.

I should perhaps point out that you don’t have to do these models in a pipe. I just did because it was easier for me. But there’s nothing wrong with doing it like this:

tmp <- fairport %>% filter(location == 1)

tmp.1 <- lmer(epinephrine ~ when + (1 | child), data = tmp)

drop1(tmp.1, test = "Chisq")and it gives exactly the same result.

This last model (in either of its versions) is a so-called random intercepts model. What it says is that epinephrine level for a child depends on time, but the effect of being one child rather than another is to shunt the mean epinephrine level up or down by a fixed amount for all times. It seems to me that this is a very reasonable way to model the child-to-child variation in this case, but in other cases, things might be different. lmer allows more sophisticated things, like for example the random child effect depending linearly on time. To do that, you’d rewrite the above like this:

fairport %>%

filter(location == 1) %>%

lmer(epinephrine ~ when + (1 + when | fchild), data = .) %>%

drop1(test = "Chisq")The change is on the lmer line: the bit in brackets has a linear model in when for each child. I didn’t run that, so I’m not certain it works,16 but that’s the idea.

All right, let’s get on to location 2. This was the control location, so we expect to see no dependence of epinephrine level on time, either in the profile analysis or in the mixed-model analysis. There is a large amount of copying and pasting coming up:

airport %>% filter(location == 2) -> loc2

loc2 %>% select(epi_1:epi_4) %>% as.matrix() -> response

loc2.1 <- lm(response ~ 1, data = loc1)

times <- colnames(response)

times.df <- data.frame(times=factor(times))

loc2.2 <- Manova(loc2.1, idata = times.df, idesign = ~times)Note: model has only an intercept; equivalent type-III tests substituted.summary(loc2.2)

Type III Repeated Measures MANOVA Tests:

------------------------------------------

Term: (Intercept)

Response transformation matrix:

(Intercept)

epi_1 1

epi_2 1

epi_3 1

epi_4 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 105275213

Multivariate Tests: (Intercept)

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.961466 2470.169 1 99 < 2.22e-16 ***

Wilks 1 0.038534 2470.169 1 99 < 2.22e-16 ***

Hotelling-Lawley 1 24.951203 2470.169 1 99 < 2.22e-16 ***

Roy 1 24.951203 2470.169 1 99 < 2.22e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: times

Response transformation matrix:

times1 times2 times3

epi_1 1 0 0

epi_2 0 1 0

epi_3 0 0 1

epi_4 -1 -1 -1

Sum of squares and products for the hypothesis:

times1 times2 times3

times1 307.1984 5502.882 749.4117

times2 5502.8823 98573.811 13424.3048

times3 749.4117 13424.305 1828.1931

Multivariate Tests: times

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.3023560 14.01313 3 97 1.1613e-07 ***

Wilks 1 0.6976440 14.01313 3 97 1.1613e-07 ***

Hotelling-Lawley 1 0.4333957 14.01313 3 97 1.1613e-07 ***

Roy 1 0.4333957 14.01313 3 97 1.1613e-07 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Univariate Type III Repeated-Measures ANOVA Assuming Sphericity

Sum Sq num Df Error SS den Df F value Pr(>F)

(Intercept) 26318803 1 1054811 99 2470.169 < 2.2e-16 ***

times 65694 3 566979 297 11.471 3.884e-07 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Mauchly Tests for Sphericity

Test statistic p-value

times 0.77081 0.00011477

Greenhouse-Geisser and Huynh-Feldt Corrections

for Departure from Sphericity

GG eps Pr(>F[GG])

times 0.84304 2.433e-06 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

HF eps Pr(>F[HF])

times 0.8669702 1.838348e-06I think I changed everything I needed to.

There actually is still an effect of time. This is true even though sphericity fails and so the Huynh-Feldt adjustment to the P-value is fairly substantial; even so, it is still clearly significant.

What kind of effect? We can look at that by finding epinephrine means at each time. That would be easier if we had long format, but I think we can still do it. The magic word is across:

airport %>%

filter(location == 2) %>%

summarize(across(starts_with("epi"), \(epi) mean(epi)))(in words, “for each column that starts with epi, find the mean of it.”)

The message here is that the mean epinephrine levels at time 2 were higher than the others, so that’s what seems to be driving the time effect. And remember, these were the control children, so they had no new airport nearby.

I want to try this:

airport %>% nest_by(location)This is like the idea in the Spilich problem: I want to write a function that calculates the means of the epi_1 through epi_4 columns of a data frame like airport, and then apply it to each of those “nested” data frames.

epi.means <- function(x) {

x %>% summarize(across(starts_with("epi"), \(epi) mean(epi)))

}

epi.means(airport)OK, and then:

airport %>%

nest_by(location) %>%

rowwise() %>%

mutate(means = list(epi.means(data))) %>%

unnest(means)Ooh, nice. There are the means for the four time points at both of the two locations. At location 1, epinephrine starts out low, jumps high after the airport is built, and stays there, while at location 2, the mean is mysteriously higher at time 2 and then epinephrine levels go back down again.

Enough of that. Back to the mixed model analysis, with more copying and pasting. Here is the “simple time effect” at location 2:

fairport %>%

filter(location == 2) %>%

lmer(epinephrine ~ when + (1 | fchild), data = .) %>%

drop1(test = "Chisq")This also finds a time effect at location 2, with a very similar P-value.

So which method is preferred? They are, I think, two different ways of approaching the same problem, and so there is no good reason to prefer one way over the other. The profile-analysis way is easier to follow if you are coming from a MANOVA direction: set it up the same way as you would a MANOVA, with your repeated measures as your multivariate response, and distinguish between the within-subject design (times, for us) and the between-subject design (treatments, stuff like that). As for mixed models: when you get your head around that crazy way of specifying the random effects, which are typically “subjects” in this kind of analysis, the actual model statement is brief: response depends on fixed effects plus random effects with the brackets and vertical bar. I always find the lmer models look very simple once you have figured them out (like ggplot in that regard). I also like the fact that lmer uses “tidy data”, so that you can make graphs and do this flavour of analysis with the same data frame. Having gotten my head around getting my data tidy, it seems odd to me that profile analysis requires the data to be untidy, although it does so for a good reason: if you were genuinely doing a MANOVA, you would want your multiple response variables each in their own column. Doing repeated measures this way is thinking of your responses at different times like different variables.

The approach I’ve taken in this course is for historical reasons. That is to say, my history, not because the historical perspective is necessarily the best one. It began with doing repeated measures in SAS (this was many years ago; I don’t teach SAS any more), and the only way I could get my head around that was the profile-analysis way. I’m not even sure there was software for doing mixed models in those days, certainly not in SAS. Besides, random effects scared me back then. Anyway, when I moved things over to R, I realized I was using basically the same idea in R: make it into a MANOVA and tweak things as needed. So I developed things by showing you MANOVA first as ANOVA when you have lots of \(y\)-variables instead of just one, and then using it for repeated measures by thinking of the repeated measures as “different-but-related” \(y\)-variables. To my mind, this is a reasonable way of doing it in a course like this: you get to see MANOVA, which is worth knowing about anyway, and then you get to apply it to a very common situation.

Mixed models are actually quite recent, as a way of doing this kind of modelling. Back when I was learning this stuff, you learned to distinguish between “fixed effects”, whose levels were the only ones you cared about (like the two locations here), and “random effects”, where the ones you observed were only a sample of the ones you might have seen (like “children” or in general “subjects”). Then you would test the fixed effects by hypothesizing that their mean effect was zero and seeing whether you could reject that (in the same way that you do in regression or regular ANOVA: in the latter case, you test that the effects of all the different groups are zero, which comes down to saying that all the groups have the same mean). The way you handled random effects was to estimate their variance, and then you would test whether a random effect exists or not by testing whether the variance of that random effect is zero (does not exist) or is greater than zero. Zero is the smallest a variance (or SD) can be, which means that testing for it has always been kind of flakey and the standard theory doesn’t work for it (the technical term is “on the boundary of the parameter space”, and the theory applies when the null-hypothesis value is strictly inside the set of possible values the parameter can take).

Back when we did this stuff by hand,17 we had to figure out whether we were testing a fixed or a random effect, and there were rules, involving things called “expected mean squares”, that told you how to get the tests right. Anyway, that is all considered rather old-fashioned now, and mixed models are where it is at. In these, you typically only test the fixed effects, while estimating the size of the random effects, taking it for granted that they exist. This is an active area of research; the things that lmer fits are called “linear mixed models”, and there are also now “generalized linear mixed models”, things like logistic regression with random effects.

We haven’t had to worry about this up to now because in most of the experimental designs we have used, each subject only contributes one measurement. In that case, you cannot separate out a subject-specific “random effect” from random error, and so we lump it all into random error. The one experimental design where a subject gives us more than one measurement is matched pairs, and the way we have handled that so far is to turn the two measurements into one by taking the difference between them; even in that case, each subject contributes only one difference to the analysis. Repeated measures, though, is genuinely different: you can’t wish away multiple measurements on the same subject by taking differences any more, so you have to face up to the issue at last. Either we think of it as several different measurements that might be correlated (the profile-analysis MANOVA way), or we face up to the idea that different subjects bring their own random effect to the table that shows up in every measurement that the subject provides (mixed models).

I did analysis of covariance as a separate mini-section, thinking of it as a regression, but if the numerical covariate is the same thing as the response but measured at a different time (eg., before rather than after), that would also respond to a repeated-measures approach, or to the taking of differences as matched pairs does. There is almost always more than one way to look at these things.

\(\blacksquare\)

34.10 Body fat as repeated measures

This one is also stolen from STAC32. Athletes are concerned with measuring their body fat percentage. Two different methods are available: one using ultrasound, and the other using X-ray technology. We are interested in whether there is a difference in the mean body fat percentage as measured by these two methods, and if so, how big that difference is. Data on 16 athletes are at link.

- Read in the data and check that you have a sensible number of rows and columns.

Solution

This kind of thing:

my_url <- "http://ritsokiguess.site/datafiles/bodyfat.txt"

bodyfat <- read_delim(my_url, " ")Rows: 16 Columns: 3

── Column specification ────────────────────────────────────────────────────────

Delimiter: " "

dbl (3): athlete, xray, ultrasound

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.bodyfat16 rows (athletes) and 3 columns, one for each measurement method and one labelling the athletes. All good.

\(\blacksquare\)

- Carry out a suitable (matched-pairs) \(t\)-test to determine whether the means are the same or different.

Solution

Feed the two columns into t.test along with paired=T. Remember that this is (in effect) a one-sample \(t\)-test, so that you can’t use a data=. You therefore have to wrap everything in a with:

with(bodyfat, t.test(xray, ultrasound, paired = TRUE))

Paired t-test

data: xray and ultrasound

t = -0.30801, df = 15, p-value = 0.7623

alternative hypothesis: true mean difference is not equal to 0

95 percent confidence interval:

-0.7425068 0.5550068

sample estimates:

mean difference

-0.09375 The test we want is two-sided, so we didn’t have to take any special steps to get that.

\(\blacksquare\)

- What do you conclude from the test?

Solution

The P-value of 0.7623 is not at all small, so there is no way we can reject the null hypothesis.18 There is no evidence of a difference in means; we can act as if the two methods produce the same mean body fat percentage. That is to say, on this evidence we can use either method, whichever one is cheaper or more convenient.

\(\blacksquare\)

- Run a repeated-measures analysis of variance, treating the two methods of measuring body fat as the repeated measures (ie., playing the role of “time” that you have seen in most of the other repeated measures analyses). There is no “treatment” here, so there is nothing to go on the right side of the squiggle. Insert a

1there to mean “just an intercept”. Display the results.

Solution

Construct the response variable, run lm, construct the within-subjects part of the design, run Manova:

bodyfat %>%

select(xray:ultrasound) %>%

as.matrix() -> response

bodyfat.1 <- lm(response ~ 1)

methods <- colnames(response)

methods.df <- data.frame(methods=factor(methods))

bodyfat.2 <- Manova(bodyfat.1, idata = methods.df, idesign = ~methods)Note: model has only an intercept; equivalent type-III tests substituted.summary(bodyfat.2)

Type III Repeated Measures MANOVA Tests:

------------------------------------------

Term: (Intercept)

Response transformation matrix:

(Intercept)

xray 1

ultrasound 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 9025

Multivariate Tests: (Intercept)

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.797341 59.01586 1 15 1.4135e-06 ***

Wilks 1 0.202659 59.01586 1 15 1.4135e-06 ***

Hotelling-Lawley 1 3.934390 59.01586 1 15 1.4135e-06 ***

Roy 1 3.934390 59.01586 1 15 1.4135e-06 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: methods

Response transformation matrix:

methods1

xray -1

ultrasound 1

Sum of squares and products for the hypothesis:

methods1

methods1 0.140625

Multivariate Tests: methods

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.0062849 0.09486999 1 15 0.76231

Wilks 1 0.9937151 0.09486999 1 15 0.76231

Hotelling-Lawley 1 0.0063247 0.09486999 1 15 0.76231

Roy 1 0.0063247 0.09486999 1 15 0.76231

Univariate Type III Repeated-Measures ANOVA Assuming Sphericity

Sum Sq num Df Error SS den Df F value Pr(>F)

(Intercept) 4512.5 1 1146.94 15 59.0159 1.413e-06 ***

methods 0.1 1 11.12 15 0.0949 0.7623

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This is an unusual one in that the repeated measures variable is not time but method, and there is no actual treatment. The consequence (with there being only two methods) is that sphericity is automatically good, and the univariate test for methods (at the bottom) has exactly the same P-value as all the multivariate tests, so that the conclusion is the same either way. (Also, see below about the matched pairs.)

\(\blacksquare\)

- Compare your repeated-measures analysis to your matched-pairs one. Do you draw the same conclusions?

Solution

The P-value for methods, which is testing the same thing as the matched pairs, is 0.7623, which is actually identical to the matched pairs \(t\)-test, and so the conclusion is identical also. That is, there is no difference between the two methods for measuring body fat. This goes to show that repeated measures gives the same answer as a matched-pairs \(t\)-test in the situation where they both apply. But repeated measures is, as we have seen, a lot more general.

Since this really is repeated measures, we ought to be able to use a mixed model here too. We need “long” or “tidy” format, which we don’t have yet. One pipe to save them all, to paraphrase Lord of the Rings19 — put all the fat measurements in one column with a label saying which method they were obtained with; create a column which is the athlete number as a factor; fit the linear mixed model; see what we can drop from it:

bodyfatbodyfat %>%

pivot_longer(-athlete, names_to="method", values_to="fat") %>%

mutate(fathlete = factor(athlete)) %>%

lmer(fat ~ method + (1 | fathlete), data = .) %>%

drop1(test = "Chisq")Once again, there is no difference between methods, and though the P-value is different from the matched pairs or profile analysis, it is very close to those.

If you’re not clear about the tidy data frame used for input to lmer, pull the top two lines off the pipeline and see what they produce:

bodyfat %>%

pivot_longer(-athlete, names_to="method", values_to="fat") %>%

mutate(fathlete = factor(athlete))Each athlete now appears twice: once with their fat measured by xray, and again with it measured by ultrasound. The column fathlete is a factor.

The mixed model took me two goes to get right: I forgot that I needed the data=. in lmer, because it works like lm with the model formula first, not the input data. If the pipeline is going too fast for you, create the tidy data frame and save it, then use the saved data frame as input to lmer.

\(\blacksquare\)

34.11 Investigating motor activity in rats

A researcher named King was investigating the effect of the drug midazolam on motor activity in rats. Typically, the first time the drug is injected, a rat’s motor activity decreases substantially, but rats typically develop a “tolerance”, so that further injections of the drug have less impact on the rat’s motor activity.

The data shown in link were all taken in one day, called the “experiment day” below. 24 different rats were used. Each rat, on the experiment day, was injected with a fixed amount of midazolam, and at each of six five-minute intervals after being injected, the rat’s motor activity was measured (these are labelled i1 through i6 in the data). The rats differed in how they had been treated before the experiment day. The control group of rats had previously been injected repeatedly with a saline solution (no active ingredient), so the experiment day was the first time this group of rats had received midazolam. The other two groups of rats had both received midazolam repeatedly before the experiment day: the “same” group was injected on experiment day in the same environment that the previous injections had taken place (this is known in psychology as a “conditioned tolerance”), but the “different” group had the previous injections in a different environment than on experiment day.

The column id identifies the rat from which each sequence of values was obtained.

- Explain briefly why we need to use a repeated measures analysis for these data.

Solution

Each rat is measured at six different times (i1 through i6): that is to say, each row of the data set consists of repeated measurements on the same rat. (If each row had used six different rats to obtain the six measurements, we would have been back in familiar territory and could have used a regular analysis of variance.)

\(\blacksquare\)

- Read in the data and note that you have what was promised in the question.

Solution

The usual:

my_url <- "http://ritsokiguess.site/datafiles/king.csv"

king <- read_csv(my_url)Rows: 24 Columns: 8

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

chr (1): context

dbl (7): id, i1, i2, i3, i4, i5, i6

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.kingThere are 24 rats (rows). The columns label the rat (id) and the times at which motor activity was measured (i1 through i6). The remaining column, context, describes how the rats were treated before experiment day, with the levels being the same ones given in the question.

\(\blacksquare\)

- We are going to do a repeated-measures analysis using the “profile” method shown in class. Create a suitable response variable for this method.

Solution

cbind the appropriate columns together, to make a matrix:

response <- with(king, cbind(i1, i2, i3, i4, i5, i6))This is the “simple” but tedious way, and produces a matrix because the i1 through i6 are vectors (single columns):

class(response)[1] "matrix" "array" i1:i6 does not work here, because we are outside of the tidyverse, and in that world, : only means “through” (as in “this through that”) when the things on either side of it are or represent numbers.

The clever way to get the response is to select the columns and then turn them into a matrix. This does permit the colon because we are now in the tidyverse:

response <- king %>%

select(i1:i6) %>%

as.matrix()

class(response)[1] "matrix" "array" It is indeed a matrix.

I tried to be extra-clever and use starts_with, but I have another column id that starts with i that I do not want to be part of the response. So I had to abandon that idea, but not before trying this:

response <- king %>%

select(matches("i[0-9]")) %>%

as.matrix()

head(response) i1 i2 i3 i4 i5 i6

[1,] 150 44 71 59 132 74

[2,] 335 270 156 160 118 230

[3,] 149 52 91 115 43 154

[4,] 159 31 127 212 71 224

[5,] 292 125 184 246 225 170

[6,] 297 187 66 96 209 74head displays the first six lines (of anything). We don’t normally need it because we are typically dealing with tibble-like data frames that display only ten rows of themselves by default. But this worked. The matches part takes a so-called “regular expression” which is a very flexible way of matching anything: in this case, a column whose name starts with i followed by exactly one digit (something between 0 and 9 inclusive).

\(\blacksquare\)

- Set up the “within-subjects” part of the analysis. That means getting hold of the names of the columns that hold the different times, saving them, and also making a data frame out of them:

Solution

times <- colnames(response)

times.df <- data.frame(times=factor(times))\(\blacksquare\)

- Fit the repeated-measures ANOVA. This will involve fitting an

lmfirst, if you have not already done so.

Solution

Fit the lm first, and then pass that into Manova from car:

king.1 <- lm(response ~ context, data = king)

king.2 <- Manova(king.1, idata = times.df, idesign = ~times)

summary(king.2)

Type II Repeated Measures MANOVA Tests:

------------------------------------------

Term: (Intercept)

Response transformation matrix:

(Intercept)

i1 1

i2 1

i3 1

i4 1

i5 1

i6 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 24399650

Multivariate Tests: (Intercept)

Df test stat approx F num Df den Df Pr(>F)

Pillai 1 0.913261 221.1069 1 21 1.273e-12 ***

Wilks 1 0.086739 221.1069 1 21 1.273e-12 ***

Hotelling-Lawley 1 10.528902 221.1069 1 21 1.273e-12 ***

Roy 1 10.528902 221.1069 1 21 1.273e-12 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: context

Response transformation matrix:

(Intercept)

i1 1

i2 1

i3 1

i4 1

i5 1

i6 1

Sum of squares and products for the hypothesis:

(Intercept)

(Intercept) 1611524

Multivariate Tests: context

Df test stat approx F num Df den Df Pr(>F)

Pillai 2 0.4101695 7.301725 2 21 0.0039141 **

Wilks 2 0.5898305 7.301725 2 21 0.0039141 **

Hotelling-Lawley 2 0.6954024 7.301725 2 21 0.0039141 **

Roy 2 0.6954024 7.301725 2 21 0.0039141 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------

Term: times

Response transformation matrix:

times1 times2 times3 times4 times5

i1 1 0 0 0 0

i2 0 1 0 0 0

i3 0 0 1 0 0

i4 0 0 0 1 0

i5 0 0 0 0 1

i6 -1 -1 -1 -1 -1

Sum of squares and products for the hypothesis:

times1 times2 times3 times4 times5

times1 463982.0417 18075.41667 -17241.16667 -47691.2917 973.291667

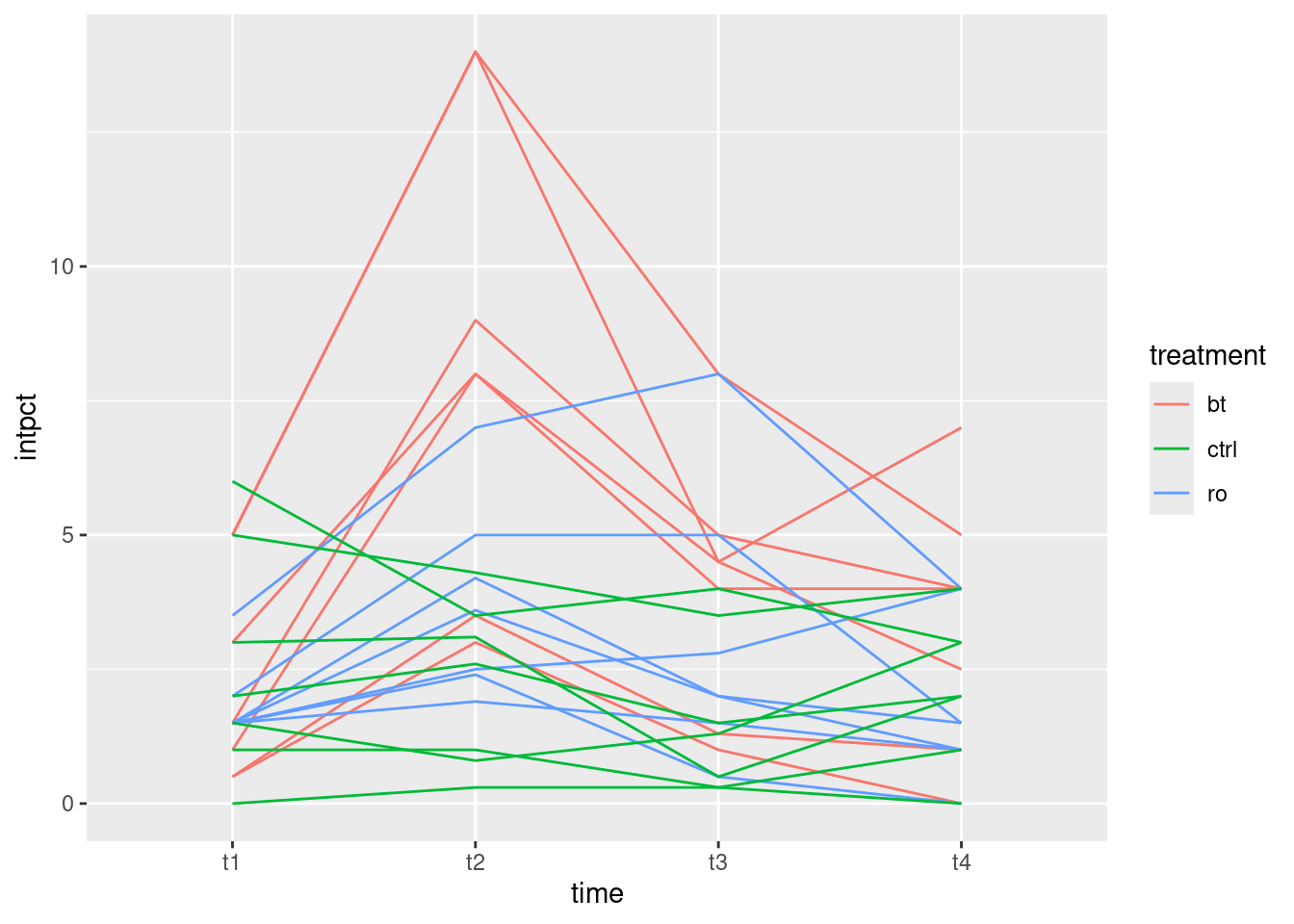

times2 18075.4167 704.16667 -671.66667 -1857.9167 37.916667